scikit-learn : Unsupervised Learning - Clustering

"Clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense or another) to each other than to those in other groups (clusters). It is a main task of exploratory data mining, and a common technique for statistical data analysis, used in many fields, including machine learning, pattern recognition, image analysis, information retrieval, and bioinformatics." - wiki : Cluster analysis

Clustering performs the task of gathering samples into groups of similar samples according to some predefined similarity or dissimilarity measure (such as the Euclidean distance).

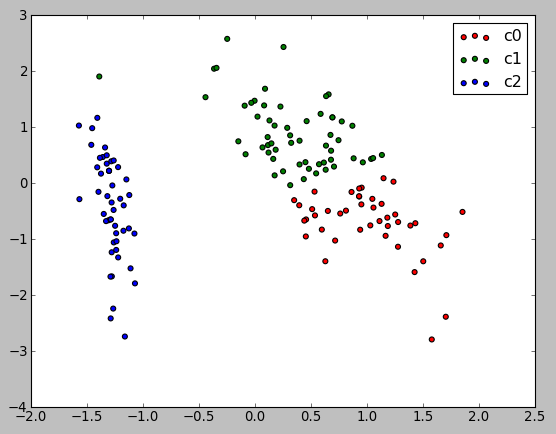

In this section, we'll use KMeans algorithm which is one of the simplest clustering algorithms. We will reuse the output of the 2D PCA of the iris dataset from the previous chapter (scikit-learn : PCA dimensionality reduction with iris dataset) and try to find 3 groups of samples:

>>> from sklearn.datasets import load_iris

>>> iris = load_iris()

>>> X = iris.data

>>> y = iris.target

>>> from sklearn.decomposition import PCA

>>> pca = PCA(n_components=2, whiten=True).fit(X)

>>> X_pca = pca.transform(X)

>>> from sklearn.cluster import KMeans

>>> from numpy.random import RandomState

>>> rng = RandomState(42)

>>> kmeans = KMeans(n_clusters=3, random_state=rng).fit(X_pca)

>>> import numpy as np

>>> np.round(kmeans.cluster_centers_, decimals=2)

array([[ 1.02, -0.71],

[ 0.33, 0.89],

[-1.29, -0.44]])

>>> kmeans.labels_[:10]

array([2, 2, 2, 2, 2, 2, 2, 2, 2, 2], dtype=int32)

>>> kmeans.labels_[-10:]

array([0, 0, 1, 0, 0, 0, 1, 0, 0, 1], dtype=int32)

The code (clustering.py)looks like this:

from sklearn.datasets import load_iris

from itertools import cycle

from sklearn.decomposition import PCA

from sklearn.cluster import KMeans

from numpy.random import RandomState

import pylab as pl

class clustering:

def __init__(self):

self.plot(load_iris().data)

def plot(self, X):

pca = PCA(n_components=2, whiten=True).fit(X)

X_pca = pca.transform(X)

kmeans = KMeans(n_clusters=3, random_state=RandomState(42)).fit(X_pca)

plot_2D(X_pca, kmeans.labels_, ["c0", "c1", "c2"])

def plot_2D(data, target, target_names):

colors = cycle('rgbcmykw')

target_ids = range(len(target_names))

pl.figure()

for i, c, label in zip(target_ids, colors, target_names):

pl.scatter(data[target == i, 0], data[target == i, 1],

c=c, label=label)

pl.legend()

pl.show()

if __name__ == '__main__':

c = clustering()

The code draws the picture below, "KMeans cluster assignements on 2D PCA iris data":

Machine Learning with scikit-learn

scikit-learn installation

scikit-learn : Features and feature extraction - iris dataset

scikit-learn : Machine Learning Quick Preview

scikit-learn : Data Preprocessing I - Missing / Categorical data

scikit-learn : Data Preprocessing II - Partitioning a dataset / Feature scaling / Feature Selection / Regularization

scikit-learn : Data Preprocessing III - Dimensionality reduction vis Sequential feature selection / Assessing feature importance via random forests

Data Compression via Dimensionality Reduction I - Principal component analysis (PCA)

scikit-learn : Data Compression via Dimensionality Reduction II - Linear Discriminant Analysis (LDA)

scikit-learn : Data Compression via Dimensionality Reduction III - Nonlinear mappings via kernel principal component (KPCA) analysis

scikit-learn : Logistic Regression, Overfitting & regularization

scikit-learn : Supervised Learning & Unsupervised Learning - e.g. Unsupervised PCA dimensionality reduction with iris dataset

scikit-learn : Unsupervised_Learning - KMeans clustering with iris dataset

scikit-learn : Linearly Separable Data - Linear Model & (Gaussian) radial basis function kernel (RBF kernel)

scikit-learn : Decision Tree Learning I - Entropy, Gini, and Information Gain

scikit-learn : Decision Tree Learning II - Constructing the Decision Tree

scikit-learn : Random Decision Forests Classification

scikit-learn : Support Vector Machines (SVM)

scikit-learn : Support Vector Machines (SVM) II

Flask with Embedded Machine Learning I : Serializing with pickle and DB setup

Flask with Embedded Machine Learning II : Basic Flask App

Flask with Embedded Machine Learning III : Embedding Classifier

Flask with Embedded Machine Learning IV : Deploy

Flask with Embedded Machine Learning V : Updating the classifier

scikit-learn : Sample of a spam comment filter using SVM - classifying a good one or a bad one

Machine learning algorithms and concepts

Batch gradient descent algorithmSingle Layer Neural Network - Perceptron model on the Iris dataset using Heaviside step activation function

Batch gradient descent versus stochastic gradient descent

Single Layer Neural Network - Adaptive Linear Neuron using linear (identity) activation function with batch gradient descent method

Single Layer Neural Network : Adaptive Linear Neuron using linear (identity) activation function with stochastic gradient descent (SGD)

Logistic Regression

VC (Vapnik-Chervonenkis) Dimension and Shatter

Bias-variance tradeoff

Maximum Likelihood Estimation (MLE)

Neural Networks with backpropagation for XOR using one hidden layer

minHash

tf-idf weight

Natural Language Processing (NLP): Sentiment Analysis I (IMDb & bag-of-words)

Natural Language Processing (NLP): Sentiment Analysis II (tokenization, stemming, and stop words)

Natural Language Processing (NLP): Sentiment Analysis III (training & cross validation)

Natural Language Processing (NLP): Sentiment Analysis IV (out-of-core)

Locality-Sensitive Hashing (LSH) using Cosine Distance (Cosine Similarity)

Artificial Neural Networks (ANN)

[Note] Sources are available at Github - Jupyter notebook files1. Introduction

2. Forward Propagation

3. Gradient Descent

4. Backpropagation of Errors

5. Checking gradient

6. Training via BFGS

7. Overfitting & Regularization

8. Deep Learning I : Image Recognition (Image uploading)

9. Deep Learning II : Image Recognition (Image classification)

10 - Deep Learning III : Deep Learning III : Theano, TensorFlow, and Keras

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization