Flask with Embedded Machine Learning V : Updating the classifier

Continued from Flask with Embedded Machine Learning IV : Deploy.

Now that our Flask app has been deployed to our remote web server, we can go further and update our model using the feedback data.

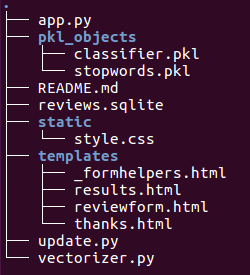

After implementing the code for updating our prediction model, we get the following directory structure:

We may want to update the predictive model from the feedback data that is being collected in the SQLite database.

One computationally inexpensive option is to download the SQLite database from the server, update the clf object locally on our computer, and then upload the new pickle file back to the server.
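If the Flask app is still writing feedback into `reviews.sqlite` while we copy the file, a plain file copy could capture a half-written state. One option, which is not part of the original workflow and assumes Python 3.7+ on the server, is to take a snapshot with the standard library's `sqlite3.Connection.backup()` (SQLite's online backup API) and download the snapshot instead. The helper name `snapshot_db` is hypothetical.

```python
import sqlite3


def snapshot_db(src_path, dst_path):
    """Copy a possibly live SQLite database into dst_path.

    Connection.backup() uses SQLite's online backup API, so the copy
    is a consistent snapshot even if another connection (for example,
    the running Flask app) writes to the source database.
    """
    src = sqlite3.connect(src_path)
    dst = sqlite3.connect(dst_path)  # created if it does not exist
    try:
        with dst:
            src.backup(dst)
    finally:
        dst.close()
        src.close()
```

On the server we could run something like `snapshot_db('reviews.sqlite', 'reviews_snapshot.sqlite')` and then download `reviews_snapshot.sqlite` (a hypothetical file name).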

To update the classifier locally on our computer, we created an update.py file in the movieclassifier directory, and it looks like this:

```python
import pickle
import sqlite3
import numpy as np
import os

# import HashingVectorizer from local dir
from vectorizer import vect


def update_model(db_path, model, batch_size=10000):
    conn = sqlite3.connect(db_path)
    c = conn.cursor()
    c.execute('SELECT * from review_db')

    # Read the feedback table in chunks of batch_size rows
    results = c.fetchmany(batch_size)
    while results:
        data = np.array(results)
        X = data[:, 0]               # review text
        y = data[:, 1].astype(int)   # sentiment label (0 or 1)

        classes = np.array([0, 1])
        X_train = vect.transform(X)
        # Incrementally update the model that was passed in
        model.partial_fit(X_train, y, classes=classes)
        results = c.fetchmany(batch_size)

    conn.close()
    return model


cur_dir = os.path.dirname(__file__)

# Run the update only when this script is executed directly,
# not when app.py imports update_model from this module.
if __name__ == '__main__':
    with open(os.path.join(cur_dir, 'pkl_objects', 'classifier.pkl'), 'rb') as f:
        clf = pickle.load(f)
    db = os.path.join(cur_dir, 'reviews.sqlite')

    clf = update_model(db_path=db, model=clf, batch_size=10000)

    # Uncomment the following lines to update
    # classifier.pkl file permanently.
    # with open(os.path.join(cur_dir, 'pkl_objects', 'classifier.pkl'), 'wb') as f:
    #     pickle.dump(clf, f, protocol=None)
```
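If `classifier.pkl` is overwritten while another process might read it (for example, when the update runs on the server next to the live app), that process could load a partially written file. A common pattern, not used in the original script, is to dump the object to a temporary file in the same directory and swap it in with `os.replace()`, which is an atomic rename on POSIX systems. `save_pickle_atomically` is a hypothetical helper name.

```python
import os
import pickle
import tempfile


def save_pickle_atomically(obj, path, protocol=None):
    """Pickle obj to path without exposing a partially written file.

    The object is written to a temporary file in the same directory
    first, then moved over the destination with os.replace().
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix='.tmp')
    try:
        with os.fdopen(fd, 'wb') as fh:
            pickle.dump(obj, fh, protocol=protocol)
        os.replace(tmp_path, path)
    except BaseException:
        # Remove the temporary file if pickling or renaming failed.
        os.remove(tmp_path)
        raise
```

In update.py this would replace the commented `pickle.dump` call, e.g. `save_pickle_atomically(clf, os.path.join(cur_dir, 'pkl_objects', 'classifier.pkl'))`. A running app that already loaded the old classifier keeps using its in-memory copy until it is restarted.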

The update_model() function fetches entries from the SQLite database in batches of 10,000 at a time; the final batch holds whatever entries remain, so a database with fewer than 10,000 entries is read in a single batch.
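To see the batching behavior of the `fetchmany()` loop in isolation, here is a small sketch against a made-up in-memory database. It assumes the `review_db` table has the columns `review`, `sentiment`, and `date`; the helper `batch_sizes` is hypothetical and only records how many rows each batch contains.

```python
import sqlite3


def batch_sizes(conn, batch_size):
    """Return the size of every batch fetchmany() yields for review_db."""
    c = conn.cursor()
    c.execute('SELECT * FROM review_db')
    sizes = []
    results = c.fetchmany(batch_size)
    while results:  # an empty list means all rows have been read
        sizes.append(len(results))
        results = c.fetchmany(batch_size)
    return sizes


# Toy database with 25 made-up rows in the assumed review_db schema
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE review_db (review TEXT, sentiment INTEGER, date TEXT)')
conn.executemany(
    'INSERT INTO review_db VALUES (?, ?, ?)',
    [('review %d' % i, i % 2, '2016-01-01') for i in range(25)],
)
print(batch_sizes(conn, batch_size=10))  # [10, 10, 5]
```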

Alternatively, we could fetch one entry at a time with fetchone() instead of fetchmany(), but that would be computationally very inefficient, since every row would need its own fetch call (and, in this loop, its own vectorization and partial_fit() step). We could also use fetchall(), but it loads every entry into memory at once, which could be a problem with large datasets that exceed the memory capacity of our computer or server.
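The difference between `fetchone()` and `fetchmany()` can be made concrete by counting how many fetch calls each needs to read the same table. This sketch uses a made-up in-memory `review_db` with 25 rows; `count_fetch_calls` is a hypothetical helper. `fetchall()` would need a single call but would hold all rows in memory at once, whereas `fetchmany()` never holds more than `batch_size` rows per call.

```python
import sqlite3


def count_fetch_calls(conn, method, batch_size=10):
    """Return (number of fetch calls, number of rows read) for review_db."""
    c = conn.cursor()
    c.execute('SELECT * FROM review_db')
    calls = rows = 0
    while True:
        calls += 1
        if method == 'fetchone':
            row = c.fetchone()  # None once the result set is exhausted
            if row is None:
                break
            rows += 1
        else:
            batch = c.fetchmany(batch_size)  # [] once exhausted
            if not batch:
                break
            rows += len(batch)
    return calls, rows


conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE review_db (review TEXT, sentiment INTEGER, date TEXT)')
conn.executemany(
    'INSERT INTO review_db VALUES (?, ?, ?)',
    [('review %d' % i, i % 2, '2016-01-01') for i in range(25)],
)
print(count_fetch_calls(conn, 'fetchone'))   # (26, 25)
print(count_fetch_calls(conn, 'fetchmany'))  # (4, 25)
```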

Now that we have created the update.py script, we could also upload it to the movieclassifier directory on our server and import the update_model function in the main application script, app.py, so that the classifier is updated from the SQLite database every time we restart the web application. To do this, we import update_model from update.py at the top of app.py and call it just before app.run() in the main block:

```python
# import update function from local dir
from update import update_model

...

if __name__ == '__main__':
    update_model(db_path=db, model=clf, batch_size=10000)
    app.run(debug=False, host='0.0.0.0', port=5077)
```

Source is available from ahaman-Flask-with-Machine-Learning-Sentiment-Analysis

Python Machine Learning, Sebastian Raschka

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization