Artificial Neural Network (ANN) - Introduction

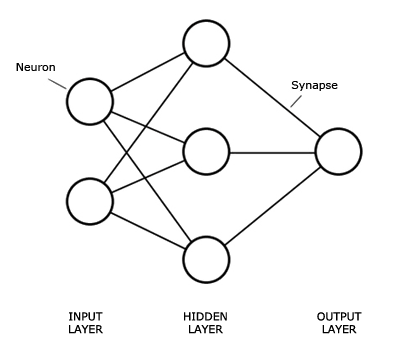

An Artificial Neural Network (ANN) is an interconnected group of nodes, similar to the network of neurons in our brain.

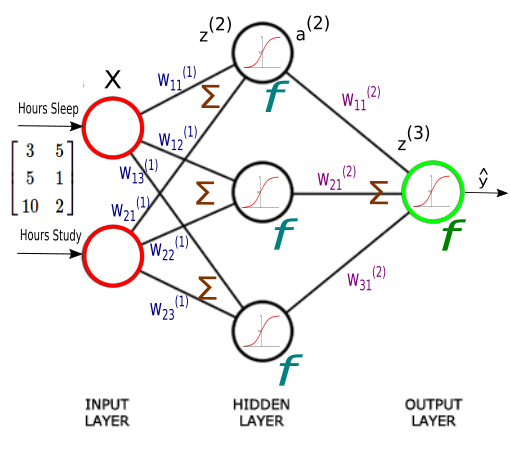

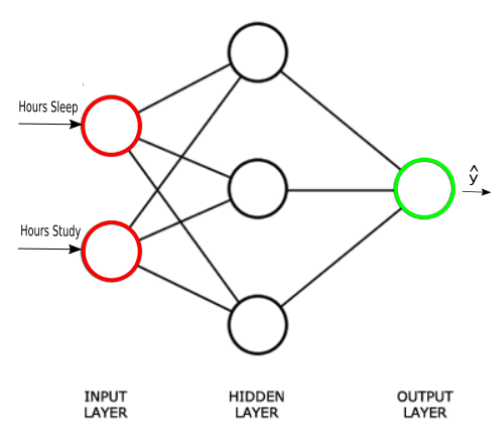

Here, we have three layers. Each circular node represents a neuron, and each line represents a connection from the output of one neuron to the input of another.

The first layer has input neurons which send data via synapses to the second layer of neurons, and then via more synapses to the third layer of output neurons.

This tutorial uses IPython's Jupyter notebook.

If you don't have it, please visit iPython and Jupyter - Install Jupyter, iPython Notebook, drawing with Matplotlib, and publishing it to Github.

This tutorial consists of 7 parts, and each part has its own notebook. Please check out the Jupyter notebook files at Github.

We'll follow Neural Networks Demystified throughout this series of articles.

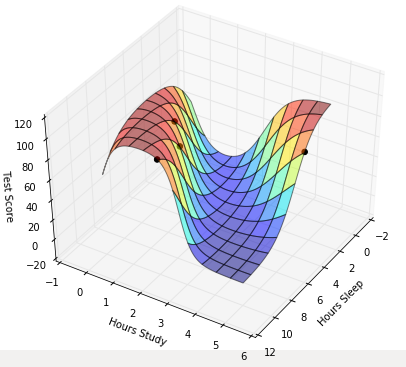

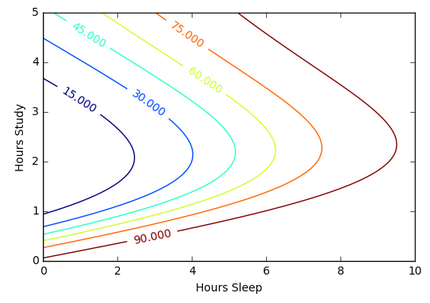

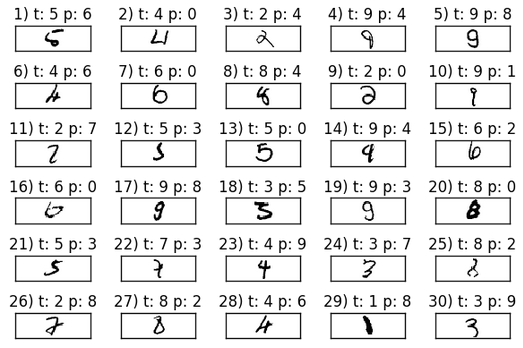

Suppose we want to predict our test score based on how many hours we sleep and how many hours we study the night before.

In other words, we want to predict the output value $y$ (the test score) for a given set of input values $X$ (hours of sleep and hours of study).

| $X$ (hours of sleep, hours of study) | $y$ (test score) |
|---|---|
| (3,5) | 75 |
| (5,1) | 82 |
| (10,2) | 93 |
| (8,3) | ? |

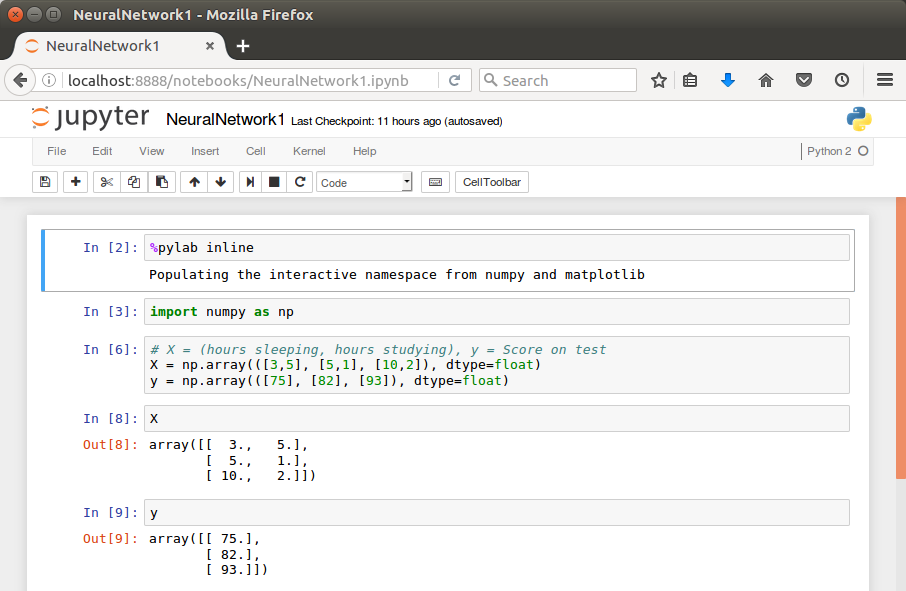

In our machine learning approach, we'll use Python to store our data in 2-dimensional NumPy arrays.
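As a minimal sketch, the three known examples from the table could be stored as shown here. The variable names `X` and `y` follow the symbols used in the text; keeping `y` as a column (one row per example) is an assumption made so that both arrays stay 2-dimensional.

```python
import numpy as np

# Each row is one training example: (hours of sleep, hours of study).
X = np.array([[3, 5],
              [5, 1],
              [10, 2]], dtype=float)

# One test score per example, stored as a column so the array is 2-dimensional.
y = np.array([[75],
              [82],
              [93]], dtype=float)

print(X.shape)  # (3, 2)
print(y.shape)  # (3, 1)
```

The row with the unknown score, (8,3), is left out because it is the case we want to predict rather than a training example.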

We'll use the data to train a model to predict how we will do on our next test.

This is a supervised regression problem.

It's supervised because our examples have outputs ($y$).

It's a regression problem because we're predicting the test score, which is a continuous output.

If we were predicting the letter grade (A, B, etc.) instead, this would be a classification problem rather than a regression problem.

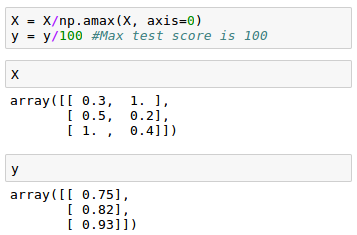

We may want to scale our data so that every input and output value lies in [0,1].
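One simple way to do this, sketched here under stated assumptions, is to divide each input column by its largest value and to divide the scores by the maximum possible score. The maximum score of 100 is an assumption; the text does not state it. The names `X_scaled` and `y_scaled` are chosen only for illustration.

```python
import numpy as np

X = np.array([[3, 5], [5, 1], [10, 2]], dtype=float)
y = np.array([[75], [82], [93]], dtype=float)

# Divide each input column by its own maximum so every feature lies in [0, 1].
X_scaled = X / X.max(axis=0)

# Assumption: test scores are out of 100.
y_scaled = y / 100.0

print(X_scaled)
print(y_scaled)
```

With this choice, the first example (3,5) becomes (0.3, 1.0) and its score 75 becomes 0.75.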

Now we can start building our Neural Network.

We know our network must have 2 inputs ($X$) and 1 output ($y$).

We'll call our output $\hat y$, because it's an estimate of $y$.

Any layer between our input and output layer is called a hidden layer. Here, we're going to use just one hidden layer with 3 neurons.

As explained in the earlier section, circles represent neurons and lines represent synapses.
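The layout described above can be written down as three numbers. The sketch below uses hypothetical variable names and assumes that every neuron in one layer connects to every neuron in the next layer, which gives the number of synapses between layers.

```python
# Layer sizes taken from the text: 2 inputs, 3 hidden neurons, 1 output.
input_layer_size = 2
hidden_layer_size = 3
output_layer_size = 1

# Assumption: layers are fully connected, so the synapse count between two
# layers is the product of their sizes.
synapses_input_to_hidden = input_layer_size * hidden_layer_size
synapses_hidden_to_output = hidden_layer_size * output_layer_size

print(synapses_input_to_hidden)   # 6
print(synapses_hidden_to_output)  # 3
```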

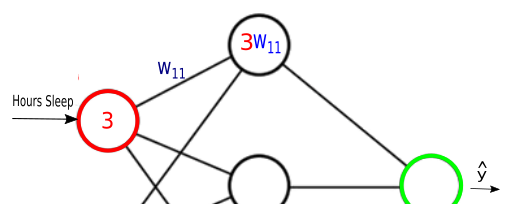

Synapses have a really simple job.

They take a value from their input, multiply it by a specific weight, and output the result. In other words, the synapses store parameters called "weights" which are used to manipulate the data.
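To make this concrete, here is a small sketch of the synapses between the input layer and the hidden layer for a single example. The weight values in `W1` are made up for illustration, and the input `x` assumes the example (3,5) has been scaled to (0.3, 1.0). Each entry of `W1` is the weight of one synapse: row `i` is the input neuron it starts from, column `j` is the hidden neuron it feeds.

```python
import numpy as np

# One scaled example (assumed): hours of sleep and hours of study.
x = np.array([0.3, 1.0])

# Hypothetical weights: 2 input neurons x 3 hidden neurons = 6 synapses.
W1 = np.array([[0.1, -0.2, 0.3],
               [0.4, 0.5, -0.6]])

# Each synapse multiplies the value at its input by its own weight.
synapse_outputs = x[:, np.newaxis] * W1

print(synapse_outputs.shape)  # (2, 3): one output per synapse
print(synapse_outputs)
```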

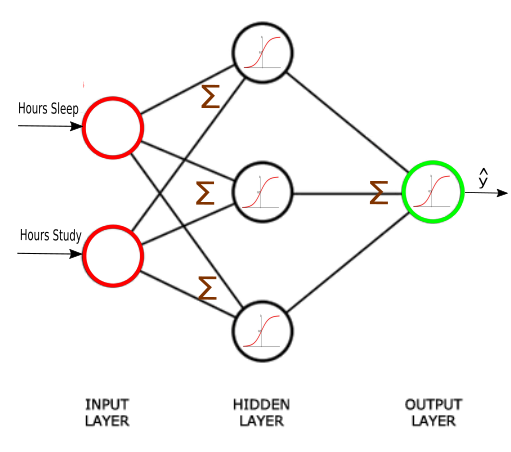

Neurons are a little more complicated.

A neuron's job is to add together the outputs of all the synapses feeding into it, and then apply an activation function.

Certain activation functions allow neural nets to model complex non-linear patterns.

For our neural network, we're going to use sigmoid activation functions.
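The sigmoid function is $\sigma(z) = \frac{1}{1 + e^{-z}}$, which squashes any real number into the range (0, 1). The sketch below shows what the three hidden neurons would do for one example: sum the outputs of their incoming synapses, then apply the sigmoid. The weights in `W1` and the scaled input `x` are hypothetical values chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid activation: maps any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# One scaled example (assumed) and hypothetical synapse weights.
x = np.array([0.3, 1.0])
W1 = np.array([[0.1, -0.2, 0.3],
               [0.4, 0.5, -0.6]])

# Synapses: multiply each input by the weight of its connection.
synapse_outputs = x[:, np.newaxis] * W1

# Neurons: add up the incoming synapse outputs (same result as x @ W1) ...
z = synapse_outputs.sum(axis=0)

# ... and apply the activation function.
a = sigmoid(z)

print(z)
print(a)
```

Summing the weighted inputs for every neuron at once is a matrix product, which is why the sum over synapses matches `x @ W1`.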


Artificial Neural Networks (ANN)

[Note] Sources are available at Github - Jupyter notebook files

1. Introduction

2. Forward Propagation

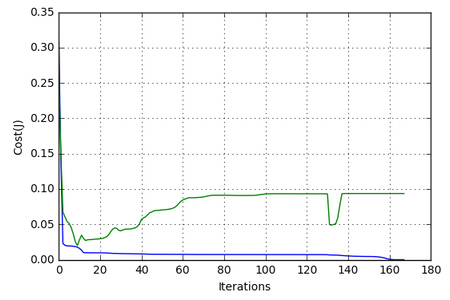

3. Gradient Descent

4. Backpropagation of Errors

5. Checking gradient

6. Training via BFGS

7. Overfitting & Regularization

8. Deep Learning I : Image Recognition (Image uploading)

9. Deep Learning II : Image Recognition (Image classification)

10. Deep Learning III : Theano, TensorFlow, and Keras

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization