Algorithms - Shannon entropy

What is "news"?

"A Dog bites a man" - Not a news.

"A man bits a dog" - It's a news.

Why?

Because it's rare. The probability of a man biting a dog is very low.

Shannon entropy is the average unpredictability. So, when a man bites a dog, the incident's entropy is very high.

For a coin toss, the entropy (unpredictability) is high because we cannot predict the output though it is either face or tail. Suppose we have a coin that has no tail but faces only. In that case, we can safely predict the outcome. In other words, for that unfair coin toss, the entropy (unpredictability) is 0. So, if we get a face for a toss, it cannot be a news because it's been expected (no surprize) and it does not carry any bit of new information. The Shannon entropy is 0 in that case.

Using Shannon entropy formula, the uncertainty $u$ is: $u = -log_2 p$

where $p$ is the probability of an incident. So, for a fair coin toss:

$u = -log_2 \frac{1}{2} = 1$Therefore, a fair coin toss gives a bit of information. Note that the bit here is the same bit used in bits and bytes!

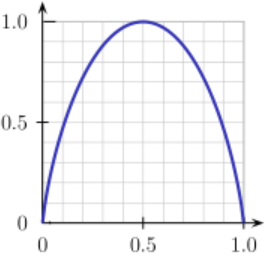

Actually, depending on the fairness of the coin (x-axis), we can plot the entropy (y-axis):

We can see we have the highest entropy (surprizal or unpredictability or a news) value of 1 when we have a fair coin where we have $p = 0.5$, and as the coin toss becomes biased one way or the other we starts to get a non-news incident.

Since I have a separate page regarding this encoding

(Compression Algorithm), I'll briefly summarize what it is about primarily focused on the bit's perspective.

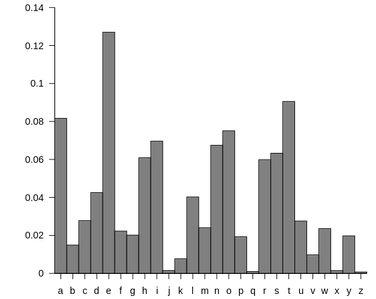

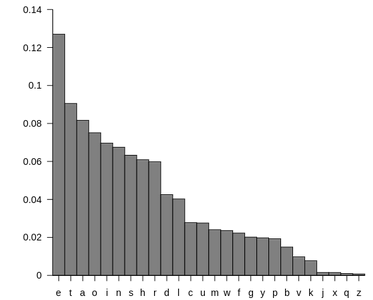

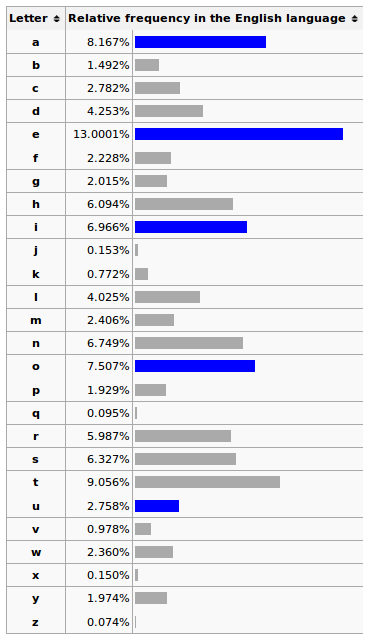

Pictures from wiki

As we can see from the plots and the table, in English, the most frequent ones are 'e', 't',' 'a', 'o', 'i' in that order and 'z' is the least frequent letter. In Huffman encoding, the less bit is used for 'e' than 'z' to compress efficiently. As a result, the letter 'e' carries less information than the 'z'. It's not a news or a surprizal if we see 'e' in English text, but it's a news if we see 'z'. In other words, 'e' has lowest Shannon Entropy while 'z' has the highest Shannon Entropy.

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization