AWS : EKS install

This is just a note for myself, not a guide to EKS. For an authoritative guide, see the official Getting started with Amazon EKS.

We'll install EKS in two ways:

- via EKS console and kubectl

- via eksctl

The commands below use the AWS CLI, kubectl, and eksctl. The installed versions:

$ aws --version
aws-cli/2.1.37 Python/3.8.8 Darwin/17.4.0 exe/x86_64 prompt/off

$ kubectl version --client
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:58:59Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"darwin/amd64"}

$ eksctl version
0.44.0

- Create an Amazon VPC with public and private subnets that meets Amazon EKS requirements:

$ aws cloudformation create-stack \
  --stack-name bogo-eks-vpc-stack \
  --template-url https://s3.us-west-2.amazonaws.com/amazon-eks/cloudformation/2020-10-29/amazon-eks-vpc-private-subnets.yaml
{
    "StackId": "arn:aws:cloudformation:us-east-1:526262051452:stack/bogo-eks-vpc-stack/14566050-9d7d-11eb-a10e-0a9dd5d6e113"
}

- Create a cluster IAM role and attach the required Amazon EKS IAM managed policy to it.

Kubernetes clusters managed by Amazon EKS make calls to other AWS services on our behalf to manage the resources used with the service, so the cluster needs an IAM role that allows this.

Copy the following contents to a file named cluster-role-trust-policy.json:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "eks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
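Instead of pasting into an editor, we could write the same file from the shell with a here-document. This is only a sketch: the function name `write_trust_policy` is made up for this note and is not part of the AWS CLI.

```shell
# Write the EKS cluster trust policy shown above to the path given as $1.
# write_trust_policy is a hypothetical helper name, not an AWS command.
write_trust_policy() {
  cat > "$1" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "eks.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Usage: create the file that the create-role step reads.
# write_trust_policy cluster-role-trust-policy.json
```

The quoted `'EOF'` delimiter keeps the shell from expanding anything inside the JSON.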

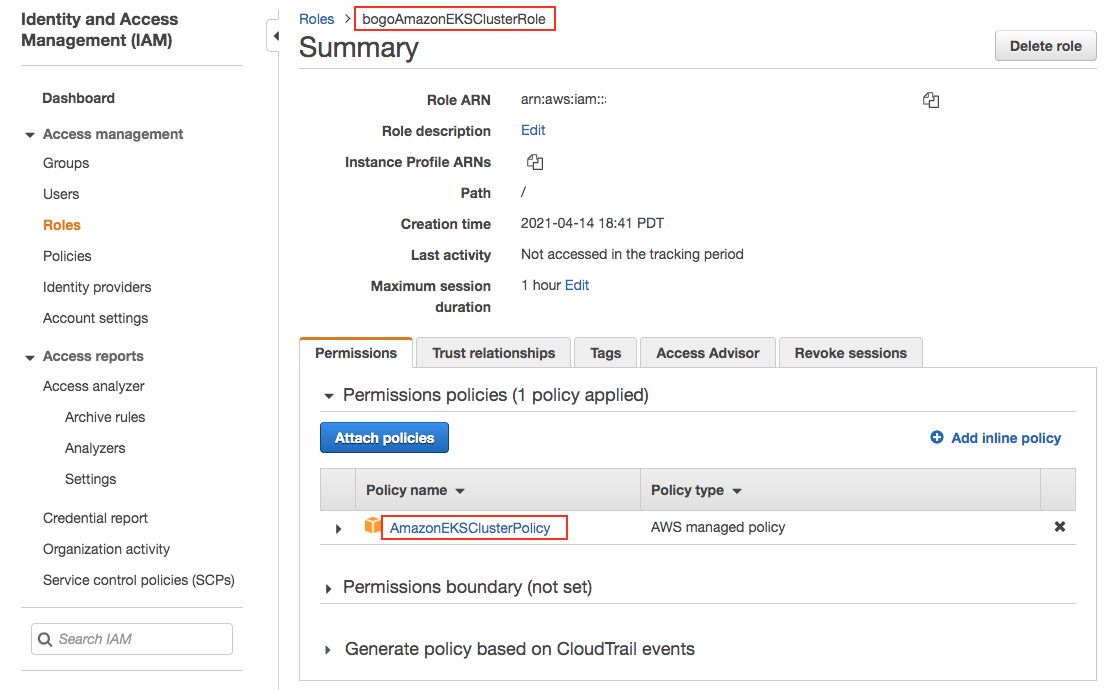

Create the role:

$ aws iam create-role \
  --role-name bogoAmazonEKSClusterRole \
  --assume-role-policy-document file://"cluster-role-trust-policy.json"
{
    "Role": {
        "Path": "/",
        "RoleName": "bogoAmazonEKSClusterRole",
        "RoleId": "AROAXVB5JUJ6JVLH45GWT",
        "Arn": "arn:aws:iam::526262051452:role/bogoAmazonEKSClusterRole",
        "CreateDate": "2021-04-15T01:41:12+00:00",
        "AssumeRolePolicyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Effect": "Allow",
                    "Principal": {
                        "Service": "eks.amazonaws.com"
                    },
                    "Action": "sts:AssumeRole"
                }
            ]
        }
    }
}

Attach the required Amazon EKS managed IAM policy to the role:

$ aws iam attach-role-policy \
  --policy-arn arn:aws:iam::aws:policy/AmazonEKSClusterPolicy \
  --role-name bogoAmazonEKSClusterRole
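`attach-role-policy` prints nothing on success, so a quick check from the CLI can be reassuring. The sketch below wraps `aws iam list-attached-role-policies` in a small function; the name `role_has_policy` is hypothetical and only used here for illustration.

```shell
# Succeed if the role ($1) has the managed policy ARN ($2) attached.
# role_has_policy is a hypothetical helper name.
role_has_policy() {
  aws iam list-attached-role-policies \
    --role-name "$1" \
    --query 'AttachedPolicies[].PolicyArn' \
    --output text | tr '\t' '\n' | grep -qxF "$2"
}

# Usage:
# role_has_policy bogoAmazonEKSClusterRole \
#   arn:aws:iam::aws:policy/AmazonEKSClusterPolicy && echo "attached"
```

Text output puts the ARNs on one tab-separated line, so `tr` splits them and `grep -x` matches one whole ARN.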

Here is the role created:

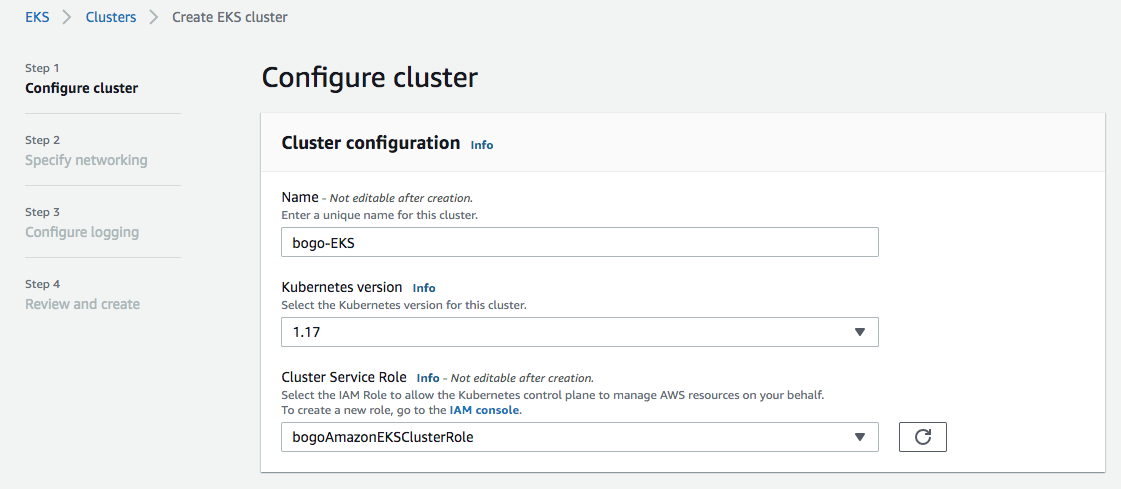

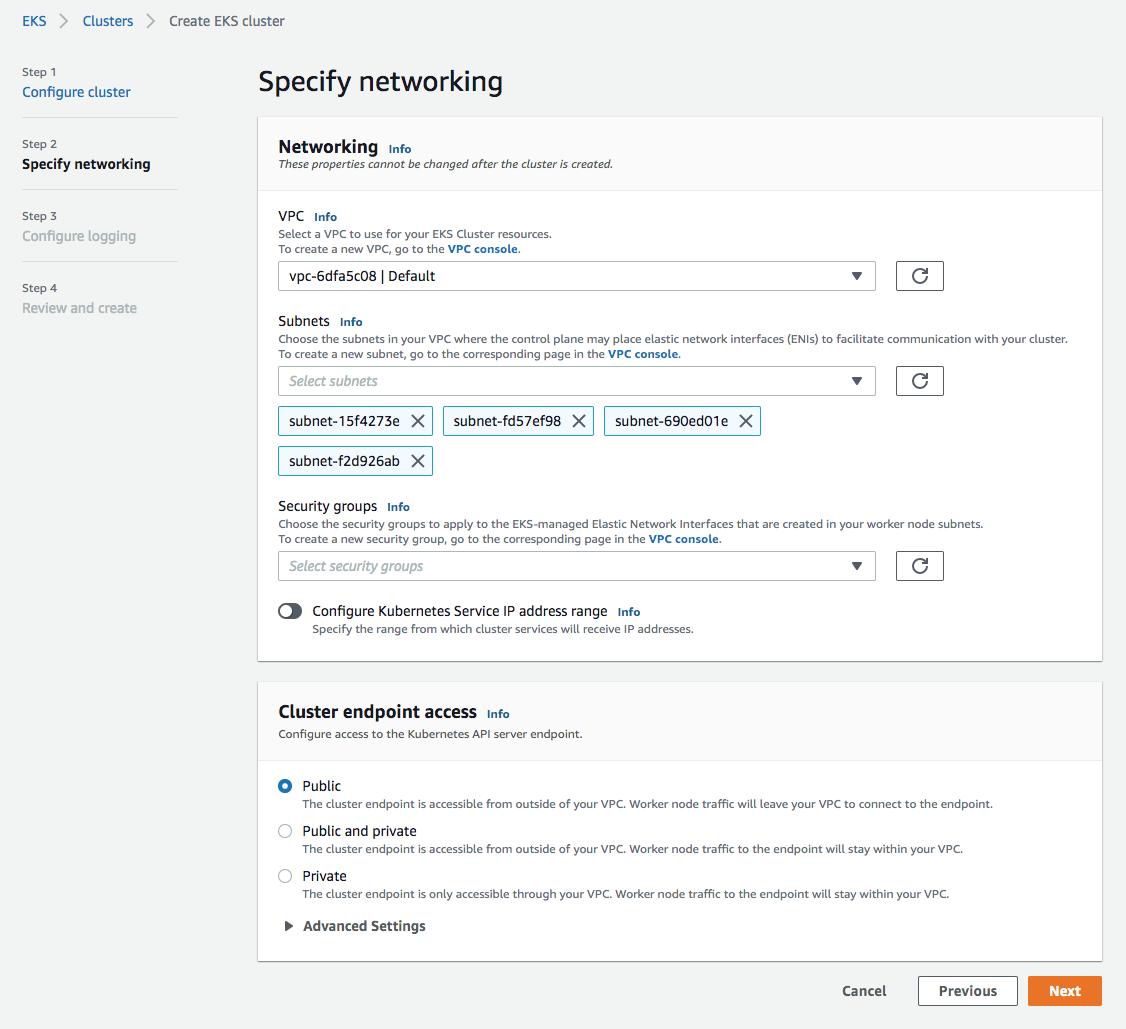

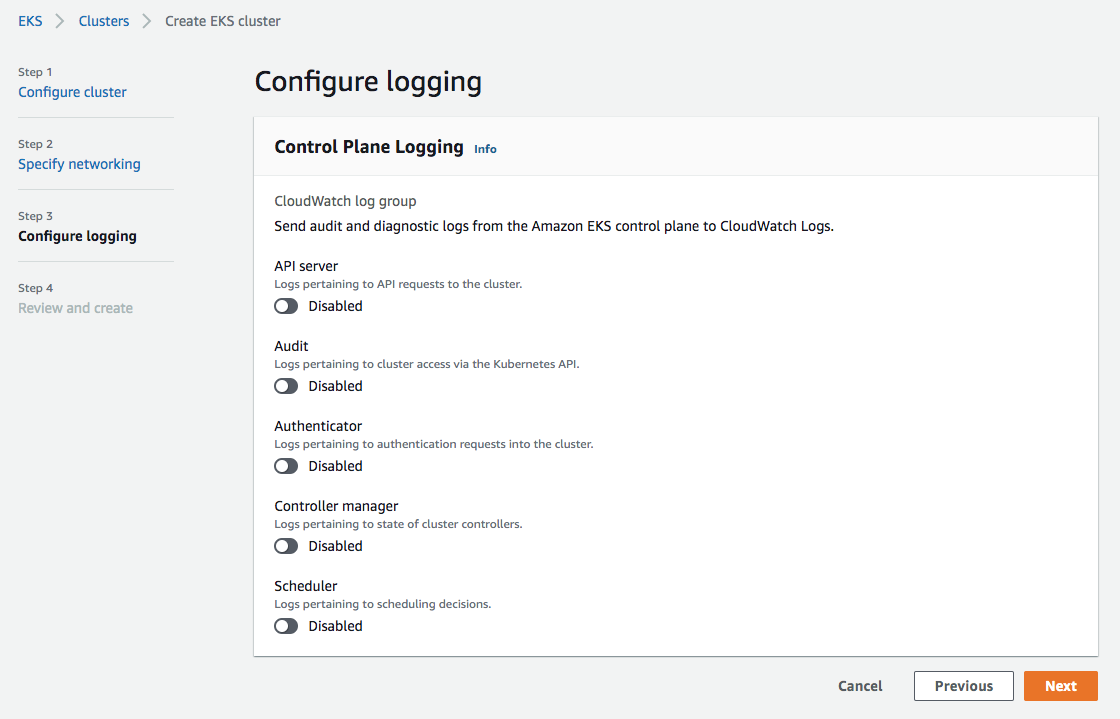

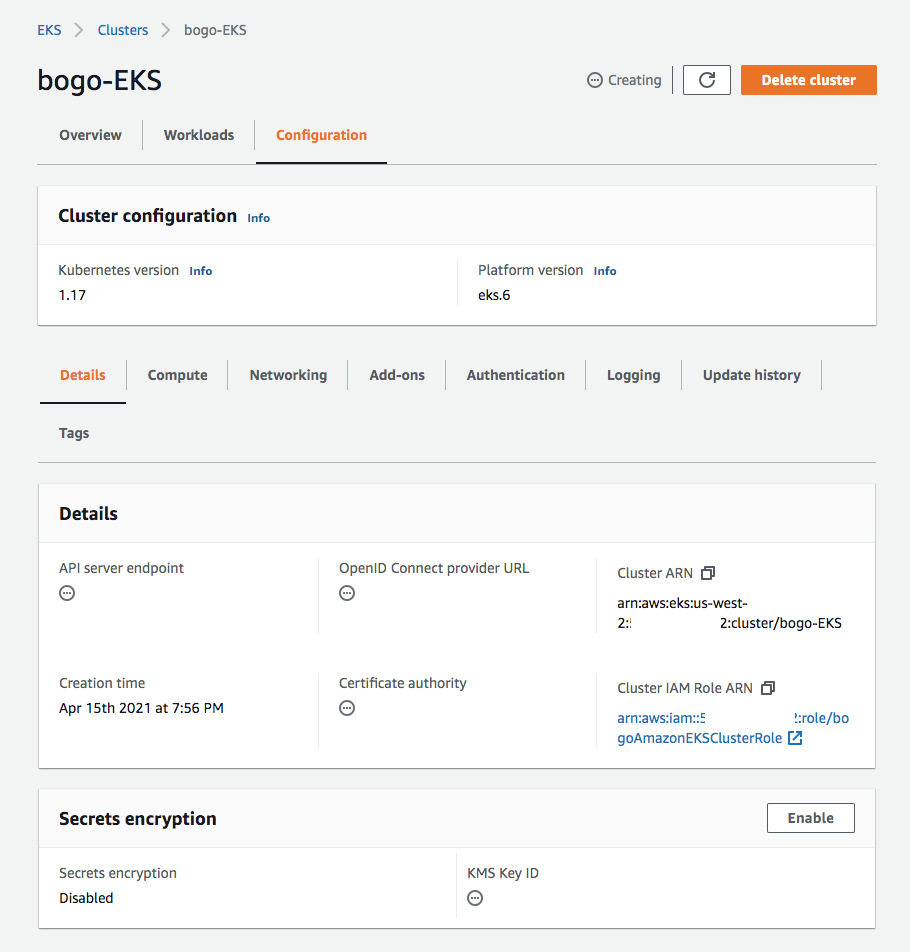

- Now, create an EKS cluster ("bogo-EKS") in us-west-2 (Oregon):

- On the Review and create page, select Create.
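Creating the cluster in the console takes several minutes. Rather than refreshing the page, we could poll the status from the CLI. A minimal sketch, assuming the cluster name and region used above; `cluster_status` is a hypothetical helper name.

```shell
# Print the status of cluster $1 in region $2 (for example CREATING or ACTIVE).
# cluster_status is a hypothetical helper name.
cluster_status() {
  aws eks describe-cluster \
    --name "$1" \
    --region "$2" \
    --query 'cluster.status' \
    --output text
}

# Usage:
# cluster_status bogo-EKS us-west-2
```

The AWS CLI also has a waiter, `aws eks wait cluster-active --name bogo-EKS --region us-west-2`, which blocks until the cluster reports ACTIVE.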

Let's create a kubeconfig file for the cluster. The settings in this file enable the kubectl CLI to communicate with our cluster.

To configure our desktop to communicate with the cluster, run the following:

$ aws eks update-kubeconfig \
  --region us-west-2 \
  --name bogo-EKS
Added new context arn:aws:eks:us-west-2:526262051452:cluster/bogo-EKS to ~/.kube/config
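If the kubeconfig already holds other contexts, it is worth confirming that kubectl now points at the new cluster. A small sketch; `context_points_at` is a hypothetical helper name.

```shell
# Succeed if kubectl's current context contains the cluster name given as $1.
# context_points_at is a hypothetical helper name.
context_points_at() {
  kubectl config current-context | grep -qF "$1"
}

# Usage:
# context_points_at bogo-EKS && echo "kubectl is using bogo-EKS"
```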

Test the configuration by listing the services in all namespaces; the default kubernetes and kube-dns ClusterIP services should be listed:

$ kubectl get svc --all-namespaces
NAMESPACE     NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE
default       kubernetes   ClusterIP   10.100.0.1    <none>        443/TCP         43m
kube-system   kube-dns     ClusterIP   10.100.0.10   <none>        53/UDP,53/TCP   43m

We'll skip creating worker nodes here and leave that to the eksctl command in the next section.

eksctl is a simple CLI tool for creating and managing clusters on EKS - Amazon's managed Kubernetes service for EC2.

It is written in Go, uses CloudFormation, was created by Weaveworks and it welcomes contributions from the community.

We can create a basic cluster with just one command. We could pass the settings as command-line arguments, but here we'll use a YAML config file instead.

The cluster.yaml looks like this:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: bogo-EKS
  region: us-west-2
  version: "1.18"

nodeGroups:
  - name: bogo-ng-1
    instanceType: t2.nano
    desiredCapacity: 2
  - name: bogo-ng-2
    instanceType: t2.nano
    desiredCapacity: 1
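For comparison, the command-line-argument route for the same cluster with only its first node group might look like the sketch below. The wrapper name `create_cluster_with_flags` is hypothetical; the flags are eksctl's own. It assumes one node group per invocation, so bogo-ng-2 would need a separate `eksctl create nodegroup` call, which is one reason the config file is more convenient here.

```shell
# Create a cluster with a single unmanaged node group using flags only.
# Arguments: cluster name, region, Kubernetes version, node group name,
# instance type, node count.
# create_cluster_with_flags is a hypothetical wrapper name.
create_cluster_with_flags() {
  eksctl create cluster \
    --name "$1" \
    --region "$2" \
    --version "$3" \
    --nodegroup-name "$4" \
    --node-type "$5" \
    --nodes "$6" \
    --managed=false
}

# Usage:
# create_cluster_with_flags bogo-EKS us-west-2 1.18 bogo-ng-1 t2.nano 2
```

`--managed=false` is spelled out because the `nodeGroups` key in the config file describes unmanaged node groups.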

$ eksctl create cluster -f cluster.yaml

2021-04-16 11:22:27 [ℹ] eksctl version 0.44.0

2021-04-16 11:22:27 [ℹ] using region us-west-2

2021-04-16 11:22:28 [ℹ] setting availability zones to [us-west-2b us-west-2d us-west-2c]

2021-04-16 11:22:28 [ℹ] subnets for us-west-2b - public:192.168.0.0/19 private:192.168.96.0/19

2021-04-16 11:22:28 [ℹ] subnets for us-west-2d - public:192.168.32.0/19 private:192.168.128.0/19

2021-04-16 11:22:28 [ℹ] subnets for us-west-2c - public:192.168.64.0/19 private:192.168.160.0/19

2021-04-16 11:22:28 [ℹ] nodegroup "bogo-ng-1" will use "ami-0be674eea7877cd6d" [AmazonLinux2/1.18]

2021-04-16 11:22:28 [ℹ] nodegroup "bogo-ng-2" will use "ami-0be674eea7877cd6d" [AmazonLinux2/1.18]

2021-04-16 11:22:28 [ℹ] using Kubernetes version 1.18

2021-04-16 11:22:28 [ℹ] creating EKS cluster "bogo-EKS" in "us-west-2" region with un-managed nodes

2021-04-16 11:22:28 [ℹ] 2 nodegroups (bogo-ng-1, bogo-ng-2) were included (based on the include/exclude rules)

2021-04-16 11:22:28 [ℹ] will create a CloudFormation stack for cluster itself and 2 nodegroup stack(s)

2021-04-16 11:22:28 [ℹ] will create a CloudFormation stack for cluster itself and 0 managed nodegroup stack(s)

2021-04-16 11:22:28 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-west-2 --cluster=bogo-EKS'

2021-04-16 11:22:28 [ℹ] CloudWatch logging will not be enabled for cluster "bogo-EKS" in "us-west-2"

2021-04-16 11:22:28 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=us-west-2 --cluster=bogo-EKS'

2021-04-16 11:22:28 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "bogo-EKS" in "us-west-2"

2021-04-16 11:22:28 [ℹ] 2 sequential tasks: { create cluster control plane "bogo-EKS", 3 sequential sub-tasks: { wait for control plane to become ready, create addons, 2 parallel sub-tasks: { create nodegroup "bogo-ng-1", create nodegroup "bogo-ng-2" } } }

2021-04-16 11:22:28 [ℹ] building cluster stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:22:29 [ℹ] deploying stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:22:59 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:23:31 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:24:31 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:25:31 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:26:32 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:27:32 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:28:32 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:29:33 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:30:33 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:31:33 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:32:34 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:33:34 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:34:34 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-cluster"

2021-04-16 11:34:36 [ℹ] building nodegroup stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:34:36 [ℹ] building nodegroup stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:34:37 [ℹ] --nodes-min=1 was set automatically for nodegroup bogo-ng-2

2021-04-16 11:34:37 [ℹ] --nodes-min=2 was set automatically for nodegroup bogo-ng-1

2021-04-16 11:34:37 [ℹ] --nodes-max=2 was set automatically for nodegroup bogo-ng-1

2021-04-16 11:34:37 [ℹ] --nodes-max=1 was set automatically for nodegroup bogo-ng-2

2021-04-16 11:34:37 [ℹ] deploying stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:34:37 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:34:37 [ℹ] deploying stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:34:37 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:34:54 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:34:55 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:35:13 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:35:14 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:35:32 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:35:32 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:35:50 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:35:52 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:36:07 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:36:10 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:36:22 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:36:28 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:36:28 [!] retryable error (RequestError: send request failed

caused by: Post "https://cloudformation.us-west-2.amazonaws.com/": EOF) from cloudformation/DescribeStacks - will retry after delay of 54.761006ms

2021-04-16 11:36:40 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:36:47 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:36:47 [!] retryable error (RequestError: send request failed

caused by: Post "https://cloudformation.us-west-2.amazonaws.com/": EOF) from cloudformation/DescribeStacks - will retry after delay of 57.562606ms

2021-04-16 11:37:00 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:37:05 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:37:16 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:37:20 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:37:34 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:37:39 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:37:51 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:37:55 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:38:11 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:38:13 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:38:29 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:38:31 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:38:46 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:38:50 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 11:39:05 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 11:39:06 [ℹ] waiting for the control plane availability...

2021-04-16 11:39:06 [✔] saved kubeconfig as "/Users/kihyuckhong/.kube/config"

2021-04-16 11:39:06 [ℹ] no tasks

2021-04-16 11:39:06 [✔] all EKS cluster resources for "bogo-EKS" have been created

2021-04-16 11:39:06 [ℹ] adding identity "arn:aws:iam::526262051452:role/eksctl-bogo-EKS-nodegroup-bogo-ng-NodeInstanceRole-17CCXSCEWY36W" to auth ConfigMap

2021-04-16 11:39:06 [ℹ] nodegroup "bogo-ng-1" has 0 node(s)

2021-04-16 11:39:06 [ℹ] waiting for at least 2 node(s) to become ready in "bogo-ng-1"

2021-04-16 11:39:51 [ℹ] nodegroup "bogo-ng-1" has 2 node(s)

2021-04-16 11:39:51 [ℹ] node "ip-192-168-17-247.us-west-2.compute.internal" is ready

2021-04-16 11:39:51 [ℹ] node "ip-192-168-95-138.us-west-2.compute.internal" is ready

2021-04-16 11:39:51 [ℹ] adding identity "arn:aws:iam::526262051452:role/eksctl-bogo-EKS-nodegroup-bogo-ng-NodeInstanceRole-1JENEM823PK0O" to auth ConfigMap

2021-04-16 11:39:51 [ℹ] nodegroup "bogo-ng-2" has 0 node(s)

2021-04-16 11:39:51 [ℹ] waiting for at least 1 node(s) to become ready in "bogo-ng-2"

2021-04-16 11:40:52 [ℹ] nodegroup "bogo-ng-2" has 1 node(s)

2021-04-16 11:40:52 [ℹ] node "ip-192-168-23-95.us-west-2.compute.internal" is ready

2021-04-16 11:40:54 [ℹ] kubectl command should work with "/Users/kihyuckhong/.kube/config", try 'kubectl get nodes'

2021-04-16 11:40:54 [✔] EKS cluster "bogo-EKS" in "us-west-2" region is ready

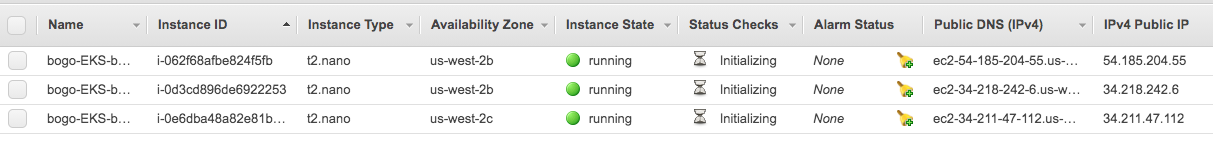

We can see that our cluster and worker nodes have been created:

$ eksctl get cluster --region=us-west-2
2021-04-16 12:00:42 [ℹ] eksctl version 0.44.0
2021-04-16 12:00:42 [ℹ] using region us-west-2
NAME       REGION      EKSCTL CREATED
bogo-EKS   us-west-2   True

$ kubectl get nodes
NAME                                           STATUS   ROLES    AGE     VERSION
ip-192-168-17-247.us-west-2.compute.internal   Ready    <none>   6m42s   v1.18.9-eks-d1db3c
ip-192-168-23-95.us-west-2.compute.internal    Ready    <none>   5m58s   v1.18.9-eks-d1db3c
ip-192-168-95-138.us-west-2.compute.internal   Ready    <none>   6m39s   v1.18.9-eks-d1db3c

$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.100.0.1   <none>        443/TCP   14m

$ kubectl get ns
NAME              STATUS   AGE
default           Active   15m
kube-node-lease   Active   15m
kube-public       Active   15m
kube-system       Active   15m
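The two node groups asked for 2 + 1 nodes, so three should be Ready. A small sketch that counts them instead of reading the table by eye; `ready_node_count` is a hypothetical helper name.

```shell
# Print how many nodes have a STATUS column of exactly "Ready".
# ready_node_count is a hypothetical helper name.
ready_node_count() {
  kubectl get nodes --no-headers |
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage:
# [ "$(ready_node_count)" -eq 3 ] && echo "all nodes are ready"
```

Nodes with a compound status such as `Ready,SchedulingDisabled` are deliberately not counted.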

eksctl wrote the following into ~/.kube/config:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EUXhOakU0TWpreE4xb1hEVE14TURReE5ERTRNamt4TjFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTXV5Ck13MXRQTkpiOHk1L2sxSVV1UVlQa2lSTTArSVRFemVBM0dvbCttK2doa2xuTGhva1BFbmFjZGNCeTIzbFl6VlIKZmFoYk5WcDVydjA4bFZWSkVWUUZ4WDNneEhrcDFDRnBnMFJpbkUveFF3L2E1ckNCZExtbTE4S05xb0p0Q3E0UwpFMWxycjlJQjZ5ZDB6Z0ZCQ1hJTnlmcGthLzR4dFZjY01KemgrK09aSE5rYUtKNzl3a3JQN1VDVFc4ZHRzT0wrCldJN1VEYks3QlJIc0dBMEhqdW1EaTJVbXcwV0JsNzROZkpNRjJOM1ZjUHVTRTJMMnRhMEFWcmtZOERiak1TaSsKSVJTcDJTa0tJZHFiWkJya2E1blVjR0NSQStEQ21YVkRFSEd2b0NOQzhJNTJoSHRHTG9Nb3FTUGJmWnFkVFhiNwpvTHlVdHB4alNZUmdDMU56cDY4Q0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFDWUFGQkdSTHJqTFRuS2lwT1laeGQ1M2t1UnQKZXd4Q0VyUU9WQU1aRzhQZkgwa0ZWZnJjU1o4TkVLMHdtaTU4aC9XUHkrZjZtSlVib0JBcWNvREJvM3o1ME5SSQoxazR0UkFBRVVOTDJiRlJHNnc2eEloK3dOYXE0d2RUUkdVZUNXaW1pQmdKc3RBdDlSMUlPMjFDRS9ocXNLVDJ4Cm4zYUR4WXVDT2YwelhSR1Y1MUdENmM5MFZhNGJpbTJSTk9Ua2JZaUlNZjdPWUxyQ05rN1BIM3NRL1J6R2RGUTEKNTFTdG5kOUVibWdWWS8xSHBRSTloT2pRYUFOTlF6bHQwck8wM0VmK3FiSkVDTDlVNG1CRUxROVdINCt6UGZSKwoxblNJaDlXUzh1VG5xemJjV2c0Sjdna1RlZUZiaGVvT056RzJJNWdzTUZHcldoWUZXb1AyaGlSUG9TZz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    server: https://7E15034C82C7D8EC04494AF0A02D33D1.yl4.us-west-2.eks.amazonaws.com
  name: bogo-EKS.us-west-2.eksctl.io
...
contexts:
- context:
    cluster: bogo-EKS.us-west-2.eksctl.io
    user: K@bogo-EKS.us-west-2.eksctl.io
  name: K@bogo-EKS.us-west-2.eksctl.io
current-context: K@bogo-EKS.us-west-2.eksctl.io
kind: Config
preferences: {}
users:
- name: K@bogo-EKS.us-west-2.eksctl.io
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1alpha1
      args:
      - token
      - -i
      - bogo-EKS
      command: aws-iam-authenticator
      env:
      - name: AWS_STS_REGIONAL_ENDPOINTS
        value: regional
      - name: AWS_DEFAULT_REGION
        value: us-west-2
...
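The `users` entry above relies on an exec plugin: kubectl runs the listed command (here `aws-iam-authenticator token -i bogo-EKS`) to obtain a token, so that binary has to be on the PATH. A sketch that checks this for the current context; `auth_plugin_available` is a hypothetical helper name, and it assumes the current context's user is the first entry shown by `--minify`.

```shell
# Succeed if the exec command configured for the current context is on PATH.
# auth_plugin_available is a hypothetical helper name.
auth_plugin_available() {
  cmd="$(kubectl config view --minify \
    -o jsonpath='{.users[0].user.exec.command}')"
  [ -n "$cmd" ] && command -v "$cmd" >/dev/null 2>&1
}

# Usage:
# auth_plugin_available || echo "exec plugin from kubeconfig not found on PATH"
```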

To delete the cluster:

$ eksctl delete cluster --name bogo-EKS --region us-west-2

2021-04-16 12:07:05 [ℹ] eksctl version 0.44.0

2021-04-16 12:07:05 [ℹ] using region us-west-2

2021-04-16 12:07:05 [ℹ] deleting EKS cluster "bogo-EKS"

2021-04-16 12:07:06 [ℹ] deleted 0 Fargate profile(s)

2021-04-16 12:07:06 [✔] kubeconfig has been updated

2021-04-16 12:07:06 [ℹ] cleaning up AWS load balancers created by Kubernetes objects of Kind Service or Ingress

2021-04-16 12:07:09 [!] retryable error (Throttling: Rate exceeded

status code: 400, request id: 1bd3a2f9-5fbc-4323-8528-b80b6cef4d85) from cloudformation/DescribeStacks - will retry after delay of 8.428049304s

2021-04-16 12:07:18 [ℹ] 2 sequential tasks: { 2 parallel sub-tasks: { delete nodegroup "bogo-ng-2", delete nodegroup "bogo-ng-1" }, delete cluster control plane "bogo-EKS" [async] }

2021-04-16 12:07:18 [ℹ] will delete stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:07:18 [ℹ] waiting for stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2" to get deleted

2021-04-16 12:07:18 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:07:18 [ℹ] will delete stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:07:18 [ℹ] waiting for stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1" to get deleted

2021-04-16 12:07:18 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:07:34 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:07:36 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:07:54 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:07:55 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:08:13 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:08:13 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:08:31 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:08:33 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:08:48 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:08:50 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-2"

2021-04-16 12:09:03 [ℹ] waiting for CloudFormation stack "eksctl-bogo-EKS-nodegroup-bogo-ng-1"

2021-04-16 12:09:04 [ℹ] will delete stack "eksctl-bogo-EKS-cluster"

2021-04-16 12:09:04 [✔] all cluster resources were deleted

Or:

$ eksctl delete cluster -f cluster.yaml
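Note that the log above marks the control plane deletion as `[async]`, so the cluster can still exist for a while after eksctl returns. A sketch for checking whether it is really gone, using the exit status of `aws eks describe-cluster`; `cluster_exists` is a hypothetical helper name.

```shell
# Succeed while cluster $1 in region $2 can still be described.
# cluster_exists is a hypothetical helper name.
cluster_exists() {
  aws eks describe-cluster --name "$1" --region "$2" >/dev/null 2>&1
}

# Usage:
# cluster_exists bogo-EKS us-west-2 || echo "bogo-EKS is gone"
```

Any failure of `describe-cluster` (including missing credentials) counts as "does not exist" in this sketch, so it is only a rough check. Alternatively, `eksctl delete cluster` accepts a `--wait` flag to block until the resources are deleted.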

The dry-run feature lets us inspect and change the generated configuration, including the instances matched by the instance selector, before proceeding to create a nodegroup. With --dry-run, eksctl creates nothing and prints the expanded ClusterConfig instead:

$ eksctl create cluster -f cluster.yaml --dry-run

apiVersion: eksctl.io/v1alpha5
iam:
  vpcResourceControllerPolicy: true
  withOIDC: false
kind: ClusterConfig
metadata:
  name: bogo-EKS
  region: us-west-2
  version: "1.18"
nodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 2
  disableIMDSv1: false
  disablePodIMDS: false
  iam:
    withAddonPolicies:
      albIngress: false
      appMesh: null
      appMeshPreview: null
      autoScaler: false
      certManager: false
      cloudWatch: false
      ebs: false
      efs: false
      externalDNS: false
      fsx: false
      imageBuilder: false
      xRay: false
  instanceSelector: {}
  instanceType: t2.nano
  labels:
    alpha.eksctl.io/cluster-name: bogo-EKS
    alpha.eksctl.io/nodegroup-name: bogo-ng-1
  name: bogo-ng-1
  privateNetworking: false
  securityGroups:
    withLocal: true
    withShared: true
  ssh:
    allow: false
  volumeIOPS: 3000
  volumeSize: 80
  volumeThroughput: 125
  volumeType: gp3
- amiFamily: AmazonLinux2
  desiredCapacity: 1
  disableIMDSv1: false
  disablePodIMDS: false
  iam:
    withAddonPolicies:
      albIngress: false
      appMesh: null
      appMeshPreview: null
      autoScaler: false
      certManager: false
      cloudWatch: false
      ebs: false
      efs: false
      externalDNS: false
      fsx: false
      imageBuilder: false
      xRay: false
  instanceSelector: {}
  instanceType: t2.nano
  labels:
    alpha.eksctl.io/cluster-name: bogo-EKS
    alpha.eksctl.io/nodegroup-name: bogo-ng-2
  name: bogo-ng-2
  privateNetworking: false
  securityGroups:
    withLocal: true
    withShared: true
  ssh:
    allow: false
  volumeIOPS: 3000
  volumeSize: 80
  volumeThroughput: 125
  volumeType: gp3
privateCluster:
  enabled: false
vpc:
  autoAllocateIPv6: false
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: false
    publicAccess: true
  manageSharedNodeSecurityGroupRules: true
  nat:
    gateway: Single
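Since the dry-run output is itself a valid ClusterConfig, we can save it, edit it, and create the cluster from the edited file. A minimal sketch; the function name `expand_config` and the file name `expanded.yaml` are made up for this note.

```shell
# Expand the config file $1 with --dry-run and save the result to $2.
# expand_config is a hypothetical helper name.
expand_config() {
  eksctl create cluster -f "$1" --dry-run > "$2"
}

# Usage:
# expand_config cluster.yaml expanded.yaml
# ... review or edit expanded.yaml (instance types, volume sizes, and so on) ...
# eksctl create cluster -f expanded.yaml
```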

