Docker & Kubernetes - MongoDB with StatefulSets on GCP Kubernetes Engine

In this post, we'll create a MongoDB replica set with Kubernetes StatefulSets, connect to it, and then scale it up and down.

Google Cloud Shell comes loaded with development tools, offers a persistent 5 GB home directory, and runs on Google Cloud. It provides command-line access to our GCP resources. To activate the shell, click the Open Cloud Shell button on the top right toolbar of the GCP console:

In the dialog box that opens, click "START CLOUD SHELL".

gcloud is the command-line tool for Google Cloud Platform. It comes pre-installed on Cloud Shell and supports tab-completion.

Set our zone:

    $ gcloud config set compute/zone us-central1-f
    Updated property [compute/zone].

Run the following command to create a Kubernetes cluster:

    $ gcloud container clusters create hello-world
    ...
    kubeconfig entry generated for hello-world.
    NAME         LOCATION       MASTER_VERSION  MASTER_IP      MACHINE_TYPE   NODE_VERSION  NUM_NODES  STATUS
    hello-world  us-central1-f  1.11.6-gke.2    35.222.37.132  n1-standard-1  1.11.6-gke.2  3          RUNNING

Now that we have our Kubernetes cluster, let's set up MongoDB.

We will be using a replica set so that our data is highly available and redundant. To get that set up, we need to do the following:

- Download the MongoDB replica set/sidecar.

- Instantiate a StorageClass.

- Instantiate a headless service.

- Instantiate a StatefulSet.

Run the following command to clone the MongoDB/Kubernetes replica set sidecar from the GitHub repository:

    $ git clone https://github.com/thesandlord/mongo-k8s-sidecar.git
    Cloning into 'mongo-k8s-sidecar'...
    remote: Enumerating objects: 306, done.
    remote: Total 306 (delta 0), reused 0 (delta 0), pack-reused 306
    Receiving objects: 100% (306/306), 328.29 KiB | 0 bytes/s, done.
    Resolving deltas: 100% (155/155), done.

Navigate to the StatefulSet directory. Next, we'll create a Kubernetes StorageClass, which tells Kubernetes what kind of storage we want to use for the database nodes.

    $ cd ./mongo-k8s-sidecar/example/StatefulSet/
    $ ls
    azure_hdd.yaml  azure_ssd.yaml  googlecloud_hdd.yaml  googlecloud_ssd.yaml  mongo-statefulset.yaml  README.md

On the Google Cloud Platform, we have a couple of storage choices: SSDs and hard disks.

Let's take a look at the googlecloud_ssd.yaml file:

    kind: StorageClass
    apiVersion: storage.k8s.io/v1beta1
    metadata:
      name: fast
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-ssd

The configuration creates a new StorageClass called "fast" that is backed by SSD volumes. Run the following command to deploy the StorageClass:

    $ kubectl apply -f googlecloud_ssd.yaml
    storageclass.storage.k8s.io "fast" created

Now that our StorageClass is configured, our StatefulSet can request a volume that will be created automatically.

Let's open the configuration file (mongo-statefulset.yaml), which defines both the headless service and the StatefulSet.

    # Headless Service configuration
    apiVersion: v1
    kind: Service
    metadata:
      name: mongo
      labels:
        name: mongo
    spec:
      ports:
        - port: 27017
          targetPort: 27017
      clusterIP: None
      selector:
        role: mongo
    ---
    # StatefulSet configuration
    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: mongo
    spec:
      serviceName: "mongo"
      replicas: 3
      template:
        metadata:
          labels:
            role: mongo
            environment: test
        spec:
          terminationGracePeriodSeconds: 10
          containers:
            - name: mongo
              image: mongo
              command:
                - mongod
                - "--replSet"
                - rs0
                - "--smallfiles"
                - "--noprealloc"
              ports:
                - containerPort: 27017
              volumeMounts:
                - name: mongo-persistent-storage
                  mountPath: /data/db
            - name: mongo-sidecar
              image: cvallance/mongo-k8s-sidecar
              env:
                - name: MONGO_SIDECAR_POD_LABELS
                  value: "role=mongo,environment=test"
      volumeClaimTemplates:
        - metadata:
            name: mongo-persistent-storage
            annotations:
              volume.beta.kubernetes.io/storage-class: "fast"
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 100Gi

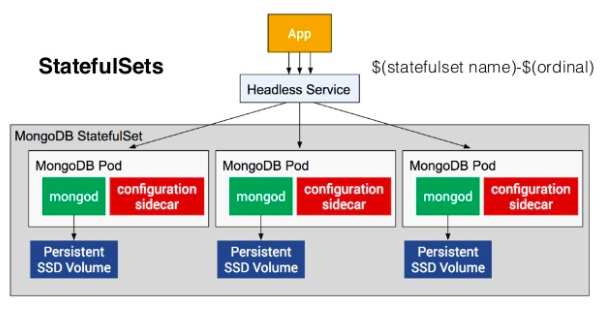

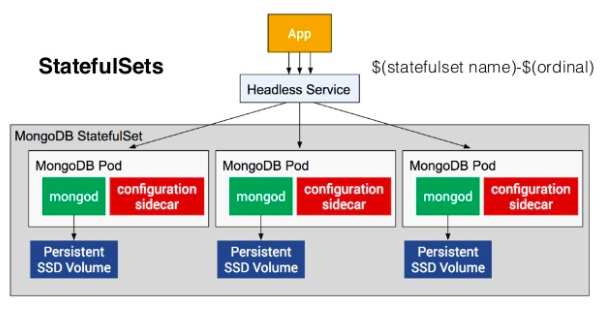

Headless service:

The first section of mongo-statefulset.yaml defines a headless service. In Kubernetes terms, a service describes policies or rules for accessing specific pods. In brief, a headless service is one that doesn't prescribe load balancing. When combined with a StatefulSet, it gives each pod its own DNS name, and in turn a way to connect to each of our MongoDB nodes individually. In the yaml file, we can confirm that the service is headless by verifying that the clusterIP field is set to None.

StatefulSet:

The StatefulSet configuration is the second section of mongo-statefulset.yaml. This is the bread and butter of the application: it's the workload that runs MongoDB and what orchestrates our Kubernetes resources. Referencing the yaml file, we see that the first lines declare the StatefulSet object and its name. Then we move into the spec, where the governing service name and the number of replicas are specified, followed by the pod template, whose metadata carries the labels.

Next comes the pod spec. The terminationGracePeriodSeconds setting gives a pod time to shut down gracefully when we scale down the number of replicas. Then the configurations for the two containers are shown. The first one runs MongoDB with command line flags that configure the replica set name. It also mounts the persistent storage volume at /data/db, the location where MongoDB saves its data. The second container runs the sidecar. A "sidecar" is a helper container that helps the main container run its jobs and tasks; here it configures the MongoDB replica set automatically.

Finally, there is the volumeClaimTemplates section. This is what talks to the StorageClass we created before to provision the volume. It provisions a 100 GiB disk for each MongoDB replica.

Now that we have a basic understanding of what a headless service and a StatefulSet are, let's go ahead and deploy them. Since the two are packaged in one file, mongo-statefulset.yaml, we can create both in one shot with the following command:

    $ kubectl apply -f mongo-statefulset.yaml
    service "mongo" created
    statefulset.apps "mongo" created
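As noted earlier, a headless service is recognizable by its clusterIP being None. Once the manifest is applied, this can be checked from the command line. The following is only a minimal sketch: the function name is_headless is made up for illustration, and it assumes kubectl is already pointed at the cluster created above.

```shell
#!/usr/bin/env bash
# Succeed (exit status 0) if the named Service is headless,
# i.e. its spec.clusterIP is the literal string "None".
# Argument: <service-name>
# NOTE: is_headless is a hypothetical helper, not part of kubectl.
is_headless() {
  local service=$1 cluster_ip
  cluster_ip=$(kubectl get service "$service" -o jsonpath='{.spec.clusterIP}') || return 1
  [[ $cluster_ip == "None" ]]
}

# Example use against the service from this post:
#   is_headless mongo && echo "mongo is headless"
```

A regular ClusterIP service would report an IP address here instead of None.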

Now that we have a cluster running and our replica set deployed, it's time to connect to it.

A Kubernetes StatefulSet deploys its pods sequentially. It waits for each MongoDB replica set member to fully boot up and for its backing disk to be created before starting the next member. Run the following command to confirm that all three members are up:

    $ kubectl get statefulset
    NAME    DESIRED   CURRENT   AGE
    mongo   3         3         2m

At this point, we should have three pods created in our cluster. These correspond to the three nodes in our MongoDB replica set. To view them:

    $ kubectl get pods
    NAME      READY   STATUS    RESTARTS   AGE
    mongo-0   2/2     Running   0          3m
    mongo-1   2/2     Running   0          2m
    mongo-2   2/2     Running   0          2m
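Because the pods come up one at a time, it can be convenient to wait in a script rather than re-running the commands above by hand. Here is a rough sketch of such a wait loop. The function name wait_for_statefulset and its default timeout and polling interval are assumptions made for this example; it relies only on kubectl get with a jsonpath output.

```shell
#!/usr/bin/env bash
# Poll a StatefulSet until its ready replica count matches the desired count.
# Arguments: <statefulset-name> [timeout-seconds] [poll-interval-seconds]
# NOTE: wait_for_statefulset is a hypothetical helper written for this post.
wait_for_statefulset() {
  local name=$1 timeout=${2:-300} interval=${3:-5}
  local desired ready elapsed=0
  desired=$(kubectl get statefulset "$name" -o jsonpath='{.spec.replicas}') || return 1
  [[ -n $desired ]] || return 1
  while ((elapsed <= timeout)); do
    ready=$(kubectl get statefulset "$name" -o jsonpath='{.status.readyReplicas}')
    # The field can be empty while no pod is ready yet, so default to 0.
    if ((${ready:-0} == desired)); then
      echo "${name}: ${ready}/${desired} replicas ready"
      return 0
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "${name}: timed out waiting for ${desired} replicas" >&2
  return 1
}

# Example use: wait up to ten minutes for the set from this post.
#   wait_for_statefulset mongo 600
```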

Wait for all three members to be created before moving on. Connect to the first replica set member:

    $ kubectl exec -ti mongo-0 mongo
    Defaulting container name to mongo.
    Use 'kubectl describe pod/mongo-0 -n default' to see all of the containers in this pod.
    MongoDB shell version v4.0.6
    connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("1c19e64b-0e5f-478e-b22c-aa06365a93c4") }
    MongoDB server version: 4.0.6
    Welcome to the MongoDB shell.
    ...
    ---
    Enable MongoDB's free cloud-based monitoring service, which will then receive and display
    metrics about your deployment (disk utilization, CPU, operation statistics, etc).

    The monitoring data will be available on a MongoDB website with a unique URL accessible to you
    and anyone you share the URL with. MongoDB may use this information to make product
    improvements and to suggest MongoDB products and deployment options to you.

    To enable free monitoring, run the following command: db.enableFreeMonitoring()
    To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
    ---
    >

We now have a REPL environment connected to MongoDB. Let's initiate the replica set with a default configuration by running the rs.initiate() command:

    > rs.initiate()
    {
      "info2" : "no configuration specified. Using a default configuration for the set",
      "me" : "localhost:27017",
      "ok" : 1,
      "operationTime" : Timestamp(1550555064, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1550555064, 1),
        "signature" : {
          "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
          "keyId" : NumberLong(0)
        }
      }
    }
    rs0:OTHER>

Next, print the replica set configuration by running the rs.conf() command.

This outputs the details for the current member of replica set rs0. In this post, we see only one member. To get details of all members, we need to expose the replica set through additional services such as NodePort or LoadBalancer.

    rs0:PRIMARY> rs.conf()
    {
      "_id" : "rs0",
      "version" : 1,
      "protocolVersion" : NumberLong(1),
      "writeConcernMajorityJournalDefault" : true,
      "members" : [
        {
          "_id" : 0,
          "host" : "localhost:27017",
          "arbiterOnly" : false,
          "buildIndexes" : true,
          "hidden" : false,
          "priority" : 1,
          "tags" : {
          },
          "slaveDelay" : NumberLong(0),
          "votes" : 1
        }
      ],
      "settings" : {
        "chainingAllowed" : true,
        "heartbeatIntervalMillis" : 2000,
        "heartbeatTimeoutSecs" : 10,
        "electionTimeoutMillis" : 10000,
        "catchUpTimeoutMillis" : -1,
        "catchUpTakeoverDelayMillis" : 30000,
        "getLastErrorModes" : {
        },
        "getLastErrorDefaults" : {
          "w" : 1,
          "wtimeout" : 0
        },
        "replicaSetId" : ObjectId("5c6b97b8e729cff1da837701")
      }
    }
    rs0:PRIMARY>
    rs0:PRIMARY> exit
    bye
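To see which members a pod currently reports without opening an interactive session, the same mongo shell can be run non-interactively. This is a sketch under a few assumptions: the helper name replica_set_members is hypothetical, the container is named mongo as in the manifest above, and the image still ships the legacy mongo shell used in this post.

```shell
#!/usr/bin/env bash
# Print "<host> <state>" for each replica set member the given pod reports.
# Argument: <pod-name>
# NOTE: replica_set_members is a hypothetical helper; it assumes the legacy
# "mongo" shell is available in the container named "mongo".
replica_set_members() {
  local pod=$1
  kubectl exec "$pod" -c mongo -- mongo --quiet --eval \
    'rs.status().members.forEach(function (m) { print(m.name + " " + m.stateStr); })'
}

# Example use against the first pod from this post:
#   replica_set_members mongo-0
```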

A big advantage of Kubernetes and StatefulSets is that we can scale the number of MongoDB replicas up and down with a single command. To scale up the number of replica set members from 3 to 5, run this command:

    $ kubectl scale --replicas=5 statefulset mongo
    statefulset.apps "mongo" scaled

In a few minutes, there will be 5 MongoDB pods. Run this command to view them:

    $ kubectl get pods
    NAME      READY   STATUS    RESTARTS   AGE
    mongo-0   2/2     Running   0          16m
    mongo-1   2/2     Running   0          16m
    mongo-2   2/2     Running   0          15m
    mongo-3   2/2     Running   0          1m
    mongo-4   2/2     Running   0          34s

To scale down the number of replica set members from 5 back to 3, run this command:

    $ kubectl scale --replicas=3 statefulset mongo
    statefulset.apps "mongo" scaled
    $ kubectl get pods
    NAME      READY   STATUS    RESTARTS   AGE
    mongo-0   2/2     Running   0          18m
    mongo-1   2/2     Running   0          17m
    mongo-2   2/2     Running   0          16m
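Scaling down removes the pods mongo-3 and mongo-4, but their persistent volume claims stay behind, which is why the cleanup at the end of this post deletes five claims. As a small sketch, the filter below picks out claims whose ordinal is no longer covered by the replica count. The function name leftover_claims is hypothetical, and it assumes claim names end in "-<ordinal>", as in mongo-persistent-storage-mongo-3.

```shell
#!/usr/bin/env bash
# Read claim names on stdin and print those whose trailing ordinal is
# greater than or equal to the current replica count.
# Argument: <replicas>
# NOTE: leftover_claims is a hypothetical helper written for this post.
leftover_claims() {
  local replicas=$1 claim ordinal
  while IFS= read -r claim; do
    ordinal=${claim##*-}                       # text after the last "-"
    [[ $ordinal =~ ^[0-9]+$ ]] || continue     # skip names without an ordinal
    if ((10#$ordinal >= replicas)); then
      echo "$claim"
    fi
  done
}

# Demo with the claim names from this post, after scaling back to 3 replicas.
printf '%s\n' mongo-persistent-storage-mongo-{0..4} | leftover_claims 3
```

Against a live cluster, the input could come from `kubectl get pvc -l role=mongo -o name`, using the same label selector as the cleanup step.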

Each pod in a StatefulSet backed by a Headless Service will have a stable DNS name. The template follows this format: <pod-name>.<service-name>

This means the DNS names for the MongoDB replica set are:

    mongo-0.mongo
    mongo-1.mongo
    mongo-2.mongo

We can use these names directly in the connection string URI of our app.
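The naming scheme, and the way the names feed into a connection string, can be sketched in a few lines of shell. Treat this as an illustration only: the function names are made up, the database name exampledb is a placeholder, and the replicaSet option is set to rs0 to match the --replSet flag in the manifest.

```shell
#!/usr/bin/env bash
# Print one stable DNS name per pod: <statefulset>-<ordinal>.<service>
# Arguments: <statefulset-name> <service-name> <replicas>
# NOTE: both functions are hypothetical helpers written for this post.
pod_dns_names() {
  local set_name=$1 service=$2 replicas=$3 i
  for ((i = 0; i < replicas; i++)); do
    echo "${set_name}-${i}.${service}"
  done
}

# Join the pod DNS names into a MongoDB connection string.
# Arguments: <statefulset-name> <service-name> <replicas> <database> <replica-set>
mongo_uri() {
  local database=$4 rs_name=$5 hosts
  hosts=$(pod_dns_names "$1" "$2" "$3" | sed 's/$/:27017/' | paste -sd, -)
  echo "mongodb://${hosts}/${database}?replicaSet=${rs_name}"
}

pod_dns_names mongo mongo 3
mongo_uri mongo mongo 3 exampledb rs0   # "exampledb" is a placeholder name
```

These short names resolve only from pods in the same namespace as the service; from another namespace, the namespace has to be appended to the name.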

Using a database is outside the scope of this post. For this case, however, the connection string URI would be:

"mongodb://mongo-0.mongo,mongo-1.mongo,mongo-2.mongo:27017/dbname_?"

To clean up, delete the StatefulSet, the headless service, and the persistent volume claims, and then delete the cluster:

    $ kubectl delete statefulset mongo
    statefulset.apps "mongo" deleted
    $ kubectl delete svc mongo
    service "mongo" deleted
    $ kubectl delete pvc -l role=mongo
    persistentvolumeclaim "mongo-persistent-storage-mongo-0" deleted
    persistentvolumeclaim "mongo-persistent-storage-mongo-1" deleted
    persistentvolumeclaim "mongo-persistent-storage-mongo-2" deleted
    persistentvolumeclaim "mongo-persistent-storage-mongo-3" deleted
    persistentvolumeclaim "mongo-persistent-storage-mongo-4" deleted
    $ gcloud container clusters delete "hello-world"
    The following clusters will be deleted.
     - [hello-world] in [us-central1-f]
    Do you want to continue (Y/n)? y
    Deleting cluster hello-world...done.
    Deleted [https://container.googleapis.com/v1/projects/qwiklabs-gcp-af2d261ece8f1f40/zones/us-central1-f/clusters/hello-world].

Reference: Running a MongoDB Database in Kubernetes with StatefulSets

