Docker : Continuous Delivery with Jenkins Multibranch Pipeline for Dev, Canary, and Production Environments on GCP Kubernetes Engine

In this post, we'll do the following:

- Provision a Jenkins application into a Kubernetes Engine cluster.

- Install and set up Jenkins from the Charts repository using the Helm package manager within the cluster (exposing the Jenkins web UI and the builder/agent registration ports within the Kubernetes cluster using ClusterIP services).

- Managing Jenkins plugins can land us in dependency hell. However, Helm and its Jenkins chart take most of that pain away: once Helm and Tiller are configured for our cluster, deploying the Jenkins application, including persistent volumes, pods, services, and a load balancer, becomes much easier.

- Set up a Jenkins Multibranch Pipeline. This lets us use different Jenkinsfiles for different branches of the same project. Jenkins automatically discovers, manages, and executes Pipelines for branches that contain a Jenkinsfile in source control, so there is no more manual Pipeline creation and management.

- Deploy a sample application written in Go to development, canary, and production environments.

- Get a better understanding of Kubernetes namespaces (default, kube-system, production, new-feature).

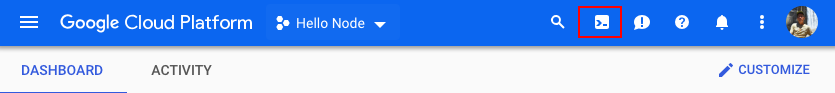

Google Cloud Shell is loaded with development tools, offers a persistent 5GB home directory, and runs on Google Cloud. It provides command-line access to our GCP resources. To activate it, click the Open Cloud Shell button on the top-right toolbar of the GCP console.

In the dialog box that opens, click "START CLOUD SHELL".

gcloud is the command-line tool for Google Cloud Platform. It comes pre-installed on Cloud Shell and supports tab-completion.

Set our zone:

$ gcloud config set compute/zone us-central1-f
Updated property [compute/zone].

Run the following command to create a Kubernetes cluster:

$ gcloud container clusters create jenkins-cd \
    --num-nodes 2 \
    --machine-type n1-standard-2 \
    --scopes "https://www.googleapis.com/auth/projecthosting,cloud-platform"
kubeconfig entry generated for jenkins-cd.
NAME        LOCATION       MASTER_VERSION  MASTER_IP      MACHINE_TYPE   NODE_VERSION  NUM_NODES  STATUS
jenkins-cd  us-central1-f  1.11.6-gke.2    35.222.179.66  n1-standard-2  1.11.6-gke.2  2          RUNNING

The extra scopes enable Jenkins to access Cloud Source Repositories and Google Container Registry.

Let's check that our cluster is set up by running the following command:

$ gcloud container clusters list
NAME        LOCATION       MASTER_VERSION  MASTER_IP      MACHINE_TYPE   NODE_VERSION  NUM_NODES  STATUS
jenkins-cd  us-central1-f  1.11.6-gke.2    35.222.179.66  n1-standard-2  1.11.6-gke.2  2          RUNNING

Get the credentials for our cluster:

$ gcloud container clusters get-credentials jenkins-cd
Fetching cluster endpoint and auth data.
kubeconfig entry generated for jenkins-cd.

kubectl uses these credentials, which get-credentials writes to our kubeconfig, to access our newly provisioned cluster.

Then, confirm that we can connect to it by running the following command:

$ kubectl cluster-info
Kubernetes master is running at https://35.222.179.66
GLBCDefaultBackend is running at https://35.222.179.66/api/v1/namespaces/kube-system/services/default-http-backend:http/proxy
Heapster is running at https://35.222.179.66/api/v1/namespaces/kube-system/services/heapster/proxy
KubeDNS is running at https://35.222.179.66/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
Metrics-server is running at https://35.222.179.66/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Clone the sample code into our Cloud Shell:

$ git clone https://github.com/Einsteinish/Continuous-Deployment-to-GKE-using-Jenkins-MultibranchPipeline-with-Helm.git
$ cd Continuous-Deployment-to-GKE-using-Jenkins-MultibranchPipeline-with-Helm

We will use Helm to install Jenkins from the Charts repository.

The Helm project started as a Kubernetes sub-project, and Helm calls itself the package manager for Kubernetes. Helm makes it easy to configure and deploy Kubernetes applications: it simplifies complex applications by grouping together all of their necessary components, parameterizing them, and packaging them into a single Helm chart. We can think of a chart as a collection of files that describe a related set of Kubernetes resources.

Although Helm charts are easy to write, curated charts for most common applications are also available in the Charts repository.

Helm 2 consists of two components:

- The helm client (what we usually mean when we say "helm").

- The Helm server component, called Tiller, which handles requests from the helm client and interacts with the Kubernetes API.

Download the helm binary, extract it, and copy it into the current directory:

$ wget https://storage.googleapis.com/kubernetes-helm/helm-v2.9.1-linux-amd64.tar.gz
$ tar zxfv helm-v2.9.1-linux-amd64.tar.gz
$ cp linux-amd64/helm .

Add a cluster administrator in the cluster's RBAC so that we can give Jenkins permissions in the cluster:

$ kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value account)
Your active configuration is: [cloudshell-23716]
clusterrolebinding.rbac.authorization.k8s.io "cluster-admin-binding" created

Grant Tiller, the server side of Helm, the "cluster-admin" role in our cluster:

$ kubectl create serviceaccount tiller --namespace kube-system
serviceaccount "tiller" created
$ kubectl create clusterrolebinding tiller-admin-binding --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
clusterrolebinding.rbac.authorization.k8s.io "tiller-admin-binding" created

Note that before we install tiller on our cluster we set up a service account with a defined role (cluster-admin) for tiller to operate within. This is due to the introduction of Role Based Access Control (RBAC).

Initialize Helm to make sure the server side of Helm (Tiller) is properly installed in our cluster, then refresh the chart repositories:

$ ./helm init --service-account=tiller
...
Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation
Happy Helming!
$ ./helm repo update
Hang tight while we grab the latest from your chart repositories...
...Skip local chart repository
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈ Happy Helming!⎈

Ensure Helm is properly installed by running the following command. We should see version v2.9.1 for both the client and the server:

$ ./helm version
Client: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}
Server: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}

We will use a custom values file (jenkins/values.yaml) to add the GCP-specific plugin needed to use service account credentials to reach our Cloud Source Repository.

Use the Helm CLI to deploy the chart with our configuration settings:

$ ./helm install -n cd stable/jenkins -f jenkins/values.yaml --version 0.16.6 --wait
...
Configure the Kubernetes plugin in Jenkins to use the following Service Account name cd-jenkins using the following steps:
  Create a Jenkins credential of type Kubernetes service account with service account name cd-jenkins
  Under configure Jenkins -- Update the credentials config in the cloud section to use the service account credential you created in the step above.

Make sure the Jenkins pod goes to the Running state and the container is in the READY state:

$ kubectl get pods
NAME                          READY   STATUS     RESTARTS   AGE
cd-jenkins-7676c895d6-b54n5   0/1     Init:0/1   0          27s
$ kubectl get pods
NAME                          READY   STATUS     RESTARTS   AGE
cd-jenkins-7676c895d6-p9vkf   1/1     Running    0          3m

Run the following commands to set up port forwarding to the Jenkins UI from the Cloud Shell:

$ export POD_NAME=$(kubectl get pods -l "component=cd-jenkins-master" -o jsonpath="{.items[0].metadata.name}")
$ echo $POD_NAME
cd-jenkins-7676c895d6-b54n5
$ kubectl port-forward $POD_NAME 8080:8080 >> /dev/null &

Check if the Jenkins Service was created properly:

$ kubectl get svc
NAME               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE
cd-jenkins         ClusterIP   10.11.252.185   <none>        8080/TCP    6m
cd-jenkins-agent   ClusterIP   10.11.244.127   <none>        50000/TCP   6m
kubernetes         ClusterIP   10.11.240.1     <none>        443/TCP     20m

The Kubernetes plugin for Jenkins enables builder nodes to be launched automatically when the Jenkins master requests them. When they finish their work, they are automatically turned down and their resources are returned to the cluster's resource pool.

Notice that these services route port 8080 (cd-jenkins) and port 50000 (cd-jenkins-agent) to the pods that match their selectors. This exposes the Jenkins web UI and the builder/agent registration port within the Kubernetes cluster. Because both services are of type ClusterIP, they are not accessible from outside the cluster.

The Jenkins chart will automatically create an admin password for us. We can retrieve it:

$ printf $(kubectl get secret cd-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo
VyakokGkw4
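Values under a Secret's .data field are stored base64-encoded, which is why the command pipes the jsonpath result through base64 --decode. As a minimal sketch, the same lookup and decode in Go, assuming the JSON shape printed by `kubectl get secret cd-jenkins -o json`; the helper name decodeSecretKey and the sample secret (the base64 string czNjcjN0, which decodes to s3cr3t) are hypothetical.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// secret keeps only the part of a Kubernetes Secret we need:
// the data map, whose values are base64-encoded strings.
type secret struct {
	Data map[string]string `json:"data"`
}

// decodeSecretKey mirrors jsonpath="{.data.<key>}" | base64 --decode.
func decodeSecretKey(raw []byte, key string) (string, error) {
	var s secret
	if err := json.Unmarshal(raw, &s); err != nil {
		return "", err
	}
	enc, ok := s.Data[key]
	if !ok {
		return "", fmt.Errorf("key %q not found in secret data", key)
	}
	dec, err := base64.StdEncoding.DecodeString(enc)
	if err != nil {
		return "", err
	}
	return string(dec), nil
}

func main() {
	// Hypothetical sample secret, not the real cd-jenkins one.
	raw := []byte(`{"kind":"Secret","data":{"jenkins-admin-password":"czNjcjN0"}}`)
	pw, err := decodeSecretKey(raw, "jenkins-admin-password")
	if err != nil {
		panic(err)
	}
	fmt.Println(pw) // prints s3cr3t
}
```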

To get to the Jenkins user interface, click the Web Preview button in Cloud Shell, then click "Preview on port 8080".

We should now be able to log in with the username "admin" and the auto-generated password.

We now have Jenkins set up in our Kubernetes cluster!

Jenkins will drive our automated CI/CD pipelines in the following sections.

In our continuous deployment pipeline, we'll deploy the sample application, gceme, written in the Go language. We can find it in the repo's sample-app directory. When we run the gceme binary on a Compute Engine instance, the app displays the instance's metadata in an info card.

Continuous Delivery with Jenkins in Kubernetes Engine

The application mimics a microservice by supporting two operation modes:

- backend mode: gceme listens on port 8080 and returns Compute Engine instance metadata in JSON format.

- frontend mode: gceme queries the backend gceme service and renders the resulting JSON in the user interface.
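To make the two modes concrete, here is a hypothetical, much-simplified Go stand-in for gceme; the real source lives in the repo's sample-app directory and is not reproduced here. The Instance fields and the helper names are assumptions, and both tiers run on local httptest servers instead of Compute Engine: the backend answers with JSON, and the frontend fetches that JSON and renders it as an HTML card.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// Instance is a hypothetical subset of the metadata an info card shows.
type Instance struct {
	Name string `json:"name"`
	Zone string `json:"zone"`
}

// backend models backend mode: answer every request with metadata as JSON.
func backend(inst Instance) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		json.NewEncoder(w).Encode(inst)
	})
}

// frontend models frontend mode: query the backend and render its JSON as HTML.
func frontend(backendURL string) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		resp, err := http.Get(backendURL)
		if err != nil {
			http.Error(w, err.Error(), http.StatusBadGateway)
			return
		}
		defer resp.Body.Close()
		var inst Instance
		if err := json.NewDecoder(resp.Body).Decode(&inst); err != nil {
			http.Error(w, err.Error(), http.StatusBadGateway)
			return
		}
		fmt.Fprintf(w, `<div class="card blue">%s (%s)</div>`, inst.Name, inst.Zone)
	})
}

// page starts both tiers on local test servers and returns the frontend's HTML.
func page(inst Instance) (string, error) {
	be := httptest.NewServer(backend(inst))
	defer be.Close()
	fe := httptest.NewServer(frontend(be.URL))
	defer fe.Close()

	resp, err := http.Get(fe.URL)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	html, err := page(Instance{Name: "gke-node-1", Zone: "us-central1-f"})
	if err != nil {
		panic(err)
	}
	fmt.Println(html)
}
```

In the cluster, the frontend reaches the backend through the gceme-backend Service rather than a test server URL, which is what lets production and canary backends sit behind one name.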

We will deploy the application into two different environments:

- Production: the live site that our users access.

- Canary: A smaller-capacity site that receives only a percentage of our user traffic. We use this environment to validate our software with live traffic before it's released to all of our users.

In Google Cloud Shell, create the production Kubernetes namespace to logically isolate the deployment:

$ kubectl create ns production
namespace "production" created

Change into the sample application directory, then create the production and canary deployments and the services using kubectl apply:

$ cd sample-app
$ kubectl apply -f k8s/production -n production
deployment.extensions "gceme-backend-production" created
deployment.extensions "gceme-frontend-production" created
$ kubectl apply -f k8s/canary -n production
deployment.extensions "gceme-backend-canary" created
deployment.extensions "gceme-frontend-canary" created
$ kubectl apply -f k8s/services -n production
service "gceme-backend" created
service "gceme-frontend" created

By default, only one replica of the frontend is deployed. We can use the kubectl scale command to set the production frontend to 4 replicas; the Deployment then keeps 4 replicas running, replacing any pod that fails.

Let's scale up the production environment frontends by running the following command:

$ kubectl scale deployment gceme-frontend-production -n production --replicas 4
deployment.extensions "gceme-frontend-production" scaled

Now confirm that we have 5 pods running for the frontend, 4 for production traffic and 1 for canary releases (changes to the canary release will only affect 1 out of 5 (20%) of users):

$ kubectl get pods -n production -l app=gceme,role=frontend
NAME                                         READY   STATUS    RESTARTS   AGE
gceme-frontend-canary-c9846cd9c-dmcnx        1/1     Running   0          2m
gceme-frontend-production-6976ccd9cd-9vm46   1/1     Running   0          3m
gceme-frontend-production-6976ccd9cd-bll5z   1/1     Running   0          37s
gceme-frontend-production-6976ccd9cd-dn55c   1/1     Running   0          37s
gceme-frontend-production-6976ccd9cd-gmg7s   1/1     Running   0          37s

Also confirm that we have 2 pods for the backend, 1 for production and 1 for canary:

$ kubectl get pods -n production -l app=gceme,role=backend
NAME                                        READY   STATUS    RESTARTS   AGE
gceme-backend-canary-59dcdd58c5-6xfgn       1/1     Running   0          3m
gceme-backend-production-7fd77cf554-7b5r2   1/1     Running   0          3m
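The 20% figure follows from the replica counts. Assuming each Service selects production and canary pods alike (they share the app=gceme and role labels queried above) and spreads traffic evenly across ready endpoints, the canary share is canary / (production + canary). This is an approximation: kube-proxy balances per connection, so the split is only statistically even. A small Go sketch of the arithmetic, where canaryShare is a hypothetical helper:

```go
package main

import "fmt"

// canaryShare returns the expected fraction of traffic reaching canary pods
// when a Service spreads requests evenly over all matching ready pods.
func canaryShare(productionReplicas, canaryReplicas int) float64 {
	total := productionReplicas + canaryReplicas
	if total == 0 {
		return 0
	}
	return float64(canaryReplicas) / float64(total)
}

func main() {
	// Frontend: 4 production + 1 canary. Backend: 1 production + 1 canary.
	fmt.Printf("frontend canary share: %.0f%%\n", 100*canaryShare(4, 1))
	fmt.Printf("backend canary share:  %.0f%%\n", 100*canaryShare(1, 1))
}
```

The same formula shows the trade-off of canary sizing: scaling production to 9 frontend replicas would cut the canary's exposure to about 10% of users.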

Retrieve the external IP for the production services:

$ kubectl get service gceme-frontend -n production
NAME             TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)        AGE
gceme-frontend   LoadBalancer   10.11.252.43   35.184.193.110   80:31509/TCP   5m

Paste the external IP into a browser to see the info card.

For future use, let's store the frontend service load balancer IP in an environment variable:

$ export FRONTEND_SERVICE_IP=$(kubectl get -o jsonpath="{.status.loadBalancer.ingress[0].ip}" --namespace=production services gceme-frontend)
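The jsonpath expression walks status.loadBalancer.ingress[0].ip in the Service object. While GCP is still provisioning the load balancer (kubectl get shows EXTERNAL-IP as <pending>), the ingress list is empty, so the lookup finds nothing and the variable typically ends up empty. A minimal Go sketch of the same lookup that makes the pending case explicit; the struct covers only the fields needed, externalIP and errPending are hypothetical names, and 203.0.113.10 is a documentation placeholder address.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// service models only the fields read by {.status.loadBalancer.ingress[0].ip}.
type service struct {
	Status struct {
		LoadBalancer struct {
			Ingress []struct {
				IP string `json:"ip"`
			} `json:"ingress"`
		} `json:"loadBalancer"`
	} `json:"status"`
}

// errPending signals that no external IP has been assigned yet.
var errPending = errors.New("load balancer IP not assigned yet")

// externalIP returns the first ingress IP of a Service given as JSON.
func externalIP(raw []byte) (string, error) {
	var svc service
	if err := json.Unmarshal(raw, &svc); err != nil {
		return "", err
	}
	ing := svc.Status.LoadBalancer.Ingress
	if len(ing) == 0 || ing[0].IP == "" {
		return "", errPending
	}
	return ing[0].IP, nil
}

func main() {
	ready := []byte(`{"status":{"loadBalancer":{"ingress":[{"ip":"203.0.113.10"}]}}}`)
	pending := []byte(`{"status":{"loadBalancer":{}}}`)
	for _, raw := range [][]byte{ready, pending} {
		ip, err := externalIP(raw)
		fmt.Println(ip, err)
	}
}
```

In practice this means it is worth re-running the export once kubectl get service shows a real EXTERNAL-IP, rather than right after creating the Service.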

Confirm that both services are working by opening the frontend external IP address in the browser. Check the version output of the service by running the following command (it should read 1.0.0):

$ curl http://$FRONTEND_SERVICE_IP/version
1.0.0

Now that we have successfully deployed the sample application, we will set up a pipeline for continuous deployments.

We'll be creating a repository to host the sample app source code. Jenkins will download code from the Cloud Source Repositories.

Let's create a copy of the gceme sample app and push it to a Cloud Source Repository. First, create the repository:

$ gcloud alpha source repos create default
Created [default].

Initialize the sample-app directory as its own Git repository:

$ git init
$ git config credential.helper gcloud.sh

Add the Cloud Source Repository as the origin remote:

$ git remote add origin https://source.developers.google.com/p/$DEVSHELL_PROJECT_ID/r/default

Set the username and email address for our Git commits. Replace [EMAIL_ADDRESS] with our Git email address and [USERNAME] with our Git username:

$ git config --global user.email "[EMAIL_ADDRESS]"
$ git config --global user.name "[USERNAME]"

Add, commit, and push the files:

$ git add .
$ git commit -m "Initial commit"
[master (root-commit) 0c27228] initial commit
 31 files changed, 2434 insertions(+)
 create mode 100644 Dockerfile
 create mode 100644 Gopkg.lock
 create mode 100644 Gopkg.toml
 create mode 100644 Jenkinsfile
 create mode 100644 html.go
 create mode 100644 k8s/canary/backend-canary.yaml
 create mode 100644 k8s/canary/frontend-canary.yaml
 create mode 100644 k8s/dev/backend-dev.yaml
 create mode 100644 k8s/dev/default.yml
 create mode 100644 k8s/dev/frontend-dev.yaml
 create mode 100644 k8s/production/backend-production.yaml
 create mode 100644 k8s/production/frontend-production.yaml
 create mode 100644 k8s/services/backend.yaml
 create mode 100644 k8s/services/frontend.yaml
 create mode 100644 main.go
 create mode 100644 main_test.go
 create mode 100644 vendor/cloud.google.com/go/AUTHORS
 create mode 100644 vendor/cloud.google.com/go/CONTRIBUTORS
 create mode 100644 vendor/cloud.google.com/go/LICENSE
 create mode 100644 vendor/cloud.google.com/go/compute/metadata/metadata.go
 create mode 100644 vendor/golang.org/x/net/AUTHORS
 create mode 100644 vendor/golang.org/x/net/CONTRIBUTORS
 create mode 100644 vendor/golang.org/x/net/LICENSE
 create mode 100644 vendor/golang.org/x/net/PATENTS
 create mode 100644 vendor/golang.org/x/net/context/context.go
 create mode 100644 vendor/golang.org/x/net/context/ctxhttp/ctxhttp.go
 create mode 100644 vendor/golang.org/x/net/context/ctxhttp/ctxhttp_pre17.go
 create mode 100644 vendor/golang.org/x/net/context/go17.go
 create mode 100644 vendor/golang.org/x/net/context/go19.go
 create mode 100644 vendor/golang.org/x/net/context/pre_go17.go
 create mode 100644 vendor/golang.org/x/net/context/pre_go19.go
$ git push origin master
...
 * [new branch]      master -> master

Configure our credentials to allow Jenkins to access the code repository. Jenkins will use our cluster's service account credentials in order to download code from the Cloud Source Repositories.

- In the Jenkins user interface, click "Credentials" in the left navigation.

- Click "Jenkins".

- Click "Global credentials (unrestricted)".

- Click "Add Credentials" in the left navigation.

- Select "Google Service Account from metadata" from the "Kind" drop-down and click OK.

Navigate to our Jenkins user interface and follow these steps to configure a Pipeline job.

- Click "Jenkins" > "New Item" in the left navigation.

- Name the project "sample-app", then choose the "Multibranch Pipeline" option and click "OK".

- On the next page, in the "Branch Sources" section, click "Add Source" and select git.

- Paste the HTTPS clone URL of our sample-app repo in "Cloud Source Repositories" into the "Project Repository" field. Replace [PROJECT_ID] with our GCP Project ID:

https://source.developers.google.com/p/[PROJECT_ID]/r/default

- From the "Credentials" drop-down, select the name of the credentials we created when adding our service account in the previous steps.

- Under the "Scan Multibranch Pipeline Triggers" section, check the "Periodically if not otherwise run" box and set the "Interval" value to "1 minute".

- Click "Save", leaving all other options at their defaults.

After we complete these steps, a job named "Branch indexing" runs. This meta-job identifies the branches in our repository and checks existing branches for changes. If we click "sample-app" in the top left, we should see the master job.
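Branch indexing can be pictured as a filter over the branches a scan discovers. The Go sketch below is a simplified, hypothetical model, not Jenkins' implementation: a branch gets a job only if it contains a Jenkinsfile, and a build is scheduled when the branch head differs from the commit last built (or the branch has never been built). The branch name docs, the commit SHAs, and the function name branchesToBuild are made up for illustration.

```go
package main

import "fmt"

// Branch is a simplified view of what a branch scan sees.
type Branch struct {
	Name           string
	Head           string // commit SHA at the tip of the branch
	HasJenkinsfile bool
}

// branchesToBuild returns, in scan order, the branches that need a build:
// those with a Jenkinsfile whose head differs from the last built commit.
func branchesToBuild(branches []Branch, lastBuilt map[string]string) []string {
	var out []string
	for _, b := range branches {
		if !b.HasJenkinsfile {
			continue // no Jenkinsfile, so no job for this branch
		}
		if prev, ok := lastBuilt[b.Name]; ok && prev == b.Head {
			continue // nothing new since the last build
		}
		out = append(out, b.Name)
	}
	return out
}

func main() {
	branches := []Branch{
		{Name: "master", Head: "abc1234", HasJenkinsfile: true},
		{Name: "new-feature", Head: "def5678", HasJenkinsfile: true},
		{Name: "docs", Head: "0123abc", HasJenkinsfile: false},
	}
	lastBuilt := map[string]string{"master": "abc1234"}
	fmt.Println(branchesToBuild(branches, lastBuilt)) // [new-feature]
}
```

With the 1-minute scan interval configured above, a pushed branch is picked up on the next scan, which is why a build can take up to a minute to start.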

Note: The first run of the master job might fail until we make a few code changes in the next step.

Now, we have successfully created a Jenkins pipeline.

We'll create the development environment for continuous integration in the following sections.

Development branches are a set of environments that our developers use to test their code changes before submitting them for integration into the live site. These environments are scaled-down versions of our application, but need to be deployed using the same mechanisms as the live environment.

To create a development environment from a feature branch, we can push the branch to the Git server and let Jenkins deploy our environment.

Create a development branch:

$ git branch
* master
$ git checkout -b new-feature
Switched to a new branch 'new-feature'

We need to modify the pipeline definition.

The Jenkinsfile that defines that pipeline is written using the Jenkins Pipeline Groovy syntax. Using a Jenkinsfile allows an entire build pipeline to be expressed in a single file that lives alongside our source code.

Here is our Jenkinsfile in the sample-app folder:

def project = 'REPLACE_WITH_YOUR_PROJECT_ID'
def appName = 'gceme'
def feSvcName = "${appName}-frontend"
def imageTag = "gcr.io/${project}/${appName}:${env.BRANCH_NAME}.${env.BUILD_NUMBER}"

pipeline {
  agent {
    kubernetes {
      label 'sample-app'
      defaultContainer 'jnlp'
      yaml """
apiVersion: v1
kind: Pod
metadata:
  labels:
    component: ci
spec:
  # Use service account that can deploy to all namespaces
  serviceAccountName: cd-jenkins
  containers:
  - name: golang
    image: golang:1.10
    command:
    - cat
    tty: true
  - name: gcloud
    image: gcr.io/cloud-builders/gcloud
    command:
    - cat
    tty: true
  - name: kubectl
    image: gcr.io/cloud-builders/kubectl
    command:
    - cat
    tty: true
"""
    }
  }
  stages {
    stage('Test') {
      steps {
        container('golang') {
          sh """
            ln -s `pwd` /go/src/sample-app
            cd /go/src/sample-app
            go test
          """
        }
      }
    }
    stage('Build and push image with Container Builder') {
      steps {
        container('gcloud') {
          sh "PYTHONUNBUFFERED=1 gcloud builds submit -t ${imageTag} ."
        }
      }
    }
    stage('Deploy Canary') {
      // Canary branch
      when { branch 'canary' }
      steps {
        container('kubectl') {
          // Change deployed image in canary to the one we just built
          sh("sed -i.bak 's#gcr.io/cloud-solutions-images/gceme:1.0.0#${imageTag}#' ./k8s/canary/*.yaml")
          sh("kubectl --namespace=production apply -f k8s/services/")
          sh("kubectl --namespace=production apply -f k8s/canary/")
          sh("echo http://`kubectl --namespace=production get service/${feSvcName} -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` > ${feSvcName}")
        }
      }
    }
    stage('Deploy Production') {
      // Production branch
      when { branch 'master' }
      steps {
        container('kubectl') {
          // Change deployed image in production to the one we just built
          sh("sed -i.bak 's#gcr.io/cloud-solutions-images/gceme:1.0.0#${imageTag}#' ./k8s/production/*.yaml")
          sh("kubectl --namespace=production apply -f k8s/services/")
          sh("kubectl --namespace=production apply -f k8s/production/")
          sh("echo http://`kubectl --namespace=production get service/${feSvcName} -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` > ${feSvcName}")
        }
      }
    }
    stage('Deploy Dev') {
      // Developer branches
      when {
        not { branch 'master' }
        not { branch 'canary' }
      }
      steps {
        container('kubectl') {
          // Create namespace if it doesn't exist
          sh("kubectl get ns ${env.BRANCH_NAME} || kubectl create ns ${env.BRANCH_NAME}")
          // Don't use public load balancing for development branches
          sh("sed -i.bak 's#LoadBalancer#ClusterIP#' ./k8s/services/frontend.yaml")
          sh("sed -i.bak 's#gcr.io/cloud-solutions-images/gceme:1.0.0#${imageTag}#' ./k8s/dev/*.yaml")
          sh("kubectl --namespace=${env.BRANCH_NAME} apply -f k8s/services/")
          sh("kubectl --namespace=${env.BRANCH_NAME} apply -f k8s/dev/")
          echo 'To access your environment run `kubectl proxy`'
          echo "Then access your service via http://localhost:8001/api/v1/namespaces/${env.BRANCH_NAME}/services/${feSvcName}:80/proxy/"
        }
      }
    }
  }
}
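The branch handling in this Jenkinsfile boils down to a few string rules. The Go sketch below models them outside Jenkins, as an illustration only: imageTag reproduces the imageTag definition, target reproduces the three when conditions (which deploy stage runs and which namespace it applies manifests to), and rewriteImage approximates the sed 's#old#new#' image substitution. The function names and the project ID my-project are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// imageTag mirrors
// "gcr.io/${project}/${appName}:${env.BRANCH_NAME}.${env.BUILD_NUMBER}".
func imageTag(project, app, branch string, build int) string {
	return fmt.Sprintf("gcr.io/%s/%s:%s.%d", project, app, branch, build)
}

// target mirrors the three when conditions: which deploy stage runs for a
// branch and which namespace it deploys to.
func target(branch string) (stage, namespace string) {
	switch branch {
	case "canary":
		return "Deploy Canary", "production"
	case "master":
		return "Deploy Production", "production"
	default:
		return "Deploy Dev", branch
	}
}

// rewriteImage approximates sed 's#old#new#': on each line only the first
// occurrence is replaced (no g flag). The pattern is treated as a literal
// string here, whereas in sed its dots are regex wildcards.
func rewriteImage(manifest, oldImage, newImage string) string {
	lines := strings.Split(manifest, "\n")
	for i, l := range lines {
		lines[i] = strings.Replace(l, oldImage, newImage, 1)
	}
	return strings.Join(lines, "\n")
}

func main() {
	tag := imageTag("my-project", "gceme", "new-feature", 3)
	stage, ns := target("new-feature")
	fmt.Println(tag, stage, ns)
	fmt.Println(rewriteImage("        image: gcr.io/cloud-solutions-images/gceme:1.0.0",
		"gcr.io/cloud-solutions-images/gceme:1.0.0", tag))
}
```

One consequence of these rules: the Dev stage uses the branch name verbatim as both the namespace and part of the image tag. Kubernetes namespace names must be lowercase DNS labels and image tags cannot contain "/", so a branch such as feature/login (hypothetical) would likely fail that stage unless its name were sanitized first.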

Pipelines support powerful features such as parallelization and manual user approval.

In order for the pipeline to work as expected, we need to modify the Jenkinsfile to set our project ID.

Replace REPLACE_WITH_YOUR_PROJECT_ID with our GCP project ID. To find it, we can run:

$ gcloud config get-value project

To change the application, we will change the gceme cards from blue to orange. In html.go, replace both instances of

<div class="card blue">

with

<div class="card orange">

Then, in main.go, the version should be changed to:

const version string = "2.0.0"

Commit and push our changes:

$ git add Jenkinsfile html.go main.go
$ git commit -m "Version 2.0.0"
$ git push origin new-feature

This will trigger a build of our development environment.

After the change is pushed to the Git repository, navigate to the Jenkins user interface where we can see that our build started for the "new-feature" branch. It can take up to a minute for the changes to be picked up.

Track the output of the build for a few minutes and watch for the "kubectl --namespace=new-feature apply..." messages to begin. Our new-feature branch will now be deployed to our cluster.

Once that's all taken care of, start the proxy in the background:

$ kubectl proxy &
[2] 1214

Verify that our application is accessible by sending a request to localhost and letting kubectl proxy forward it to our service:

$ curl \
    http://localhost:8001/api/v1/namespaces/new-feature/services/gceme-frontend:80/proxy/version
2.0.0

We see it responded with 2.0.0, which is the version that is now running.
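The URL follows the API server's service proxy pattern, /api/v1/namespaces/<namespace>/services/<name>:<port>/proxy/<path>, which kubectl proxy serves locally (on port 8001 by default). A small Go helper that assembles it for any namespace and service; the function name proxyURL is hypothetical.

```go
package main

import "fmt"

// proxyURL builds the API server service-proxy URL that kubectl proxy forwards:
// http://<proxyAddr>/api/v1/namespaces/<ns>/services/<name>:<port>/proxy/<path>
func proxyURL(proxyAddr, namespace, service string, port int, path string) string {
	return fmt.Sprintf("http://%s/api/v1/namespaces/%s/services/%s:%d/proxy/%s",
		proxyAddr, namespace, service, port, path)
}

func main() {
	fmt.Println(proxyURL("localhost:8001", "new-feature", "gceme-frontend", 80, "version"))
}
```

Because every development branch gets its own namespace, only the namespace segment changes from one branch environment to the next.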

We have set up the development environment.

In the following sections, we will build on what we learned in the previous sections by deploying a canary release to test out a new feature.

Now that we have verified that our app is running the latest code in the development environment, let's deploy that code to the canary environment.

Create a canary branch from new-feature branch and push it to the Git server:

$ git branch
  master
* new-feature
$ git checkout -b canary
Switched to a new branch 'canary'
$ git push origin canary

In Jenkins, we should see that the canary pipeline has kicked off. Once it completes, we can check the service URL to ensure that some of the traffic is being served by our new version. Over many requests, about 1 in 5 (in no particular order) should return version 2.0.0; a short sample like the one below can deviate noticeably from that ratio.

$ export FRONTEND_SERVICE_IP=$(kubectl get -o \
    jsonpath="{.status.loadBalancer.ingress[0].ip}" --namespace=production services gceme-frontend)
$ echo $FRONTEND_SERVICE_IP
35.184.162.219
$ while true; do curl http://$FRONTEND_SERVICE_IP/version; sleep 1; done
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
2.0.0
1.0.0
1.0.0
1.0.0
1.0.0
2.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
1.0.0
2.0.0
1.0.0
^C

In the next section, we will deploy the new version to production.

Now that our canary release was successful, let's deploy to the rest of our production fleet.

Merge the canary branch into the master branch and push it to the Git server:

$ git branch
* canary
  master
  new-feature
$ git checkout master
Switched to branch 'master'
$ git merge canary
Updating f571b23..f74a71c
Fast-forward
 Jenkinsfile | 2 +-
 html.go     | 4 ++--
 main.go     | 2 +-
 3 files changed, 4 insertions(+), 4 deletions(-)
$ git push origin master
...
   f571b23..f74a71c  master -> master

In Jenkins, we should see the master pipeline has kicked off.

Once complete, we can check the service URL to ensure that all of the traffic is being served by our new version, 2.0.0.

$ export FRONTEND_SERVICE_IP=$(kubectl get -o \
    jsonpath="{.status.loadBalancer.ingress[0].ip}" --namespace=production services gceme-frontend)
$ echo $FRONTEND_SERVICE_IP
35.184.162.219
$ while true; do curl http://$FRONTEND_SERVICE_IP/version; sleep 1; done
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
^C

We can also navigate to the site where the gceme application displays its info cards: the card color has changed from blue to orange. Here's the command again to get the external IP address:

$ kubectl get service gceme-frontend -n production
NAME             TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
gceme-frontend   LoadBalancer   10.11.253.155   35.184.162.219   80:31055/TCP   51m


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization