Docker image and container via docker commands (search, pull, run, ps, restart, attach, and rm)

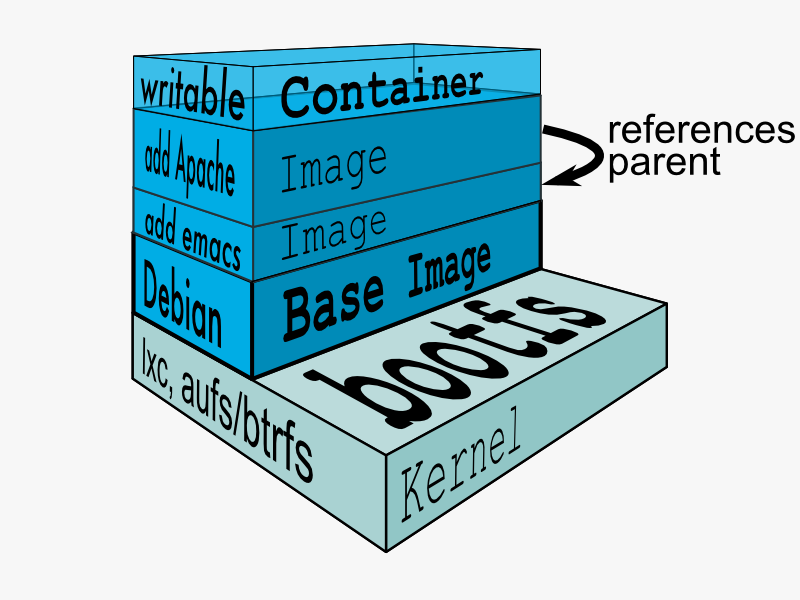

The problem with virtual machines built using VirtualBox or VMware is that we have to run an entire OS for every VM. That's where Docker comes in. Instead of booting a separate OS per machine, Docker runs containers on top of a single host OS and its Linux kernel, using the technology known as LinuX Containers (LXC). LXC combines cgroups and namespace support to provide an isolated environment for applications. Docker can also use LXC as one of its execution drivers (in the Docker versions used here; later releases dropped the LXC driver), and it adds image management and deployment services on top.

Docker allows us to run applications inside containers. Running an application inside a container takes a single command: docker run.

We need a disk image (in Docker terms, simply an image) to make the virtualization work. The image represents the system that runs inside the container, and images are the basis of containers.

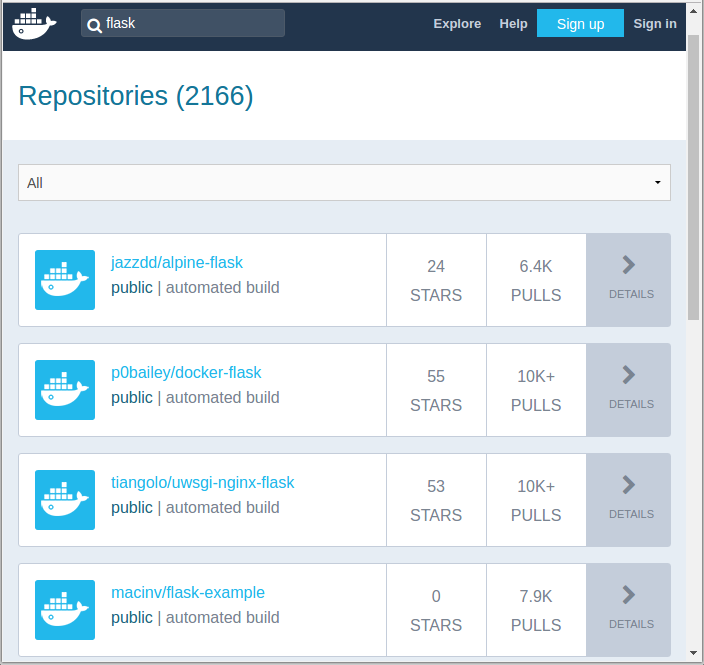

A Docker registry is a store of existing images that we can use to run and create containerized applications.

A large community has already done a lot of work building images. Docker, Inc. supports and maintains its registry, Docker Hub, and the community around it.

We can search for images on the registry hub's web site; for example, the sample picture shows the results of searching for "flask".

The docker search command lets us search the registry for the images we want from the command line:

    $ docker search --help
    Usage: docker search [OPTIONS] TERM

    Search the Docker Hub for images

      --automated=false     Only show automated builds
      --no-trunc=false      Don't truncate output
      -s, --stars=0         Only displays with at least x stars

Running a search from the command line, here for "ubuntu", gives us the same kind of results we get on the web site:

    $ docker search ubuntu
    NAME                       DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    ubuntu                     Ubuntu is a Debian-based Linux operating s...   5969    [OK]
    rastasheep/ubuntu-sshd     Dockerized SSH service, built on top of of...   83                 [OK]
    ubuntu-upstart             Upstart is an event-based replacement for ...   71      [OK]
    ubuntu-debootstrap         debootstrap --variant=minbase --components...   30      [OK]
    torusware/speedus-ubuntu   Always updated official Ubuntu docker imag...   27                 [OK]
    nuagebec/ubuntu            Simple always updated Ubuntu docker images...   20                 [OK]
    ...

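That's a lot of output, so let's keep only the images with at least 20 stars. Newer Docker versions do this with --filter=stars=N, which replaces the older -s/--stars option shown in the help above: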

    $ docker search --filter=stars=20 ubuntu
    NAME                       DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    ubuntu                     Ubuntu is a Debian-based Linux operating s...   5969    [OK]
    rastasheep/ubuntu-sshd     Dockerized SSH service, built on top of of...   83                 [OK]
    ubuntu-upstart             Upstart is an event-based replacement for ...   71      [OK]
    ubuntu-debootstrap         debootstrap --variant=minbase --components...   30      [OK]
    torusware/speedus-ubuntu   Always updated official Ubuntu docker imag...   27                 [OK]
    nuagebec/ubuntu            Simple always updated Ubuntu docker images...   20                 [OK]
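The filtered search can also be wrapped in a small shell function. This is a minimal sketch of a hypothetical helper named search_popular, which is not a Docker command. It assumes a Docker release whose docker search supports --filter and --format, and it prints only each image's name and star count.

```shell
# search_popular TERM [MIN_STARS]
# Hypothetical helper (not a Docker command): search Docker Hub for TERM,
# keep images with at least MIN_STARS stars (default 20), and print only
# "name: stars" for each. Assumes "docker search" supports --filter/--format.
search_popular() {
  local term="$1"
  local min_stars="${2:-20}"
  docker search \
    --filter "stars=${min_stars}" \
    --format '{{.Name}}: {{.StarCount}}' \
    "$term"
}
```

Running search_popular ubuntu repeats the search above with the default threshold of 20 stars. Running search_popular flask 100 would raise the threshold to 100.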

Once we've found the image we'd like to use, we can download it with the docker pull command:

    $ docker pull --help

    Usage: docker pull [OPTIONS] NAME[:TAG|@DIGEST]

    Pull an image or a repository from a registry

    Options:
      -a, --all-tags              Download all tagged images in the repository
      --disable-content-trust     Skip image verification (default true)
      --help                      Print usage
The pull command contacts the registry, grabs the image, and downloads it to our local machine:

    $ docker pull ubuntu
    Using default tag: latest
    latest: Pulling from library/ubuntu
    aafe6b5e13de: Pull complete
    0a2b43a72660: Pull complete
    18bdd1e546d2: Pull complete
    8198342c3e05: Pull complete
    f56970a44fd4: Pull complete
    Digest: sha256:f3a61450ae43896c4332bda5e78b453f4a93179045f20c8181043b26b5e79028
    Status: Downloaded newer image for ubuntu:latest

Without a tag, docker pull downloads only the image tagged latest, as the "Using default tag: latest" line shows. Downloading every tagged image in the repository requires -a (--all-tags). To see which Docker images are available on our machine, we use docker images:

    $ docker images
    REPOSITORY   TAG      IMAGE ID       CREATED       VIRTUAL SIZE
    ubuntu       latest   5506de2b643b   4 weeks ago   199.3 MB

So the output shows that only one image is currently on the local machine. We can also see that the image has a tag, latest.

We can also be more specific and download only the version we need by adding a tag to the image name, as in docker pull centos:latest below. In Docker, versions are marked with tags.

    $ docker pull centos:latest
    centos:latest: The image you are pulling has been verified
    5b12ef8fd570: Pull complete
    ae0c2d0bdc10: Pull complete
    511136ea3c5a: Already exists
    Status: Downloaded newer image for centos:latest
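Pulling only the tags we need is usually cheaper than docker pull -a, which downloads every tagged image in the repository. Here is a sketch of a hypothetical helper, pull_tags, that pulls a list of tags one by one and stops at the first failure:

```shell
# pull_tags REPO TAG [TAG...]
# Hypothetical helper: pull REPO:TAG for each TAG given, in order.
# Returns non-zero as soon as one pull fails.
pull_tags() {
  local repo="$1"
  shift
  local tag
  for tag in "$@"; do
    docker pull "${repo}:${tag}" || return 1
  done
}
```

For example, pull_tags ubuntu 14.04 16.04 would fetch two Ubuntu releases. The tag names are only illustrative, so check which tags the repository actually publishes.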

Here are the images now on our local machine:

    $ docker images
    REPOSITORY   TAG      IMAGE ID       CREATED       VIRTUAL SIZE
    centos       latest   ae0c2d0bdc10   2 weeks ago   224 MB
    ubuntu       latest   5506de2b643b   4 weeks ago   199.3 MB

The docker images command returns the following columns:

- REPOSITORY: The name of the repository, such as "ubuntu" or "centos".

- TAG: A tag marks a specific point in a repository's history, such as a particular release. Versions can be tagged with a version number or a name, and there's a special tag called "latest", which by convention represents the latest version and is the default when no tag is given. In our list, both images are the "latest" versions of two different Linux distributions.

- IMAGE ID: This is like the primary key for the image. Sometimes, such as when we commit a container without specifying a name or tag, the repository or the tag is <NONE>, but we can always refer to a specific image or container using its ID.

- CREATED: When the image was created, as opposed to when it was pulled. This can help us assess how "fresh" a particular build is. Docker appears to update its official images fairly frequently.

- VIRTUAL SIZE: The size of the image (newer Docker versions label this column SIZE).
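Newer Docker releases can also reshape and filter this listing. The sketch below defines two hypothetical helpers and assumes a Docker version that supports docker images --format and --filter. list_images prints one line per image, optionally limited to one repository. dangling_images prints the IDs of dangling (untagged) images.

```shell
# list_images [REPO[:TAG]]
# Hypothetical helper: one line per image as "repository:tag id size".
list_images() {
  docker images --format '{{.Repository}}:{{.Tag}} {{.ID}} {{.Size}}' "$@"
}

# dangling_images
# Hypothetical helper: IDs of dangling images, i.e. untagged images that
# are not intermediate layers of other images.
dangling_images() {
  docker images --filter dangling=true -q
}
```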

Now we have images on our local machine. What do we do with them? This is where the docker run command comes in:

    $ docker run --help

    Usage: docker run [OPTIONS] IMAGE [COMMAND] [ARG...]

    Run a command in a new container

      -a, --attach=[]            Attach to STDIN, STDOUT or STDERR.
      --add-host=[]              Add a custom host-to-IP mapping (host:ip)
      -c, --cpu-shares=0         CPU shares (relative weight)
      --cap-add=[]               Add Linux capabilities
      --cap-drop=[]              Drop Linux capabilities
      --cidfile=""               Write the container ID to the file
      --cpuset=""                CPUs in which to allow execution (0-3, 0,1)
      -d, --detach=false         Detached mode: run the container in the background and print the new container ID
      --device=[]                Add a host device to the container (e.g. --device=/dev/sdc:/dev/xvdc)
      --dns=[]                   Set custom DNS servers
      --dns-search=[]            Set custom DNS search domains
      -e, --env=[]               Set environment variables
      --entrypoint=""            Overwrite the default ENTRYPOINT of the image
      --env-file=[]              Read in a line delimited file of environment variables
      --expose=[]                Expose a port from the container without publishing it to your host
      -h, --hostname=""          Container host name
      -i, --interactive=false    Keep STDIN open even if not attached
      --link=[]                  Add link to another container in the form of name:alias
      --lxc-conf=[]              (lxc exec-driver only) Add custom lxc options --lxc-conf="lxc.cgroup.cpuset.cpus = 0,1"
      -m, --memory=""            Memory limit (format: <number><optional unit>, where unit = b, k, m or g)
      --name=""                  Assign a name to the container
      --net="bridge"             Set the Network mode for the container
                                   'bridge': creates a new network stack for the container on the docker bridge
                                   'none': no networking for this container
                                   'container:<name|id>': reuses another container network stack
                                   'host': use the host network stack inside the container. Note: the host mode gives the container full access to local system services such as D-bus and is therefore considered insecure.
      -P, --publish-all=false    Publish all exposed ports to the host interfaces
      -p, --publish=[]           Publish a container's port to the host
                                   format: ip:hostPort:containerPort | ip::containerPort | hostPort:containerPort | containerPort
                                   (use 'docker port' to see the actual mapping)


The docker run --help output is rather long; here is the rest of it:

      --privileged=false         Give extended privileges to this container
      --restart=""               Restart policy to apply when a container exits (no, on-failure[:max-retry], always)
      --rm=false                 Automatically remove the container when it exits (incompatible with -d)
      --security-opt=[]          Security Options
      --sig-proxy=true           Proxy received signals to the process (even in non-TTY mode). SIGCHLD, SIGSTOP, and SIGKILL are not proxied.
      -t, --tty=false            Allocate a pseudo-TTY
      -u, --user=""              Username or UID
      -v, --volume=[]            Bind mount a volume (e.g., from the host: -v /host:/container, from Docker: -v /container)
      --volumes-from=[]          Mount volumes from the specified container(s)
      -w, --workdir=""           Working directory inside the container

Our local machine is running Ubuntu 14.04.1 LTS:

    $ cat /etc/issue
    Ubuntu 14.04.1 LTS \n \l

Now let's use docker run with the centos image. This creates a container based on the image and executes the /bin/bash command in it. Thanks to -i (keep STDIN open) and -t (allocate a pseudo-TTY), it then drops us into a shell inside the container, where we can continue to work:

    $ docker run -it centos:latest /bin/bash
    [root@98f52715ecfa /]#

We're now in a bash shell inside the container.

If we look at /etc/redhat-release:

    [root@98f52715ecfa /]# cat /etc/redhat-release
    CentOS Linux release 7.0.1406 (Core)

We're now running CentOS 7.0 on top of our Ubuntu 14.04 machine.

We also have access to yum:

    [root@98f52715ecfa /]# yum
    Loaded plugins: fastestmirror
    You need to give some command
    Usage: yum [options] COMMAND

    List of Commands:

    check          Check for problems in the rpmdb
    check-update   Check for available package updates
    ...

Let's create a new file in the /home directory:

    [root@98f52715ecfa /]# ls
    bin  dev  etc  home  lib  lib64  lost+found  media  mnt  opt  proc  root  run  sbin  selinux  srv  sys  tmp  usr  var
    [root@98f52715ecfa /]# cd /home
    [root@98f52715ecfa home]# ls
    [root@98f52715ecfa home]# touch bogotobogo.txt
    [root@98f52715ecfa home]# ls
    bogotobogo.txt
    [root@98f52715ecfa home]# exit
    exit
    k@laptop:~$

After creating the file in the container, we exited the shell and are back on our local Ubuntu machine. Because /bin/bash was the container's main process, exiting it also stopped the container.
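Sometimes we only need one command's output rather than a shell. In that case we can drop -i and -t and add --rm, so Docker deletes the container as soon as the command exits, and it never shows up in docker ps -a. Here is a minimal sketch of a hypothetical helper named redhat_release. It only suits Red Hat family images such as centos.

```shell
# redhat_release IMAGE
# Hypothetical helper: start a throwaway container from IMAGE, print its
# /etc/redhat-release, and let --rm remove the container when cat exits.
redhat_release() {
  docker run --rm "$1" cat /etc/redhat-release
}
```

For instance, redhat_release centos:latest should print the same release line we saw in the interactive shell, assuming the centos:latest image has not changed since.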

    $ docker ps
    CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

The docker ps command lists containers, but currently it shows none. That's because, by default, it shows only running containers, and nothing is running.

To list all containers, including stopped ones, we use the -a option:

    $ docker ps -a
    CONTAINER ID   IMAGE           COMMAND       CREATED          STATUS                     PORTS   NAMES
    98f52715ecfa   centos:latest   "/bin/bash"   12 minutes ago   Exited (0) 5 minutes ago           goofy_yonath
    f8c5951db6f5   ubuntu:latest   "/bin/bash"   4 hours ago      Exited (0) 4 hours ago             furious_almeida
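Newer Docker versions can also narrow and reformat this listing. Here is a sketch of a hypothetical helper, exited_containers, that shows only stopped containers. It assumes docker ps supports --filter status=exited and --format.

```shell
# exited_containers
# Hypothetical helper: list stopped containers only, one per line as
# "id name status".
exited_containers() {
  docker ps -a --filter status=exited --format '{{.ID}} {{.Names}} {{.Status}}'
}
```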

How can we restart a Docker container?

    $ docker restart --help

    Usage: docker restart [OPTIONS] CONTAINER [CONTAINER...]

    Restart a running container

      -t, --time=10    Number of seconds to try to stop for before killing the container. Once killed it will then be restarted. Default is 10 seconds.

Let's restart the container we created earlier, using its ID:

    $ docker restart 98f52715ecfa
    98f52715ecfa
    $ docker ps
    CONTAINER ID   IMAGE           COMMAND       CREATED          STATUS          PORTS   NAMES
    98f52715ecfa   centos:latest   "/bin/bash"   20 minutes ago   Up 10 seconds           goofy_yonath

Now we have one running container, which is already running its /bin/bash command again.

The docker attach command allows us to attach to a running container using the container's ID or name, either to view its ongoing output or to control it interactively. We can attach to the same contained process multiple times simultaneously, screen sharing style, or quickly view the progress of our daemonized process.

    $ docker attach --help

    Usage: docker attach [OPTIONS] CONTAINER

    Attach to a running container

      --no-stdin=false    Do not attach STDIN
      --sig-proxy=true    Proxy all received signals to the process (even in non-TTY mode). SIGCHLD, SIGKILL, and SIGSTOP are not proxied.

We can attach to a running container:

    $ docker attach 98f52715ecfa
    [root@98f52715ecfa /]#
    [root@98f52715ecfa /]# cd /home
    [root@98f52715ecfa home]# ls
    bogotobogo.txt

Now we're back in the CentOS container we created, and the file we made is still there in /home, because restarting a container keeps its filesystem.
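The restart-then-attach sequence can be wrapped in one function. Here is a minimal sketch with a hypothetical name, reattach. It takes a container ID or name and attaches only if the restart succeeded.

```shell
# reattach CONTAINER
# Hypothetical helper: restart CONTAINER (ID or name), then attach to it.
# "docker restart" prints the container's ID when it succeeds.
reattach() {
  docker restart "$1" && docker attach "$1"
}
```

Docker also has docker start -a -i (--attach, --interactive), which starts a stopped container and attaches to it in a single command.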

After exiting the shell, we can delete a container we no longer need, here the Ubuntu one, with docker rm:

    [root@98f52715ecfa home]# exit
    exit
    $ docker ps -a
    CONTAINER ID   IMAGE           COMMAND       CREATED          STATUS                      PORTS   NAMES
    98f52715ecfa   centos:latest   "/bin/bash"   30 minutes ago   Exited (0) 14 seconds ago           goofy_yonath
    f8c5951db6f5   ubuntu:latest   "/bin/bash"   5 hours ago      Exited (0) 5 hours ago              furious_almeida
    $ docker rm f8c5951db6f5
    f8c5951db6f5
    $ docker ps -a
    CONTAINER ID   IMAGE           COMMAND       CREATED          STATUS                     PORTS   NAMES
    98f52715ecfa   centos:latest   "/bin/bash"   32 minutes ago   Exited (0) 2 minutes ago           goofy_yonath

We deleted the Ubuntu container, and now only the CentOS container is left.
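Rather than copying container IDs by hand, we can let docker ps find them. Here is a sketch of a hypothetical helper, rm_exited_from, that removes every stopped container created from a given image. It assumes a Docker version whose docker ps supports the status and ancestor filters.

```shell
# rm_exited_from IMAGE
# Hypothetical helper: remove all stopped containers created from IMAGE,
# leaving running containers and other images' containers alone.
rm_exited_from() {
  local ids
  ids=$(docker ps -a -q --filter status=exited --filter "ancestor=$1")
  if [ -n "$ids" ]; then
    # Unquoted on purpose: each ID becomes a separate argument.
    docker rm $ids
  fi
}
```

For example, rm_exited_from ubuntu:latest would match the stopped Ubuntu container above and leave the CentOS container alone.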

Working with Docker tends to leave lots of images and containers behind, so we may want to remove all of them to free up disk space.

To delete all containers (docker rm refuses to remove a running container unless we add -f):

$ docker rm $(docker ps -a -q)

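To delete all images (docker rmi refuses to remove an image that a container still uses, so delete the containers first):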

$ docker rmi $(docker images -q)

Here, the options do the following:

- -a (docker ps): Show all containers (the default shows only running ones)

- -q: Only display the IDs
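There is one catch with the two commands above. When there are no containers (or no images), the $(...) substitution expands to nothing, and docker rm (or docker rmi) exits with an error about a missing argument. Here is a minimal sketch of a hypothetical cleanup_all helper that skips each step when its list is empty and removes containers before images:

```shell
# cleanup_all
# Hypothetical helper: remove all containers, then all images.
# Containers go first because docker rmi refuses to delete images that
# existing containers still use; running containers make docker rm fail
# unless -f is added.
cleanup_all() {
  local containers images
  containers=$(docker ps -a -q)
  if [ -n "$containers" ]; then
    docker rm $containers   # unquoted on purpose: one argument per ID
  fi
  images=$(docker images -q)
  if [ -n "$images" ]; then
    docker rmi $images      # unquoted on purpose: one argument per ID
  fi
}
```

Newer Docker releases also provide docker container prune and docker image prune -a for this kind of cleanup.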


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization