Docker & Kubernetes : Cassandra with a StatefulSet

In this post, we'll learn how to deploy Apache Cassandra on Kubernetes by using Minikube.

Apache Cassandra is a massively scalable open source NoSQL database.

By leveraging Kubernetes concepts such as PersistentVolumes and StatefulSets, we can provide a resilient installation of Cassandra and be confident that its data (state) is safe.

In our example, a custom Cassandra seed provider lets the database discover new Cassandra instances as they join the Cassandra cluster.

We'll also see that StatefulSets make it easier to deploy stateful applications into our Kubernetes cluster.

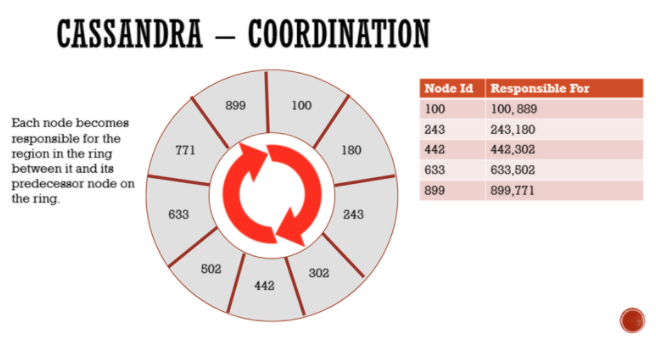

Picture source: Classic Cassandra and Coordination

In this post, the Pods that belong to the StatefulSet are Cassandra nodes and are members of the Cassandra cluster (called a ring). When those Pods run in our Kubernetes cluster, the Kubernetes control plane schedules those Pods onto Kubernetes Nodes.

When a Cassandra node starts, it uses a seed list to bootstrap discovery of other nodes in the ring. We'll deploy a custom Cassandra seed provider that lets the database discover new Cassandra Pods as they appear inside our Kubernetes cluster.

Let's begin by starting Minikube.

Minikube defaults to 1024MiB of memory and 1 CPU. Running Minikube with the default resource configuration may result in insufficient resource errors.

    $ minikube start --memory 5120 --cpus=4
    minikube v1.9.2 on Darwin 10.13.3
      KUBECONFIG=/Users/kihyuckhong/.kube/config
    Using the hyperkit driver based on existing profile
    Starting control plane node m01 in cluster minikube
    Restarting existing hyperkit VM for "minikube" ...
    Preparing Kubernetes v1.18.0 on Docker 19.03.8 ...
    Enabling addons: dashboard, default-storageclass, storage-provisioner
    Done! kubectl is now configured to use "minikube"

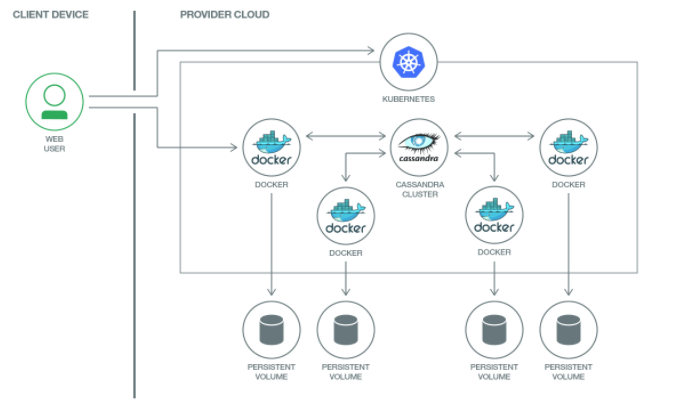

We will use a headless Service for Cassandra so that applications inside the cluster can reach it via KubeDNS without exposing it to the outside world.

To access it from our developer workstation, we can run kubectl exec commands against any of the Cassandra Pods.

If we want to connect an application to it, we can use the KubeDNS name cassandra.default.svc.cluster.local when configuring the application.

Picture source: Scalable multi-node Cassandra deployment on Kubernetes Cluster

The following Service (cassandra-service.yaml) is used for DNS lookups between Cassandra Pods and clients within our cluster:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: cassandra
      name: cassandra
    spec:
      clusterIP: None
      ports:
      - port: 9042
      selector:
        app: cassandra

To allow simple discovery of the Cassandra seed node (which we will deploy shortly), we create a headless Service by specifying None for clusterIP in cassandra-service.yaml. The headless Service lets the Pods use KubeDNS to discover the IP address of the Cassandra seed.
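Behind a headless Service, each Pod of a StatefulSet gets a stable DNS name of the form `<pod-name>.<service-name>.<namespace>.svc.<cluster-domain>`. The sketch below only composes such names as strings. The helper name `pod_fqdn` is made up for illustration, and the default cluster domain `cluster.local` is an assumption.

```shell
# Hypothetical helper: compose the DNS name of a StatefulSet Pod.
# Arguments: StatefulSet name, Pod ordinal, headless Service name, namespace.
# Assumes the default cluster domain "cluster.local".
pod_fqdn() {
  printf '%s-%s.%s.%s.svc.cluster.local\n' "$1" "$2" "$3" "$4"
}

# Names that the first three Pods of a StatefulSet called "cassandra"
# would get behind the "cassandra" Service in the "default" namespace.
for ordinal in 0 1 2; do
  pod_fqdn cassandra "$ordinal" cassandra default
done
```

The first name printed, cassandra-0.cassandra.default.svc.cluster.local, is the kind of address a seed list can point at, because it stays the same even when the Pod is rescheduled and its IP changes.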

Let's create a Service to track all Cassandra StatefulSet members from the cassandra-service.yaml file:

    $ kubectl apply -f cassandra-service.yaml
    service/cassandra created

    $ kubectl get svc cassandra
    NAME        TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    cassandra   ClusterIP   None         <none>        9042/TCP   25s

Because Cassandra is a database, we want to use StatefulSets and PersistentVolumes to ensure the resiliency of our data.

The following StatefulSet manifest (cassandra-statefulset.yaml), which uses the default provisioner for Minikube, creates a Cassandra ring. With replicas: 1 as written here, the ring consists of a single Pod:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: cassandra
      labels:
        app: cassandra
    spec:
      serviceName: cassandra
      replicas: 1
      selector:
        matchLabels:
          app: cassandra
      template:
        metadata:
          labels:
            app: cassandra
        spec:
          terminationGracePeriodSeconds: 1800
          containers:
          - name: cassandra
            image: gcr.io/google-samples/cassandra:v13
            imagePullPolicy: Always
            ports:
            - containerPort: 7000
              name: intra-node
            - containerPort: 7001
              name: tls-intra-node
            - containerPort: 7199
              name: jmx
            - containerPort: 9042
              name: cql
            resources:
              limits:
                cpu: "500m"
                memory: 1Gi
              requests:
                cpu: "500m"
                memory: 1Gi
            securityContext:
              capabilities:
                add:
                  - IPC_LOCK
            lifecycle:
              preStop:
                exec:
                  command:
                  - /bin/sh
                  - -c
                  - nodetool drain
            env:
              - name: MAX_HEAP_SIZE
                value: 512M
              - name: HEAP_NEWSIZE
                value: 100M
              - name: CASSANDRA_SEEDS
                value: "cassandra-0.cassandra.default.svc.cluster.local"
              - name: CASSANDRA_CLUSTER_NAME
                value: "K8Demo"
              - name: CASSANDRA_DC
                value: "DC1-K8Demo"
              - name: CASSANDRA_RACK
                value: "Rack1-K8Demo"
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    fieldPath: status.podIP
            readinessProbe:
              exec:
                command:
                - /bin/bash
                - -c
                - /ready-probe.sh
              initialDelaySeconds: 15
              timeoutSeconds: 5
            # These volume mounts are persistent. They are like inline claims,
            # but not exactly because the names need to match exactly one of
            # the stateful pod volumes.
            volumeMounts:
            - name: cassandra-data
              mountPath: /cassandra_data
      # These are converted to volume claims by the controller
      # and mounted at the paths mentioned above.
      # do not use these in production until ssd GCEPersistentDisk or other ssd pd
      volumeClaimTemplates:
      - metadata:
          name: cassandra-data
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: fast
          resources:
            requests:
              storage: 1Gi
    ---
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: fast
    provisioner: k8s.io/minikube-hostpath
    parameters:
      type: pd-ssd

The StatefulSet is responsible for creating the Pods. It provides ordered deployment, ordered termination and unique network names. Run the following command to start a single Cassandra server:

    $ kubectl apply -f cassandra-statefulset.yaml
    statefulset.apps/cassandra created
    storageclass.storage.k8s.io/fast created

We can check if our StatefulSet has deployed using the command below:

    $ kubectl get statefulsets cassandra
    NAME        READY   AGE
    cassandra   1/1     8m48s

Get the Pods. In this case, we have only one Pod, so we cannot see the ordered creation with "replicas: 1":

    $ kubectl get pods -l="app=cassandra"
    NAME          READY   STATUS    RESTARTS   AGE
    cassandra-0   1/1     Running   0          6m24s
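To actually observe ordered creation, the StatefulSet could be scaled beyond one replica. The following is a minimal sketch, assuming the Minikube VM has enough memory and CPU for the extra Pods. The helper names `scale_ring` and `expected_pods` are hypothetical and not part of the original setup. Running the block as written only prints the expected Pod names and does not touch the cluster.

```shell
# Hypothetical wrapper around `kubectl scale` for the "cassandra" StatefulSet.
# Argument: desired number of replicas.
scale_ring() {
  kubectl scale statefulset cassandra --replicas="$1"
}

# Print the Pod names a StatefulSet of the given size creates,
# in the order the controller creates them (ordinal 0 first).
expected_pods() {
  i=0
  while [ "$i" -lt "$1" ]; do
    echo "cassandra-$i"
    i=$((i + 1))
  done
}

# Prints cassandra-0, cassandra-1 and cassandra-2, one per line
expected_pods 3
```

With a running cluster, calling `scale_ring 3` and then watching `kubectl get pods -l app=cassandra -w` should show cassandra-1 being created only after cassandra-0 is Running and Ready, and cassandra-2 only after cassandra-1.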

The Pod in this post uses the gcr.io/google-samples/cassandra:v13 image from Google’s container registry. The Docker image is based on debian-base and includes OpenJDK 8. This image includes a standard Cassandra installation from the Apache Debian repo.

Run the Cassandra nodetool inside the first Pod to display the status of the ring:

    $ kubectl exec -it cassandra-0 -- nodetool status
    Datacenter: DC1-K8Demo
    ======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address     Load       Tokens  Owns (effective)  Host ID                               Rack
    UN  172.17.0.6  99.59 KiB  32      100.0%            8b22647a-81a6-4f07-98b4-1f7cd0efd13a  Rack1-K8Demo
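In the status output, the first column of each node row combines Status and State, so "UN" means Up and Normal. A rough way to check ring health from a script is to count those rows. The sketch below assumes the column layout shown above; `count_up_normal` is a hypothetical helper name.

```shell
# Hypothetical helper: count ring members reported as "UN" (Up/Normal).
# Reads `nodetool status` output on stdin.
count_up_normal() {
  awk '$1 == "UN" { n++ } END { print n + 0 }'
}

# Sample input copied from the single-node output shown above; prints 1
count_up_normal <<'EOF'
Datacenter: DC1-K8Demo
======================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address     Load       Tokens  Owns (effective)  Host ID                               Rack
UN  172.17.0.6  99.59 KiB  32      100.0%            8b22647a-81a6-4f07-98b4-1f7cd0efd13a  Rack1-K8Demo
EOF
```

Against a live cluster, the same function could be fed with `kubectl exec cassandra-0 -- nodetool status | count_up_normal` (without `-it`, since the output is piped).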

To delete everything in the Cassandra StatefulSet:

    $ kubectl delete statefulset -l app=cassandra
    statefulset.apps "cassandra" deleted

    $ kubectl delete persistentvolumeclaim -l app=cassandra
    persistentvolumeclaim "cassandra-data-cassandra-0" deleted

To delete the Service you set up for Cassandra:

    $ kubectl delete service -l app=cassandra
    service "cassandra" deleted

To clean up Minikube itself:

    $ minikube delete
    Deleting "minikube" in hyperkit ...
    Removed all traces of the "minikube" cluster.

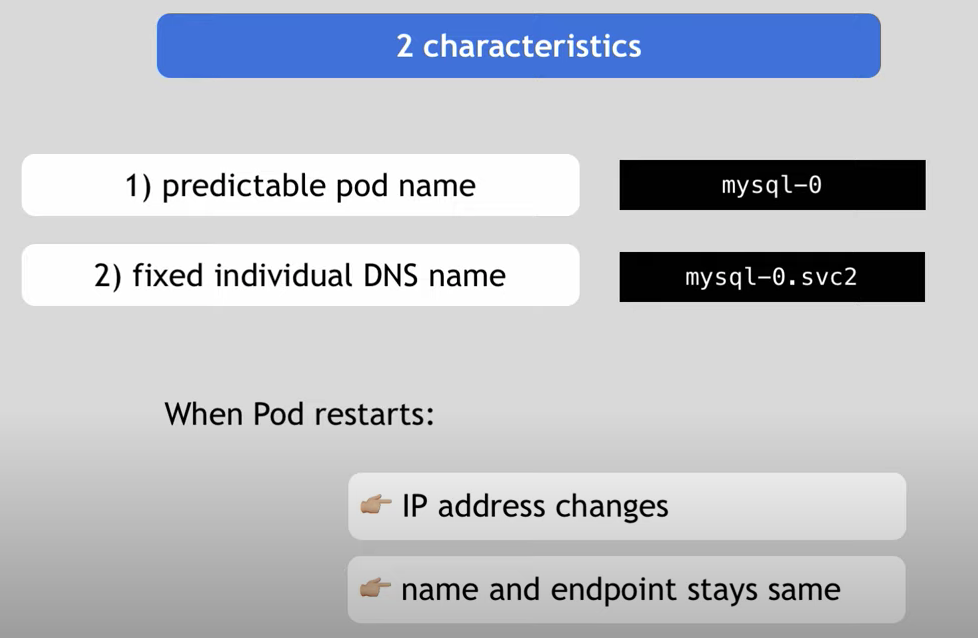

- Explains why we need the StatefulSet, MySQL example: Kubernetes Tutorial: Why do you need StatefulSets in Kubernetes?

- Explains the difference between a regular (stateless) Pod deployment and a StatefulSet deployment, MySQL example: Kubernetes StatefulSet simply explained | Deployment vs StatefulSet

The two main characteristics of a StatefulSet compared with a regular Deployment:

"Stateful applications are not perfect for containerized environment"

