Docker - Deploy Elastic Stack via Helm on minikube

Elastic Stack docker/kubernetes series:

The installation guide is available at Install Minikube.

On Mac:

$ brew install minikube

Start it up. My previous VirtualBox VM had only 4196MB of memory, so I have to delete the existing minikube VM and create a new one with more CPUs and memory.

$ minikube delete

$ minikube start --cpus 4 --memory 6144

o minikube v1.0.1 on darwin (amd64)

$ Downloading Kubernetes v1.14.1 images in the background ...

> Creating virtualbox VM (CPUs=4, Memory=6144MB, Disk=20000MB) ...

- "minikube" IP address is 192.168.99.107

- Configuring Docker as the container runtime ...

- Version of container runtime is 18.06.3-ce

: Waiting for image downloads to complete ...

- Preparing Kubernetes environment ...

- Pulling images required by Kubernetes v1.14.1 ...

- Launching Kubernetes v1.14.1 using kubeadm ...

: Waiting for pods: apiserver proxy etcd scheduler controller dns

- Configuring cluster permissions ...

- Verifying component health .....

+ kubectl is now configured to use "minikube"

= Done! Thank you for using minikube!

Note: we can configure the CPU and memory size before we start minikube:

$ minikube config set memory 6144
$ minikube config set cpus 4
$ minikube start

If the cluster is running, the output from minikube status should be similar to:

$ minikube status
host: Running
kubelet: Running
apiserver: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.107
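For scripting, the status check above can be turned into a pass/fail test. The following is a minimal sketch, assuming the `key: value` output format that minikube v1.0.1 prints above (newer minikube releases may print different fields). The function name `minikube_all_running` is made up for illustration:

```shell
# Reads `minikube status` output on stdin and succeeds only if
# host, kubelet and apiserver all report "Running".
# Assumes the "key: value" layout shown for minikube v1.0.1.
minikube_all_running() {
  awk -F': *' '
    $1 == "host" || $1 == "kubelet" || $1 == "apiserver" {
      sub(/[ \t\r]+$/, "", $2)      # tolerate trailing whitespace
      seen++
      if ($2 != "Running") bad++
    }
    END {
      if (seen == 3 && bad == 0) exit 0
      exit 1
    }
  '
}

# Usage (needs a local minikube):
#   minikube status | minikube_all_running && echo "cluster is up"
```

The function only parses text, so it can be tried on saved output without a running cluster.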

Another way to verify our single-node Kubernetes cluster is up and running:

$ kubectl cluster-info
Kubernetes master is running at https://192.168.99.107:8443
KubeDNS is running at https://192.168.99.107:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Dashboard is a web-based Kubernetes user interface. We can use Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot our containerized applications, and manage the cluster resources. To access the Kubernetes Dashboard, run this command:

$ minikube dashboard

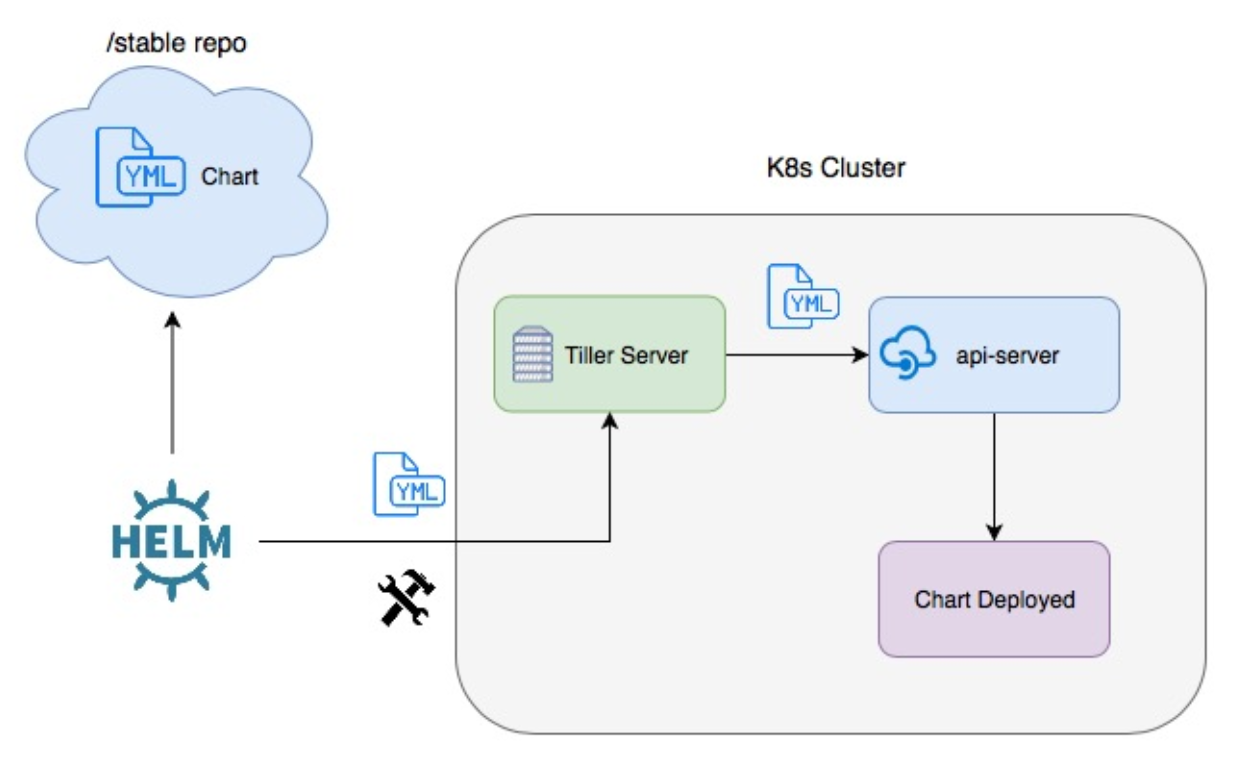

Check What is Helm?

source: Packaging Applications for Kubernetes

Let's install Helm:

$ curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get > get_Helm.sh
$ chmod 700 get_Helm.sh
$ ./get_Helm.sh
Downloading https://get.helm.sh/helm-v2.16.6-darwin-amd64.tar.gz
Preparing to install helm and tiller into /usr/local/bin
Password:
helm installed into /usr/local/bin/helm
tiller installed into /usr/local/bin/tiller
Run 'helm init' to configure helm.

To start Helm:

$ helm init
Creating /Users/ki.hong/.helm
Creating /Users/ki.hong/.helm/repository
Creating /Users/ki.hong/.helm/repository/cache
Creating /Users/ki.hong/.helm/repository/local
Creating /Users/ki.hong/.helm/plugins
Creating /Users/ki.hong/.helm/starters
Creating /Users/ki.hong/.helm/cache/archive
Creating /Users/ki.hong/.helm/repository/repositories.yaml
Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com
Adding local repo with URL: http://127.0.0.1:8879/charts
$HELM_HOME has been configured at /Users/ki.hong/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
To prevent this, run `helm init` with the --tiller-tls-verify flag.
For more information on securing your installation see: https://v2.helm.sh/docs/securing_installation/

$ helm repo list
NAME    URL
stable  https://kubernetes-charts.storage.googleapis.com
local   http://127.0.0.1:8879/charts

To check if the Tiller server is running:

$ kubectl get pods -n kube-system
coredns-fb8b8dccf-54gfs                 1/1   Running   1   4m23s
coredns-fb8b8dccf-zt92w                 1/1   Running   1   4m23s
etcd-minikube                           1/1   Running   0   3m31s
kube-addon-manager-minikube             1/1   Running   0   3m8s
kube-apiserver-minikube                 1/1   Running   0   3m31s
kube-controller-manager-minikube        1/1   Running   0   3m8s
kube-proxy-h4hpb                        1/1   Running   0   4m22s
kube-scheduler-minikube                 1/1   Running   0   3m25s
kubernetes-dashboard-79dd6bfc48-rl8js   1/1   Running   4   4m20s
storage-provisioner                     1/1   Running   0   4m19s
tiller-deploy-6864dbcb4f-9jdmn          1/1   Running   0   28s
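Rather than scanning the pod list by eye, the Tiller check can be scripted. This sketch assumes the column layout shown above (name, ready count, status) and the `tiller-deploy-` pod name prefix that appears in that output. The function name `tiller_ready` is hypothetical:

```shell
# Reads `kubectl get pods -n kube-system` output on stdin and succeeds
# if a tiller-deploy pod is fully ready (e.g. 1/1) and in Running status.
tiller_ready() {
  awk '
    $1 ~ /^tiller-deploy-/ {
      split($2, r, "/")             # READY column, e.g. "1/1"
      if (r[1] == r[2] && $3 == "Running") ok = 1
    }
    END {
      if (ok) exit 0
      exit 1
    }
  '
}

# Usage (needs cluster access):
#   kubectl get pods -n kube-system | tiller_ready && echo "Tiller is up"
```

With cluster access, `kubectl rollout status deployment/tiller-deploy -n kube-system` should give a similar answer without any text parsing.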

Note that Tiller is gone in Helm v3. One of the reasons is the security issue with Tiller: it was too powerful, with lots of permissions. We're using v2 in this post; with v3, release history and features such as rollback, which Tiller handled via its records of deploy history, have to work without Tiller.
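One visible difference between the two versions is the install syntax: Helm v2 takes the release name through the `--name` flag, while Helm v3 takes it as the first positional argument. The helper below is only an illustration of that difference; `helm_install_cmd` is a made-up name, and it prints the command instead of running it:

```shell
# Prints the `helm install` command line for a given Helm major version.
# $1 = Helm major version (2 or 3), $2 = release name, $3 = chart
helm_install_cmd() {
  major=$1
  release=$2
  chart=$3
  case "$major" in
    2) echo "helm install --name $release $chart" ;;
    3) echo "helm install $release $chart" ;;
    *) echo "unsupported Helm major version: $major" >&2; return 1 ;;
  esac
}

# Usage:
#   helm_install_cmd 2 kibana elastic/kibana
#   helm_install_cmd 3 kibana elastic/kibana
```

Both versions also provide `helm history RELEASE` and `helm rollback RELEASE REVISION`, so rollback itself is still available without Tiller.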

Let's start deploying Elasticsearch. We'll be using Elastic's Helm repository, so we need to add it:

$ helm repo add elastic https://helm.elastic.co
"elastic" has been added to your repositories

$ helm repo list
NAME     URL
stable   https://kubernetes-charts.storage.googleapis.com
local    http://127.0.0.1:8879/charts
elastic  https://helm.elastic.co

Then, we need to download the Helm configuration for installing a multi-node Elasticsearch cluster on Minikube (github.com/elastic/helm-charts/tree/master/elasticsearch/examples/minikube):

$ curl -O https://raw.githubusercontent.com/elastic/helm-charts/master/elasticsearch/examples/minikube/values.yaml
$ ls
get_Helm.sh values.yaml

The values.yaml looks like this:

---
# Permit co-located instances for solitary minikube virtual machines.
antiAffinity: "soft"

# Shrink default JVM heap.
esJavaOpts: "-Xmx128m -Xms128m"

# Allocate smaller chunks of memory per pod.
resources:
  requests:
    cpu: "100m"
    memory: "512M"
  limits:
    cpu: "1000m"
    memory: "512M"

# Request smaller persistent volumes.
volumeClaimTemplate:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "standard"
  resources:
    requests:
      storage: 100M
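A quick back-of-the-envelope check shows why these reduced values matter on a 6144MB VM. The sketch below assumes three Elasticsearch pods (the number the chart deploys in this post) and treats Kubernetes' `512M` and minikube's `--memory` value as the same unit, which is only approximately true. The function names are hypothetical:

```shell
# Total memory requested by all Elasticsearch pods, in megabytes.
# $1 = number of pods, $2 = memory request per pod in MB
es_total_request_mb() {
  replicas=$1
  per_pod_mb=$2
  echo $(( replicas * per_pod_mb ))
}

# Succeeds if the total request fits into the VM memory.
# $1 = number of pods, $2 = request per pod in MB, $3 = VM memory in MB
fits_in_vm() {
  [ "$(es_total_request_mb "$1" "$2")" -le "$3" ]
}

# Usage:
#   es_total_request_mb 3 512      # total requested by three pods
#   fits_in_vm 3 512 6144 && echo "fits"
```

Three pods at 512M each request 1536M in total, which leaves room on the VM for Kibana, Metricbeat, and the Kubernetes system pods.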

Install the Elasticsearch Helm chart using the configuration from values.yaml:

$ helm install --name elasticsearch elastic/elasticsearch -f ./values.yaml
NAME: elasticsearch
LAST DEPLOYED: Thu Apr 16 20:06:43 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/Pod(related)
NAME                    READY  STATUS   RESTARTS  AGE
elasticsearch-master-0  0/1    Pending  0         0s
elasticsearch-master-1  0/1    Pending  0         0s
elasticsearch-master-2  0/1    Pending  0         0s

==> v1/Service
NAME                           TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
elasticsearch-master           ClusterIP  10.101.22.140  <none>       9200/TCP,9300/TCP  0s
elasticsearch-master-headless  ClusterIP  None           <none>       9200/TCP,9300/TCP  0s

==> v1/StatefulSet
NAME                  READY  AGE
elasticsearch-master  0/3    0s

==> v1beta1/PodDisruptionBudget
NAME                      MIN AVAILABLE  MAX UNAVAILABLE  ALLOWED DISRUPTIONS  AGE
elasticsearch-master-pdb  N/A            1                0                    0s

NOTES:
1. Watch all cluster members come up.
  $ kubectl get pods --namespace=default -l app=elasticsearch-master -w
2. Test cluster health using Helm test.
  $ helm test elasticsearch --namespace=default

$ kubectl get pods --namespace=default -l app=elasticsearch-master
NAME                    READY  STATUS   RESTARTS  AGE
elasticsearch-master-0  1/1    Running  0         5m36s
elasticsearch-master-1  1/1    Running  0         5m36s
elasticsearch-master-2  1/1    Running  0         5m36s

$ kubectl get svc
NAME                           TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)            AGE
elasticsearch-master           ClusterIP  10.101.22.140  <none>       9200/TCP,9300/TCP  13m
elasticsearch-master-headless  ClusterIP  None           <none>       9200/TCP,9300/TCP  13m
kubernetes                     ClusterIP  10.96.0.1      <none>       443/TCP            3h20m

The last step in deploying Elasticsearch is to set up port forwarding. From our local workstation, use the following command in a separate terminal:

$ kubectl port-forward svc/elasticsearch-master 9200
Forwarding from 127.0.0.1:9200 -> 9200
Forwarding from [::1]:9200 -> 9200

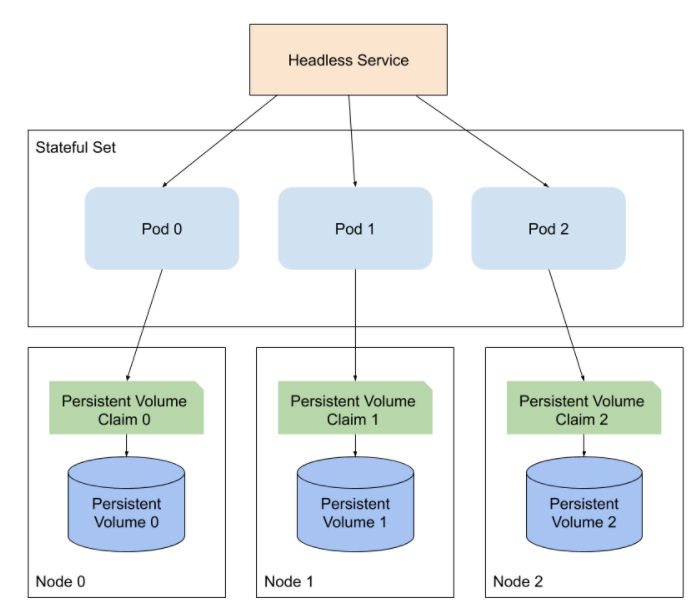
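With the port forward in place, the cluster health can also be checked from the command line through Elasticsearch's `_cluster/health` endpoint. The helper below is a rough sketch that pulls the `status` field out of the JSON response with `sed`; it assumes no `jq` is installed, and `es_health_status` is a made-up name:

```shell
# Reads a _cluster/health JSON response on stdin and prints the value of
# its "status" field (green, yellow or red). Works on both compact and
# ?pretty output; this is a text match, not a real JSON parser.
es_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage (needs the port forward from the previous step):
#   curl -s localhost:9200/_cluster/health | es_health_status
```

A `green` status means all primary and replica shards are allocated, which is what we would hope to see once all three master pods are running.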

Note that our Elasticsearch deployment uses a StatefulSet to maintain state.

When using StatefulSets we also need to use PersistentVolumes and PersistentVolumeClaims. A StatefulSet ensures that the same PersistentVolumeClaim stays bound to the same Pod throughout its lifetime. In a Deployment, by contrast, the group of Pods as a whole stays bound to a PersistentVolumeClaim.

Pic. source https://sematext.com/blog/kubernetes-elasticsearch/

Also note that alongside StatefulSets we have Headless Services, which are used for discovery of StatefulSet Pods. Instead of load-balancing, a Headless Service returns the IPs of the associated Pods. Headless Services do not have a Cluster IP allocated and are not proxied by kube-proxy; instead, Elasticsearch handles the service discovery.
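Through the headless Service, each StatefulSet Pod gets a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The sketch below prints those names for this deployment. It assumes the default cluster domain `cluster.local`, and `statefulset_pod_dns` is a hypothetical helper name:

```shell
# Prints the stable DNS name of every pod in a StatefulSet.
# $1 = StatefulSet name, $2 = headless Service name, $3 = namespace,
# $4 = replica count, $5 = cluster domain (assumed default: cluster.local)
statefulset_pod_dns() {
  sts=$1
  svc=$2
  ns=$3
  replicas=$4
  domain=${5:-cluster.local}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${sts}-${i}.${svc}.${ns}.svc.${domain}"
    i=$(( i + 1 ))
  done
}

# Usage:
#   statefulset_pod_dns elasticsearch-master elasticsearch-master-headless default 3
```

These are the names the Elasticsearch nodes can use to find each other, independent of the Pod IPs, which may change when a Pod is rescheduled.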

Install Kibana Helm Chart:

$ helm install --name kibana elastic/kibana
NAME: kibana
LAST DEPLOYED: Thu Apr 16 19:33:37 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/Deployment
NAME           READY  UP-TO-DATE  AVAILABLE  AGE
kibana-kibana  0/1    1           0          1s

==> v1/Pod(related)
NAME                            READY  STATUS             RESTARTS  AGE
kibana-kibana-74bc9874c5-7sk4l  0/1    ContainerCreating  0         1s

==> v1/Service
NAME           TYPE       CLUSTER-IP   EXTERNAL-IP  PORT(S)   AGE
kibana-kibana  ClusterIP  10.97.22.14  <none>       5601/TCP  1s

Check if our Kibana pod is running:

$ kubectl get pods
NAME                            READY  STATUS   RESTARTS  AGE
elasticsearch-master-0          1/1    Running  0         15m
elasticsearch-master-1          1/1    Running  0         15m
elasticsearch-master-2          1/1    Running  0         15m
kibana-kibana-74bc9874c5-7sk4l  1/1    Running  0         5m29s

Set up port forwarding for Kibana:

$ kubectl port-forward deployment/kibana-kibana 5601
Forwarding from 127.0.0.1:5601 -> 5601
Forwarding from [::1]:5601 -> 5601

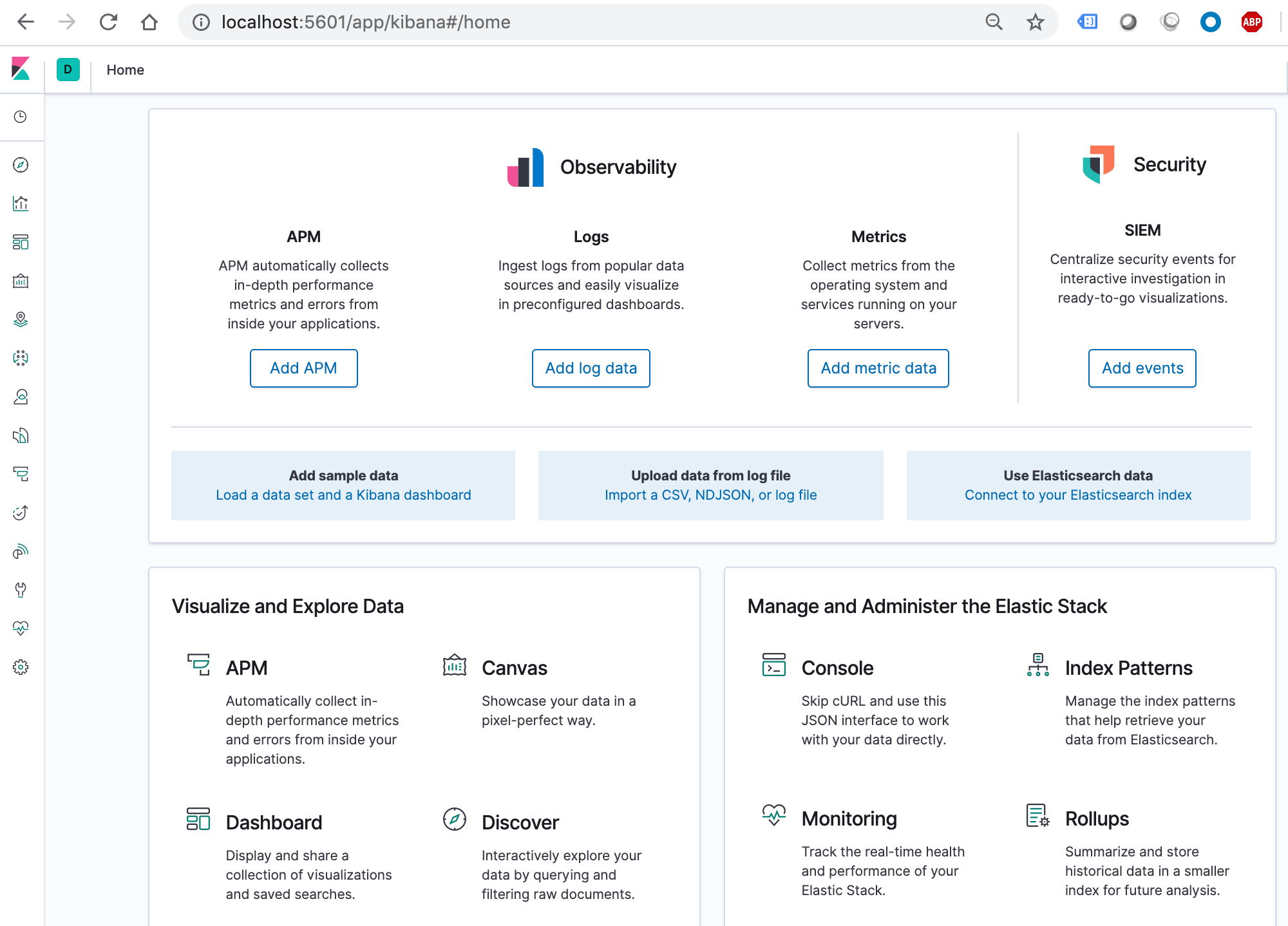

We can now access Kibana:

Let's deploy Metricbeat:

$ helm install --name metricbeat elastic/metricbeat
NAME: metricbeat
LAST DEPLOYED: Thu Apr 16 20:23:54 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/ConfigMap
NAME                          DATA  AGE
metricbeat-metricbeat-config  2     1s

==> v1/DaemonSet
NAME                   DESIRED  CURRENT  READY  UP-TO-DATE  AVAILABLE  NODE SELECTOR  AGE
metricbeat-metricbeat  1        1        0      1           0          <none>         1s

==> v1/Deployment
NAME                           READY  UP-TO-DATE  AVAILABLE  AGE
metricbeat-kube-state-metrics  0/1    1           0          0s
metricbeat-metricbeat-metrics  0/1    1           0          0s

==> v1/Pod(related)
NAME                                            READY  STATUS             RESTARTS  AGE
metricbeat-kube-state-metrics-69487cddcf-r2rfp  0/1    ContainerCreating  0         1s
metricbeat-kube-state-metrics-69487cddcf-r2rfp  0/1    ContainerCreating  0         1s
metricbeat-kube-state-metrics-69487cddcf-r2rfp  0/1    ContainerCreating  0         1s

==> v1/Service
NAME                           TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)   AGE
metricbeat-kube-state-metrics  ClusterIP  10.105.153.210  <none>       8080/TCP  1s

==> v1/ServiceAccount
NAME                           SECRETS  AGE
metricbeat-kube-state-metrics  1        1s
metricbeat-metricbeat          1        1s

==> v1beta1/ClusterRole
NAME                                AGE
metricbeat-kube-state-metrics       2s
metricbeat-metricbeat-cluster-role  2s

==> v1beta1/ClusterRoleBinding
NAME                                        AGE
metricbeat-kube-state-metrics               2s
metricbeat-metricbeat-cluster-role-binding  2s

NOTES:
1. Watch all containers come up.
  $ kubectl get pods --namespace=default -l app=metricbeat-metricbeat -w

Check if our Metricbeat pods are running:

$ kubectl get pods
NAME                                            READY  STATUS   RESTARTS  AGE
elasticsearch-master-0                          1/1    Running  0         20m
elasticsearch-master-1                          1/1    Running  0         20m
elasticsearch-master-2                          1/1    Running  0         20m
kibana-kibana-74bc9874c5-7sk4l                  1/1    Running  0         10m
metricbeat-kube-state-metrics-69487cddcf-r2rfp  1/1    Running  0         2m52s
metricbeat-metricbeat-9jk6p                     1/1    Running  0         2m52s
metricbeat-metricbeat-metrics-d785d8658-bnmk2   1/1    Running  0         2m52s
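As the number of pods grows, it can be easier to list only the ones that are not ready yet. The following sketch filters `kubectl get pods` output on the READY and STATUS columns. The name `not_ready_pods` is made up, and pods that finish normally (for example Job pods in `Completed` status) would be reported too:

```shell
# Reads `kubectl get pods` output on stdin and prints the names of pods
# that are not fully ready or not in Running status.
not_ready_pods() {
  awk '
    NR == 1 && $1 == "NAME" { next }   # skip the header row
    {
      split($2, r, "/")                # READY column, e.g. "0/1"
      if (r[1] != r[2] || $3 != "Running") print $1
    }
  '
}

# Usage (needs cluster access):
#   kubectl get pods | not_ready_pods
```

An empty result means every listed pod is up. With cluster access, `kubectl wait --for=condition=Ready pod --all --timeout=300s` may be a simpler way to block until that is the case.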

We should be able to see that metrics have already begun to be indexed in Elasticsearch:

$ curl localhost:9200/_cat/indices
green open .kibana_task_manager_1             4mHQWAfdTwSwn43HiBwkvQ 1 1    2 0 56.3kb 39.8kb
green open ilm-history-1-000001               -LhFfS93RoyfdkcR66Zlcw 1 1    6 0 52.3kb 29.6kb
green open .apm-agent-configuration           3jccs1yuSv6_d6fQkkFSyQ 1 1    0 0   566b   283b
green open metricbeat-7.6.2-2020.04.17-000001 dftr6FE-QCKYvvrX5xSGbQ 1 1 2239 0  4.9mb  2.8mb
green open .kibana_1                          cjeyD4HgSkCEVcKyffpt2Q 1 1    4 0 38.4kb 19.2kb

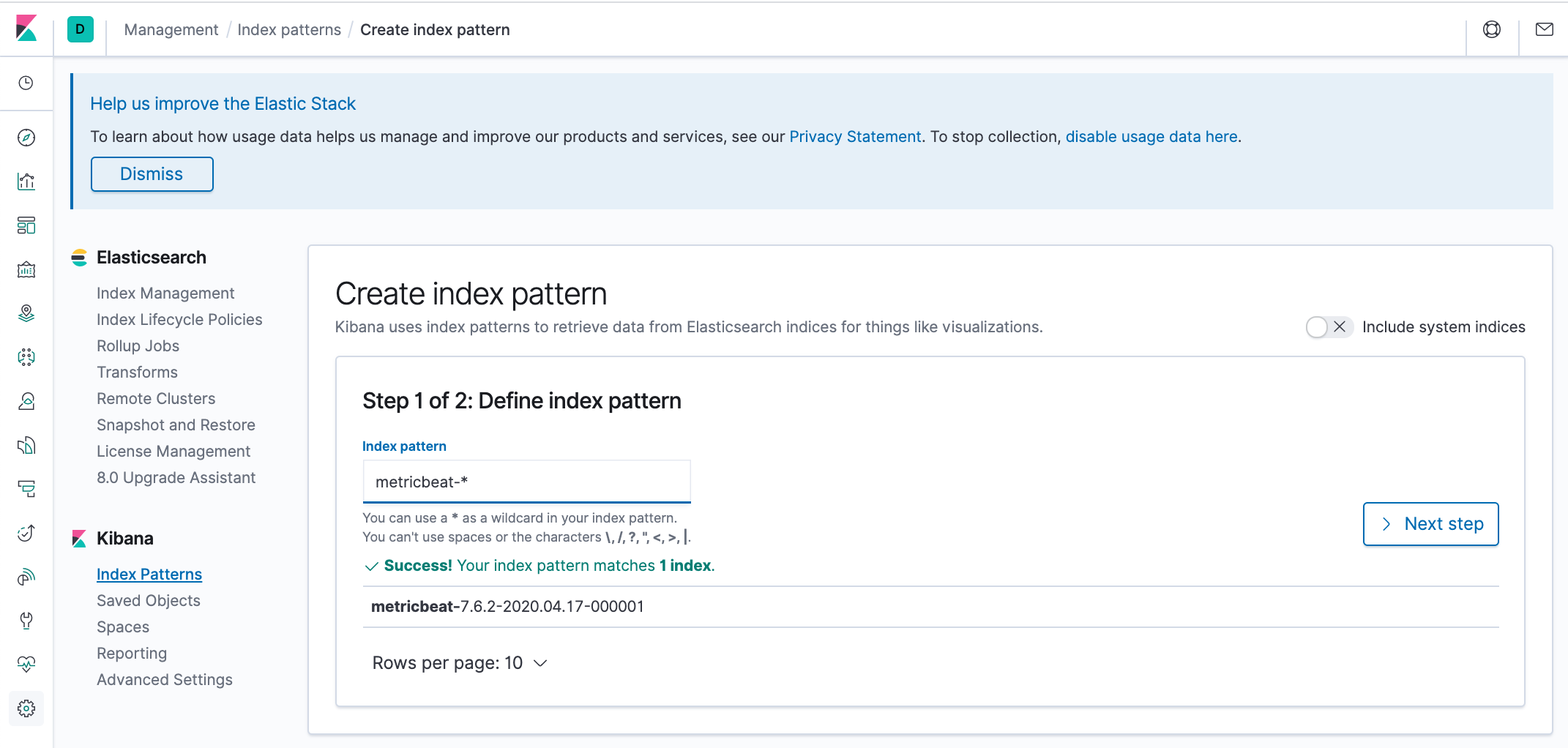

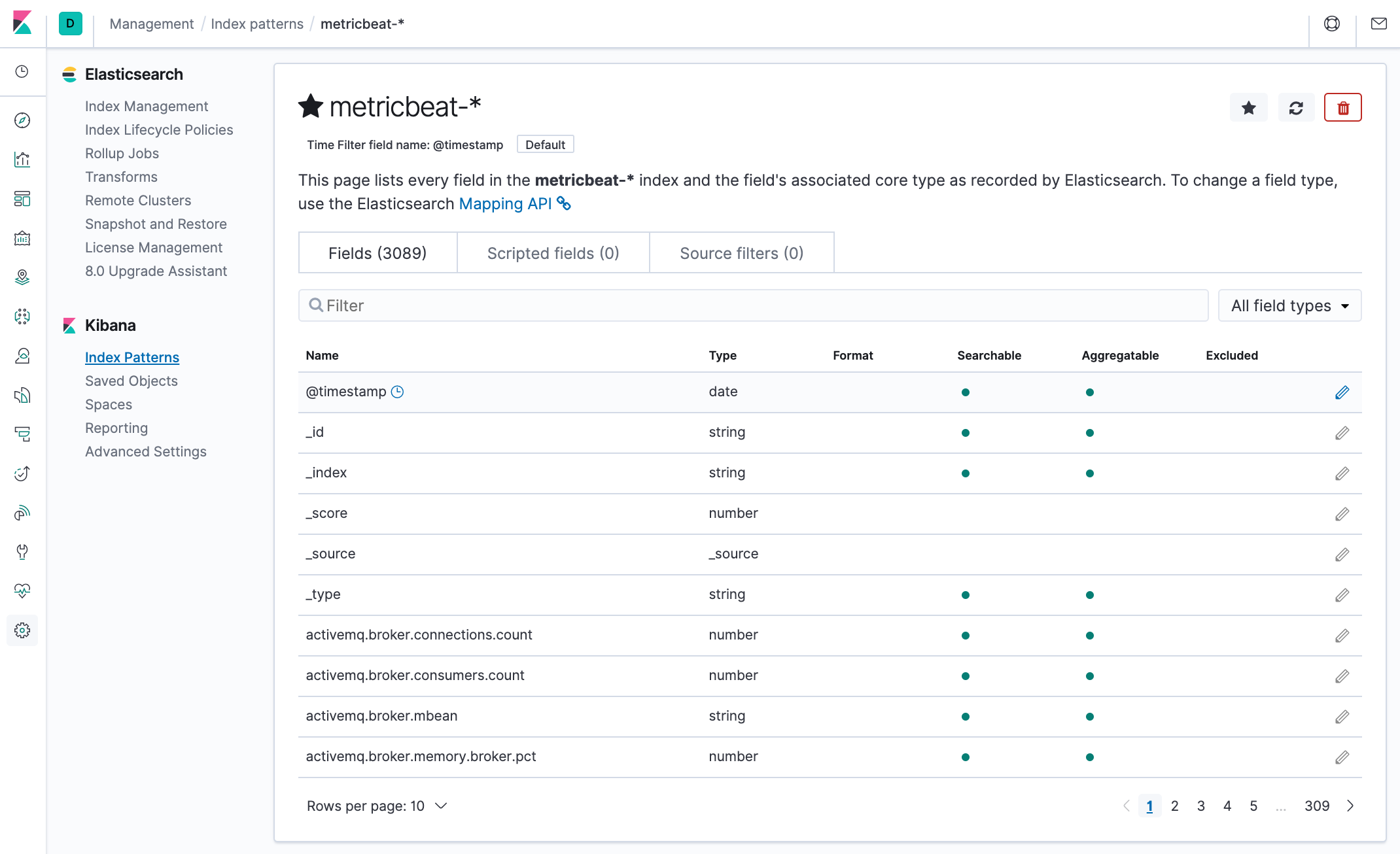
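To confirm from the command line that Metricbeat data keeps arriving, the `_cat/indices` listing can be narrowed to the Metricbeat indices and their document counts. This sketch assumes the default column order shown in the output above, where the index name is the third column and the document count is the seventh. `metricbeat_doc_counts` is a hypothetical name:

```shell
# Reads `_cat/indices` output on stdin and prints "<index> <docs.count>"
# for every index whose name starts with "metricbeat-".
metricbeat_doc_counts() {
  awk '$3 ~ /^metricbeat-/ { print $3, $7 }'
}

# Usage (needs the Elasticsearch port forward):
#   curl -s localhost:9200/_cat/indices | metricbeat_doc_counts
```

Running it twice a few seconds apart should show the count growing if Metricbeat is shipping metrics.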

Let's go back to Kibana and define an index pattern. Go to the Management => Kibana => Index Patterns page, and click Create index pattern. Kibana will automatically identify and display the Metricbeat index:

Enter metricbeat-* and on the next step select the @timestamp field:

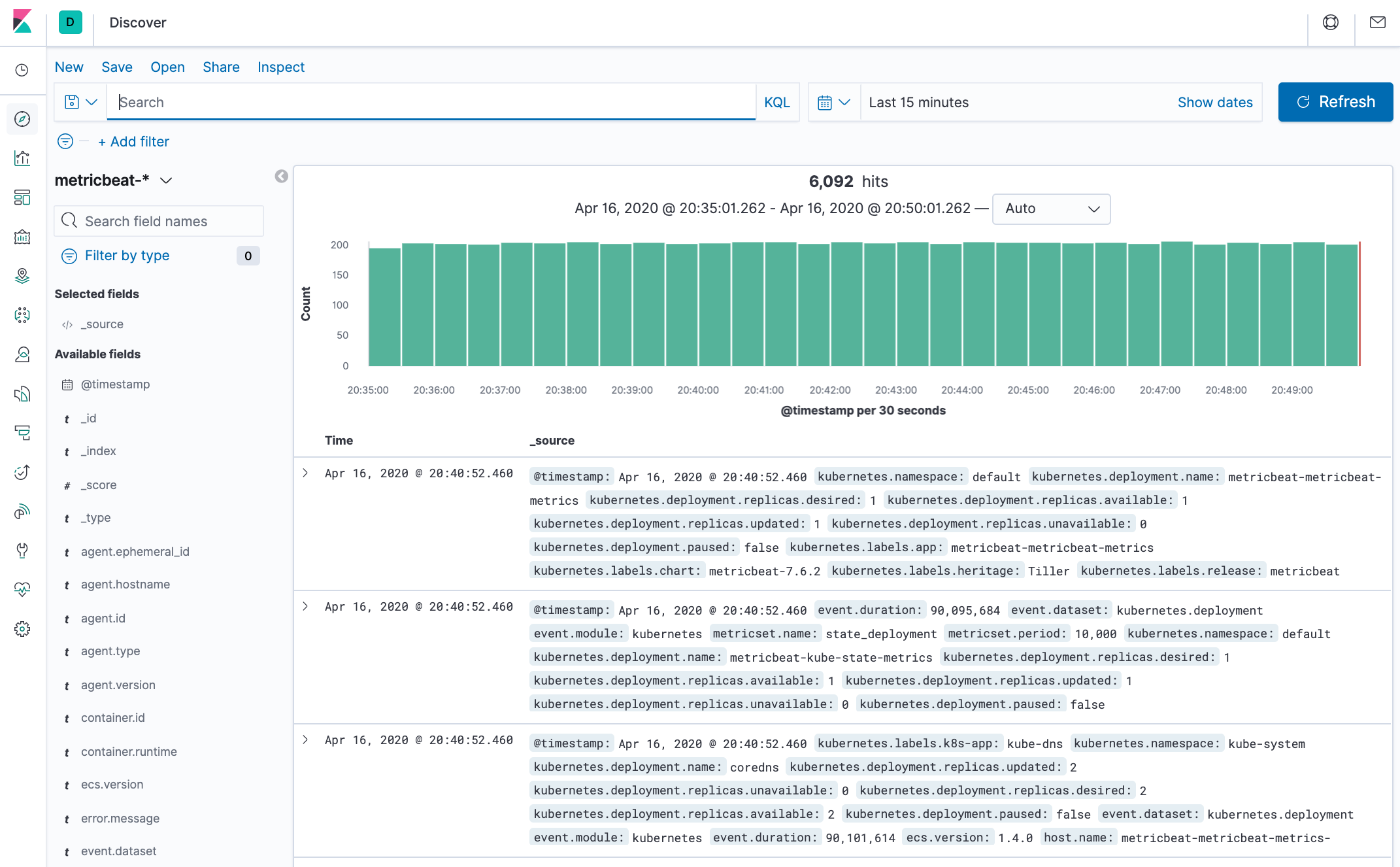
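The same index pattern can likely be created without the UI through Kibana's saved objects API. The sketch below only builds the JSON payload; the endpoint and headers in the usage comment are what Kibana 7.x expects as far as I know, so treat them as assumptions to verify against your Kibana version. `index_pattern_payload` is a made-up helper name:

```shell
# Prints the JSON body for creating a Kibana index pattern.
# $1 = index pattern title, $2 = time field name
# Note: the arguments are inserted as-is, with no JSON escaping.
index_pattern_payload() {
  printf '{"attributes":{"title":"%s","timeFieldName":"%s"}}\n' "$1" "$2"
}

# Usage (needs the Kibana port forward on 5601):
#   curl -s -X POST "localhost:5601/api/saved_objects/index-pattern" \
#     -H "kbn-xsrf: true" -H "Content-Type: application/json" \
#     -d "$(index_pattern_payload 'metricbeat-*' '@timestamp')"
```

Scripting this step is mostly useful when the cluster is recreated often, as it is with `minikube delete` in this post.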

Discover page:

