Docker & Kubernetes - Kubernetes Ingress with AWS ALB Ingress Controller in EKS

This post explains how to set up ALB Ingress for Kubernetes on Amazon EKS.

The ALB Ingress controller programs an AWS Application Load Balancer (ALB) with the traffic forwarding rules defined by Ingress resources in an EKS cluster. The controller itself runs as a native Kubernetes application that listens for Ingress resource events and configures the ALB accordingly.

Note that we'll deploy the ALB-related pods into kube-system and then deploy our game into its own namespace.

A Kubernetes Ingress is not a Service. It is a collection of routing rules for inbound traffic to reach the Services in a Kubernetes cluster. Those rules have to be configured on an actual load balancer, so an Ingress is effectively just a load balancer spec.

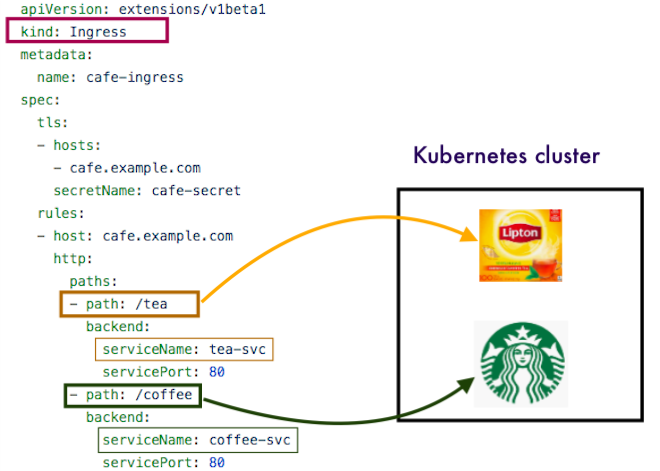

The following are sample definitions of "Ingress rules" and "Ingress services".

Ingress rules

Let's assume that we want to reach the "coffee" and "tea" services from the internet under the base URL https://cafe.example.com. Requests to the /coffee path should go to "coffee", and requests to /tea should go to "tea". We can describe this with an Ingress entry saved in the apply directory as ingress.yaml.
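Here is a minimal sketch of such an Ingress entry. It assumes the single cafe-app-service Service defined below (coffee on port 8080, tea on port 8081). It also assumes the extensions/v1beta1 Ingress API of the Kubernetes 1.10 cluster used later in this post. The Ingress name cafe-ingress and the cafe_ingress shell function are hypothetical. TLS is left out because how a certificate is attached depends on the controller.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an Ingress that sends cafe.example.com/coffee
# to port 8080 and cafe.example.com/tea to port 8081 of cafe-app-service.
# extensions/v1beta1 matches the Kubernetes 1.10 era of this post; on
# Kubernetes 1.19+ the networking.k8s.io/v1 schema would be used instead.
cafe_ingress() {
  cat <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: cafe-ingress
spec:
  rules:
  - host: cafe.example.com
    http:
      paths:
      - path: /coffee
        backend:
          serviceName: cafe-app-service
          servicePort: 8080
      - path: /tea
        backend:
          serviceName: cafe-app-service
          servicePort: 8081
EOF
}

# Usage:
#   mkdir -p apply && cafe_ingress > apply/ingress.yaml
```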

Ingress service

When the Ingress services detect a new or modified Ingress entry, they:

- create or update the DNS record for the defined hostname,
- update the load balancer to use a TLS certificate and route requests to the cluster nodes, and
- define the routes that select the right service based on the hostname and the path.
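To make host-and-path routing concrete, here is a toy model in shell. It is not how any real controller is implemented. It maps a request's host and path to a backend the way the coffee/tea rules described above would. It assumes paths match on whole path segments, which is the behavior of pathType: Prefix in the newer networking.k8s.io/v1 Ingress API. The route function is hypothetical.

```shell
#!/usr/bin/env bash
# Toy model of Ingress routing for the hypothetical cafe rules:
# host must be cafe.example.com; /coffee -> port 8080, /tea -> port 8081.
# Paths match on whole segments: /coffee/latte matches, /coffeehouse does not.
route() {
  local host="$1" path="$2"
  if [ "$host" != "cafe.example.com" ]; then
    echo "404: no rule for host $host"
    return 1
  fi
  case "$path" in
    /coffee | /coffee/*) echo "cafe-app-service:8080" ;;
    /tea | /tea/*)       echo "cafe-app-service:8081" ;;
    *) echo "404: no rule for path $path"; return 1 ;;
  esac
}

# Usage:
#   route cafe.example.com /tea/green    # prints cafe-app-service:8081
```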

Let's assume that we have a deployment with the label "application=cafe-app" that serves "coffee" on port 8080 and "tea" on port 8081. To make them reachable from the internet, we first need to create a Service for them.

The Service definition looks like this; we can save it in the apply directory as service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: cafe-app-service
  labels:
    application: cafe-app-service
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
    name: coffee-port
  - port: 8081
    protocol: TCP
    targetPort: 8081
    name: tea-port
  selector:
    application: cafe-app

Note that we didn't set spec.type. This means the Service gets the default type, "ClusterIP", and is reachable only from inside the cluster.
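Suppose service.yaml (and ingress.yaml) sit in a local apply/ directory as described. Applying the directory and reading back spec.type should then confirm the ClusterIP default. This is a small sketch. The apply_cafe helper and its DRY_RUN switch, which prints the kubectl commands instead of running them, are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply every manifest in a directory (default: apply/)
# and print the resulting type of cafe-app-service.
# With DRY_RUN=1 the kubectl commands are echoed instead of executed.
apply_cafe() {
  local dir="${1:-apply}"
  ${DRY_RUN:+echo} kubectl apply -f "$dir"
  # service.yaml sets no spec.type, so this is expected to print ClusterIP.
  ${DRY_RUN:+echo} kubectl get service cafe-app-service -o 'jsonpath={.spec.type}'
}

# Usage:
#   apply_cafe apply
```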

According to https://kubernetes.io/docs/concepts/services-networking/ingress/, Ingress is defined as "An API object that manages external access to the services in a cluster, typically HTTP".

Ingress can provide load balancing, SSL termination and name-based virtual hosting. However, in the real sense, Ingress is not a service but a construct that sits on top of our services as an entry point to the cluster, providing simple host and URL based HTTP routing capabilities.

Therefore, real-world Kubernetes deployments need additional configuration (an implementation) to expose services through external URLs, SSL-terminated endpoints, load balancers, and so on.

These implementations are known as Ingress controllers. An Ingress controller does the actual traffic routing: it reads the Ingress resource information and processes it accordingly. For Ingress resources to work, an Ingress controller must be running in the cluster.

Different ingress controllers have extended the specification in different ways to support additional use cases.

Note that an Ingress controller typically doesn't rule out the need for an external load balancer. The controller simply adds another layer of routing and control behind the load balancer.

Ingress Load Balancer - outside of Kubernetes cluster or inside?

- Outside:

For EKS, the ALB Ingress controller programs an ALB with Ingress traffic routing rules. The controller runs as a native Kubernetes application that listens for Ingress resource events and configures the ALB accordingly.

When users hit the URL "mysite.com/main", the ALB forwards the traffic to the corresponding Kubernetes NodePort service. Because the load balancer is external to the cluster, the service has to be of type NodePort, at least in the controller's default instance mode described below.

This ALB ingress controller is the primary focus of this post!
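In instance mode, a ClusterIP Service such as the cafe-app-service defined earlier gives the ALB no node port to send traffic to. One option is to patch the Service type; ip mode, described below, is the other. The sketch uses a hypothetical make_nodeport helper, where DRY_RUN=1 prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch a Service to NodePort so an ALB in instance
# mode can reach it through the port opened on every worker node.
# With DRY_RUN=1 the kubectl command is echoed instead of executed.
make_nodeport() {
  local svc="$1" ns="${2:-default}"
  ${DRY_RUN:+echo} kubectl patch service "$svc" -n "$ns" \
    -p '{"spec":{"type":"NodePort"}}'
}

# Usage:
#   make_nodeport cafe-app-service
#   kubectl get service cafe-app-service   # PORT(S) should now show node ports
```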

Other Ingress controllers for load balancers deployed outside the Kubernetes cluster include the Ingress controller for Google Cloud, the F5 BIG-IP Ingress controller, NetScaler, and OpenStack Octavia.

- Inside:

The Nginx Ingress controller is of this type. It both programs the load balancer configuration and acts as the load balancer itself. It is often deployed as a DaemonSet, in which case every node in the cluster runs one nginx instance as a Kubernetes pod.

Besides Nginx, other Ingress controllers for load balancers deployed inside the Kubernetes cluster include HAProxy, Traefik, and Contour.

The AWS ALB Ingress controller triggers the creation of an ALB and the necessary supporting AWS resources whenever a Kubernetes user declares an Ingress resource on the cluster. The Ingress resource uses the ALB to route HTTP(S) traffic to different endpoints within the cluster. The AWS ALB Ingress controller works on any Kubernetes cluster running on AWS, including Amazon Elastic Kubernetes Service (EKS).

The ALB Ingress controller supports two traffic modes: instance mode and ip mode.

Users can explicitly specify these traffic modes by declaring the alb.ingress.kubernetes.io/target-type annotation on the Ingress and the Service definitions.

- instance mode: Ingress traffic starts at the ALB and reaches the NodePort opened for your service. It is then routed to the Pods within the cluster. In this mode a packet always takes two hops to reach its destination.

- ip mode: Ingress traffic starts at the ALB and reaches the Pods within the cluster directly. This mode requires a networking plugin that uses secondary IP addresses on ENIs as pod IPs, such as the AWS VPC CNI plugin for Kubernetes. In this mode a packet always takes one hop to reach its destination.
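Because the mode is just an annotation, it can also be set on an existing Ingress with kubectl annotate. This minimal sketch assumes the 2048-ingress in the 2048-game namespace, deployed later in this post. The set_target_type helper and its DRY_RUN switch are illustrative. The same annotation could be applied to a Service with kubectl annotate service. How the controller reconciles a mode change is worth confirming in a test cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set alb.ingress.kubernetes.io/target-type on an
# existing Ingress. Only "instance" and "ip" are accepted.
# With DRY_RUN=1 the kubectl command is echoed instead of executed.
set_target_type() {
  local ingress="$1" ns="$2" mode="$3"
  case "$mode" in
    instance | ip) ;;
    *) echo "target-type must be instance or ip, got: $mode" >&2; return 1 ;;
  esac
  ${DRY_RUN:+echo} kubectl annotate ingress "$ingress" -n "$ns" \
    "alb.ingress.kubernetes.io/target-type=$mode" --overwrite
}

# Usage:
#   set_target_type 2048-ingress 2048-game ip
```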

First, let's deploy an EKS cluster with the eksctl CLI.

Install eksctl with Homebrew for macOS:

$ brew install weaveworks/tap/eksctl

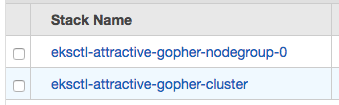

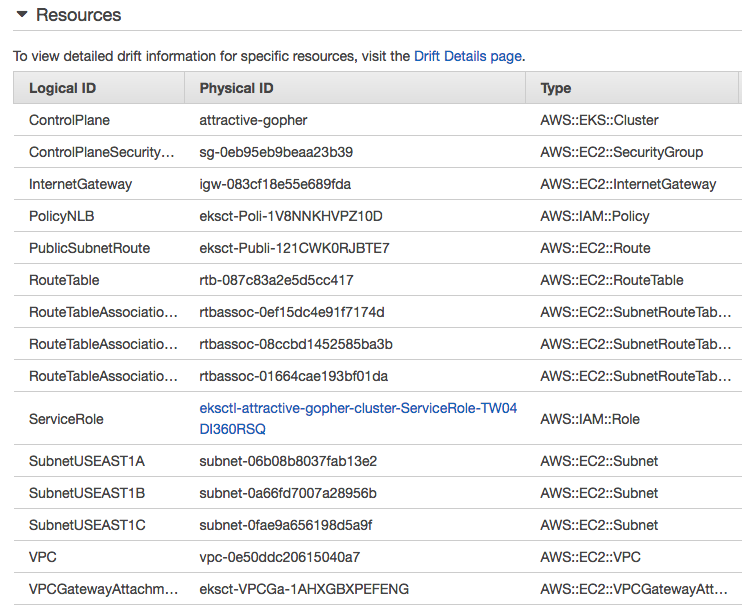

Create EKS cluster with cluster name "attractive-gopher":

$ eksctl create cluster --name=attractive-gopher \
    --node-type=t2.small --nodes-min=2 --nodes-max=2 \
    --region=us-east-1 --zones=us-east-1a,us-east-1b,us-east-1c,us-east-1d,us-east-1f

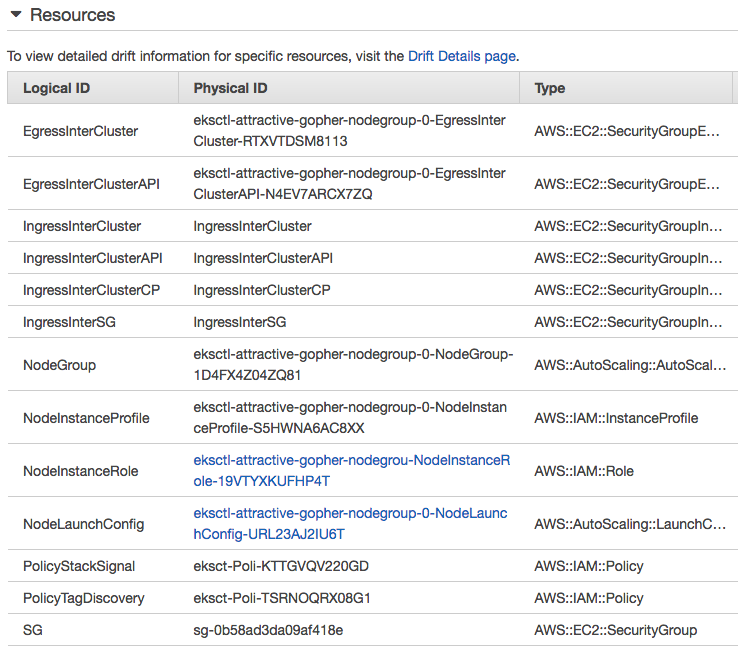

Note that this creates not only the control plane but also the data plane. We get two worker nodes of instance type "t2.small" in the "us-east-1" region, with the cluster spanning AZs a, b, c, d, and f.

Control Plane:

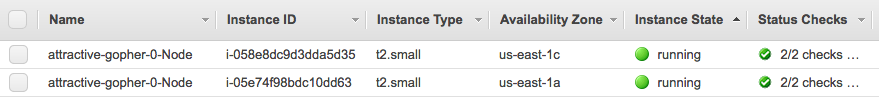

Data Plane worker nodes:

Worker nodes:

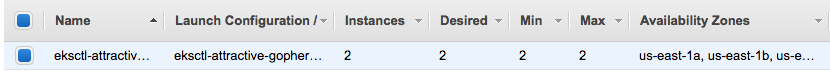

Autoscaling Group:

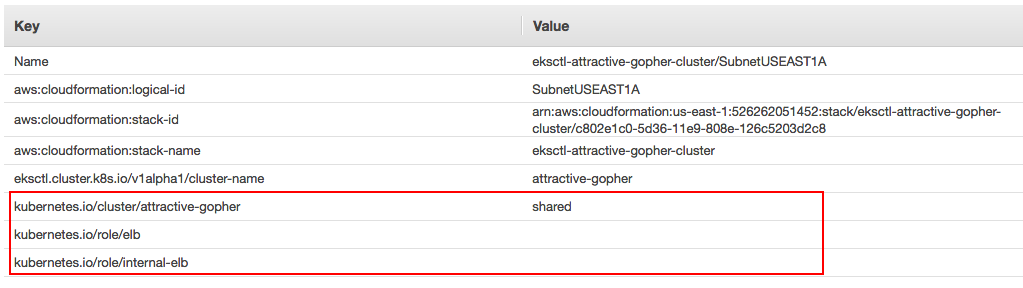
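Once eksctl finishes, a quick sanity check is that both worker nodes report Ready. This small sketch uses a hypothetical count_ready_nodes function that reads kubectl's plain-text node listing. A node reported as Ready,SchedulingDisabled is deliberately not counted.

```shell
#!/usr/bin/env bash
# Count worker nodes whose STATUS column is exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get nodes --no-headers | count_ready_nodes   # expect 2 here
```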

Go to the "Subnets" section in the VPC Console. Find all the Public subnets for the EKS cluster. For example:

eksctl-attractive-gopher-cluster/SubnetPublic<USEAST1a>
eksctl-attractive-gopher-cluster/SubnetPublic<USEAST1b>
eksctl-attractive-gopher-cluster/SubnetPublic<USEAST1c>
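The same subnets can be listed with the AWS CLI instead of the console. This minimal sketch assumes eksctl's Name-tag convention shown above; EC2 filter values accept * wildcards. The public_subnet_ids helper and its DRY_RUN switch, which prints the command instead of running it, are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IDs of the public subnets eksctl created,
# matching on the Name tag prefix eksctl-<cluster>-cluster/SubnetPublic.
# With DRY_RUN=1 the AWS CLI command is echoed instead of executed.
public_subnet_ids() {
  local cluster="$1" region="${2:-us-east-1}"
  ${DRY_RUN:+echo} aws ec2 describe-subnets --region "$region" \
    --filters "Name=tag:Name,Values=eksctl-${cluster}-cluster/SubnetPublic*" \
    --query 'Subnets[].SubnetId' --output text
}

# Usage:
#   public_subnet_ids attractive-gopher
```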

Configure the public subnets in the console as described in ALB Ingress Controller Configuration#subnet-auto-discovery, or follow the steps below. (Most Kubernetes distributions on AWS, e.g. kops, already do this for us.)

Subnet Auto Discovery

We tag the AWS subnets so that the Ingress controller can auto-discover the subnets to use for ALBs (see Cluster VPC Considerations):

- kubernetes.io/cluster/${cluster-name} must be set to owned or shared. Remember ${cluster-name} needs to be the same name we're passing to the controller in the --cluster-name option.

- kubernetes.io/role/internal-elb must be set to 1 or an empty value for internal load balancers.

- kubernetes.io/role/elb must be set to 1 or an empty value for internet-facing load balancers.

Here are the tags for the us-east-1a public subnet; we should do the same for every public subnet:

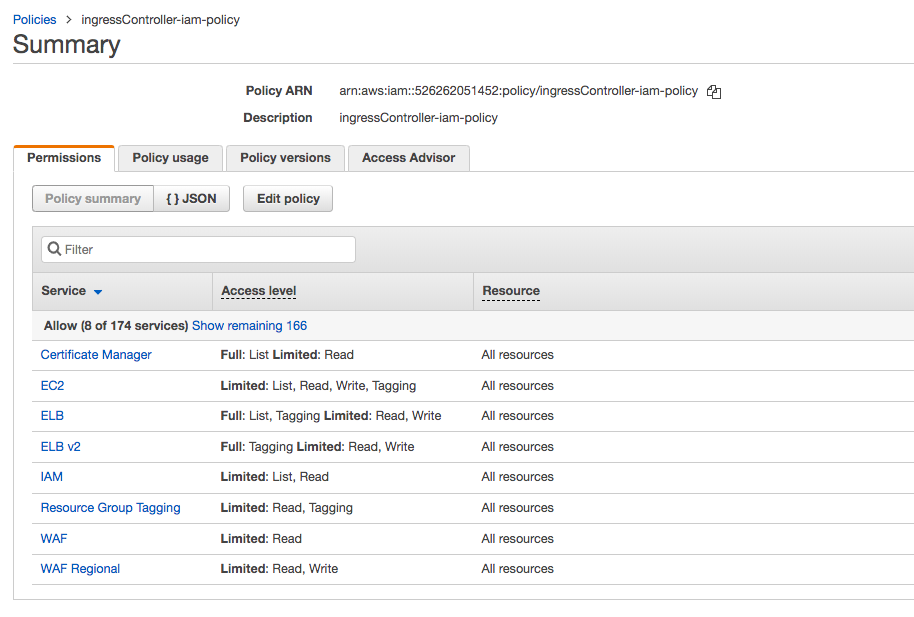

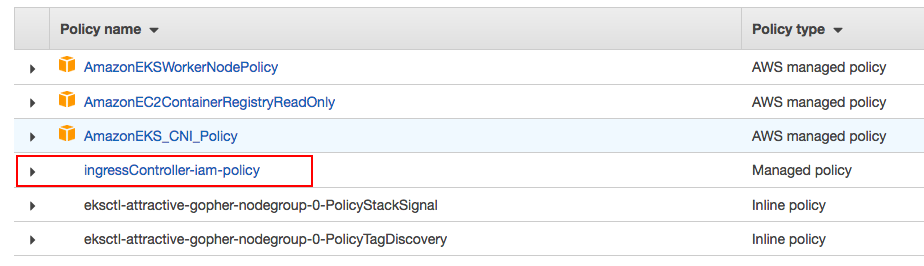
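Tagging every public subnet by hand gets tedious, so this sketch loops over subnet IDs with aws ec2 create-tags. The tag_public_subnets helper and its DRY_RUN switch are illustrative. It uses the value shared. Because create-tags overwrites an existing value, you may want to skip the cluster tag if eksctl already set it to owned.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the auto-discovery tags to each public subnet.
#   tag_public_subnets CLUSTER_NAME SUBNET_ID...
# With DRY_RUN=1 the AWS CLI commands are echoed instead of executed.
tag_public_subnets() {
  local cluster="$1" subnet
  shift
  for subnet in "$@"; do
    ${DRY_RUN:+echo} aws ec2 create-tags --resources "$subnet" \
      --tags "Key=kubernetes.io/cluster/${cluster},Value=shared" \
             "Key=kubernetes.io/role/elb,Value=1"
  done
}

# Usage (subnet IDs copied from the VPC console):
#   tag_public_subnets attractive-gopher <subnet-id-1a> <subnet-id-1b> ...
```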

To perform these operations, the controller needs IAM permissions for accessing and provisioning ALB resources. We create an IAM policy that gives the Ingress controller the right permissions:

- Go to the IAM Console and choose the section Policies.

- Select Create policy.

- Embed the contents of the template iam-policy.json in the JSON section.

- Review policy and save as ingressController-iam-policy
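The same policy can be created from the command line. This minimal sketch assumes the iam-policy.json template has been saved locally. The create_ingress_policy helper and its DRY_RUN switch are illustrative. The policy ARN printed by create-policy is what gets attached to the node role later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create ingressController-iam-policy from a local copy
# of the controller's iam-policy.json template (default: ./iam-policy.json).
# With DRY_RUN=1 the AWS CLI command is echoed instead of executed.
create_ingress_policy() {
  local doc="${1:-iam-policy.json}"
  [ -f "$doc" ] || { echo "policy document not found: $doc" >&2; return 1; }
  ${DRY_RUN:+echo} aws iam create-policy \
    --policy-name ingressController-iam-policy \
    --policy-document "file://$doc"
}

# Usage:
#   create_ingress_policy iam-policy.json
```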

The policy hints at what the EKS Ingress controller does. In outline:

- The controller watches for ingress events from the API server. When it finds ingress resources that satisfy its requirements, it begins creation of AWS resources.

- An ALB is created for the Ingress resource.

- TargetGroups are created for each backend specified in the Ingress resource.

- Listeners are created for every port specified as Ingress resource annotation. When no port is specified, sensible defaults (80 or 443) are used.

- Rules are created for each path specified in our Ingress resource. This ensures that traffic to a specific path is routed to the correct TargetGroup.
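Once the 2048 Ingress later in this post is up, those resources can be inspected with the AWS CLI's elbv2 commands. This sketch uses a hypothetical inspect_alb helper, where DRY_RUN=1 prints the commands instead of running them. The load balancer ARN is an input you look up yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the ALB, its target groups, and its listeners
# for one load balancer ARN.
# With DRY_RUN=1 the AWS CLI commands are echoed instead of executed.
inspect_alb() {
  local lb_arn="$1"
  ${DRY_RUN:+echo} aws elbv2 describe-load-balancers --load-balancer-arns "$lb_arn"
  ${DRY_RUN:+echo} aws elbv2 describe-target-groups --load-balancer-arn "$lb_arn"
  ${DRY_RUN:+echo} aws elbv2 describe-listeners --load-balancer-arn "$lb_arn"
}

# Usage:
#   arn=$(aws elbv2 describe-load-balancers \
#     --query "LoadBalancers[?DNSName=='<alb-dns-name>'].LoadBalancerArn" --output text)
#   inspect_alb "$arn"
# Rules hang off listeners: aws elbv2 describe-rules --listener-arn <listener-arn>
```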

Attach the IAM policy to the EKS worker nodes:

- Go back to the IAM Console.

- Choose the section Roles and search for the NodeInstanceRole of our EKS worker node. Example: eksctl-attractive-gopher-NodeInstanceRole-xxxxxx.

- Attach policy ingressController-iam-policy.
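The attachment can also be scripted. This sketch assumes the policy was created under the default IAM path with the name used above. The attach_ingress_policy helper and its DRY_RUN switch are illustrative. The role name and account ID are inputs you look up yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: attach ingressController-iam-policy to the worker
# node role. ACCOUNT is the 12-digit AWS account ID.
# With DRY_RUN=1 the AWS CLI command is echoed instead of executed.
attach_ingress_policy() {
  local role="$1" account="$2"
  ${DRY_RUN:+echo} aws iam attach-role-policy --role-name "$role" \
    --policy-arn "arn:aws:iam::${account}:policy/ingressController-iam-policy"
}

# Usage (check that exactly one role matches before attaching):
#   role=$(aws iam list-roles \
#     --query "Roles[?contains(RoleName, 'NodeInstanceRole')].RoleName" --output text)
#   account=$(aws sts get-caller-identity --query Account --output text)
#   attach_ingress_policy "$role" "$account"
```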

Deploy the RBAC resources (ClusterRole, ClusterRoleBinding, and ServiceAccount) needed by the AWS ALB Ingress controller:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/rbac-role.yaml
clusterrole.rbac.authorization.k8s.io/alb-ingress-controller created
clusterrolebinding.rbac.authorization.k8s.io/alb-ingress-controller created
serviceaccount/alb-ingress created

Next, let's deploy the AWS ALB Ingress controller into our Kubernetes cluster.

Download the AWS ALB Ingress controller YAML into a local file:

$ curl -sS "https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/alb-ingress-controller.yaml" > alb-ingress-controller.yaml

Edit the AWS ALB Ingress controller YAML and set the --cluster-name flag to the real name of our Kubernetes (or Amazon EKS) cluster:

# Application Load Balancer (ALB) Ingress Controller Deployment Manifest.
# This manifest details sensible defaults for deploying an ALB Ingress Controller.
# GitHub: https://github.com/kubernetes-sigs/aws-alb-ingress-controller
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: alb-ingress-controller
  name: alb-ingress-controller
  # Namespace the ALB Ingress Controller should run in. Does not impact which
  # namespaces it's able to resolve ingress resource for. For limiting ingress
  # namespace scope, see --watch-namespace.
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alb-ingress-controller
...
            # Name of your cluster. Used when naming resources created
            # by the ALB Ingress Controller, providing distinction between
            # clusters.
            - --cluster-name=attractive-gopher
...
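The edit can also be scripted. This minimal sketch assumes the downloaded manifest carries the flag on a line of the form "- --cluster-name=...", possibly commented out with "#". The set_cluster_name helper is illustrative. It filters stdin to stdout to sidestep the differences between GNU and BSD sed -i.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the --cluster-name argument (commented out
# or not) in the controller manifest read from stdin, keeping indentation.
# Assumes the cluster name contains no characters special to sed (|, &, \).
set_cluster_name() {
  local name="$1"
  sed -E "s|^([[:space:]]*)(#[[:space:]]*)?- --cluster-name=.*\$|\1- --cluster-name=${name}|"
}

# Usage:
#   set_cluster_name attractive-gopher < alb-ingress-controller.yaml > alb.tmp \
#     && mv alb.tmp alb-ingress-controller.yaml
#   grep -e '--cluster-name' alb-ingress-controller.yaml
```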

Deploy the AWS ALB Ingress controller YAML:

$ kubectl apply -f alb-ingress-controller.yaml
deployment.apps/alb-ingress-controller created

Verify that the deployment was successful and the controller started:

$ kubectl get pods -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
alb-ingress-controller-7d5674cf9d-7jkxb   1/1     Running   0          2m
aws-node-4fw9q                            1/1     Running   0          38m
aws-node-zqz2t                            1/1     Running   1          38m
kube-dns-6f455bb957-qgrht                 3/3     Running   0          43m
kube-proxy-2brkx                          1/1     Running   0          38m
kube-proxy-7rwk4                          1/1     Running   0          38m

Or check the controller's logs:

$ kubectl logs -n kube-system $(kubectl get po -n kube-system | egrep -o 'alb-ingress[a-zA-Z0-9-]+')

We should see output similar to the following:

-------------------------------------------------------------------------------
AWS ALB Ingress controller
  Release:    v1.0.0
  Build:      git-c25bc6c5
  Repository: https://github.com/kubernetes-sigs/aws-alb-ingress-controller
-------------------------------------------------------------------------------

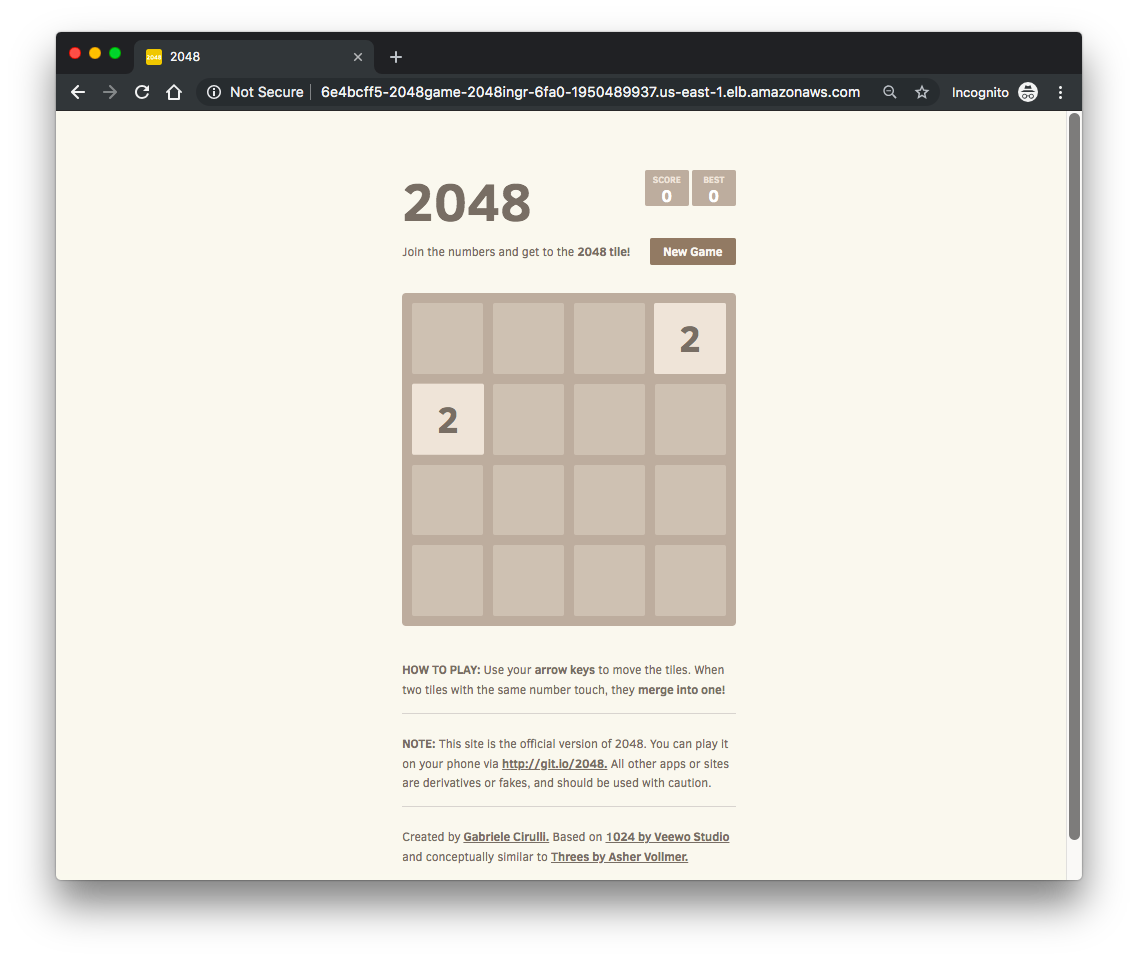
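The pod check can be scripted too. This small sketch uses a hypothetical controller_running function. It succeeds only if an alb-ingress-controller pod shows STATUS Running in the kubectl get pods output. kubectl rollout status is the built-in alternative for waiting on the Deployment.

```shell
#!/usr/bin/env bash
# Succeeds (exit 0) if the `kubectl get pods` listing on stdin contains an
# alb-ingress-controller pod whose STATUS column is Running.
controller_running() {
  awk '$1 ~ /^alb-ingress-controller-/ && $3 == "Running" { found = 1 }
       END { exit !found }'
}

# Usage:
#   kubectl get pods -n kube-system | controller_running && echo "controller is up"
#   kubectl rollout status deployment/alb-ingress-controller -n kube-system
```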

Now let's deploy a sample 2048 game into our Kubernetes cluster and use the Ingress resource to expose it to traffic.

Deploy 2048 game resources:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/2048/2048-namespace.yaml
namespace/2048-game created
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/2048/2048-deployment.yaml
deployment.extensions/2048-deployment created
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/2048/2048-service.yaml
service/service-2048 created

Deploy an Ingress resource for the 2048 game:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/2048/2048-ingress.yaml
ingress.extensions/2048-ingress created
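The four kubectl apply calls can be wrapped in one loop that keeps their order, with the namespace first and the Ingress last. This is a sketch; the deploy_2048 helper and its DRY_RUN switch are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the 2048 example manifests in order from the
# v1.0.0 examples directory, stopping at the first failure.
# With DRY_RUN=1 the kubectl commands are echoed instead of executed.
deploy_2048() {
  local base="https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/2048"
  local f
  for f in 2048-namespace.yaml 2048-deployment.yaml 2048-service.yaml 2048-ingress.yaml; do
    ${DRY_RUN:+echo} kubectl apply -f "$base/$f" || return 1
  done
}

# Usage:
#   deploy_2048
```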

After a few seconds, verify that the Ingress resource is enabled:

$ kubectl get ingress/2048-ingress -n 2048-game

We should be able to see the following output:

NAME           HOSTS   ADDRESS                                                                  PORTS   AGE
2048-ingress   *       6e4bcff5-2048game-2048ingr-6fa0-1950489937.us-east-1.elb.amazonaws.com   80      23m

Open a browser and paste in the ALB's DNS name (the ADDRESS value above). We should be able to access our newly deployed 2048 game!
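To grab the ALB's DNS name without copying it by hand, this sketch uses a hypothetical ingress_address function that reads the kubectl get ingress output. It only prints values ending in .elb.amazonaws.com, so nothing is printed while the ADDRESS column is still empty. A jsonpath query on .status.loadBalancer.ingress[0].hostname is a sturdier alternative.

```shell
#!/usr/bin/env bash
# Print the ADDRESS column (the ALB DNS name) from `kubectl get ingress`
# output on stdin, skipping the header row and rows with no ALB yet.
ingress_address() {
  awk 'NR > 1 && $3 ~ /\.elb\.amazonaws\.com$/ { print $3 }'
}

# Usage:
#   addr=$(kubectl get ingress/2048-ingress -n 2048-game | ingress_address)
#   curl -sI "http://$addr/"   # should answer 200 once the ALB is active and DNS resolves
```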

We may want to check what resources we have now:

$ kubectl get svc --all-namespaces
NAMESPACE     NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
2048-game     service-2048   NodePort    10.100.21.189   <none>        80:31851/TCP    38m
default       kubernetes     ClusterIP   10.100.0.1      <none>        443/TCP         1h
kube-system   kube-dns       ClusterIP   10.100.0.10     <none>        53/UDP,53/TCP   1h

$ kubectl get pods --all-namespaces
NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
2048-game     2048-deployment-7cb694c876-8j6sq          1/1     Running   0          23m
2048-game     2048-deployment-7cb694c876-cpblx          1/1     Running   0          23m
2048-game     2048-deployment-7cb694c876-gj7br          1/1     Running   0          23m
2048-game     2048-deployment-7cb694c876-kswwd          1/1     Running   0          23m
2048-game     2048-deployment-7cb694c876-n8jkf          1/1     Running   0          23m
kube-system   alb-ingress-controller-7d5674cf9d-l4gpj   1/1     Running   0          23m
kube-system   aws-node-9vzhn                            1/1     Running   1          21m
kube-system   aws-node-mshwj                            1/1     Running   0          21m
kube-system   kube-dns-6f455bb957-b79x2                 3/3     Running   0          23m
kube-system   kube-proxy-l6dnd                          1/1     Running   0          21m
kube-system   kube-proxy-tfhrz                          1/1     Running   0          21m

$ kubectl get deployments --all-namespaces
NAMESPACE     NAME                     DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
2048-game     2048-deployment          5         5         5            5           44m
kube-system   alb-ingress-controller   1         1         1            1           51m
kube-system   kube-dns                 1         1         1            1           1h

$ kubectl get nodes
NAME                              STATUS   ROLES    AGE   VERSION
ip-192-168-146-159.ec2.internal   Ready    <none>   26m   v1.10.3
ip-192-168-230-240.ec2.internal   Ready    <none>   26m   v1.10.3

$ kubectl config current-context
k8s@attractive-gopher.us-east-1.eksctl.io

$ kubens
2048-game
default
kube-public
kube-system

We deployed this cluster specifically to run this test, so we may want to tear it down. First detach the policy attached to "eksctl-attractive-gopher-NodeInstanceRole"; we may also need to delete the ALB manually. Then proceed:

$ eksctl delete cluster --name=attractive-gopher --region=us-east-1
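This is a sketch of one possible teardown order, using a hypothetical teardown helper where DRY_RUN=1 prints the commands instead of running them. Deleting the Ingress first should let the controller remove the ALB itself, which may save the manual clean-up. The fixed pause is a crude assumption. Checking the EC2 Load Balancers console before continuing is more reliable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: tear down the 2048 example and the cluster.
#   teardown NODE_ROLE_NAME ACCOUNT_ID
# WAIT_SECONDS (default 60) is an assumed pause for the controller to clean up.
# With DRY_RUN=1 the commands are echoed instead of executed.
teardown() {
  local role="$1" account="$2"
  # 1. Deleting the Ingress should make the controller delete the ALB,
  #    its listeners, and its target groups.
  ${DRY_RUN:+echo} kubectl delete ingress 2048-ingress -n 2048-game || return 1
  # 2. Give the controller (which still needs its IAM policy) time to finish.
  ${DRY_RUN:+echo} sleep "${WAIT_SECONDS:-60}"
  # 3. Detach the policy so the node role can be deleted with the cluster.
  ${DRY_RUN:+echo} aws iam detach-role-policy --role-name "$role" \
    --policy-arn "arn:aws:iam::${account}:policy/ingressController-iam-policy" || return 1
  # 4. Delete the cluster created at the start of this post.
  ${DRY_RUN:+echo} eksctl delete cluster --name=attractive-gopher --region=us-east-1
}
```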



Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization