Docker / Kubernetes - Scaling and Updating an Application

This post starts from the basics of Kubernetes cluster orchestration. We'll learn major Kubernetes features and concepts, including how to scale and update an app on Minikube:

- Deploy a containerized application on a cluster.

- Scale the deployment.

- Update the containerized application with a new software version.

- Debug the containerized application.

This post is largely based on https://kubernetes.io/docs/tutorials/kubernetes-basics/.

Prerequisite: minikube and kubectl should be installed:

$ minikube version

minikube version: v1.9.2

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.9", GitCommit:"16236ce91790d4c75b79f6ce96841db1c843e7d2", GitTreeState:"clean", BuildDate:"2019-03-27T14:42:18Z", GoVersion:"go1.10.8", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:50:46Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}

kubectl is configured, and we can see both the client and the server versions. The client version is the kubectl version; the server version is the Kubernetes version installed on the master.

Start the cluster by running the minikube start command:

$ minikube start

😄 minikube v1.9.2 on Darwin 10.13.3

▪ KUBECONFIG=/Users/kihyuckhong/.kube/config

✨ Using the hyperkit driver based on existing profile

👍 Starting control plane node m01 in cluster minikube

🔄 Restarting existing hyperkit VM for "minikube" ...

🐳 Preparing Kubernetes v1.18.0 on Docker 19.03.8 ...

🌟 Enabling addons: default-storageclass, storage-provisioner

🏄 Done! kubectl is now configured to use "minikube"

❗ /Users/kihyuckhong/bin/kubectl is v1.11.9, which may be incompatible with Kubernetes v1.18.0.

💡 You can also use 'minikube kubectl -- get pods' to invoke a matching version

Minikube started a virtual machine for us, and a Kubernetes cluster is now running in that VM.

A Kubernetes cluster can be deployed on either physical or virtual machines. To get started with Kubernetes development, we'll use Minikube. Minikube is a lightweight Kubernetes implementation that creates a VM on our local machine and deploys a simple cluster containing only one node.

The Minikube CLI provides basic bootstrapping operations for working with our cluster, including start, stop, status, and delete.

Let's view the cluster details. We'll do that by running kubectl cluster-info:

$ kubectl cluster-info
Kubernetes master is running at https://192.168.64.2:8443
KubeDNS is running at https://192.168.64.2:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

To view the nodes in the cluster, run the kubectl get nodes command:

$ kubectl get nodes
NAME       STATUS   ROLES    AGE   VERSION
minikube   Ready    master   23h   v1.18.0

This command shows all nodes that can be used to host our applications. We have only one node, and its status is Ready (it can accept applications for deployment).

Before we deploy to the Kubernetes cluster, let's run the app with plain Docker.

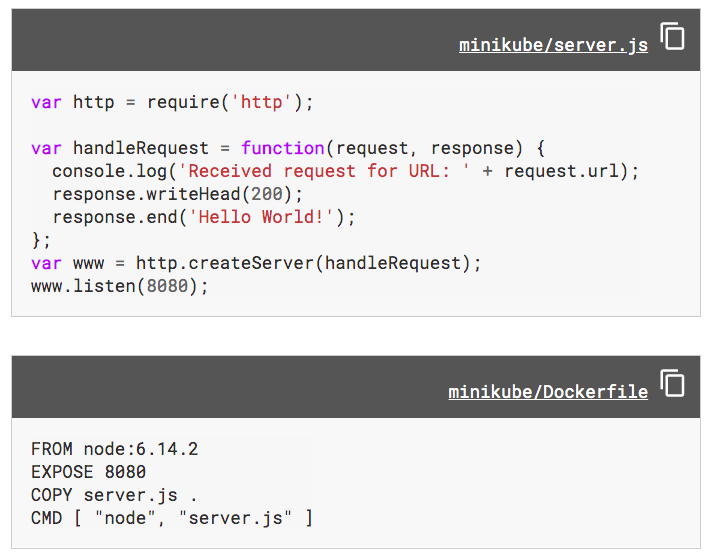

For the Deployment, we'll use a Node.js application packaged in a Docker container from the Hello Minikube tutorial, as shown in the picture above.

Let's first run it locally:

$ ls
Dockerfile  server.js
$ node server.js

In another terminal:

$ curl -i localhost:8080
HTTP/1.1 200 OK
Date: Fri, 01 May 2020 17:27:06 GMT
Connection: keep-alive
Transfer-Encoding: chunked

Hello World!

Now, let's run it within a container using Docker:

$ docker build -t echoserver .
...
Successfully tagged echoserver:latest
$ docker images
REPOSITORY   TAG      IMAGE ID       CREATED              SIZE
echoserver   latest   f42b5d3cbd29   About a minute ago   660MB

Running our image with -d runs the container in detached mode, leaving it running in the background. The -p flag maps a public (host) port to a private port inside the container. Run the image we built:

$ docker run -p 8080:8080 -d echoserver
f9e57d92323b15e67643aca414d427857301298429830750dd6e249aa3b39832
$ docker ps
CONTAINER ID   IMAGE        COMMAND            CREATED         STATUS         PORTS                    NAMES
f9e57d92323b   echoserver   "node server.js"   4 seconds ago   Up 3 seconds   0.0.0.0:8080->8080/tcp   keen_golick
$ curl -i localhost:8080
HTTP/1.1 200 OK
Date: Fri, 01 May 2020 17:34:03 GMT
Connection: keep-alive
Transfer-Encoding: chunked

Hello World!

Kubernetes is usually configured using YAML files. Here is a YAML file (pod.yaml) for running the dockerbogo/echoserver:v1 image in a Pod named hello-node-pod:

apiVersion: v1
kind: Pod
metadata:
  name: hello-node-pod
  labels:
    app: hello-node
spec:
  containers:
  - name: hello-node
    image: dockerbogo/echoserver:v1
    imagePullPolicy: IfNotPresent

- The definition uses the Kubernetes API v1.

- The kind of resource being defined is a Pod.

- There is some metadata and a specification for the Pod.

- In the metadata there is a name for the Pod, and a label is also applied.

- The specification says what is to go inside the pod. There is just one container called hello-node, and the image is dockerbogo/echoserver:v1. Each container needs a name for identification purposes.

- Setting imagePullPolicy to IfNotPresent means that the node pulls the image only if it is not already present locally.
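The pull decision in the last bullet can be modeled in a few lines of Python. This is a simplified sketch, not the kubelet's code. It assumes the documented defaults: an omitted imagePullPolicy becomes Always for an untagged or :latest image and IfNotPresent otherwise. It ignores digest references and registry credentials, and the function names are hypothetical.

```python
from typing import Optional


def image_tag(image: str) -> Optional[str]:
    """Return the tag of an image reference such as "dockerbogo/echoserver:v1".

    A colon only introduces a tag when it follows the last "/", so a registry
    port ("localhost:5000/app") is not mistaken for a tag. Digest references
    ("name@sha256:...") are not handled in this sketch.
    """
    last_part = image.rsplit("/", 1)[-1]
    return last_part.split(":", 1)[1] if ":" in last_part else None


def effective_pull_policy(image: str, policy: Optional[str] = None) -> str:
    """Fill in the default used when imagePullPolicy is omitted."""
    if policy is not None:
        return policy
    return "Always" if image_tag(image) in (None, "latest") else "IfNotPresent"


def should_pull(image: str, policy: Optional[str], present_locally: bool) -> bool:
    """Decide whether the node contacts the registry before starting the container."""
    effective = effective_pull_policy(image, policy)
    if effective == "Always":
        return True  # the registry is consulted every time the container starts
    if effective == "IfNotPresent":
        return not present_locally
    if effective == "Never":
        if not present_locally:
            # With Never the image is never fetched, so the container cannot start.
            raise RuntimeError(f"{image} is not present locally and imagePullPolicy is Never")
        return False
    raise ValueError(f"unknown imagePullPolicy: {effective}")


# The container in pod.yaml uses IfNotPresent:
print(should_pull("dockerbogo/echoserver:v1", "IfNotPresent", present_locally=True))   # False
print(should_pull("dockerbogo/echoserver:v1", "IfNotPresent", present_locally=False))  # True
print(effective_pull_policy("echoserver"))  # Always (no tag means :latest)
```

In this setup the minikube node starts without the image, so IfNotPresent leads to a pull from the registry.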

The minikube node runs its own Docker daemon, so it cannot see the echoserver image we built on the host. It will pull the image from a registry instead, so let's push it to Docker Hub:

$ docker login
Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.
Username: dockerbogo
Password:
Login Succeeded
$ docker images
REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
echoserver   latest   f42b5d3cbd29   1 hours ago   660MB
$ docker tag f42b5d3cbd29 dockerbogo/echoserver:v1
$ docker push dockerbogo/echoserver
The push refers to repository [docker.io/dockerbogo/echoserver]
a8f92026c82f: Pushed
aeaa1edefd60: Mounted from library/node
...

We can tell Kubernetes to act on the contents of a YAML file with the apply subcommand:

$ kubectl apply -f pod.yaml
pod/hello-node-pod created

Now that the cluster knows about the pod, we can use get to see information about it:

$ kubectl get pods
NAME             READY   STATUS    RESTARTS   AGE
hello-node-pod   1/1     Running   0          3m

We can use describe to get more information about the pod:

$ kubectl describe pod hello-node-pod

Name: hello-node-pod

Namespace: default

...

Labels: app=hello-node

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"app":"hello-node"},"name":"hello-node-pod","namespace":"default"},"spec":{"cont...

Status: Running

IP: 172.17.0.4

Containers:

hello-node:

Container ID: docker://fff26073236a3feade75323b2e832b099b71d2859362bea846fae675c08d6d6d

Image: dockerbogo/echoserver:v1

...

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-pjv8t (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-pjv8t:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-pjv8t

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

...

- The name of the pod is as specified in the pod's metadata.

- We see information about the pod and about the container within it. In the Containers section of the describe output, you should see the container called hello-node. It includes the Container ID, as well as the name and ID of the image this container was created from.

- In the description we should see an IP address. Each pod in Kubernetes gets allocated its own IP address.

Kubernetes performs several actions when it is asked to run a pod:

- It selects a node for the pod to run on. In this scenario there is only one node so it's a very simple choice.

- If the node doesn't already have a copy of the container image for each container in the pod specification, it will pull it from the container registry.

- Once pulled, the node can start running the container(s) for the pod.

- The component on each node that runs containers is called the kubelet. The kubelet has done the conceptual equivalent of docker run for us.

- In practice, the component that actually runs containers might be Docker, or an alternative such as Red Hat's CRI-O. This is called the container runtime.

To stop the code from running, we need to delete the pod:

$ kubectl delete pod hello-node-pod
pod "hello-node-pod" deleted

Once we have a running Kubernetes cluster, we can deploy our containerized applications on top of it.

$ kubectl get nodes
NAME       STATUS   ROLES    AGE   VERSION
minikube   Ready    master   23h   v1.18.0

Here we see the available nodes (one in our case). Kubernetes chooses where to deploy our application based on the resources available on each Node.

To do so, we create a Kubernetes Deployment configuration. The Deployment instructs Kubernetes how to create and update instances of our application.

Once we've created a Deployment, the Kubernetes master schedules the application instances included in that Deployment to run on individual Nodes in the cluster.

We can create and manage a Deployment by using the Kubernetes command line interface, kubectl, which uses the Kubernetes API to interact with the cluster.

When we create a Deployment, we'll need to specify the container image for our application and the number of replicas that we want to run.

One of the benefits of Kubernetes is the ability to run multiple instances of a pod. The easiest way to do this is with a deployment. The following deployment.yaml defines a deployment that will run two instances (replicas) of pods that are practically identical to the pod we just ran:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-node-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-node
  template:
    metadata:
      labels:
        app: hello-node
    spec:
      containers:
      - name: hello-node
        image: dockerbogo/echoserver:v1

- The first metadata of the file applies to the deployment object (not the pods).

- The pods take their definition from the template part of the YAML definition. This includes the metadata that will apply to the pods.

- Kubernetes will autogenerate a name for each pod based on the deployment name plus some random characters.
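The selector in the spec must match the labels in the Pod template, otherwise the API server rejects the Deployment. Below is a minimal Python check of that rule. It assumes the manifest has already been loaded into a dict with the same structure as deployment.yaml above, for example with a YAML parser. The function name is hypothetical, and only matchLabels (not matchExpressions) is considered.

```python
from typing import Any, Dict


def selector_matches_template(deployment: Dict[str, Any]) -> bool:
    """Check spec.selector.matchLabels against the Pod template labels.

    This sketch only looks at matchLabels; matchExpressions are ignored.
    """
    spec = deployment["spec"]
    match_labels = spec["selector"].get("matchLabels", {})
    template_labels = spec["template"]["metadata"].get("labels", {})
    if not match_labels:
        return False  # apps/v1 requires a non-empty selector
    return all(template_labels.get(key) == value for key, value in match_labels.items())


# deployment.yaml from above, as a Python dict.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "hello-node-deployment"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "hello-node"}},
        "template": {
            "metadata": {"labels": {"app": "hello-node"}},
            "spec": {
                "containers": [
                    {"name": "hello-node", "image": "dockerbogo/echoserver:v1"}
                ]
            },
        },
    },
}

print(selector_matches_template(deployment))  # True
```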

Let's apply this deployment:

$ kubectl apply -f deployment.yaml
deployment.apps/hello-node-deployment created
$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          14s
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          14s

We just deployed our first application by creating a deployment. This performed a few things for us:

- searched for a suitable node where an instance of the application could be run (we have only 1 available node)

- scheduled the application to run on that Node

- configured the cluster to reschedule the instance on a new Node when needed

Pods running inside Kubernetes are on a private, isolated network. By default they are visible from other Pods and Services within the same Kubernetes cluster, but not from outside that network. When we use kubectl, we interact with our application through an API endpoint.

The kubectl command can create a proxy that forwards communications into the cluster-wide private network. The proxy can be terminated by pressing Control-C and won't show any output while it's running. Let's run the proxy:

$ kubectl proxy
Starting to serve on 127.0.0.1:8001

Now we have a connection between our host and the Kubernetes cluster. The proxy enables direct access to the API from the host's terminals.

We can see all those APIs hosted through the proxy endpoint. For example, we can query the version directly through the API using the curl command:

In a second terminal window:

$ curl http://localhost:8001/version
{
  "major": "1",
  "minor": "18",
  "gitVersion": "v1.18.0",
  "gitCommit": "9e991415386e4cf155a24b1da15becaa390438d8",
  "gitTreeState": "clean",
  "buildDate": "2020-03-25T14:50:46Z",
  "goVersion": "go1.13.8",
  "compiler": "gc",
  "platform": "linux/amd64"
}
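The same request can be made from a program. Here is a minimal standard-library Python sketch, assuming kubectl proxy is listening on localhost:8001 as above. The parsing is kept in a separate helper so it can be tried on the JSON printed above without a cluster. The helper names are hypothetical. The skew check encodes the documented policy that kubectl is supported within one minor version of the API server, which is why minikube warned that kubectl v1.11.9 may be incompatible with Kubernetes v1.18.0.

```python
import json
import urllib.request
from typing import Tuple

PROXY = "http://localhost:8001"  # address printed by `kubectl proxy`


def parse_version(body: str) -> Tuple[int, int, str]:
    """Return (major, minor, gitVersion) from the JSON served at /version."""
    info = json.loads(body)
    # Some providers report the minor version as e.g. "18+"; strip the suffix.
    return int(info["major"]), int(info["minor"].rstrip("+")), info["gitVersion"]


def fetch_server_version(base_url: str = PROXY) -> Tuple[int, int, str]:
    """GET /version through the proxy; needs `kubectl proxy` to be running."""
    with urllib.request.urlopen(f"{base_url}/version", timeout=5) as resp:
        return parse_version(resp.read().decode("utf-8"))


def kubectl_skew_ok(client_minor: int, server_minor: int) -> bool:
    """kubectl is supported within one minor version of the API server."""
    return abs(client_minor - server_minor) <= 1


sample = '{"major": "1", "minor": "18", "gitVersion": "v1.18.0"}'
major, minor, git_version = parse_version(sample)
print(major, minor, git_version)   # 1 18 v1.18.0
print(kubectl_skew_ok(11, minor))  # False: client v1.11.9 vs server v1.18.0
```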

The API server will automatically create an endpoint for each pod, based on the pod name, that is also accessible through the proxy.
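For a Pod, the object itself lives at /api/v1/namespaces/<namespace>/pods/<pod-name>. Appending :<port>/proxy/ to that path forwards a request to that port inside the Pod. Here is a small Python sketch that builds both URLs, assuming the default namespace and the proxy address above. The helper names are hypothetical, and the pod name is one from the kubectl get pods output earlier.

```python
from urllib.parse import quote

PROXY = "http://localhost:8001"


def pod_url(pod: str, namespace: str = "default", base: str = PROXY) -> str:
    """URL of the Pod object; a GET returns the Pod's JSON description."""
    return f"{base}/api/v1/namespaces/{quote(namespace)}/pods/{quote(pod)}"


def pod_proxy_url(pod: str, port: int, path: str = "/",
                  namespace: str = "default", base: str = PROXY) -> str:
    """URL that the API server forwards to `port` inside the Pod."""
    return f"{pod_url(pod, namespace, base)}:{port}/proxy{path}"


name = "hello-node-deployment-5c6bb445fb-g25n5"
print(pod_proxy_url(name, 8080))
# http://localhost:8001/api/v1/namespaces/default/pods/hello-node-deployment-5c6bb445fb-g25n5:8080/proxy/
```

While the proxy is running, fetching the second URL with curl should return the app's Hello World! response.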

When we have multiple instances of a container image, we'll typically want to load-balance requests across them so that they share the incoming traffic. In Kubernetes this is achieved with a Service. In other words, for the new Deployment to be accessible without the proxy, a Service is required, as explained next.

A Kubernetes Service groups together a collection of pods and makes them accessible as a service. Here is the file, service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: hello-node-svc
spec:
  type: NodePort
  ports:
  - targetPort: 8080
    port: 30000
  selector:
    app: hello-node

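- The type of the service we're using here is NodePort.

- Requests to port 30000 on the service's cluster IP are forwarded to port 8080 (the targetPort) on one of the service's pods. Because no nodePort is specified, Kubernetes also opens a port from the node-port range (30000-32767 by default) on the node. Here it allocated 32427, as the kubectl get svc output below shows.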

- The service uses the app: hello-node label as a selector to identify the pods that it will load balance between.
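The three port fields can be pictured as a chain. A client outside the cluster connects to <node IP>:<nodePort>, which conceptually leads to <cluster IP>:<port> and then to <pod IP>:<targetPort>. In practice kube-proxy rewrites the traffic directly to a Pod. The Python sketch below models that mapping and the nodePort allocation, assuming the default node-port range 30000-32767, which is configurable on the API server. The class and functions are hypothetical illustrations, not kube-proxy's implementation.

```python
import random
from dataclasses import dataclass
from typing import Optional, Set

NODE_PORT_RANGE = range(30000, 32768)  # default range, i.e. 30000-32767


@dataclass
class ServicePort:
    port: int         # port on the Service's cluster IP
    target_port: int  # port the container listens on
    node_port: int    # port opened on every node


def allocate_node_port(requested: Optional[int], in_use: Set[int],
                       rng: random.Random) -> int:
    """Use a requested nodePort if it is valid and free, else pick a free one."""
    if requested is not None:
        if requested not in NODE_PORT_RANGE or requested in in_use:
            raise ValueError(f"nodePort {requested} is out of range or already allocated")
        return requested
    return rng.choice([p for p in NODE_PORT_RANGE if p not in in_use])


def describe_path(sp: ServicePort, node_ip: str, cluster_ip: str, pod_ip: str) -> str:
    """Conceptual hops: node port -> Service port -> container port."""
    return (f"{node_ip}:{sp.node_port} -> {cluster_ip}:{sp.port} "
            f"-> {pod_ip}:{sp.target_port}")


# service.yaml sets port 30000 and targetPort 8080 but no nodePort,
# so a port is allocated from the range.
auto = allocate_node_port(None, set(), random.Random(0))
print(auto in NODE_PORT_RANGE)  # True

# Values reported by kubectl in this section (cluster IP 10.106.113.201,
# nodePort 32427) and one of the Pod IPs (172.17.0.4):
print(describe_path(ServicePort(30000, 8080, 32427),
                    "192.168.64.2", "10.106.113.201", "172.17.0.4"))
# 192.168.64.2:32427 -> 10.106.113.201:30000 -> 172.17.0.4:8080
```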

Make sure that the deployment that we ran earlier still exists:

$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          163m
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          163m

Let's create a service:

$ kubectl apply -f service.yaml
service/hello-node-svc created
$ kubectl get svc
NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)           AGE
hello-node-svc   NodePort    10.106.113.201   <none>        30000:32427/TCP   5s
kubernetes       ClusterIP   10.96.0.1        <none>        443/TCP           47h

As we can see, a cluster IP address (10.106.113.201) has been allocated to this service. We can use this address to make requests to the service from within the cluster. From outside the cluster (from the host machine), we access the service through the node's IP and the allocated NodePort:

$ curl $(minikube ip):32427
Hello World!

Note that we can get the node's IP address (192.168.64.2, the minikube VM) from the cluster info:

$ kubectl cluster-info
Kubernetes master is running at https://192.168.64.2:8443
KubeDNS is running at https://192.168.64.2:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Or:

$ minikube ip
192.168.64.2

Let's clean up the service:

$ kubectl delete -f service.yaml
service "hello-node-svc" deleted

In this section we will learn how to expose Kubernetes applications outside the cluster using the kubectl expose command instead of applying the service yaml file.

We will also learn how to view and apply labels to objects with the kubectl label command.

$ kubectl get nodes
NAME       STATUS   ROLES    AGE   VERSION
minikube   Ready    master   23h   v1.18.0
$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          3h23m
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          3h23m
$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d
$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
hello-node-deployment   2/2     2            2           3h25m

We have a Service called kubernetes that is created by default when minikube starts the cluster.

To create a new service and expose it to external traffic, we'll use the expose command with NodePort as a parameter (on minikube, a LoadBalancer service does not get an external IP unless minikube tunnel is running).

$ kubectl expose deployment/hello-node-deployment --type="NodePort" --port 8080
service/hello-node-deployment exposed
$ kubectl get svc
NAME                    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
hello-node-deployment   NodePort    10.106.158.78   <none>        8080:30518/TCP   58s
kubernetes              ClusterIP   10.96.0.1       <none>        443/TCP          2d

We now have a running Service called hello-node-deployment. The Service received a unique cluster IP (10.106.158.78), an internal port (8080), and a NodePort (30518). Its EXTERNAL-IP is <none>, so from outside the cluster we reach it through the Node's IP and the NodePort.

To find out what port was opened externally (by the NodePort option) we’ll run the describe service sub command:

$ kubectl describe services/hello-node-deployment
Name:                     hello-node-deployment
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app=hello-node
Type:                     NodePort
IP:                       10.106.158.78
Port:                     <unset>  8080/TCP
TargetPort:               8080/TCP
NodePort:                 <unset>  30518/TCP
Endpoints:                172.17.0.4:8080,172.17.0.7:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

Create an environment variable called NODE_PORT that has the value of the Node port assigned:

$ export NODE_PORT=$(kubectl get services/hello-node-deployment -o go-template='{{(index .spec.ports 0).nodePort}}')

$ echo NODE_PORT=$NODE_PORT

NODE_PORT=30518
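The go-template above reads .spec.ports[0].nodePort. The same lookup can be done on kubectl's JSON output. Below is a Python sketch, assuming kubectl is on the PATH and using the service name from this section. node_port_from_json is a hypothetical helper that can be tried on a trimmed sample without a cluster.

```python
import json
import subprocess


def node_port_from_json(svc_json: str, index: int = 0) -> int:
    """Equivalent of the go-template {{(index .spec.ports 0).nodePort}}."""
    svc = json.loads(svc_json)
    return svc["spec"]["ports"][index]["nodePort"]


def get_node_port(service: str = "hello-node-deployment") -> int:
    """Run `kubectl get service <name> -o json` and extract the nodePort."""
    out = subprocess.run(
        ["kubectl", "get", "service", service, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return node_port_from_json(out)


# Trimmed sample shaped like the Service created above.
sample = json.dumps({"spec": {"type": "NodePort",
                              "ports": [{"port": 8080, "targetPort": 8080,
                                         "nodePort": 30518, "protocol": "TCP"}]}})
print(node_port_from_json(sample))  # 30518
```

Without Python, kubectl's own -o jsonpath='{.spec.ports[0].nodePort}' output format should print the same number.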

Now we can test that the app is exposed outside of the cluster using curl, the IP of the Node and the externally exposed port, curl $(minikube ip):$NODE_PORT:

$ curl $(minikube ip):$NODE_PORT
Hello World!

From the response, we can see the Service is exposed!

In the previous section, we were able to get the response from the node server via exposed NodePort from outside the cluster:

$ minikube ip
192.168.64.2
$ curl $(minikube ip):$NODE_PORT
Hello World!

How about from within?

There are two ways of connecting to the hello-node service within the cluster (in our case from the "alpine" pod).

We will access the hello-node service from alpine pod:

$ kubectl run --generator=run-pod/v1 --image=alpine -it my-alpine-shell -- /bin/sh
If you don't see a command prompt, try pressing enter.
/ # apk update
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/main/x86_64/APKINDEX.tar.gz
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/community/x86_64/APKINDEX.tar.gz
v3.11.6-10-g3d1aef7a83 [http://dl-cdn.alpinelinux.org/alpine/v3.11/main]
v3.11.6-13-g5da24b5794 [http://dl-cdn.alpinelinux.org/alpine/v3.11/community]
OK: 11270 distinct packages available
/ # apk add curl
(1/4) Installing ca-certificates (20191127-r1)
(2/4) Installing nghttp2-libs (1.40.0-r0)
(3/4) Installing libcurl (7.67.0-r0)
(4/4) Installing curl (7.67.0-r0)
Executing busybox-1.31.1-r9.trigger
Executing ca-certificates-20191127-r1.trigger
OK: 7 MiB in 18 packages
/ # curl 10.106.158.78:8080
Hello World!/ #
/ # curl 172.17.0.4:8080
Hello World!/ #
/ # curl 172.17.0.7:8080
Hello World!/ #

The IPs and port information are available from the output of the following commands:

$ kubectl get svc
NAME                    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
hello-node-deployment   NodePort    10.106.158.78   <none>        8080:30518/TCP   123m
kubernetes              ClusterIP   10.96.0.1       <none>        443/TCP          2d2h
$ kubectl get pods -o wide
NAME                                     READY   STATUS    RESTARTS   AGE     IP           NODE       NOMINATED NODE   READINESS GATES
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          6h16m   172.17.0.4   minikube   <none>           <none>
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          6h16m   172.17.0.7   minikube   <none>           <none>
my-alpine-shell                          1/1     Running   0          15m     172.17.0.8   minikube   <none>           <none>
$ kubectl get ep
NAME                    ENDPOINTS                         AGE
hello-node-deployment   172.17.0.4:8080,172.17.0.7:8080   149m
kubernetes              192.168.64.2:8443                 2d3h

As a summary, here are the two ways of connecting to the service within the cluster:

- Via ClusterIP:
  / # curl 10.106.158.78:8080

- Via pod IP:
  / # curl 172.17.0.4:8080
  / # curl 172.17.0.7:8080

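The Pods created by the Deployment carry the app=hello-node label defined in its Pod template.

With the describe command we can see the label selector the Service uses (app=hello-node), and we can use the same label to query the Pods: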

$ kubectl describe services/hello-node-deployment
Name:                     hello-node-deployment
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app=hello-node
Type:                     NodePort
IP:                       10.106.158.78
Port:                     <unset>  8080/TCP
TargetPort:               8080/TCP
NodePort:                 <unset>  30518/TCP
Endpoints:                172.17.0.4:8080,172.17.0.7:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
$ kubectl get pods -l app=hello-node
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          16h
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          16h

We will apply a new label to one of our Pods and check it with the describe pod command:

$ kubectl label pods hello-node-deployment-5c6bb445fb-g25n5 new-label=awesome
pod/hello-node-deployment-5c6bb445fb-g25n5 labeled
$ kubectl describe pod/hello-node-deployment-5c6bb445fb-g25n5
Name:               hello-node-deployment-5c6bb445fb-g25n5
Namespace:          default
Priority:           0
PriorityClassName:
Node:               minikube/192.168.64.2
Start Time:         Fri, 01 May 2020 15:43:59 -0700
Labels:             app=hello-node
                    new-label=awesome
                    pod-template-hash=5c6bb445fb
...

We see that the label is now attached to our Pod.

Now we can query the list of Pods using the new label:

$ kubectl get pods -l new-label=awesome
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          17h
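The -l flag takes a label selector. An equality-based selector such as app=hello-node, or a comma-separated list such as app=hello-node,new-label=awesome, matches a Pod only if every requirement holds. Below is a simplified Python sketch of that matching. It handles =, == and != but not set-based expressions like in or notin. The function names are hypothetical. The labels for the first Pod are the ones shown by describe above; the second Pod is assumed to have the same labels minus new-label.

```python
from typing import Dict, List, Tuple


def parse_selector(selector: str) -> List[Tuple[str, str, str]]:
    """Split 'a=b,c!=d' into (key, op, value) requirements."""
    reqs = []
    for term in filter(None, (t.strip() for t in selector.split(","))):
        if "!=" in term:
            key, value = term.split("!=", 1)
            reqs.append((key.strip(), "!=", value.strip()))
        elif "==" in term:
            key, value = term.split("==", 1)
            reqs.append((key.strip(), "=", value.strip()))
        elif "=" in term:
            key, value = term.split("=", 1)
            reqs.append((key.strip(), "=", value.strip()))
        else:
            raise ValueError(f"unsupported selector term: {term!r}")
    return reqs


def matches(labels: Dict[str, str], selector: str) -> bool:
    """True if the labels satisfy every requirement (requirements are ANDed)."""
    for key, op, value in parse_selector(selector):
        if op == "=" and labels.get(key) != value:
            return False
        # '!=' also matches objects that do not have the key at all.
        if op == "!=" and labels.get(key) == value:
            return False
    return True


pods = {
    "hello-node-deployment-5c6bb445fb-g25n5": {
        "app": "hello-node", "new-label": "awesome", "pod-template-hash": "5c6bb445fb"},
    "hello-node-deployment-5c6bb445fb-lwxvc": {
        "app": "hello-node", "pod-template-hash": "5c6bb445fb"},
}
print([name for name, labels in pods.items() if matches(labels, "new-label=awesome")])
# ['hello-node-deployment-5c6bb445fb-g25n5']
```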

To delete Services we can use the delete service command.

$ kubectl delete service hello-node-deployment
service "hello-node-deployment" deleted
$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d14h

This confirms that our Service was removed. To confirm that the route is no longer exposed, we can curl the previously exposed IP and port:

$ curl $(minikube ip):30518
curl: (7) Failed to connect to 192.168.64.2 port 30518: Connection refused

This proves that the app is not reachable anymore from outside of the cluster. We can confirm that the app is still running with a curl inside the pod:

$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          17h
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          17h
$ kubectl exec -it hello-node-deployment-5c6bb445fb-g25n5 curl localhost:8080
Hello World!

We see here that the application is up. This is because the Deployment is managing the application. To shut down the application, we would need to delete the Deployment as well.

In the previous sections, we created a Deployment, and then exposed it publicly via a Service. The Deployment created two Pods for running our application. When traffic increases, we will need to scale the application to keep up with user demand.

Scaling is accomplished by changing the number of replicas in a Deployment.

$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
hello-node-deployment   2/2     2            2           18h

AVAILABLE displays how many replicas of the application are available to users.

To see the ReplicaSet created by the Deployment, run kubectl get rs:

$ kubectl get rs
NAME                               DESIRED   CURRENT   READY   AGE
hello-node-deployment-5c6bb445fb   2         2         2       18h

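Notice that the name of the ReplicaSet is formatted as [DEPLOYMENT-NAME]-[HASH]. The hash is the pod-template-hash, computed from the Deployment's Pod template. It also appears as the pod-template-hash=5c6bb445fb label on the Pods, and each Pod name adds a further random suffix.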

Two important columns of this command are:

- DESIRED displays the desired number of replicas of the application, which we define when we create the Deployment. This is the desired state.

- CURRENT displays how many replicas are currently running.

Next, let's scale the Deployment to 3 replicas.

We'll use the kubectl scale command, followed by the deployment type, name and desired number of instances:

$ kubectl scale deployment/hello-node-deployment --replicas=3
deployment.apps/hello-node-deployment scaled
$ kubectl get rs
NAME                               DESIRED   CURRENT   READY   AGE
hello-node-deployment-5c6bb445fb   3         3         3       18h

The change was applied, and we have 3 instances of the application available. Next, let's check if the number of Pods changed:

$ kubectl get pods -o wide
NAME                                     READY   STATUS    RESTARTS   AGE     IP           NODE       NOMINATED NODE   READINESS GATES
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          18h     172.17.0.4   minikube   <none>           <none>
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          18h     172.17.0.7   minikube   <none>           <none>
hello-node-deployment-5c6bb445fb-vmp59   1/1     Running   0          2m48s   172.17.0.9   minikube   <none>           <none>

There are 3 Pods now, with different IP addresses. The change was registered in the Deployment events log.

To check that, use the describe command:

$ kubectl describe deployments/hello-node-deployment

Name: hello-node-deployment

Namespace: default

...

Selector: app=hello-node

Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=hello-node

Containers:

hello-node:

Image: dockerbogo/echoserver:v1

...

NewReplicaSet: hello-node-deployment-5c6bb445fb (3/3 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 5m deployment-controller Scaled up replica set hello-node-deployment-5c6bb445fb to 3
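The RollingUpdateStrategy line (25% max unavailable, 25% max surge) limits how many Pods can exist and how many must stay available during a rollout. As documented for Deployments, maxSurge percentages are rounded up and maxUnavailable percentages are rounded down when converted to Pod counts. Below is a small Python sketch of that arithmetic; the helper name is hypothetical. With 4 replicas it gives at most 5 Pods and at least 3 available. That matches the failed v3 update later in this post, where 3 old Pods keep running next to 2 new ones.

```python
from typing import Tuple


def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> Tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) during a rollout."""
    surge = -(-replicas * max_surge_pct // 100)          # percentage rounded up
    unavailable = replicas * max_unavailable_pct // 100  # percentage rounded down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # otherwise no old Pod could ever be replaced
    return replicas + surge, replicas - unavailable


for n in (2, 3, 4):
    print(n, rollout_bounds(n))
# 2 (3, 2)
# 3 (4, 3)
# 4 (5, 3)
```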

Let's check if the Service is load-balancing the traffic. To find out the exposed IP and Port we can use the describe service as we learned in the previous sections.

Because we deleted our Service, we need to create it again:

$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d15h
$ kubectl expose deployment/hello-node-deployment --type="NodePort" --port 8080
service/hello-node-deployment exposed
$ kubectl get svc
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
hello-node-deployment   NodePort    10.108.150.219   <none>        8080:31090/TCP   3s
kubernetes              ClusterIP   10.96.0.1        <none>        443/TCP          2d15h

Next, we'll do a curl to the exposed IP and port:

$ curl $(minikube ip):31090
Hello World!

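If we execute the command multiple times, the requests will likely be spread across different Pods. (Our server returns the same Hello World! from every Pod, so the responses themselves look identical.)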
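Which Pod serves a given connection is decided by kube-proxy. In iptables mode, its usual default on Linux, kube-proxy picks a backend at random rather than in strict round-robin. It does this with a chain of rules in which rule i matches with probability 1/(n-i), giving each of the n endpoints an equal share overall. The Python simulation below is purely illustrative. The function is hypothetical, and the endpoints are assumed to be the three Pod IPs from the kubectl get pods -o wide output above.

```python
import random
from collections import Counter
from typing import List


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Mimic an iptables-style chain: rule i matches with probability 1/(n-i).

    The cascade of conditional probabilities gives every endpoint an
    equal 1/n chance overall.
    """
    n = len(endpoints)
    for i, ep in enumerate(endpoints[:-1]):
        if rng.random() < 1.0 / (n - i):
            return ep
    return endpoints[-1]  # the last rule matches unconditionally


endpoints = ["172.17.0.4:8080", "172.17.0.7:8080", "172.17.0.9:8080"]
rng = random.Random(42)
counts = Counter(pick_endpoint(endpoints, rng) for _ in range(3000))
print(counts)  # each endpoint gets roughly a third of the requests
```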

To scale the Deployment back down to 2 replicas, run the scale command again:

$ kubectl scale deployment/hello-node-deployment --replicas=2
deployment.apps/hello-node-deployment scaled
$ kubectl get rs
NAME                               DESIRED   CURRENT   READY   AGE
hello-node-deployment-5c6bb445fb   2         2         2       18h

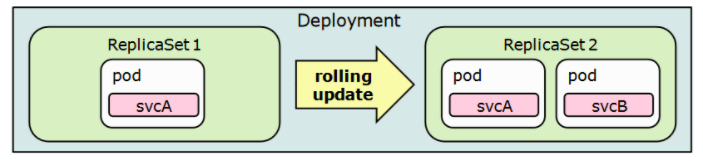

Rolling updates allow a Deployment to be updated with zero downtime by incrementally replacing Pod instances with new ones. The new Pods are scheduled on Nodes with available resources.

In Kubernetes, updates are versioned and any Deployment update can be reverted to a previous (stable) version.

Rolling updates allow the following actions:

- Promote an application from one environment to another (via container image updates)

- Rollback to previous versions

- Continuous Integration and Continuous Delivery of applications with zero downtime

$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
hello-node-deployment   2/2     2            2           19h
$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-node-deployment-5c6bb445fb-g25n5   1/1     Running   0          19h
hello-node-deployment-5c6bb445fb-lwxvc   1/1     Running   0          19h

To view the current image version of the app, run a describe command against the Pods (look at the Image field):

$ kubectl describe pods

Name: hello-node-deployment-5c6bb445fb-g25n5

Namespace: default

...

Containers:

hello-node:

Container ID: docker://85098d12fb415be7a6d958d9c91b476d15fa66cf42f349b8d9a9f603e34cf88a

Image: dockerbogo/echoserver:v1

Image ID: docker-pullable://dockerbogo/echoserver@sha256:eb09a387eb751fd7a00fb59de8117c7084e88350a3e4246ae40ebf4613e6e55c

...

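To update the image of the application to version 2, we first tag and push a v2 image. For this demo, v2 is the same image as v1 under a new tag, as the identical image ID and digest show. Then we use the set image command, followed by the deployment name and container=image:version: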

$ docker images
REPOSITORY              TAG   IMAGE ID       CREATED        SIZE
dockerbogo/echoserver   v1    f42b5d3cbd29   26 hours ago   660MB
$ docker tag f42b5d3cbd29 dockerbogo/echoserver:v2
$ docker images
REPOSITORY              TAG   IMAGE ID       CREATED        SIZE
dockerbogo/echoserver   v1    f42b5d3cbd29   26 hours ago   660MB
dockerbogo/echoserver   v2    f42b5d3cbd29   26 hours ago   660MB
$ docker push dockerbogo/echoserver
The push refers to repository [docker.io/dockerbogo/echoserver]
...
v1: digest: sha256:eb09a387eb751fd7a00fb59de8117c7084e88350a3e4246ae40ebf4613e6e55c size: 2214
...
v2: digest: sha256:eb09a387eb751fd7a00fb59de8117c7084e88350a3e4246ae40ebf4613e6e55c size: 2214
$ kubectl set image deployments/hello-node-deployment \
    hello-node=dockerbogo/echoserver:v2
deployment.apps/hello-node-deployment image updated

The command notified the Deployment to use a different image for our app and initiated a rolling update.

Check the status of the new Pods:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-node-deployment-699574469-8nxpn 1/1 Running 0 6m15s

hello-node-deployment-699574469-c2245 1/1 Running 0 6m20s

$ kubectl describe pods

Name: hello-node-deployment-699574469-8nxpn

Namespace: default

...

Status: Running

IP: 172.17.0.9

Controlled By: ReplicaSet/hello-node-deployment-699574469

Containers:

hello-node:

Container ID: docker://874846f8d57d9c29b1d3c38003393773bc283356a09be9ae7f6076fbdc7b062c

Image: dockerbogo/echoserver:v2

Image ID: docker-pullable://dockerbogo/echoserver@sha256:eb09a387eb751fd7a00fb59de8117c7084e88350a3e4246ae40ebf4613e6e55c

...

The update can also be confirmed by running the rollout status command:

$ kubectl rollout status deployments/hello-node-deployment
deployment "hello-node-deployment" successfully rolled out

When we introduce a change that breaks production, we should have a plan to roll back that change.

Kubernetes has a built-in rollback mechanism: kubectl offers a simple way to roll back changes to resources such as Deployments, StatefulSets, and DaemonSets.

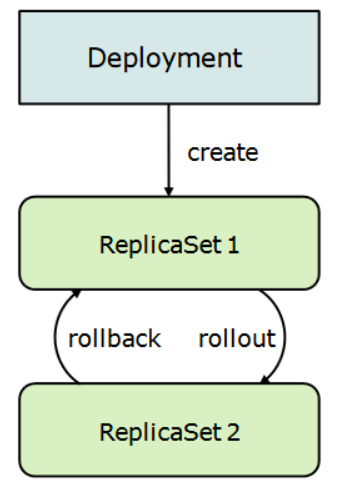

Since replicas is a field in the Deployment, we might be tempted to conclude that it is the Deployment's job to count the number of Pods and create or delete them. However, this is not the case. Deployments delegate counting Pods to another component: the ReplicaSet.

Every time we create a Deployment, the Deployment creates a ReplicaSet and delegates creating (and deleting) the Pods to it.

Source: Deployments, ReplicaSets, and pods

The sole responsibility of the ReplicaSet is to count Pods, while the Deployment manages ReplicaSets and orchestrates the rolling update.
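The ReplicaSet's counting job can be pictured as a reconcile loop that compares the desired replica count with the Pods it currently owns. Below is a much-simplified Python sketch in which Pods are just names. The real controller also weighs Pod readiness and age when choosing which Pods to delete, and it creates Pods in batches. The name suffix is random characters like the -vmp59 suffix seen earlier, but the alphabet here is illustrative. All names are hypothetical.

```python
import random
import string
from typing import List


def random_suffix(rng: random.Random, length: int = 5) -> str:
    """Random Pod-name suffix (illustrative alphabet, not Kubernetes' exact one)."""
    alphabet = string.ascii_lowercase + string.digits
    return "".join(rng.choice(alphabet) for _ in range(length))


def reconcile(rs_name: str, desired: int, pods: List[str],
              rng: random.Random) -> List[str]:
    """One pass of a toy ReplicaSet controller: make len(pods) == desired."""
    pods = list(pods)  # do not mutate the caller's list
    while len(pods) < desired:  # too few Pods: create new ones
        pods.append(f"{rs_name}-{random_suffix(rng)}")
    while len(pods) > desired:  # too many Pods: delete some
        pods.pop()  # this sketch drops the most recently created Pod
    return pods


rs = "hello-node-deployment-5c6bb445fb"
current = [f"{rs}-g25n5", f"{rs}-lwxvc"]
rng = random.Random(0)

scaled_up = reconcile(rs, 3, current, rng)      # like --replicas=3
print(len(scaled_up))                           # 3
scaled_down = reconcile(rs, 2, scaled_up, rng)  # like --replicas=2
print(scaled_down == current)                   # True
```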

Source: Deployments, ReplicaSets, and pods

Let's scale the Deployment to 4 replicas and then perform another update, deploying an image tagged v3, which does not exist:

$ kubectl scale deployment/hello-node-deployment --replicas=4
deployment.apps/hello-node-deployment scaled
$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
hello-node-deployment   4/4     4            4           104s
$ kubectl get pods
NAME                                    READY   STATUS    RESTARTS   AGE
hello-node-deployment-699574469-lb8dj   1/1     Running   0          23s
hello-node-deployment-699574469-t8cjb   1/1     Running   0          62s
hello-node-deployment-699574469-tg8b9   1/1     Running   0          23s
hello-node-deployment-699574469-wbn8s   1/1     Running   0          62s
$ kubectl set image deployment/hello-node-deployment \
    hello-node=dockerbogo/echoserver:v3
deployment.apps/hello-node-deployment image updated
$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
hello-node-deployment   3/4     2            3           2m44s
$ kubectl get pods
NAME                                     READY   STATUS         RESTARTS   AGE
hello-node-deployment-699574469-t8cjb    1/1     Running        0          3m10s
hello-node-deployment-699574469-tg8b9    1/1     Running        0          2m31s
hello-node-deployment-699574469-wbn8s    1/1     Running        0          3m10s
hello-node-deployment-6ff6f5d986-6xfnl   0/1     ErrImagePull   0          34s
hello-node-deployment-6ff6f5d986-gg9qc   0/1     ErrImagePull   0          34s

Something is wrong: there is no image tagged v3 in the repository, so the new Pods fail with ErrImagePull. Let's roll back to our previously working version.
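Spotting such Pods can be scripted. The Python sketch below reads the JSON form of kubectl get pods -o json, looks at status.containerStatuses[].state.waiting.reason, and reports containers waiting on image-pull errors. ImagePullBackOff is the reason the kubelet reports after repeated pull failures. The function name is hypothetical, and the sample is trimmed to the fields used.

```python
import json
from typing import List, Tuple

PULL_ERRORS = {"ErrImagePull", "ImagePullBackOff"}


def pods_with_pull_errors(pods_json: str) -> List[Tuple[str, str]]:
    """Return (pod name, reason) for containers stuck on image-pull errors.

    Expects the output of `kubectl get pods -o json` (a List with "items").
    """
    result = []
    for pod in json.loads(pods_json).get("items", []):
        for cs in pod.get("status", {}).get("containerStatuses", []):
            reason = cs.get("state", {}).get("waiting", {}).get("reason")
            if reason in PULL_ERRORS:
                result.append((pod["metadata"]["name"], reason))
    return result


sample = json.dumps({"items": [
    {"metadata": {"name": "hello-node-deployment-699574469-t8cjb"},
     "status": {"containerStatuses": [{"state": {"running": {}}}]}},
    {"metadata": {"name": "hello-node-deployment-6ff6f5d986-6xfnl"},
     "status": {"containerStatuses": [{"state": {"waiting": {"reason": "ErrImagePull"}}}]}},
]})
print(pods_with_pull_errors(sample))
# [('hello-node-deployment-6ff6f5d986-6xfnl', 'ErrImagePull')]
```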

We'll use the rollout undo command:

$ kubectl rollout undo deployment/hello-node-deployment

deployment.apps/hello-node-deployment rolled back

$ kubectl get pods

NAME READY STATUS RESTARTS AGE


hello-node-deployment-699574469-6nn4l 1/1 Running 0 2m6s

hello-node-deployment-699574469-k7tk2 1/1 Running 0 13s

hello-node-deployment-699574469-rzsqc 1/1 Running 0 2m6s

hello-node-deployment-699574469-w7dmn 1/1 Running 0 2m6s

$ kubectl describe pods hello-node-deployment-699574469-6nn4l

Name: hello-node-deployment-699574469-6nn4l

Namespace: default

...

Status: Running

IP: 172.17.0.9

IPs:

IP: 172.17.0.9

Controlled By: ReplicaSet/hello-node-deployment-699574469

Containers:

hello-node:

Container ID: docker://a833a6b565e5df4d98cea8fddd0990d76367db6f53bed142911a87279b89be4a

Image: dockerbogo/echoserver:v2

...

$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node-deployment 4/4 4 4 5m48s
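READY 4/4 means all four desired replicas are ready again, so the rollback is complete. You can express the same "ready equals desired" test as a one-shot check on a single listing. This minimal sketch assumes the default kubectl get deployment columns shown above; deployment_ready is a hypothetical name.

```shell
# deployment_ready (hypothetical helper): reads `kubectl get deployment`
# output on stdin and succeeds only if the named Deployment reports READY n/n.
deployment_ready() {
  awk -v name="$1" '
    $1 == name {
      split($2, r, "/")   # READY column, e.g. "4/4" -> r[1]=4, r[2]=4
      found = 1
      ok = (r[1] == r[2] && r[2] > 0)
    }
    END { exit !(found && ok) }'
}

# Usage against a live cluster:
#   kubectl get deployment | deployment_ready hello-node-deployment && echo ready
```

Applied to the earlier 3/4 listing, the check fails. Applied to the 4/4 listing above, it succeeds.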

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization