Docker Compose - Hashicorp's Vault and Consul Part C (Consul Backend)

Continued from Docker Compose - Hashicorp's Vault and Consul Part B (EaaS, dynamic secrets, leases, and revocation).

So far, we've been using the Filesystem backend. It does not scale beyond a single server, so it cannot take advantage of Vault's high availability (HA). Fortunately, there are a number of other storage backends, such as the Consul backend, that are designed for distributed systems.

In this post, we'll set up Consul. It is a distributed service mesh to connect, secure, and configure services across any runtime platform and public or private cloud.

HashiCorp's Consul is made up of several components for discovering and configuring services in our infrastructure. It provides several key features:

- Service Discovery

- Health Checking

- KV Store

- Multi Datacenter

Of these features, this post uses only one: Consul as the KV storage backend for Vault.

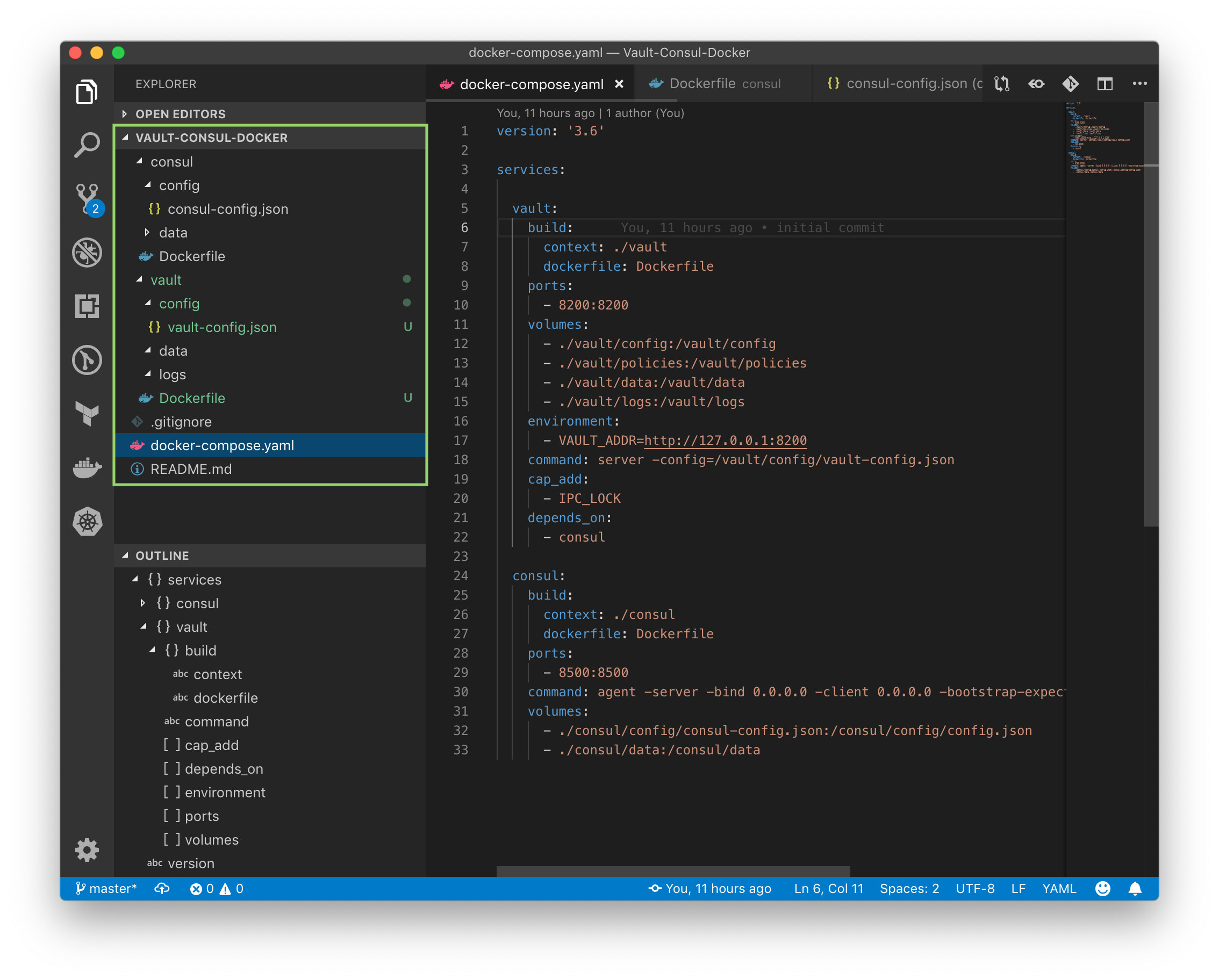

To set up Consul, update the docker-compose.yml file to add the consul service:

    version: '3.6'

    services:

      vault:
        build:
          context: ./vault
          dockerfile: Dockerfile
        ports:
          - 8200:8200
        volumes:
          - ./vault/config:/vault/config
          - ./vault/policies:/vault/policies
          - ./vault/data:/vault/data
          - ./vault/logs:/vault/logs
        environment:
          - VAULT_ADDR=http://127.0.0.1:8200
        command: server -config=/vault/config/vault-config.json
        cap_add:
          - IPC_LOCK
        depends_on:
          - consul

      consul:
        build:
          context: ./consul
          dockerfile: Dockerfile
        ports:
          - 8500:8500
        command: agent -server -bind 0.0.0.0 -client 0.0.0.0 -bootstrap-expect 1 -config-file=/consul/config/config.json
        volumes:
          - ./consul/config/consul-config.json:/consul/config/config.json
          - ./consul/data:/consul/data

Let's create a new directory within the project root called consul, and then add a new Dockerfile to that newly created directory:

    # base image
    FROM alpine:3.7

    # set consul version
    ENV CONSUL_VERSION 1.2.1

    # create a new directory
    RUN mkdir /consul

    # download dependencies
    RUN apk --no-cache add \
          bash \
          ca-certificates \
          wget

    # download and set up consul
    RUN wget --quiet --output-document=/tmp/consul.zip https://releases.hashicorp.com/consul/${CONSUL_VERSION}/consul_${CONSUL_VERSION}_linux_amd64.zip && \
        unzip /tmp/consul.zip -d /consul && \
        rm -f /tmp/consul.zip && \
        chmod +x /consul/consul

    # update PATH so that the consul binary in /consul is found
    ENV PATH="$PATH:/consul"

    # add the config file
    COPY ./config/consul-config.json /consul/config/config.json

    # expose ports
    EXPOSE 8300 8400 8500 8600

    # run consul
    ENTRYPOINT ["consul"]

consul/config/consul-config.json:

    {
      "datacenter": "localhost",
      "data_dir": "/consul/data",
      "log_level": "DEBUG",
      "server": true,
      "ui": true,
      "ports": {
        "dns": 53
      }
    }

More configuration options are described in the Consul documentation.

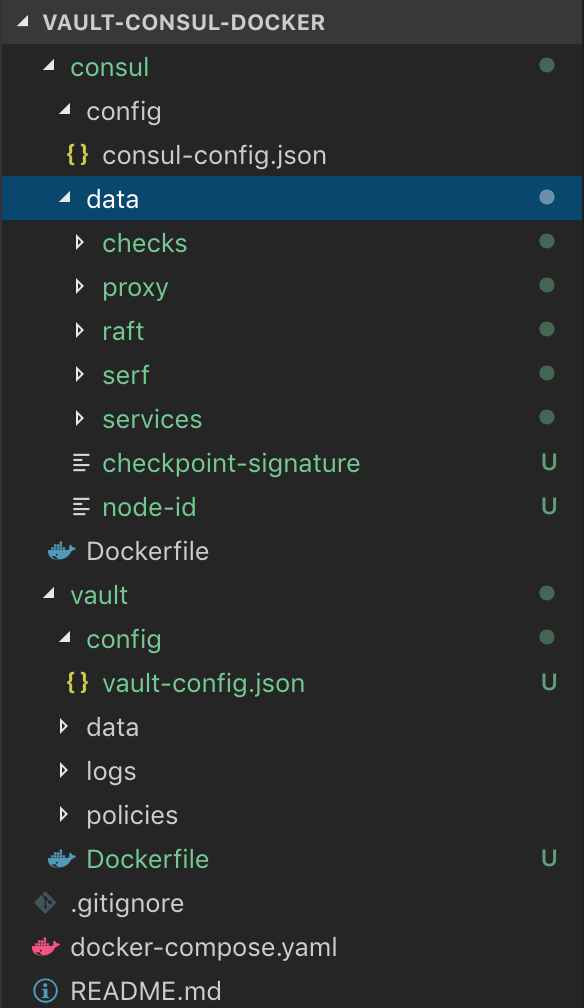

The consul directory looks like this:

    ../consul
    ├── Dockerfile
    ├── config
    │   └── consul-config.json
    └── data

Exit out of the bash session and bring down the container we've been running:

    $ docker-compose down
    Stopping vault-consul-docker_vault_1 ... done
    Removing vault-consul-docker_vault_1 ... done
    Removing network vault-consul-docker_default

Then update the Vault config file (vault/config/vault-config.json):

    {
      "backend": {
        "consul": {
          "address": "consul:8500",
          "path": "vault/"
        }
      },
      "listener": {
        "tcp": {
          "address": "0.0.0.0:8200",
          "tls_disable": 1
        }
      },
      "ui": true
    }

So, now we're using the Consul backend instead of the Filesystem.

We used the name of the service, consul, as part of the address. The path key defines the path in Consul's key/value store where the Vault data will be stored.
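Once the containers are up and Vault has been initialized, one way to see where Vault's data lands is to list the keys under that path through Consul's KV HTTP API. The following is a minimal sketch, not part of the original setup: it assumes Consul is reachable from the host at localhost:8500 (the port published in docker-compose.yml), and the helper names `kv_keys_url` and `list_vault_keys` are made up for illustration.

```python
import json
import urllib.request


def kv_keys_url(consul_addr: str, path: str) -> str:
    """Build the Consul KV URL that lists every key under `path`."""
    prefix = path.strip("/")
    # `keys=true` asks Consul for key names only (no values or metadata).
    return f"http://{consul_addr}/v1/kv/{prefix}/?keys=true"


def list_vault_keys(consul_addr: str = "localhost:8500", path: str = "vault/") -> list:
    """Fetch the key names Vault has written under its storage path.

    Needs the containers to be running; Consul answers with HTTP 404
    (raised here as urllib.error.HTTPError) while the prefix is still empty.
    """
    with urllib.request.urlopen(kv_keys_url(consul_addr, path), timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Only the URL builder runs here; list_vault_keys() talks to a live Consul.
print(kv_keys_url("localhost:8500", "vault/"))
```

The values stored under these keys are encrypted by Vault, so listing them from Consul shows only that the data exists, not what it contains.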

We need to clear out all files and folders within the vault/data directory to remove the Filesystem backend.

We now have a new Dockerfile and config file for Consul, and an updated config for Vault.

Let's build the new images and spin up the containers:

    $ docker-compose up -d --build
    ...
    Creating vault-consul-docker_consul_1 ... done
    Creating vault-consul-docker_vault_1 ... done

    $ docker ps
    CONTAINER ID   IMAGE                        COMMAND                  CREATED              STATUS              PORTS                                                  NAMES
    0f0cc416ae2d   vault-consul-docker_vault    "vault server -confi…"   About a minute ago   Up About a minute   0.0.0.0:8200->8200/tcp                                 vault-consul-docker_vault_1
    006a2fcaf6bf   vault-consul-docker_consul   "consul agent -serve…"   About a minute ago   Up About a minute   8300/tcp, 8400/tcp, 8600/tcp, 0.0.0.0:8500->8500/tcp   vault-consul-docker_consul_1

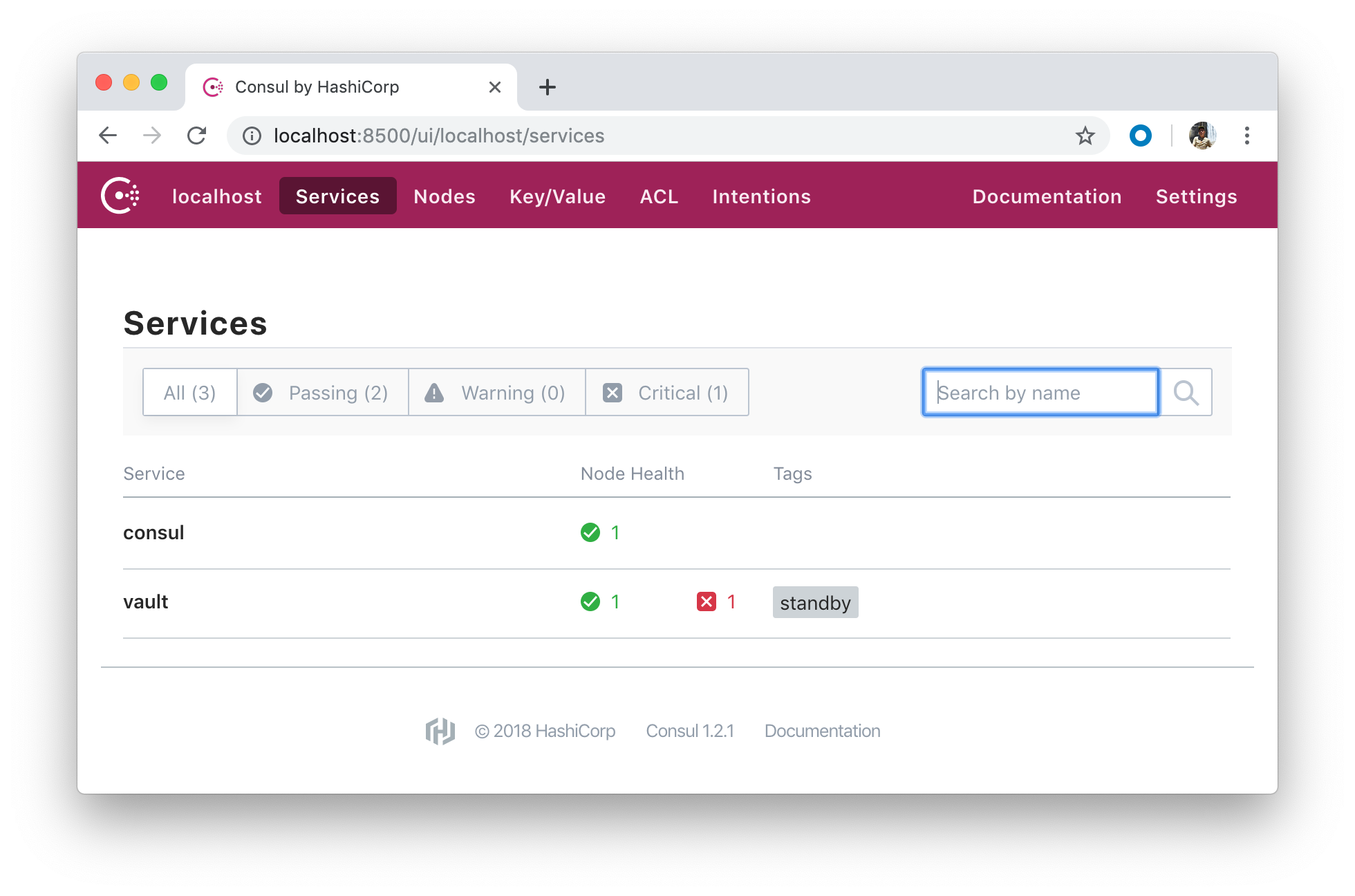

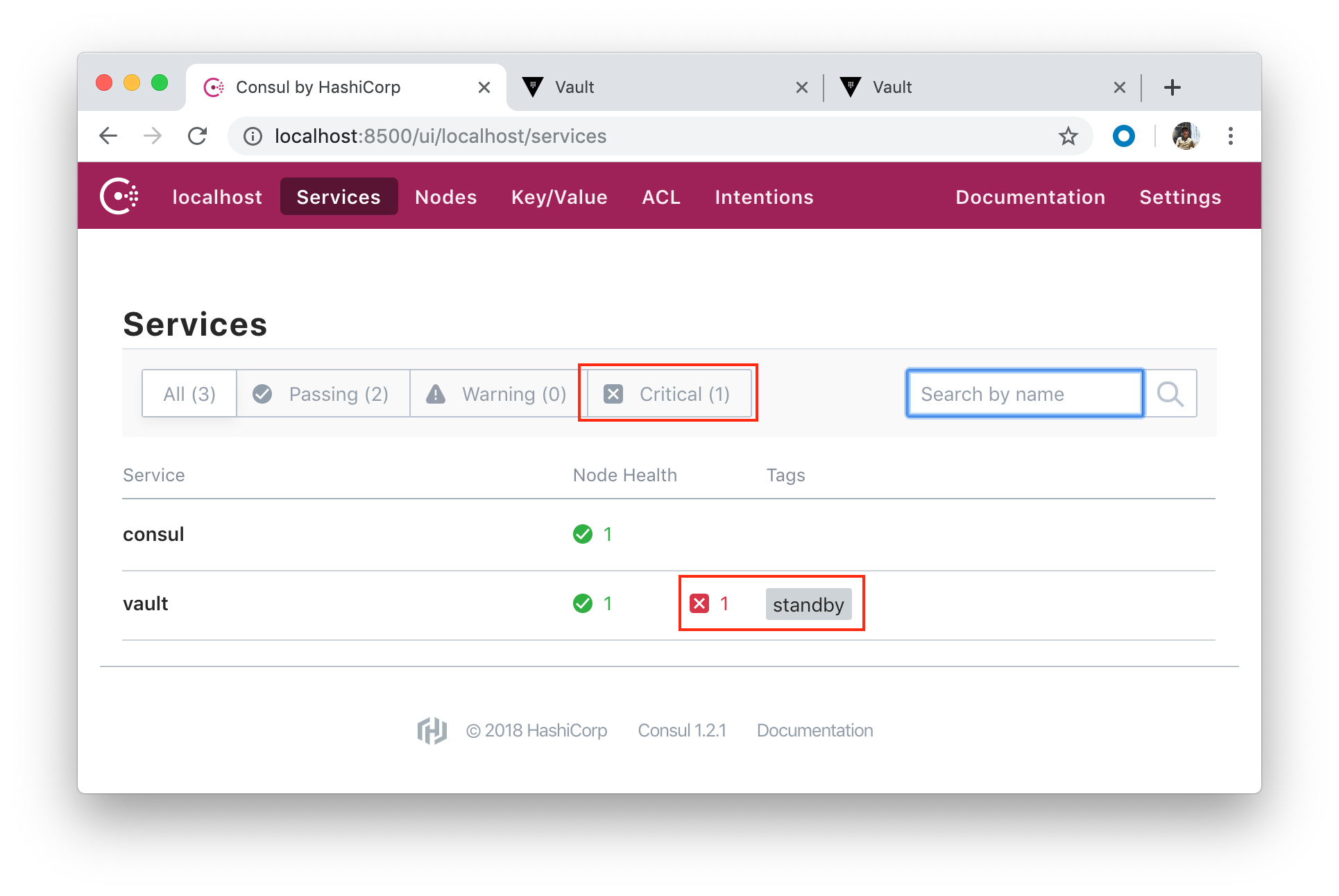

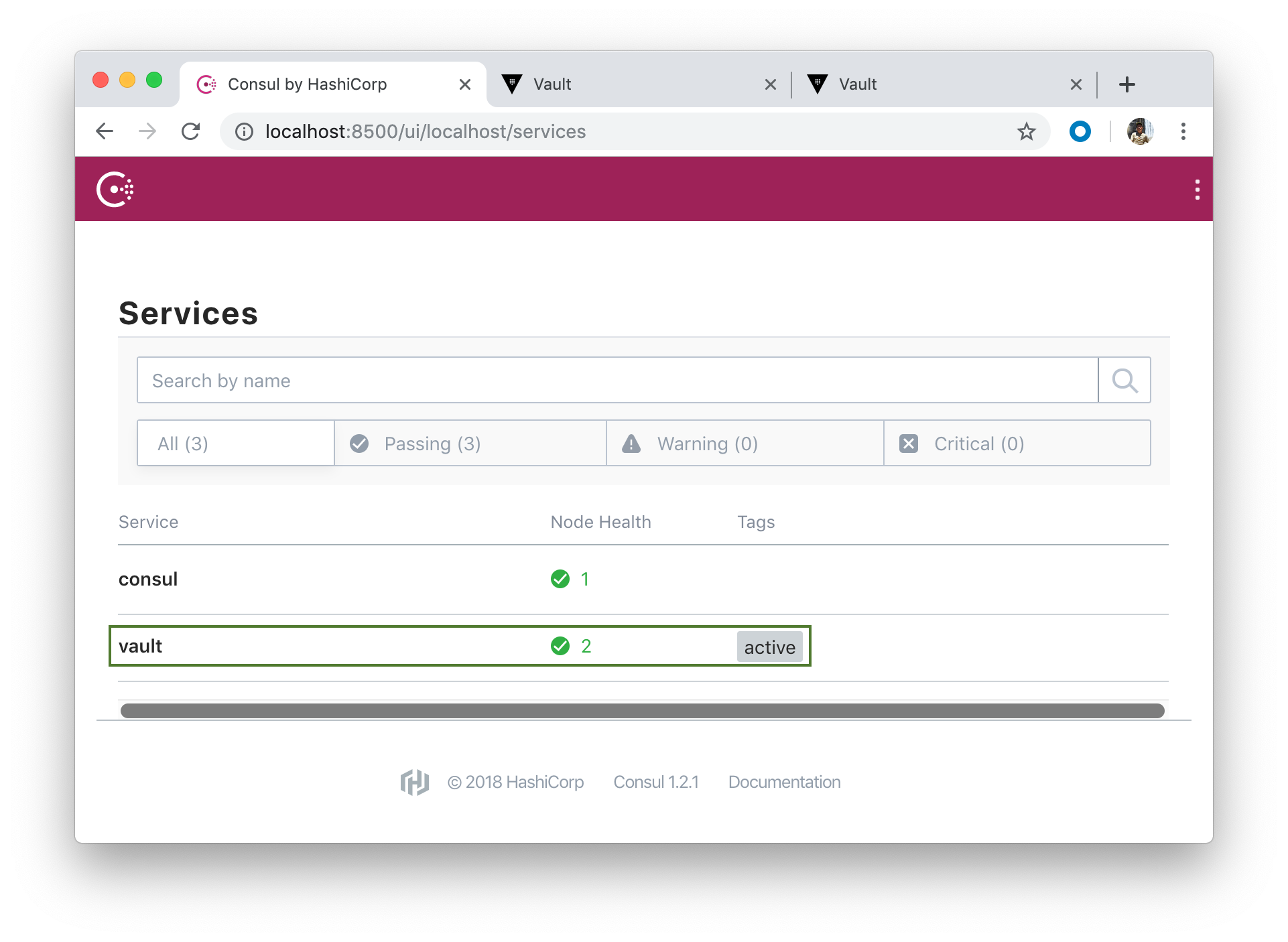

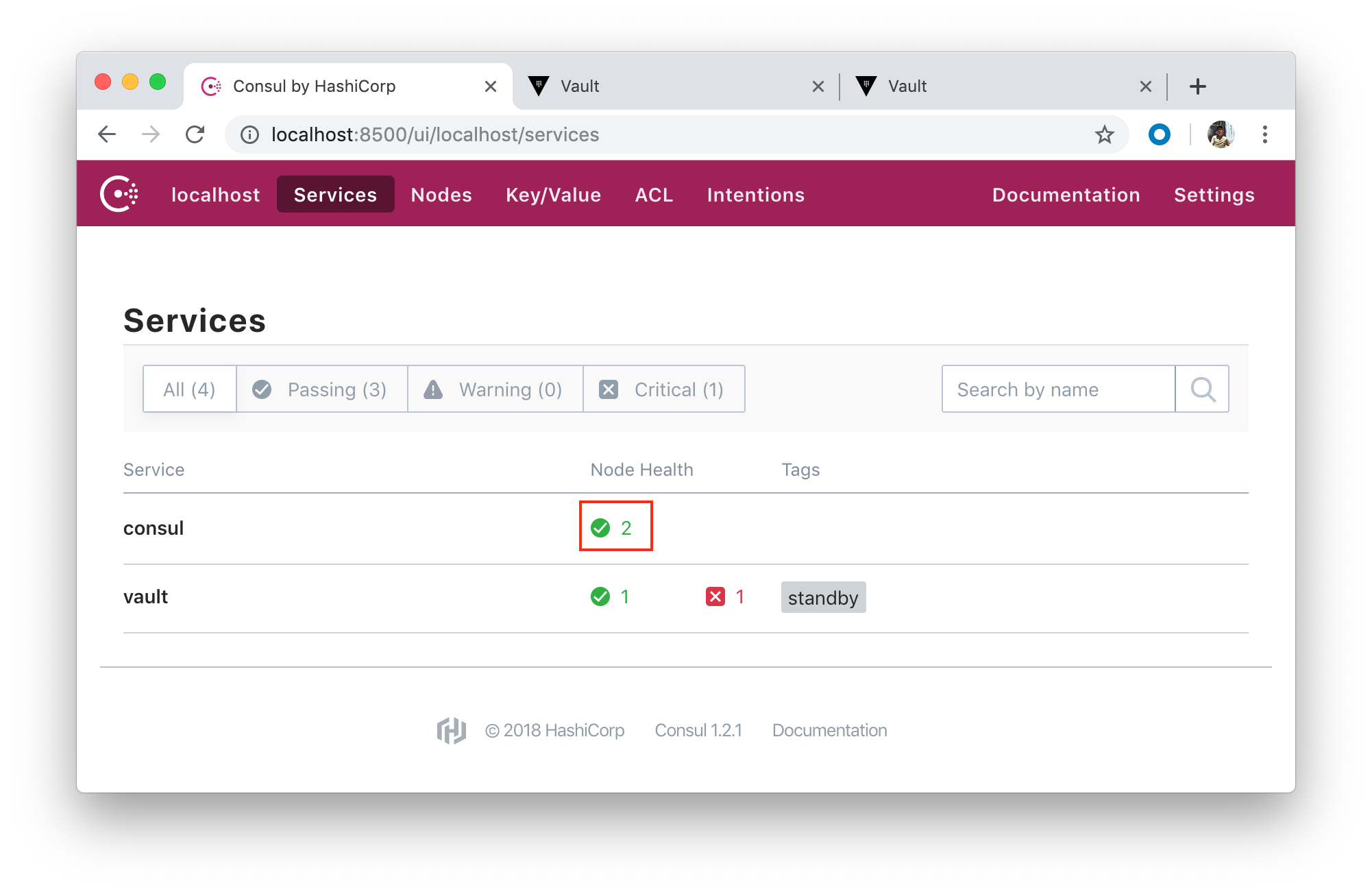

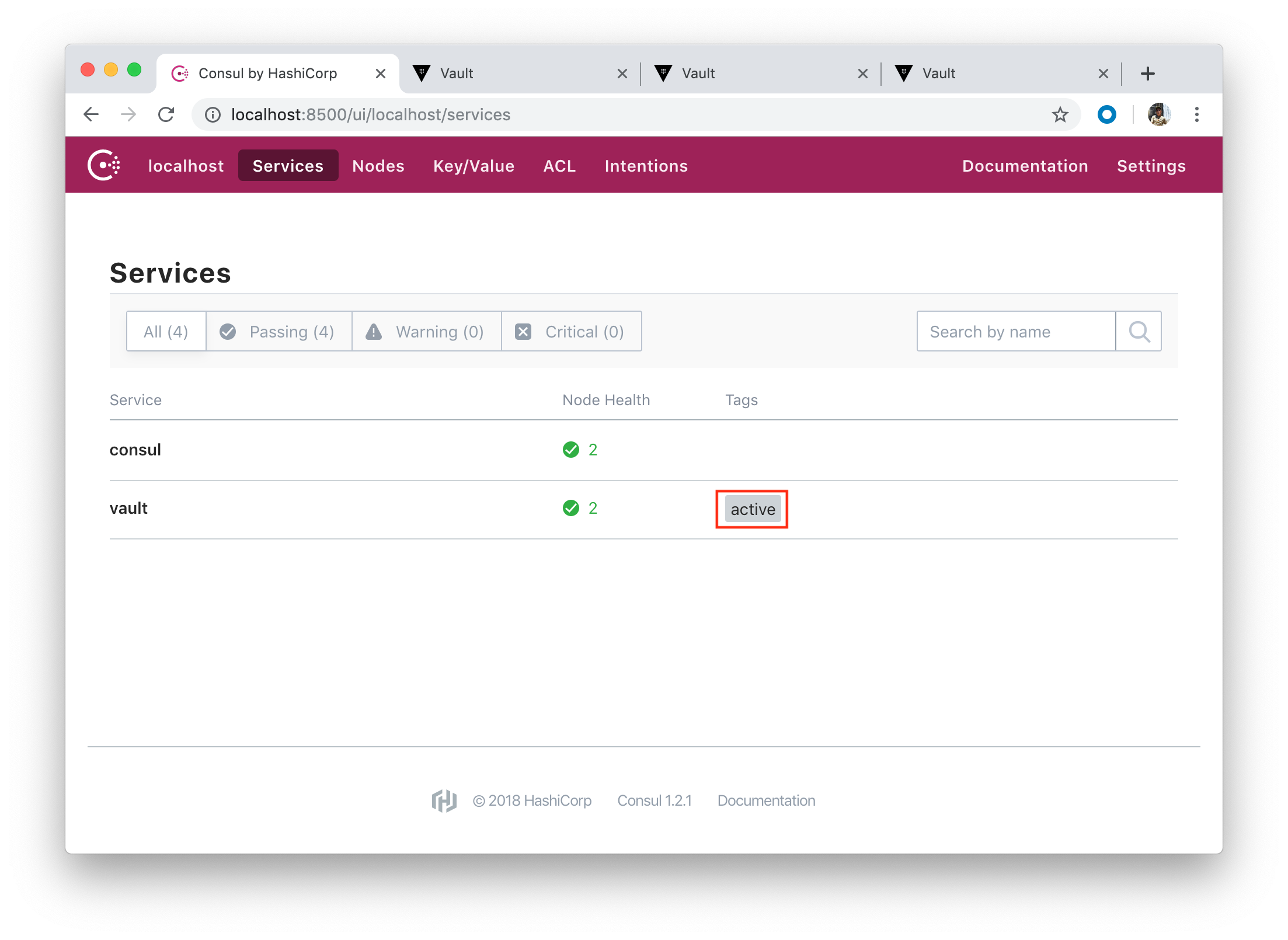

Check http://localhost:8500/ui:

As we've done before, let's create a new bash session in the Vault container:

    $ docker-compose exec vault bash
    bash-4.4#

The vault operator init command initializes a Vault server. Initialization is the process by which Vault's storage backend is prepared to receive data.

During initialization, Vault generates an in-memory master key and applies Shamir's secret sharing algorithm to split that master key into a configurable number of key shares, such that a configurable subset of those key shares must come together to regenerate the master key. These key shares are often called unseal keys in Vault's documentation.

Note that this command cannot be run against an already-initialized Vault cluster.

    bash-4.4# vault operator init
    Unseal Key 1: RDmgJrpou9uEoGhCHuul+yaUVjeVq0qqS0bjZNvSJjBd
    Unseal Key 2: Typ0pcGCF8FiqReFztcoJHdpPavgQtFiyxDuvdkBw+W7
    Unseal Key 3: ZH7dr8kHKlZfYdV49404UfMzSvIqAfxtWoXM8ROxMzkp
    Unseal Key 4: MhaAKFPbZ20h5y1N6l/g453Izi6XdbcOVEWHK2kvMIMc
    Unseal Key 5: RKm7E9q2UVyD7u7JliofmTwknvg/kRGwobUjmi86nGXd

    Initial Root Token: 16336cfc-a13c-c288-ee7b-fad06f15475e

    Vault initialized with 5 key shares and a key threshold of 3. Please securely
    distribute the key shares printed above. When the Vault is re-sealed,
    restarted, or stopped, you must supply at least 3 of these keys to unseal it
    before it can start servicing requests.

    Vault does not store the generated master key. Without at least 3 key to
    reconstruct the master key, Vault will remain permanently sealed!

    It is possible to generate new unseal keys, provided you have a quorum of
    existing unseal keys shares. See "vault operator rekey" for more information.
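To make the "5 key shares, threshold of 3" idea concrete, here is a toy sketch of Shamir's secret sharing. It is only an illustration of the technique under simplifying assumptions: the secret is a single integer and the arithmetic is done modulo a large prime. Vault's real implementation differs in detail (it works on the key's bytes), and the function names below are hypothetical.

```python
import secrets

# A prime larger than any secret we split; 2**127 - 1 is a Mersenne prime.
PRIME = 2**127 - 1


def split_secret(secret: int, shares: int, threshold: int) -> list:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= shares:
        raise ValueError("threshold must be between 1 and the number of shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        result = 0
        for coeff in reversed(coeffs):  # Horner's rule
            result = (result * x + coeff) % PRIME
        return result

    # Each share is one point (x, f(x)) on the polynomial, with x = 1..shares.
    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points: list) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to get the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

For example, `recover_secret(split_secret(42, shares=5, threshold=3)[:3])` returns the original secret from any three of the five shares, while fewer than three shares do not determine the polynomial and so give no usable information about it.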

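The vault operator unseal command allows the user to provide a portion of the master key to unseal a Vault server. Vault starts in a sealed state, so with a key threshold of 3 we run the command three times, each time with a different unseal key: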

    bash-4.4# vault operator unseal RKm7E9q2UVyD7u7JliofmTwknvg/kRGwobUjmi86nGXd
    Key                    Value
    ---                    -----
    Seal Type              shamir
    Sealed                 false
    Total Shares           5
    Threshold              3
    Version                0.10.3
    Cluster Name           vault-cluster-49bce7b8
    Cluster ID             fb2f7207-6e41-bd12-abe7-ca18b256f001
    HA Enabled             true
    HA Cluster             n/a
    HA Mode                standby
    Active Node Address

    bash-4.4# vault operator unseal MhaAKFPbZ20h5y1N6l/g453Izi6XdbcOVEWHK2kvMIMc
    Key             Value
    ---             -----
    Seal Type       shamir
    Sealed          false
    Total Shares    5
    Threshold       3
    Version         0.10.3
    Cluster Name    vault-cluster-49bce7b8
    Cluster ID      fb2f7207-6e41-bd12-abe7-ca18b256f001
    HA Enabled      true
    HA Cluster      https://172.22.0.2:8201
    HA Mode         active

    bash-4.4# vault operator unseal ZH7dr8kHKlZfYdV49404UfMzSvIqAfxtWoXM8ROxMzkp
    Key             Value
    ---             -----
    Seal Type       shamir
    Sealed          false
    Total Shares    5
    Threshold       3
    Version         0.10.3
    Cluster Name    vault-cluster-49bce7b8
    Cluster ID      fb2f7207-6e41-bd12-abe7-ca18b256f001
    HA Enabled      true
    HA Cluster      https://172.22.0.2:8201
    HA Mode         active
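The same seal information is also exposed over Vault's HTTP API at /v1/sys/seal-status, which can be handy for scripting. Below is a small sketch that turns such a response into a one-line summary. It assumes Vault is reachable at http://localhost:8200 as published in docker-compose.yml; the helper names and the sample response are hand-written for illustration and were not captured from this setup.

```python
import json
import urllib.request


def describe_seal_status(status: dict) -> str:
    """Summarize a seal-status response: `t` is the threshold, `n` the share count."""
    if status["sealed"]:
        return (f"sealed: {status['progress']} of {status['t']} "
                "required key shares provided")
    return f"unsealed (threshold {status['t']} of {status['n']} shares)"


def fetch_seal_status(vault_addr: str = "http://localhost:8200") -> dict:
    """Query a running Vault server; this endpoint does not require a token."""
    with urllib.request.urlopen(f"{vault_addr}/v1/sys/seal-status", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Hand-written sample: what the status could look like after one key share.
sample = {"sealed": True, "t": 3, "n": 5, "progress": 1}
print(describe_seal_status(sample))
```

With the containers running, `describe_seal_status(fetch_seal_status())` gives the live state instead of the sample.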

    bash-4.4# vault login
    Token (will be hidden):
    Success! You are now authenticated. The token information displayed below
    is already stored in the token helper. You do NOT need to run "vault login"
    again. Future Vault requests will automatically use this token.

    Key                  Value
    ---                  -----
    token                16336cfc-a13c-c288-ee7b-fad06f15475e
    token_accessor       9edd90cb-0eb5-0ef5-2296-1f32a014f870
    token_duration       ∞
    token_renewable      false
    token_policies       ["root"]
    identity_policies    []
    policies             ["root"]

The vault login command authenticates users or machines to Vault using the provided arguments. A successful authentication results in a Vault token - conceptually similar to a session token on a website. By default, this token is cached on the local machine for future requests.

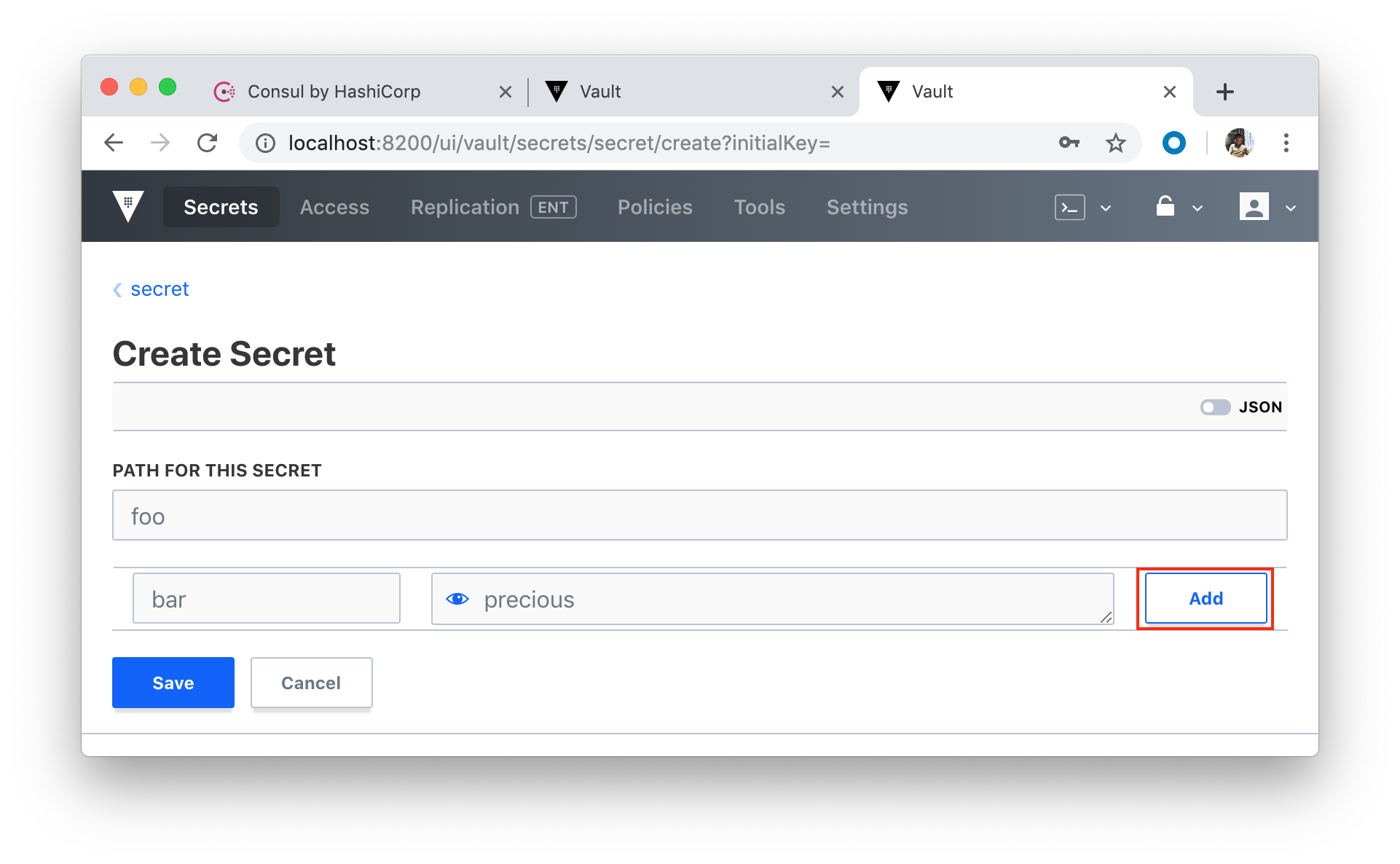

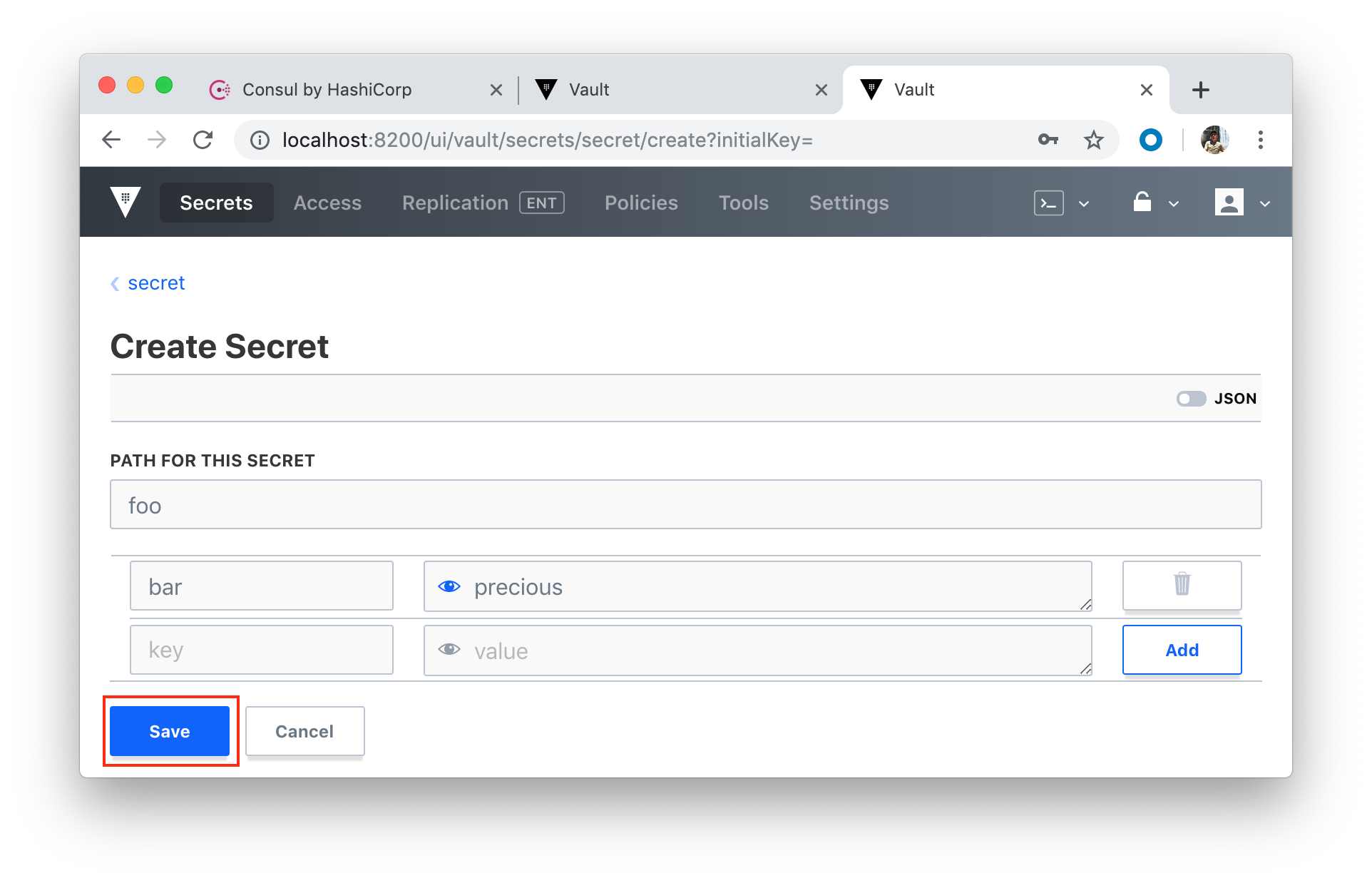

Let's add a new static secret:

    bash-4.4# vault kv put secret/foo bar=precious
    Success! Data written to: secret/foo

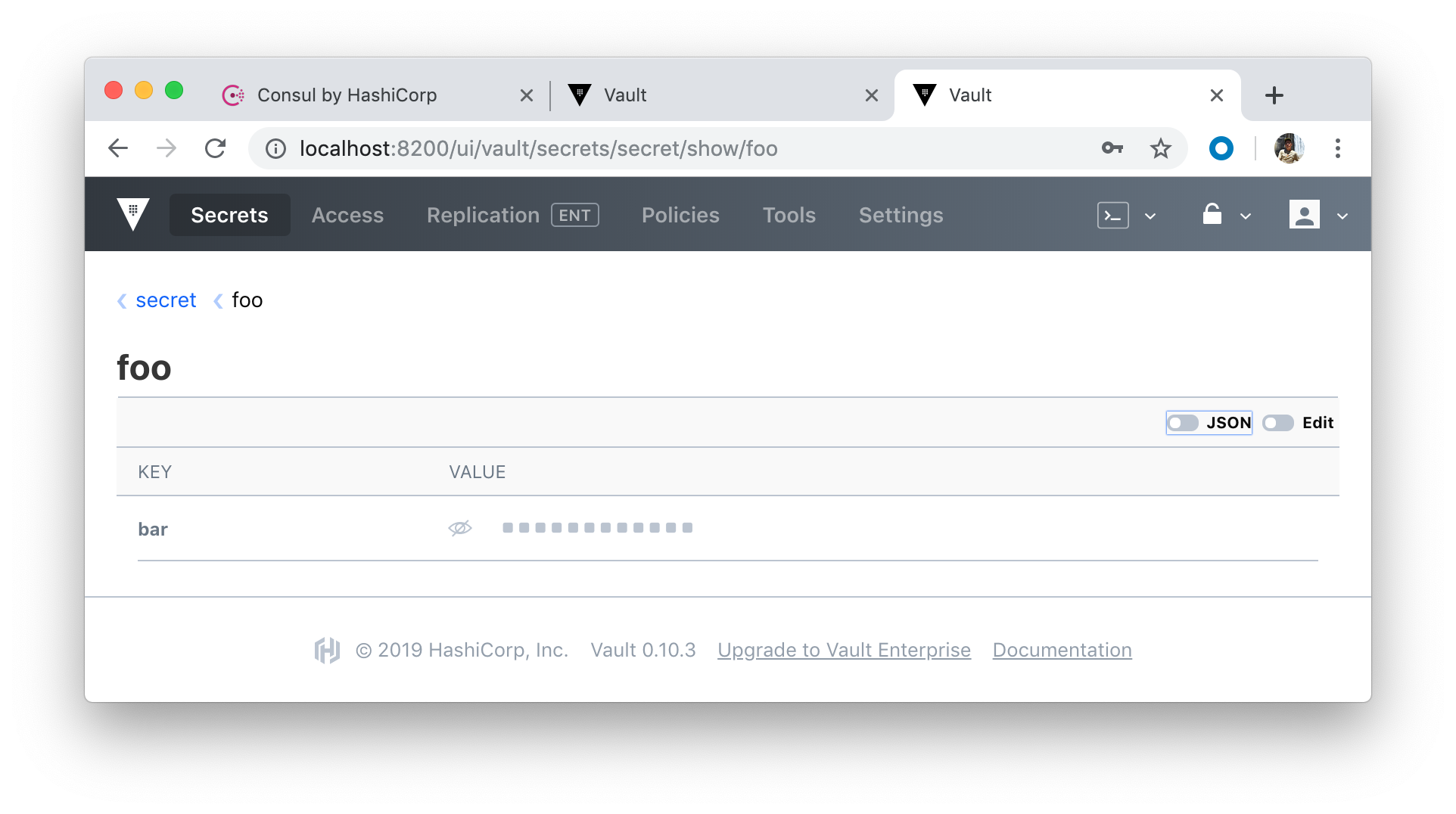

To read it back:

    bash-4.4# vault kv get secret/foo
    === Data ===
    Key    Value
    ---    -----
    bar    precious
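The CLI is a thin wrapper around Vault's HTTP API, so the same read can be issued from code by sending the token in the X-Vault-Token header. This is a hedged sketch rather than the tutorial's own method: it assumes Vault is reachable at http://localhost:8200 and that secret/ is a version 1 KV mount (on a version 2 mount the path would be secret/data/foo and the payload is nested one level deeper). The helper names and the placeholder token are hypothetical.

```python
import json
import urllib.request


def build_read_request(vault_addr: str, token: str, secret_path: str) -> urllib.request.Request:
    """Build a GET request for a secret, authenticated with a Vault token."""
    url = f"{vault_addr.rstrip('/')}/v1/{secret_path.lstrip('/')}"
    return urllib.request.Request(url, headers={"X-Vault-Token": token}, method="GET")


def read_secret(vault_addr: str, token: str, secret_path: str) -> dict:
    """Send the request to a running, unsealed Vault and return the secret's data."""
    request = build_read_request(vault_addr, token, secret_path)
    with urllib.request.urlopen(request, timeout=5) as resp:
        # On a KV version 1 mount the key/value pairs sit directly under "data".
        return json.loads(resp.read().decode("utf-8"))["data"]


# Only the request is built here; read_secret() needs the containers running.
request = build_read_request("http://localhost:8200", "<your-token>", "secret/foo")
print(request.get_method(), request.full_url)
```

In practice the token would come from the environment or a file rather than being written into the script.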

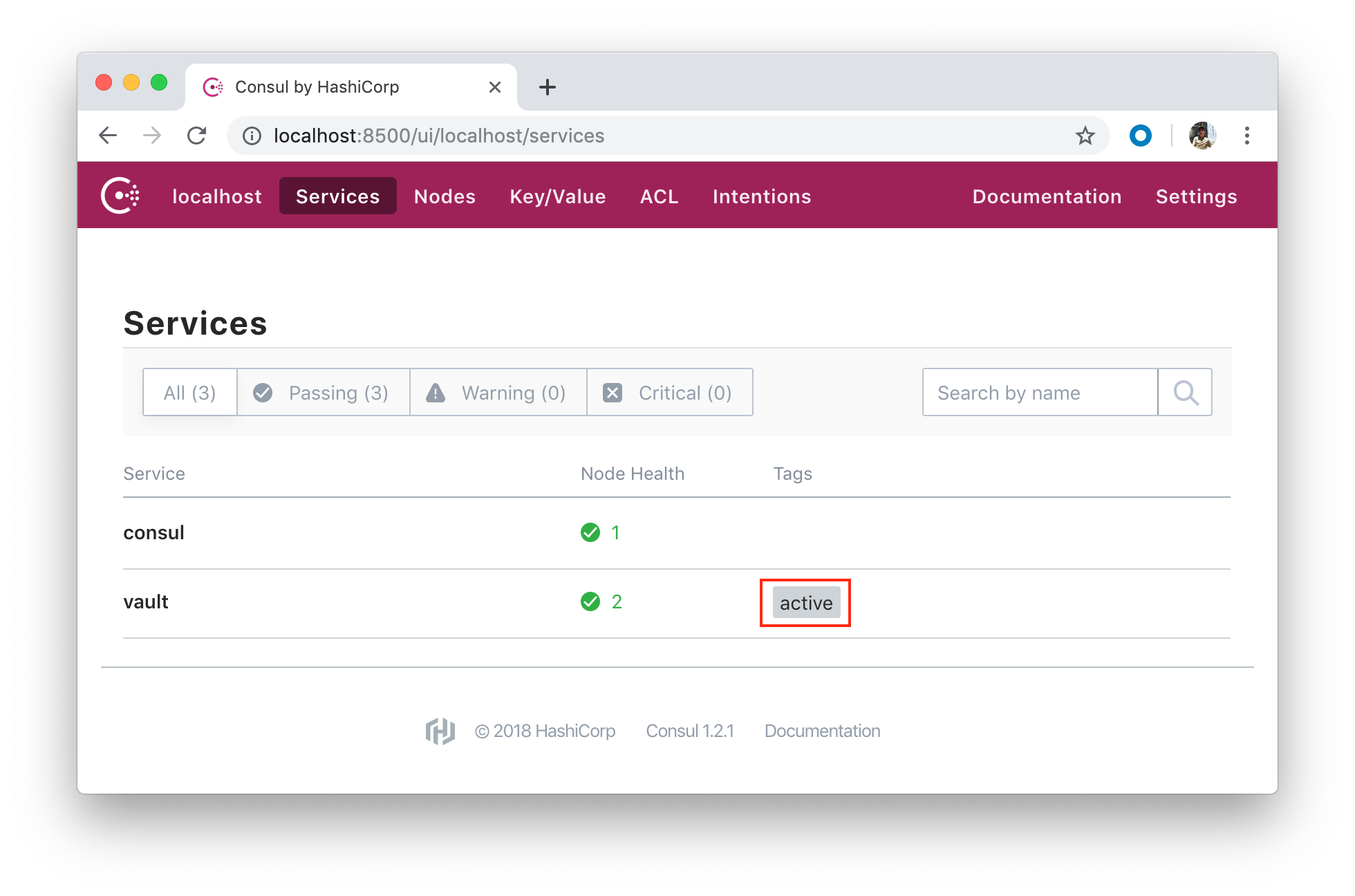

One of our Vault server nodes (though we have only one) successfully grabbed a lock within the data store and became the active node:

If we had more nodes (say, three in total), all the others would become standby nodes.
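The active/standby split can be pictured with a toy model: whichever node takes the lock first is active, and the rest stand by until the lock is released. The sketch below uses an in-memory object in place of the data store and is only a conceptual illustration; it does not reproduce how Vault and Consul actually implement the lock, and all names in it are made up.

```python
class LockStore:
    """In-memory stand-in for the shared data store that holds the HA lock."""

    def __init__(self):
        self.holder = None

    def try_acquire(self, node: str) -> bool:
        """Take the lock if it is free; report whether `node` holds it afterwards."""
        if self.holder is None:
            self.holder = node
        return self.holder == node

    def release(self, node: str) -> None:
        """Give the lock up, for example when the active node is sealed or stops."""
        if self.holder == node:
            self.holder = None


def elect(store: LockStore, nodes: list) -> dict:
    """Let each node try for the lock in turn and record the role it ends up with."""
    return {node: "active" if store.try_acquire(node) else "standby" for node in nodes}


store = LockStore()
print(elect(store, ["vault-1", "vault-2", "vault-3"]))
```

If the active node releases the lock, calling `elect` again with the remaining nodes promotes the first of them, which mirrors a standby taking over.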

To test the UI, exit out of the bash session and bring down the containers we've been running:

    $ docker-compose down
    Stopping vault-consul-docker_vault_1 ... done
    Stopping vault-consul-docker_consul_1 ... done
    Removing vault-consul-docker_vault_1 ... done
    Removing vault-consul-docker_consul_1 ... done
    Removing network vault-consul-docker_default

We also need to undo what we did with the CLI in the previous section: remove the folders and files in consul/data.
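Since this cleanup is repeated several times in this post, it can be scripted. Here is a minimal sketch of a helper (the name `clear_directory` is made up) that empties a directory while keeping the directory itself, which matters because docker-compose.yml bind-mounts it into the container.

```python
import shutil
from pathlib import Path


def clear_directory(directory: str) -> int:
    """Delete everything inside `directory` but keep the directory itself.

    Returns the number of top-level entries that were removed.
    """
    removed = 0
    for entry in Path(directory).iterdir():
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)  # remove a sub-directory and its contents
        else:
            entry.unlink()  # remove a plain file or a symlink
        removed += 1
    return removed
```

From the project root, `clear_directory("consul/data")` would reset Consul's state. Run it only while the containers are down, and note that it permanently deletes the stored data.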

Let's build the new images and spin up the containers again:

    $ docker-compose up -d --build
    ...
    Creating vault-consul-docker_consul_1 ... done
    Creating vault-consul-docker_vault_1 ... done

Navigate to the Vault UI at http://localhost:8200/ui:

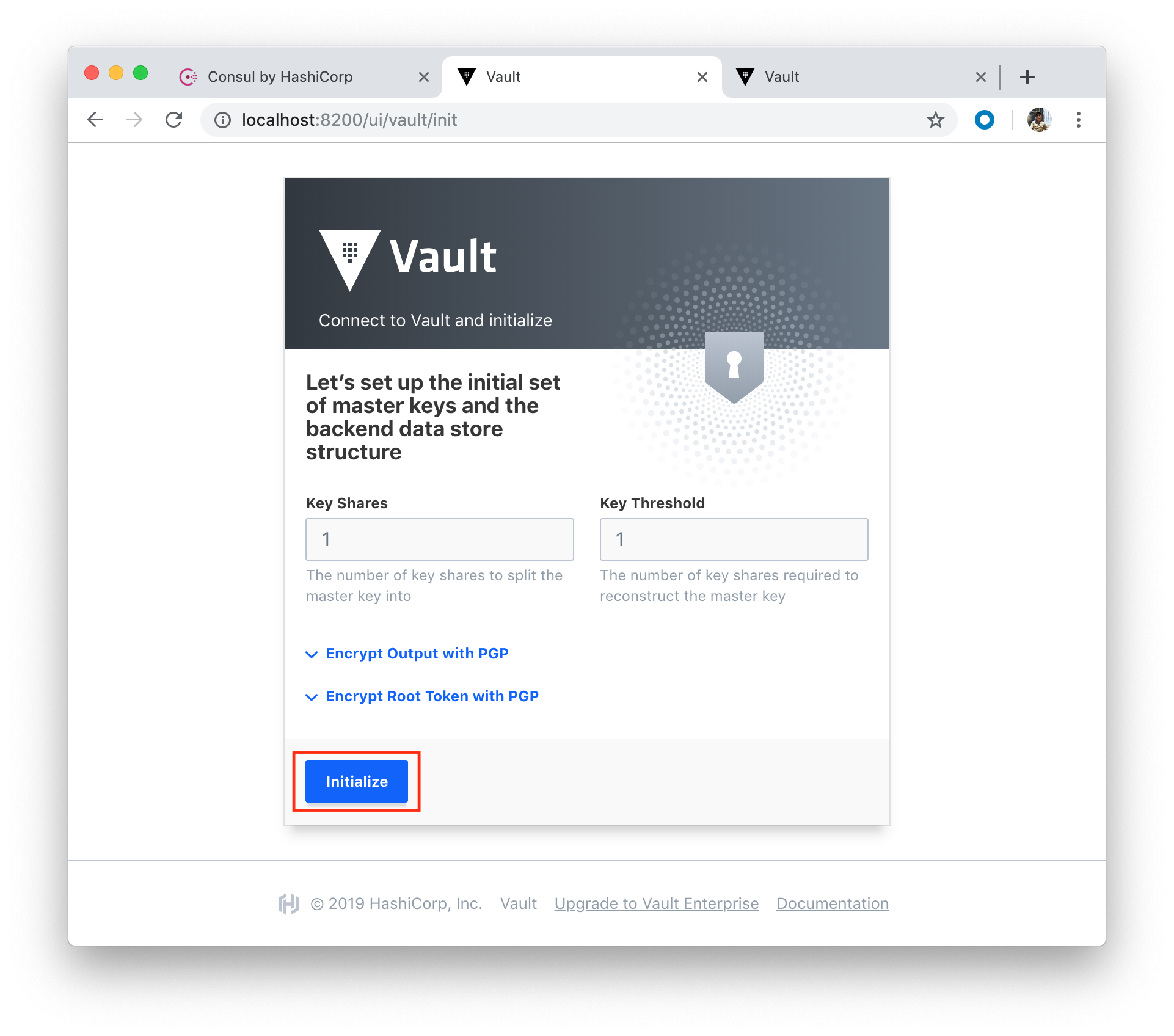

Click the "Initialize" button:

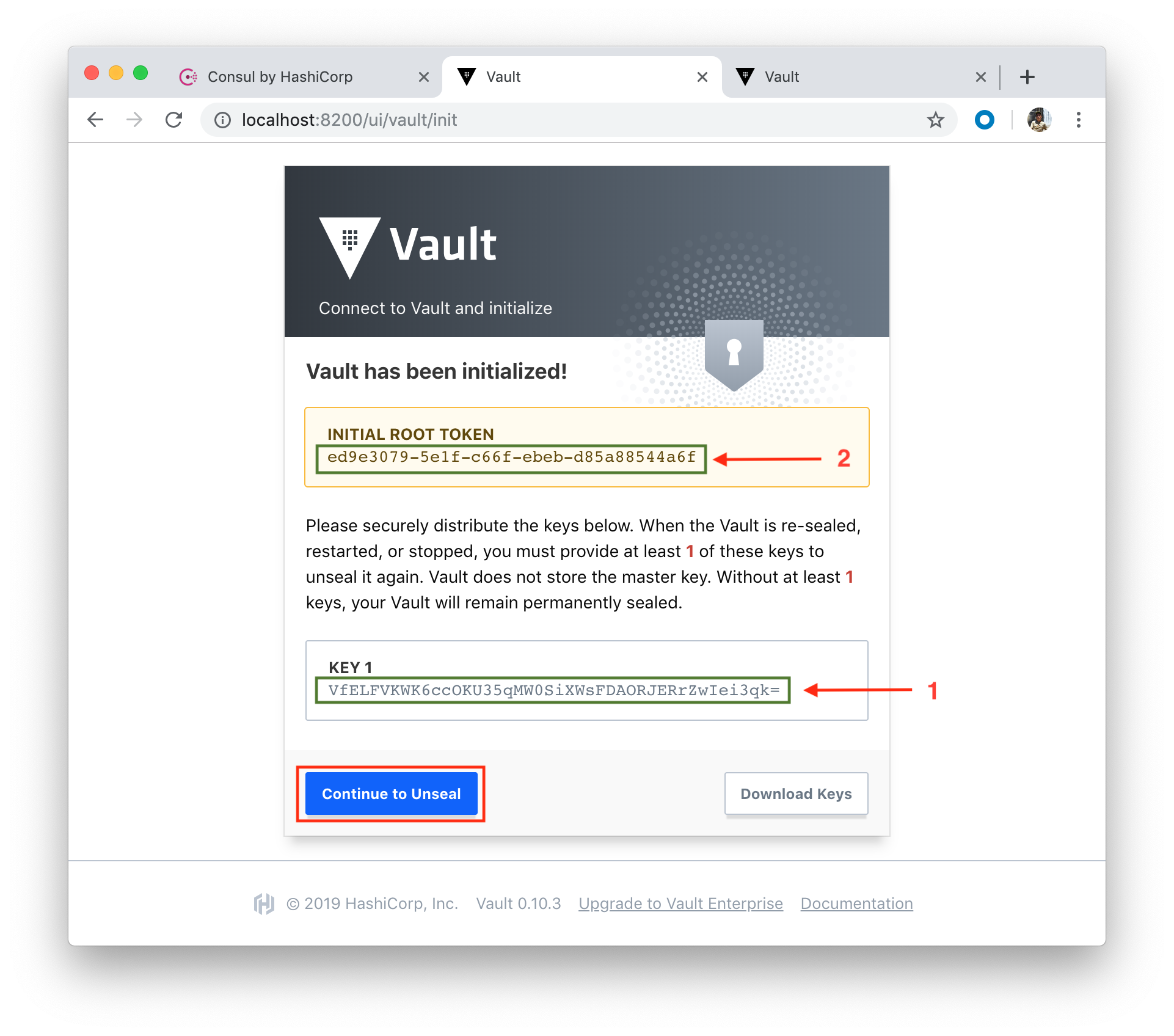

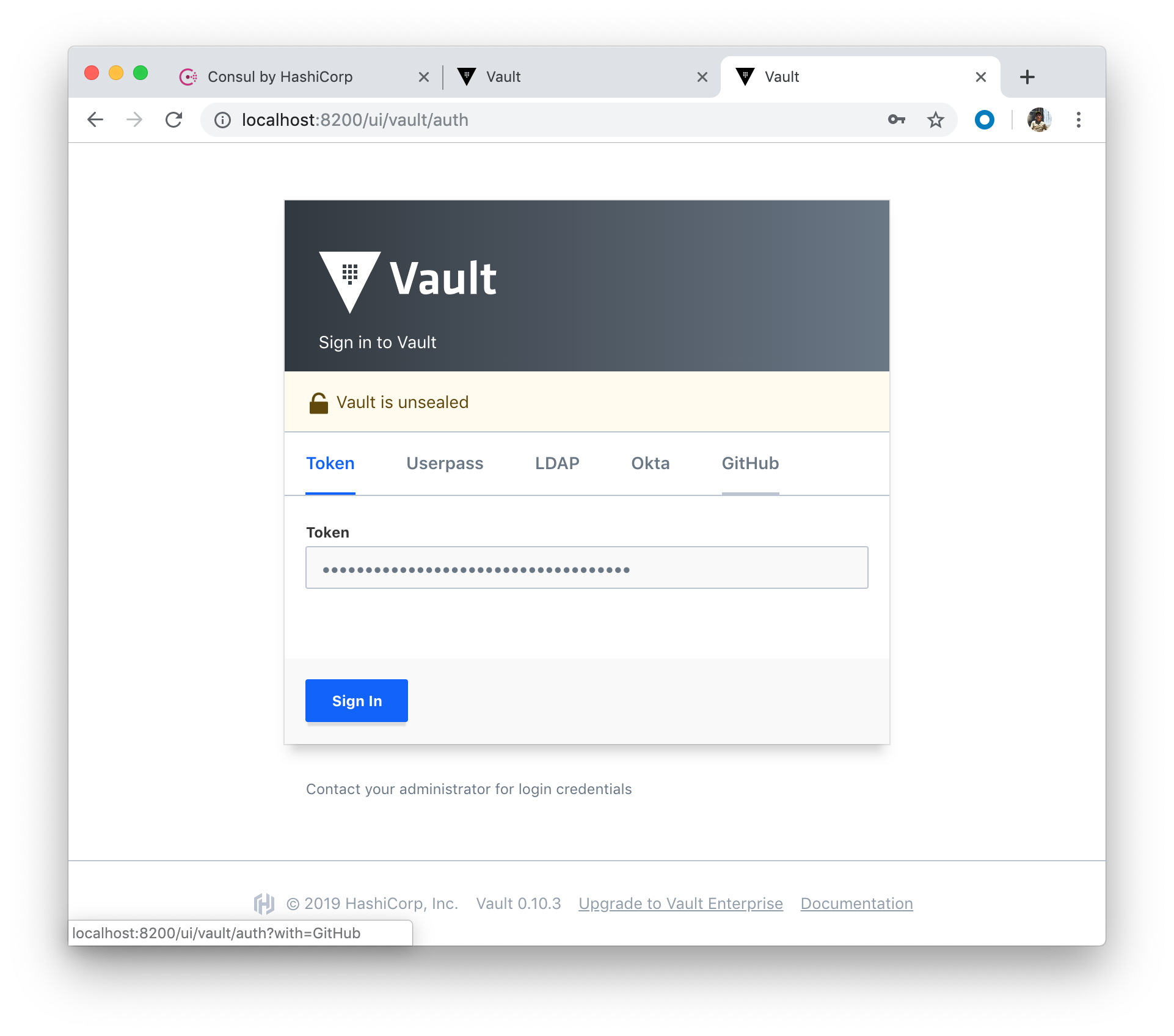

Copy the key and paste it into our Vault in the 3rd tab:

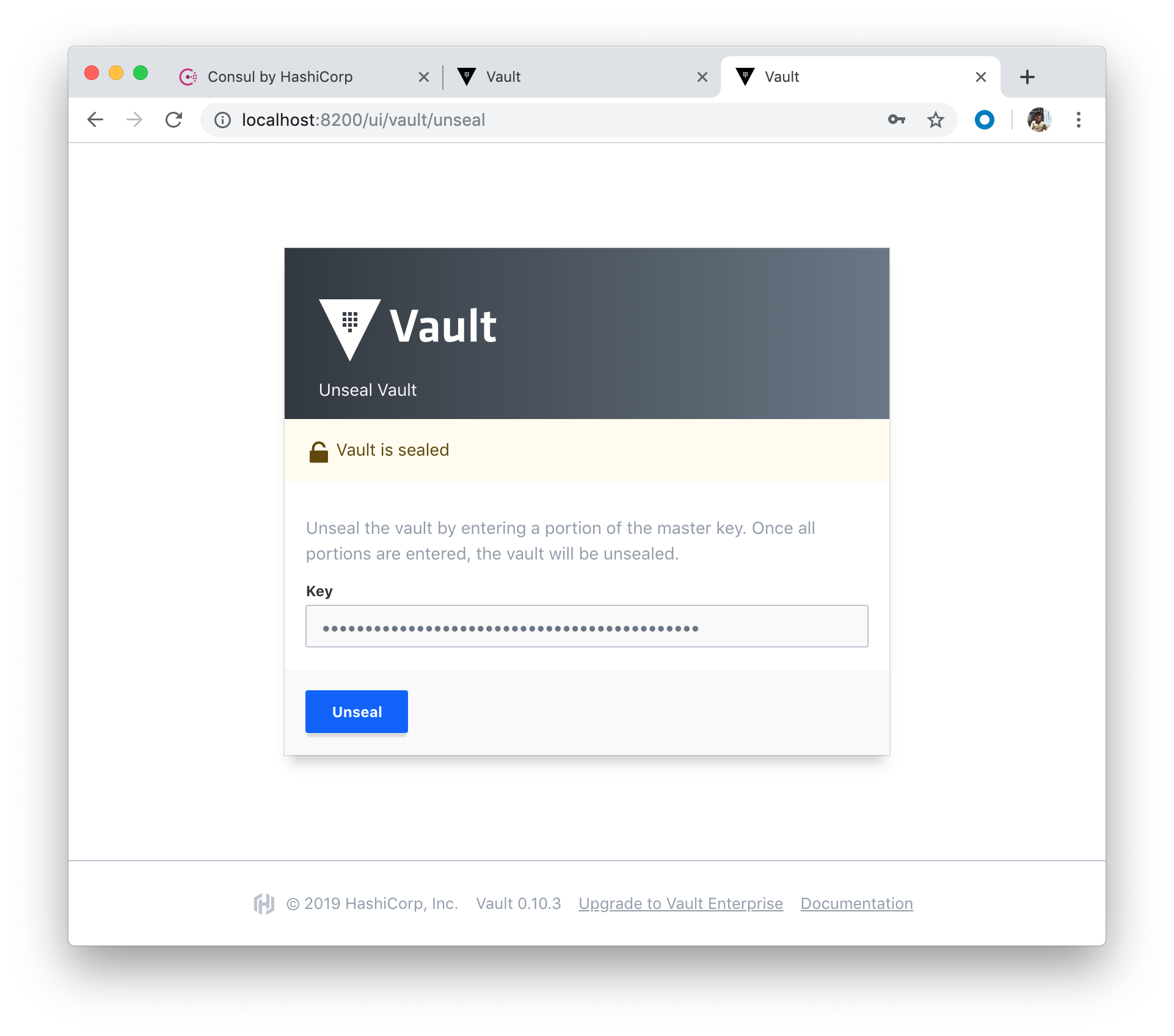

Click the "Unseal" button:

Copy the token, paste it, and click the "Sign In" button:

We can see that our Vault node successfully grabbed a lock within the data store and became the active node:

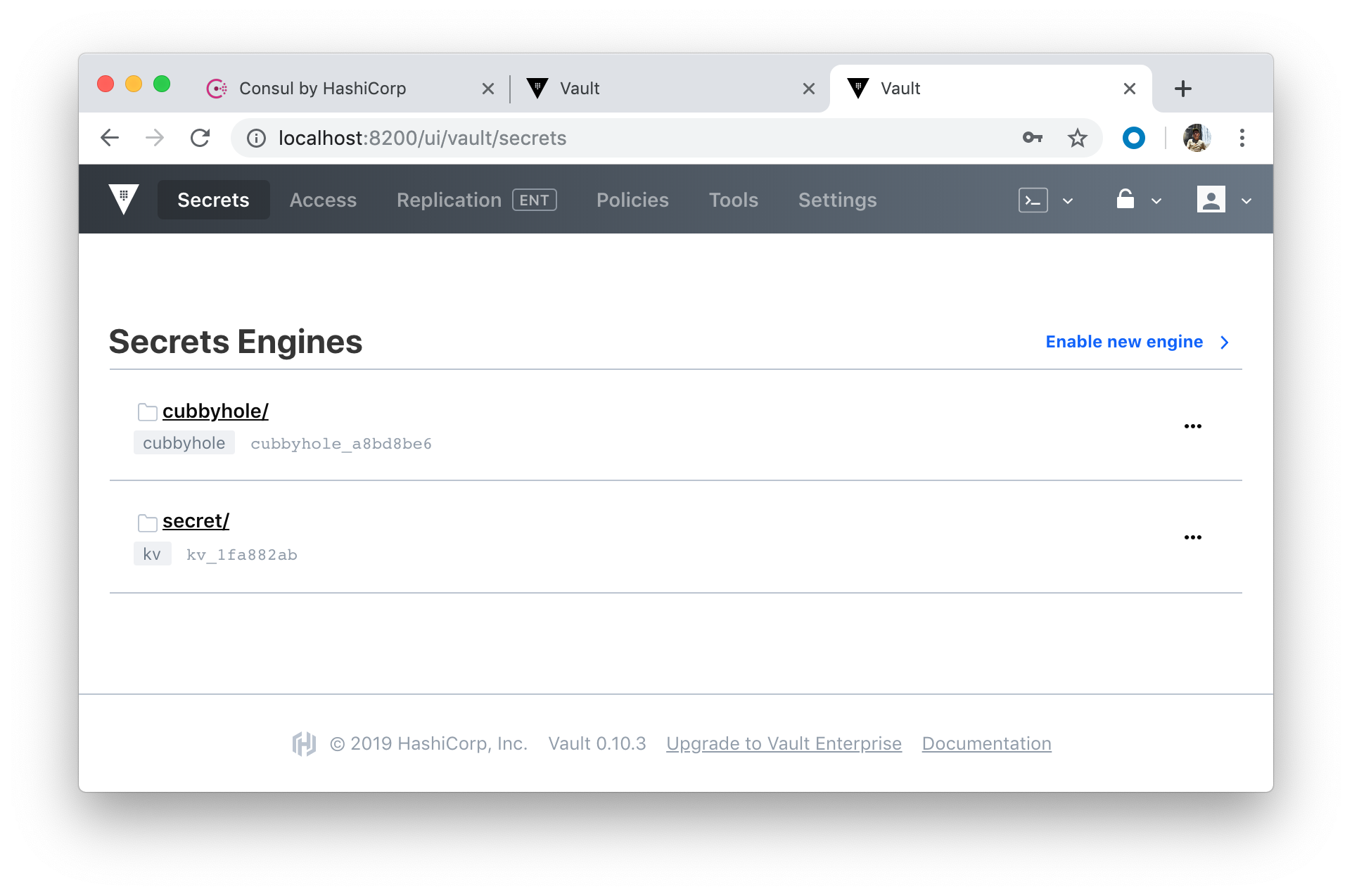

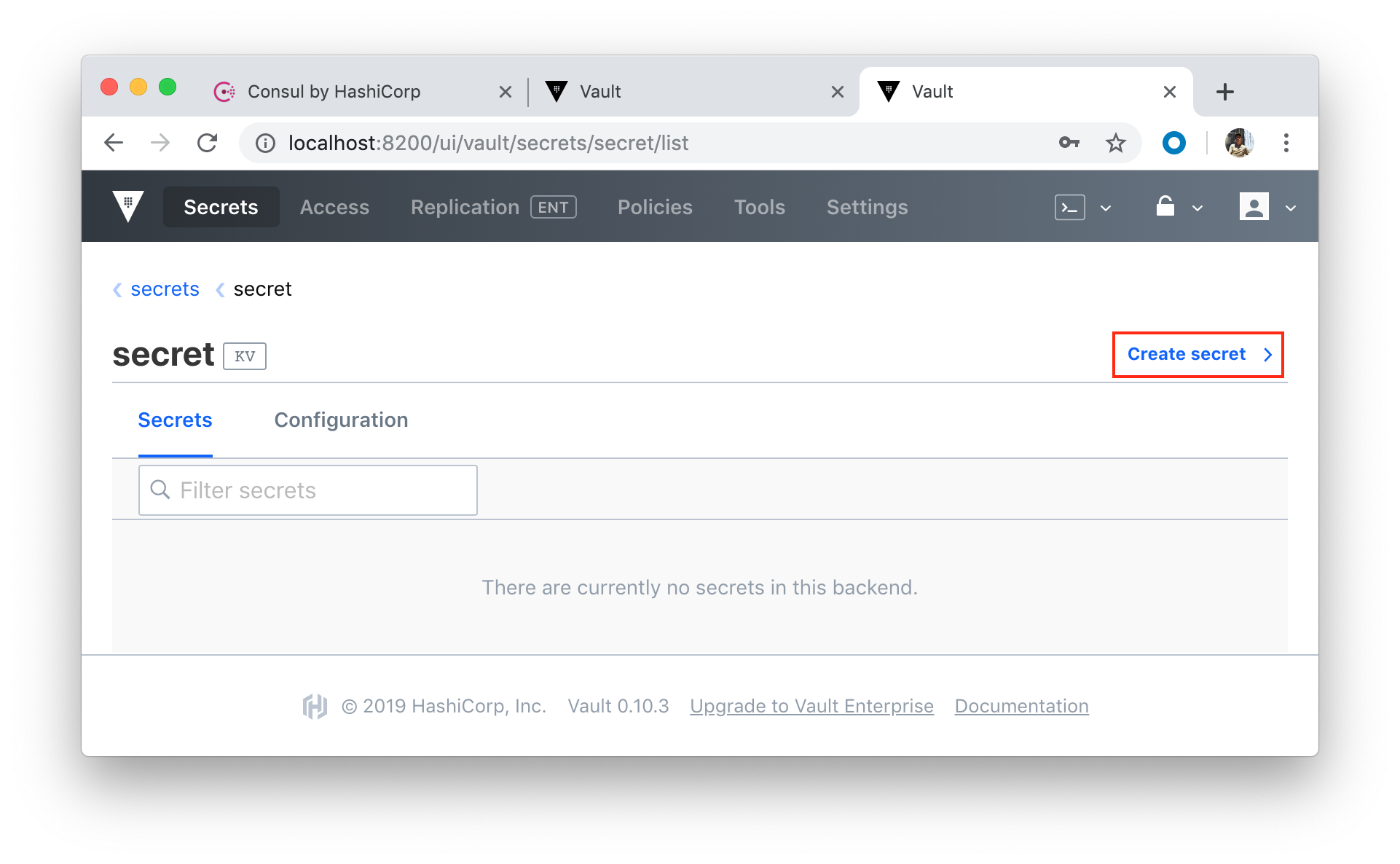

Let's create a secret:

If we want to add another Consul server into the mix, we need to add a new service to docker-compose.yml:

    version: '3.6'

    services:

      vault:
        build:
          context: ./vault
          dockerfile: Dockerfile
        ports:
          - 8200:8200
        volumes:
          - ./vault/config:/vault/config
          - ./vault/policies:/vault/policies
          - ./vault/data:/vault/data
          - ./vault/logs:/vault/logs
        environment:
          - VAULT_ADDR=http://127.0.0.1:8200
        command: server -config=/vault/config/vault-config.json
        cap_add:
          - IPC_LOCK
        depends_on:
          - consul

      consul:
        build:
          context: ./consul
          dockerfile: Dockerfile
        ports:
          - 8500:8500
        command: agent -server -bind 0.0.0.0 -client 0.0.0.0 -bootstrap-expect 1 -config-file=/consul/config/config.json
        volumes:
          - ./consul/config/consul-config.json:/consul/config/config.json
          - ./consul/data:/consul/data

      consul-worker:
        build:
          context: ./consul
          dockerfile: Dockerfile
        command: agent -server -join consul -config-file=/consul/config/config.json
        volumes:
          - ./consul/config/consul-config.json:/consul/config/config.json
        depends_on:
          - consul

Here, we used the -join flag to connect this agent to an existing cluster. Notice that we only had to reference the service name, consul, which Docker Compose resolves to the right container on the project's network.
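Once the containers are running again, one way to confirm that the second server really joined is to ask Consul for its Raft peers through the /v1/status/peers endpoint, which returns a list of "ip:port" strings. The sketch below assumes Consul is reachable at localhost:8500; the helper names are hypothetical and the sample addresses are invented, so the real ones will differ.

```python
import json
import urllib.request


def count_servers(peers: list) -> int:
    """Count the distinct server addresses in a /v1/status/peers response."""
    return len(set(peers))


def fetch_peers(consul_addr: str = "localhost:8500") -> list:
    """Ask a running Consul agent for the Raft peers it currently knows about."""
    with urllib.request.urlopen(f"http://{consul_addr}/v1/status/peers", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Invented sample addresses standing in for the consul and consul-worker containers.
sample_peers = ["172.22.0.2:8300", "172.22.0.3:8300"]
print(count_servers(sample_peers))
```

With both Consul containers up, `count_servers(fetch_peers())` would be expected to reach 2 once the worker has been accepted as a peer, which may take a few seconds after startup.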

With the updated docker-compose file in place, we take the same steps as before, tearing the containers down and spinning them up again:

- Bring down the containers

- Clear out the data directory in "consul/data"

- Spin the containers back up and test

    $ docker-compose down
    Stopping vault-consul-docker_vault_1 ... done
    Stopping vault-consul-docker_consul_1 ... done
    Removing vault-consul-docker_vault_1 ... done
    Removing vault-consul-docker_consul_1 ... done
    Removing network vault-consul-docker_default

    $ docker-compose up -d --build
    Creating vault-consul-docker_consul_1 ... done
    Creating vault-consul-docker_consul-worker_1 ... done
    Creating vault-consul-docker_vault_1 ... done

    $ docker ps
    CONTAINER ID   IMAGE                               COMMAND                  CREATED          STATUS          PORTS                                                  NAMES
    cc95cac5161d   vault-consul-docker_vault           "vault server -confi…"   18 minutes ago   Up 18 minutes   0.0.0.0:8200->8200/tcp                                 vault-consul-docker_vault_1
    431a2b8735b1   vault-consul-docker_consul-worker   "consul agent -serve…"   18 minutes ago   Up 18 minutes   8300/tcp, 8400/tcp, 8500/tcp, 8600/tcp                 vault-consul-docker_consul-worker_1
    93cc119b4f0d   vault-consul-docker_consul          "consul agent -serve…"   18 minutes ago   Up 18 minutes   8300/tcp, 8400/tcp, 8600/tcp, 0.0.0.0:8500->8500/tcp   vault-consul-docker_consul_1

After unsealing, the Vault node is up and running:

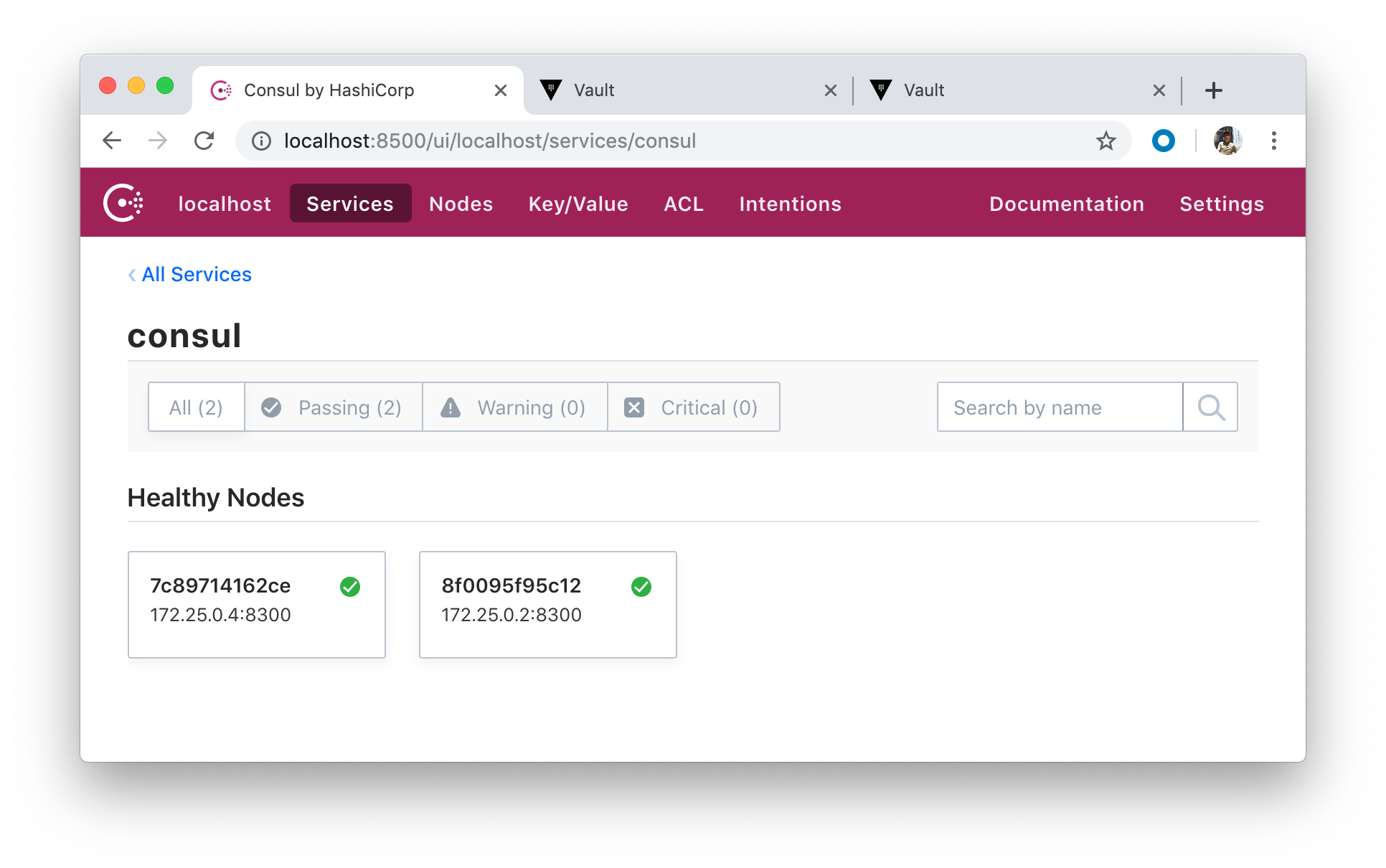

Two healthy Consul nodes:

