Docker & Kubernetes : HashiCorp's Vault and Consul - Auto-unseal using Transit Secrets Engine

Continuing from Docker & Kubernetes : HashiCorp's Vault and Consul on minikube, in this post we'll set up auto-unseal using the Transit Secrets Engine (reference: Auto-unseal using Transit Secrets Engine).

Important: two environment variables, VAULT_ADDR and VAULT_CACERT, must be set (explained later in this section); otherwise the vault command fails with an x509 error. It might have been simpler to use plain http, but my minikube setup for Vault communicates via https, so we'll stick to it throughout this post.

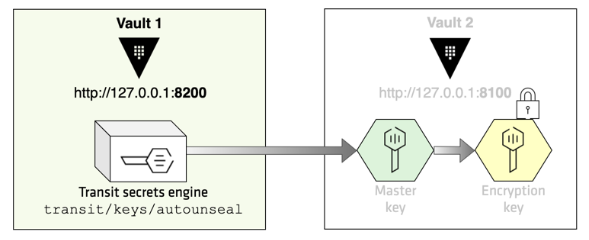

We'll use two Vaults (vault-1 and vault-2): vault-1 listens on port 8200, while vault-2 uses port 8100. vault-1 serves as the encryption service provider.

For a fresh start, let's delete the Consul/Vault pods to get fresh Vault if they are still there:

$ kubectl delete -f consul/statefulset.yaml

statefulset.apps "consul" deleted

$ kubectl delete -f vault/deployment.yaml

deployment.extensions "vault" deleted

Then, create three Vault pods by setting replicas: 3 in vault/deployment.yaml:

$ kubectl create -f vault/deployment.yaml

deployment.extensions/vault created

Though it does not affect the Vault unseal, we may want to create the Consul pods as well and set up port forwarding:

$ kubectl create -f consul/statefulset.yaml

statefulset.apps/consul created

$ kubectl port-forward consul-1 8500:8500

$ kubectl get pods

NAME                    READY   STATUS    RESTARTS   AGE

consul-0                1/1     Running   0          76s

consul-1                1/1     Running   0          74s

consul-2                1/1     Running   0          71s

vault-fb8d76649-5ldh2   2/2     Running   0          89s

vault-fb8d76649-9bgdq   2/2     Running   0          89s

vault-fb8d76649-wdsx7   2/2     Running   0          89s

Forward port 8200 from one of the Vault pods (use a pod name from your own kubectl get pods listing; the name below comes from a different run than the listing above):

$ kubectl port-forward vault-84b7b67d87-dzqv4 8200:8200

Forwarding from 127.0.0.1:8200 -> 8200

Forwarding from [::1]:8200 -> 8200

...

If the Vault client is not installed yet, install it first (see Download Vault).

Once the client is installed, running vault with no arguments should print help output similar to the following:

$ vault

Usage: vault <command> [args]

Common commands:

read Read data and retrieves secrets

write Write data, configuration, and secrets

delete Delete secrets and configuration

list List data or secrets

login Authenticate locally

agent Start a Vault agent

server Start a Vault server

status Print seal and HA status

unwrap Unwrap a wrapped secret

...

Let's set the certificate-related environment variables:

$ export VAULT_ADDR=https://127.0.0.1:8200

$ export VAULT_CACERT="certs/ca.pem"

Without those variables, we may get an error similar to the following during the initialization:

Error initializing: Put https://127.0.0.1:8200/v1/sys/init: x509: certificate signed by unknown authority
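A small guard can catch the missing variables before any vault command runs. The sketch below is only an illustration: require_vault_env is a hypothetical helper name, not part of Vault, and it checks nothing more than that both variables are non-empty and that the CA file is readable.

```shell
# Hypothetical helper (not part of Vault): fail early when the two
# variables this post relies on are missing or unusable.
require_vault_env() {
  rc=0
  if [ -z "${VAULT_ADDR:-}" ]; then
    echo "VAULT_ADDR is not set" >&2
    rc=1
  fi
  if [ -z "${VAULT_CACERT:-}" ]; then
    echo "VAULT_CACERT is not set" >&2
    rc=1
  elif [ ! -r "$VAULT_CACERT" ]; then
    echo "VAULT_CACERT is not a readable file: $VAULT_CACERT" >&2
    rc=1
  fi
  return "$rc"
}
```

It could be used as a prefix, for example require_vault_env && vault status, so that a missing variable is reported by name instead of surfacing as an x509 error.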

Start initialization with the default options:

$ vault operator init

Unseal Key 1: G9/Xea2iuwos4mVOArOAvGQ4ZS5a66uk/EzgOiBpVYd7

Unseal Key 2: bbkQvl/30WCXumrXPb6IqojFmUh04IiaWinv77vrJ3Cz

Unseal Key 3: mNyxdRWddvqDh0f9vxDnP4FX4k7iXvqZ6b32emrEC17J

Unseal Key 4: D0I0PlFNIapJrYIoUarRWfjGZnL6dw20ZqdYNtbeJWuN

Unseal Key 5: XQW4/VtieZaAWc+tRAzFln5VIOvmtO2FXiLg1863o6p5

Initial Root Token: 8sPw5UQaechgblAglBwtpR9p

...

The following command does the same thing, with the default values spelled out:

$ vault operator init \

-key-shares=5 \

-key-threshold=3

key-shares is the number of key shares to split the generated master key into, that is, the number of "unseal keys" to generate. key-threshold is the number of key shares required to reconstruct the master key. It must be less than or equal to key-shares.
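The relation between the two flags can be checked before calling vault operator init. The function below is a hypothetical helper, not a Vault feature, and it encodes only the rule stated above (Vault itself may enforce additional rules).

```shell
# Hypothetical pre-flight check for the rule described in the text:
# 1 <= key-threshold <= key-shares.
# $1 = number of key shares, $2 = key threshold
valid_key_split() {
  shares=$1
  threshold=$2
  [ "$shares" -ge 1 ] && [ "$threshold" -ge 1 ] && [ "$threshold" -le "$shares" ]
}
```

For instance, valid_key_split 5 3 succeeds (any 3 of the 5 unseal keys are enough), while valid_key_split 3 5 fails because the threshold exceeds the number of shares.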

Unseal:

$ vault operator unseal G9/Xea2iuwos4mVOArOAvGQ4ZS5a66uk/EzgOiBpVYd7

Key                Value

---                -----

Seal Type          shamir

Initialized        true

Sealed             true

Total Shares       5

Threshold          3

Unseal Progress    1/3

Unseal Nonce       7a98342d-aa11-a432-ab62-25026e98d7b5

Version            0.11.5

HA Enabled         true

$ vault operator unseal bbkQvl/30WCXumrXPb6IqojFmUh04IiaWinv77vrJ3Cz

Key                Value

---                -----

Seal Type          shamir

Initialized        true

Sealed             true

Total Shares       5

Threshold          3

Unseal Progress    2/3

Unseal Nonce       7a98342d-aa11-a432-ab62-25026e98d7b5

Version            0.11.5

HA Enabled         true

$ vault operator unseal mNyxdRWddvqDh0f9vxDnP4FX4k7iXvqZ6b32emrEC17J

Key                    Value

---                    -----

Seal Type              shamir

Initialized            true

Sealed                 false

Total Shares           5

Threshold              3

Version                0.11.5

Cluster Name           vault-cluster-bd336bc4

Cluster ID             d54b0cd8-cdf8-9ab9-d66a-30e2670e9196

HA Enabled             true

HA Cluster             n/a

HA Mode                standby

Active Node Address    <none>

Authenticate with the root token:

$ vault login 8sPw5UQaechgblAglBwtpR9p

Success! You are now authenticated. The token information displayed below is already stored in the token helper. You do NOT need to run "vault login" again. Future Vault requests will automatically use this token.

Key                  Value

---                  -----

token                8sPw5UQaechgblAglBwtpR9p

token_accessor       1S7u96fRHBeVdK13AFcCg3HF

token_duration       ∞

token_renewable      false

token_policies       ["root"]

identity_policies    []

policies             ["root"]

Let's check the status:

$ vault status

Key             Value

---             -----

Seal Type       shamir

Initialized     true

Sealed          false

Total Shares    5

Threshold       3

Version         0.11.5

Cluster Name    vault-cluster-89fc7934

Cluster ID      b8766e4e-5fbf-2852-692f-5b4a9ea7fad6

HA Enabled      true

HA Cluster      https://127.0.0.1:8201

HA Mode         active

Vault 1 (https://127.0.0.1:8200) is the encryption service provider, and its transit key protects the Vault 2 server's master key. Therefore, the first step is to enable and configure the transit secrets engine on Vault 1 as shown in the picture below:

Enable an audit device so that we can examine the audit log later:

$ vault audit enable file file_path=audit.log

Success! Enabled the file audit device at: file/

Execute the following commands to enable the transit secrets engine and create a key named autounseal:

$ vault secrets enable transit

Success! Enabled the transit secrets engine at: transit/

$ vault write -f transit/keys/autounseal

Success! Data written to: transit/keys/autounseal

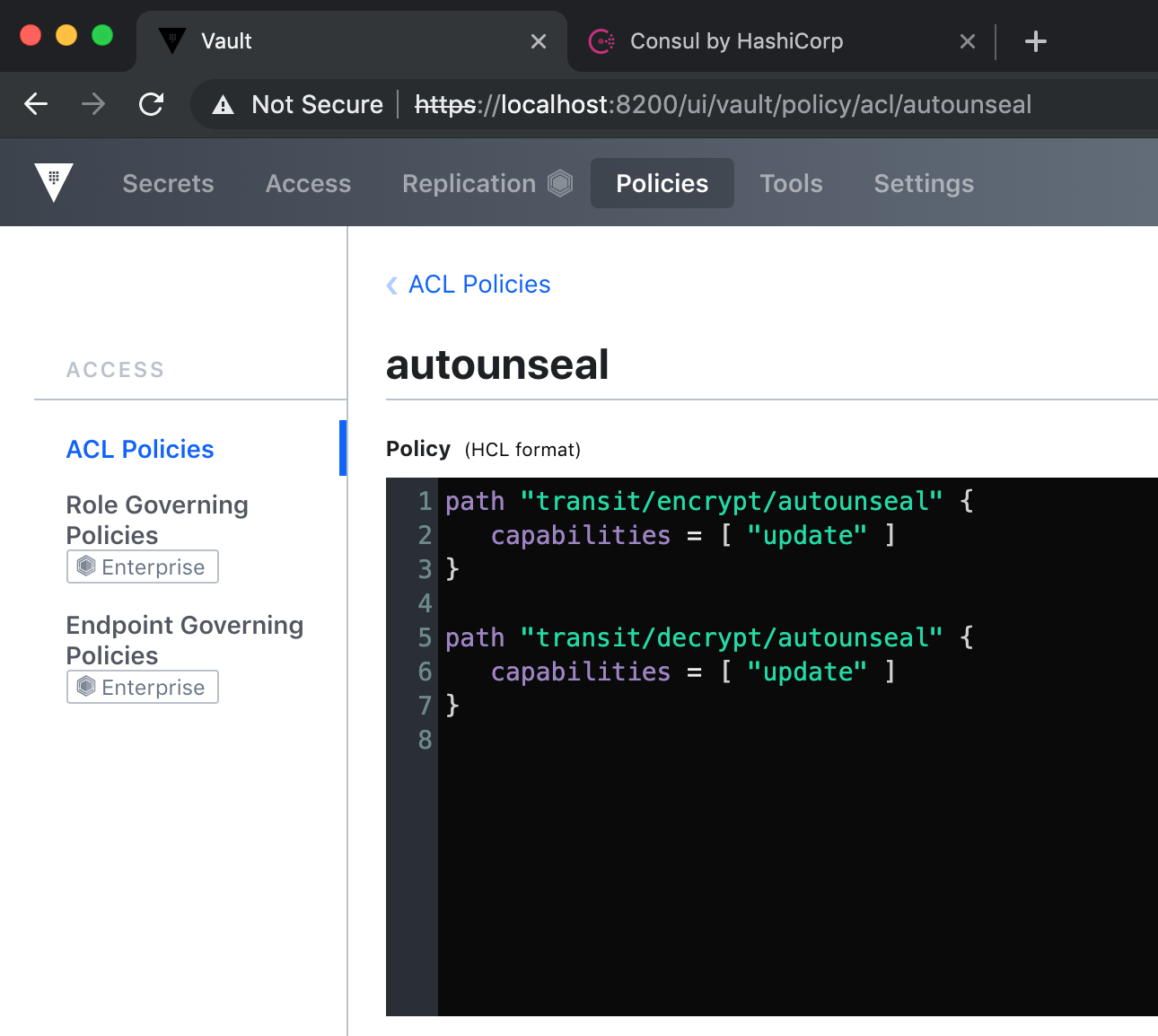

Create an autounseal policy which permits update against the transit/encrypt/autounseal and transit/decrypt/autounseal paths:

# Create a policy file

$ tee autounseal.hcl <<EOF

path "transit/encrypt/autounseal" {

capabilities = [ "update" ]

}

path "transit/decrypt/autounseal" {

capabilities = [ "update" ]

}

EOF

# Create an 'autounseal' policy

$ vault policy write autounseal autounseal.hcl

Success! Uploaded policy: autounseal

This creates a policy that permits update against transit/encrypt/<key_name> and transit/decrypt/<key_name>, where <key_name> is the name of the encryption key we created in the previous step.

Create a client token with the autounseal policy attached and response-wrap it with a TTL of 120 seconds:

$ vault token create -policy="autounseal" -wrap-ttl=120

Key                              Value

---                              -----

wrapping_token:                  351A6CLcQuVYnbOerkw9ATgp

wrapping_accessor:               8rSJgjkU8c8jTakstmPf4ghb

wrapping_token_ttl:              2m

wrapping_token_creation_time:    2019-05-30 16:50:46.425737148 +0000 UTC

wrapping_token_creation_path:    auth/token/create

wrapped_accessor:                6gRh1zfHEwsQZK6EDe6AEdhU
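Since the wrapping token is only valid for the 120-second TTL, it can help to capture it in a script rather than copy it by hand. The sketch below parses the table output shown above; wrapping_token_from_output is a hypothetical helper name, and the parsing assumes the row label is printed exactly as wrapping_token: followed by the value. The CLI's -format=json output is usually a sturdier thing to parse if jq is available.

```shell
# Hypothetical helper: read `vault token create -wrap-ttl=...` table output
# on stdin and print the value of the wrapping_token row.
wrapping_token_from_output() {
  awk '$1 == "wrapping_token:" { print $2 }'
}
```

A possible use is WRAP_TOKEN=$(vault token create -policy="autounseal" -wrap-ttl=120 | wrapping_token_from_output), after which $WRAP_TOKEN can be handed to whoever runs vault unwrap.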

Now, let's set up a second Vault instance which listens on port 8100.

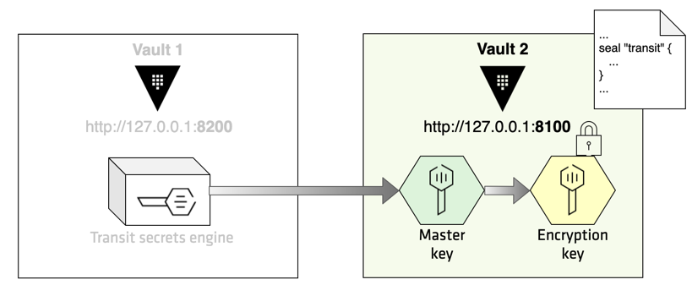

The server configuration file should define a seal stanza with parameters properly set based on the tasks we performed in the previous step.

Execute the following command to unwrap the secrets passed from Vault 1:

# VAULT_TOKEN=<wrapping_token> vault unwrap

$ VAULT_TOKEN=351A6CLcQuVYnbOerkw9ATgp vault unwrap

Key                  Value

---                  -----

token                1mY1KEXANytZzmTRT6Fn07h3

token_accessor       6gRh1zfHEwsQZK6EDe6AEdhU

token_duration       768h

token_renewable      true

token_policies       ["autounseal" "default"]

identity_policies    []

policies             ["autounseal" "default"]

The revealed token is the client token Vault 2 will use to connect with Vault 1.

In a new terminal (for vault-2), set the VAULT_TOKEN environment variable to the client token we just unwrapped:

$ export VAULT_TOKEN=1mY1KEXANytZzmTRT6Fn07h3

Next, create a server configuration file (config-autounseal.hcl) to start a second Vault instance (Vault 2):

disable_mlock = true

ui=true

storage "file" {

path = "vault-2/data"

}

listener "tcp" {

address = "127.0.0.1:8100"

tls_disable = 0

tls_cert_file = "certs/vault.pem"

tls_key_file = "certs/vault-key.pem"

}

seal "transit" {

address = "https://127.0.0.1:8200"

disable_renewal = "false"

key_name = "autounseal"

mount_path = "transit/"

tls_skip_verify = "true"

}

Notice that the address points to the Vault server listening on port 8200 (Vault 1). The key_name and mount_path match what we created in the previous section.

Start the vault server with the configuration file:

$ vault server -config=config-autounseal.hcl

==> Vault server configuration:

Seal Type: transit

Transit Address: https://127.0.0.1:8200

Transit Key Name: autounseal

Transit Mount Path: transit/

Cgo: disabled

Listener 1: tcp (addr: "127.0.0.1:8100", cluster address: "127.0.0.1:8101", max_request_duration: "1m30s", max_request_size: "33554432", tls: "enabled")

Log Level: info

Mlock: supported: false, enabled: false

Storage: file

Version: Vault v1.1.2

Version Sha: 0082501623c0b704b87b1fbc84c2d725994bac54

==> Vault server started! Log data will stream in below:

2019-05-30T10:01:58.503-0700 [WARN] no `api_addr` value specified in config or in VAULT_API_ADDR; falling back to detection if possible, but this value should be manually set

2019-05-30T10:01:58.506-0700 [INFO] core: stored unseal keys supported, attempting fetch

2019-05-30T10:01:58.506-0700 [WARN] failed to unseal core: error="stored unseal keys are supported, but none were found"

2019-05-30T10:02:03.511-0700 [INFO] core: stored unseal keys supported, attempting fetch

2019-05-30T10:02:03.511-0700 [WARN] failed to unseal core: error="stored unseal keys are supported, but none were found"

2019-05-30T10:02:08.513-0700 [INFO] core: stored unseal keys supported, attempting fetch

...

These warnings indicate that our vault-2 has not been initialized yet, which vault status confirms:

$ VAULT_ADDR=https://127.0.0.1:8100 vault status

Key                      Value

---                      -----

Recovery Seal Type       transit

Initialized              false

Sealed                   true

Total Recovery Shares    0

Threshold                0

Unseal Progress          0/0

Unseal Nonce             n/a

Version                  n/a

HA Enabled               false

So, leave the terminal where the command vault server -config=config-autounseal.hcl is running, then open another terminal and initialize our second Vault server (Vault 2):

$ VAULT_ADDR=https://127.0.0.1:8100 vault operator init -recovery-shares=1 \

-recovery-threshold=1 > recovery-key.txt

Because VAULT_ADDR is set inline, the command is executed against the second Vault server (https://127.0.0.1:8100).

Notice also that we are setting the number of recovery shares and the recovery threshold, because there are no unseal keys with auto-unseal. Vault 2's master key is now protected by the transit secrets engine of Vault 1. Recovery keys are used for high-privilege operations such as root token generation. Recovery keys are also used to make Vault operable if Vault has been manually sealed through the vault operator seal command.
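The init output for Vault 2 was redirected to recovery-key.txt, so the root token has to be read back from that file. The sketch below assumes the file contains an "Initial Root Token:" line in the same format as the vault-1 init output shown earlier; initial_root_token is a hypothetical helper name.

```shell
# Hypothetical helper: print the value that follows "Initial Root Token:"
# in `vault operator init` output supplied on stdin.
initial_root_token() {
  sed -n 's/^Initial Root Token: *//p'
}
```

It would be called as initial_root_token < recovery-key.txt. Keep in mind that recovery-key.txt holds both the recovery key and the root token in plain text, so it deserves the same care as the unseal keys of Vault 1.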

Let's go back to the previous terminal, and check the output:

...

2019-05-29T16:32:03.403-0700 [WARN] core: stored keys supported on init, forcing shares/threshold to 1

2019-05-29T16:32:03.404-0700 [INFO] core: security barrier not initialized

2019-05-29T16:32:03.412-0700 [INFO] core: security barrier initialized: shares=1 threshold=1

2019-05-29T16:32:03.426-0700 [INFO] core: post-unseal setup starting

2019-05-29T16:32:03.454-0700 [INFO] core: loaded wrapping token key

2019-05-29T16:32:03.454-0700 [INFO] core: successfully setup plugin catalog: plugin-directory=

2019-05-29T16:32:03.455-0700 [INFO] core: no mounts; adding default mount table

2019-05-29T16:32:03.464-0700 [INFO] core: successfully mounted backend: type=cubbyhole path=cubbyhole/

2019-05-29T16:32:03.469-0700 [INFO] core: successfully mounted backend: type=system path=sys/

2019-05-29T16:32:03.471-0700 [INFO] core: successfully mounted backend: type=identity path=identity/

2019-05-29T16:32:03.486-0700 [INFO] core: successfully enabled credential backend: type=token path=token/

2019-05-29T16:32:03.487-0700 [INFO] rollback: starting rollback manager

2019-05-29T16:32:03.487-0700 [INFO] core: restoring leases

2019-05-29T16:32:03.489-0700 [INFO] identity: entities restored

2019-05-29T16:32:03.489-0700 [INFO] identity: groups restored

2019-05-29T16:32:03.489-0700 [INFO] core: post-unseal setup complete

2019-05-29T16:32:03.490-0700 [INFO] expiration: lease restore complete

2019-05-29T16:32:03.541-0700 [INFO] core: root token generated

2019-05-29T16:32:03.541-0700 [INFO] core: pre-seal teardown starting

2019-05-29T16:32:03.541-0700 [INFO] rollback: stopping rollback manager

2019-05-29T16:32:03.542-0700 [INFO] core: pre-seal teardown complete

2019-05-29T16:32:03.542-0700 [INFO] core: stored unseal keys supported, attempting fetch

2019-05-29T16:32:03.550-0700 [INFO] core: vault is unsealed

2019-05-29T16:32:03.551-0700 [INFO] core.cluster-listener: starting listener: listener_address=127.0.0.1:8101

2019-05-29T16:32:03.551-0700 [INFO] core.cluster-listener: serving cluster requests: cluster_listen_address=127.0.0.1:8101

2019-05-29T16:32:03.551-0700 [INFO] core: post-unseal setup starting

2019-05-29T16:32:03.552-0700 [INFO] core: loaded wrapping token key

2019-05-29T16:32:03.552-0700 [INFO] core: successfully setup plugin catalog: plugin-directory=

2019-05-29T16:32:03.553-0700 [INFO] core: successfully mounted backend: type=system path=sys/

2019-05-29T16:32:03.553-0700 [INFO] core: successfully mounted backend: type=identity path=identity/

2019-05-29T16:32:03.553-0700 [INFO] core: successfully mounted backend: type=cubbyhole path=cubbyhole/

2019-05-29T16:32:03.556-0700 [INFO] core: successfully enabled credential backend: type=token path=token/

2019-05-29T16:32:03.556-0700 [INFO] core: restoring leases

2019-05-29T16:32:03.556-0700 [INFO] rollback: starting rollback manager

2019-05-29T16:32:03.556-0700 [INFO] expiration: lease restore complete

2019-05-29T16:32:03.557-0700 [INFO] identity: entities restored

2019-05-29T16:32:03.557-0700 [INFO] identity: groups restored

2019-05-29T16:32:03.557-0700 [INFO] core: post-unseal setup complete

Check the Vault 2 server status on the terminal for vault-2. It is now successfully initialized and unsealed:

$ VAULT_ADDR=https://127.0.0.1:8100 vault status

Key                      Value

---                      -----

Recovery Seal Type       shamir

Initialized              true

Sealed                   false

Total Recovery Shares    1

Threshold                1

Version                  1.1.2

Cluster Name             vault-cluster-99fa9f20

Cluster ID               20cbbe39-388a-e1a7-5982-e37e1e13b3c9

HA Enabled               false

Notice that it shows "Total Recovery Shares" instead of "Total Shares". The transit secrets engine is solely responsible for protecting the master key of Vault 2. Some operations still require Shamir's keys (e.g. regenerating a root token). Therefore, the Vault 2 server requires recovery keys even though auto-unseal has been enabled.
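When the status check needs to be scripted, for example to compare the state before and after initialization, a single row can be pulled out of the table. The sketch below is a hypothetical helper (status_field is not a Vault command) and assumes the plain table layout shown above, with the row label at the start of the line and the value in the last column.

```shell
# Hypothetical helper: print the value column of one row of `vault status`
# table output read from stdin. $1 is the row label, e.g. "Sealed".
status_field() {
  awk -v key="$1" 'index($0, key " ") == 1 { print $NF }'
}
```

For example, VAULT_ADDR=https://127.0.0.1:8100 vault status | status_field Sealed would print false for the output above, and status_field "Total Recovery Shares" would print 1. The vault status command is also documented to exit with code 2 when the server is sealed, so a script can branch on the exit code instead of parsing text.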

To verify that Vault 2 gets automatically unsealed, press Ctrl + C in the terminal where vault server -config=config-autounseal.hcl is running to stop the Vault 2 server:

^C==> Vault shutdown triggered

2019-05-29T17:33:21.058-0700 [INFO] core: marked as sealed

2019-05-29T17:33:21.058-0700 [INFO] core: pre-seal teardown starting

2019-05-29T17:33:21.058-0700 [INFO] rollback: stopping rollback manager

2019-05-29T17:33:21.059-0700 [INFO] core: pre-seal teardown complete

2019-05-29T17:33:21.059-0700 [INFO] core: stopping cluster listeners

2019-05-29T17:33:21.059-0700 [INFO] core.cluster-listener: forwarding rpc listeners stopped

2019-05-29T17:33:21.504-0700 [INFO] core.cluster-listener: rpc listeners successfully shut down

2019-05-29T17:33:21.504-0700 [INFO] core: cluster listeners successfully shut down

2019-05-29T17:33:21.504-0700 [INFO] core: vault is sealed

2019-05-29T17:33:21.504-0700 [INFO] seal-transit: shutting down token renewal

Note that Vault 2 is now sealed.

Press the up-arrow key and run the vault server -config=config-autounseal.hcl command again to start Vault 2 and see what happens:

$ vault server -config=config-autounseal.hcl

==> Vault server configuration:

Seal Type: transit

Transit Address: https://127.0.0.1:8200

Transit Key Name: autounseal

Transit Mount Path: transit/

Cgo: disabled

Listener 1: tcp (addr: "127.0.0.1:8100", cluster address: "127.0.0.1:8101", max_request_duration: "1m30s", max_request_size: "33554432", tls: "enabled")

Log Level: info

Mlock: supported: false, enabled: false

Storage: file

Version: Vault v1.1.2

Version Sha: 0082501623c0b704b87b1fbc84c2d725994bac54

==> Vault server started! Log data will stream in below:

2019-05-29T17:36:46.741-0700 [WARN] no `api_addr` value specified in config or in VAULT_API_ADDR; falling back to detection if possible, but this value should be manually set

2019-05-29T17:36:46.752-0700 [INFO] core: stored unseal keys supported, attempting fetch

2019-05-29T17:36:46.771-0700 [INFO] core: vault is unsealed

2019-05-29T17:36:46.771-0700 [INFO] core.cluster-listener: starting listener: listener_address=127.0.0.1:8101

2019-05-29T17:36:46.771-0700 [INFO] core.cluster-listener: serving cluster requests: cluster_listen_address=127.0.0.1:8101

2019-05-29T17:36:46.773-0700 [INFO] core: post-unseal setup starting

2019-05-29T17:36:46.774-0700 [INFO] core: loaded wrapping token key

2019-05-29T17:36:46.774-0700 [INFO] core: successfully setup plugin catalog: plugin-directory=

2019-05-29T17:36:46.776-0700 [INFO] core: successfully mounted backend: type=system path=sys/

2019-05-29T17:36:46.776-0700 [INFO] core: successfully mounted backend: type=identity path=identity/

2019-05-29T17:36:46.776-0700 [INFO] core: successfully mounted backend: type=cubbyhole path=cubbyhole/

2019-05-29T17:36:46.785-0700 [INFO] core: successfully enabled credential backend: type=token path=token/

2019-05-29T17:36:46.785-0700 [INFO] core: restoring leases

2019-05-29T17:36:46.785-0700 [INFO] rollback: starting rollback manager

2019-05-29T17:36:46.785-0700 [INFO] expiration: lease restore complete

2019-05-29T17:36:46.786-0700 [INFO] identity: entities restored

2019-05-29T17:36:46.786-0700 [INFO] identity: groups restored

2019-05-29T17:36:46.786-0700 [INFO] core: post-unseal setup complete

2019-05-29T17:36:46.787-0700 [INFO] core: unsealed with stored keys: stored_keys_used=1

Notice that the Vault server is already unsealed. The Transit Address is set to our Vault 1, which is listening on port 8200 (https://127.0.0.1:8200).

Check the Vault 2 server status:

$ VAULT_ADDR=https://127.0.0.1:8100 vault status

Key                      Value

---                      -----

Recovery Seal Type       shamir

Initialized              true

Sealed                   false

Total Recovery Shares    1

Threshold                1

Version                  1.1.2

Cluster Name             vault-cluster-99fa9f20

Cluster ID               20cbbe39-388a-e1a7-5982-e37e1e13b3c9

HA Enabled               false

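Now, let's examine the audit log of Vault 1. On the local machine this fails with the error below, most likely because the file audit device writes audit.log on the server side (here, inside the Vault 1 pod), not in the directory where the vault client runs:

$ tail -f audit.log | jq

tail: audit.log: No such file or directory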

- Auto-unseal using Transit Secrets Engine

- Docker Compose - Hashicorp's Vault and Consul Part A (install vault, unsealing, static secrets, and policies)

- Docker Compose - Hashicorp's Vault and Consul Part B (EaaS, dynamic secrets, leases, and revocation)

- Docker Compose - Hashicorp's Vault and Consul Part C (Consul)

- HashiCorp Vault and Consul on AWS with Terraform

- repo: HashCorp-Vault-and-Consul-on-Minikube

