Kubernetes Q and A - Part II

- Deploying a Go app to minikube, starting from a multistage Dockerfile

    $ minikube docker-env
    export DOCKER_TLS_VERIFY="1"
    export DOCKER_HOST="tcp://127.0.0.1:32770"
    export DOCKER_CERT_PATH="/Users/kihyuckhong/.minikube/certs"
    export MINIKUBE_ACTIVE_DOCKERD="minikube"

    $ eval $(minikube docker-env)

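With this environment set, the docker CLI in our shell talks to the Docker daemon inside minikube. Images we build are then available to the cluster without being pushed to a registry.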

Here are the two files (hello.go and Dockerfile) we're going to use.

hello.go:

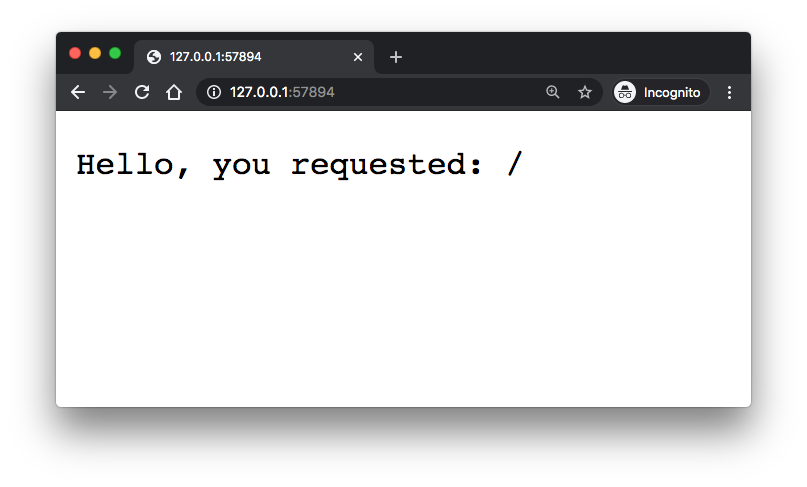

    package main

    import (
        "fmt"
        "log"
        "net/http"
    )

    // HelloServer responds to requests with the given URL path.
    func HelloServer(w http.ResponseWriter, r *http.Request) {
        fmt.Fprintf(w, "Hello, you requested: %s", r.URL.Path)
        log.Printf("Received request for path: %s", r.URL.Path)
    }

    func main() {
        addr := ":8181"
        handler := http.HandlerFunc(HelloServer)
        if err := http.ListenAndServe(addr, handler); err != nil {
            log.Fatalf("Could not listen on port %s %v", addr, err)
        }
    }

The Dockerfile builds the app in a golang:1-alpine stage. It then copies the /app directory, which holds the hello binary, into a small alpine image:

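    FROM golang:1-alpine as build
    WORKDIR /app
    COPY hello.go /app
    RUN go build hello.go

    FROM alpine:latest
    WORKDIR /app
    COPY --from=build /app /app
    EXPOSE 8180
    ENTRYPOINT ["./hello"]

Note that the app listens on port 8181, but the Dockerfile declares EXPOSE 8180. EXPOSE only documents a port and does not publish it, so the mismatch does not break the steps below. EXPOSE 8181 would be the accurate value.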

So, let's build and tag the hello-go-mini image:

    $ docker build -t hello-go-mini:1.0 .
    Sending build context to Docker daemon  3.072kB
    Step 1/9 : FROM golang:1-alpine as build
     ---> 14ee78639386
    Step 2/9 : WORKDIR /app
     ---> Using cache
     ---> 25a061582720
    Step 3/9 : COPY hello.go /app
     ---> Using cache
     ---> 31ff16dd7be8
    Step 4/9 : RUN go build hello.go
     ---> Using cache
     ---> 2cdc7af4ecde
    Step 5/9 : FROM alpine:latest
     ---> 49f356fa4513
    Step 6/9 : WORKDIR /app
     ---> Using cache
     ---> f9bf344b9c16
    Step 7/9 : COPY --from=build /app /app
     ---> Using cache
     ---> d8fcd75df8e6
    Step 8/9 : EXPOSE 8180
     ---> Using cache
     ---> 74bfdec770eb
    Step 9/9 : ENTRYPOINT ["./hello"]
     ---> Using cache
     ---> 6c1b1977faff
    Successfully built 6c1b1977faff
    Successfully tagged hello-go-mini:1.0

    $ docker images
    REPOSITORY      TAG   IMAGE ID       CREATED       SIZE
    hello-go-mini   1.0   6c1b1977faff   4 hours ago   11.8MB

Note that we tag the image as :1.0 so the pod uses the locally built image instead of pulling it from a registry. Otherwise we may get "ErrImagePull" when the pod tries to pull an image that the registry does not have.

This happens because Kubernetes defaults imagePullPolicy to Always when the tag is latest or when no tag is given. The kubelet then always checks a registry. We can set imagePullPolicy to IfNotPresent explicitly, or we can use a tag other than latest, as we've done in our example.
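The defaulting rule above can be sketched in Go. defaultPullPolicy is a hypothetical, simplified helper written for illustration. It follows the documented behavior: when imagePullPolicy is left empty, it becomes Always if the tag is latest or omitted, and IfNotPresent otherwise, including when only a digest is given. Unlike Kubernetes, it does not validate the image reference.

```go
package main

import (
	"fmt"
	"strings"
)

// defaultPullPolicy is a hypothetical, simplified version of the defaulting
// Kubernetes applies when a container's imagePullPolicy is empty.
// It does not validate the image reference.
func defaultPullPolicy(image string) string {
	// Only the last path segment can carry a tag or digest; a colon earlier
	// in the string may be a registry port (e.g. localhost:5000/app).
	name := image
	if i := strings.LastIndex(image, "/"); i >= 0 {
		name = image[i+1:]
	}
	digest := ""
	if i := strings.Index(name, "@"); i >= 0 {
		digest = name[i+1:]
		name = name[:i]
	}
	tag := ""
	if i := strings.LastIndex(name, ":"); i >= 0 {
		tag = name[i+1:]
	}
	// No tag and no digest means the implicit tag is "latest".
	if tag == "" && digest == "" {
		tag = "latest"
	}
	if tag == "latest" {
		return "Always"
	}
	return "IfNotPresent"
}

func main() {
	images := []string{
		"hello-go-mini",
		"hello-go-mini:latest",
		"hello-go-mini:1.0",
		"localhost:5000/hello-go-mini",
	}
	for _, img := range images {
		fmt.Printf("%-30s %s\n", img, defaultPullPolicy(img))
	}
}
```

With the image used here, hello-go-mini:1.0 resolves to IfNotPresent. That is why the pod can start from the image already present in minikube's Docker daemon.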

Let's deploy our Hello Go app to minikube:

    $ kubectl create deployment hello-go --image=hello-go-mini:1.0
    deployment.apps/hello-go created

    $ kubectl get all
    NAME                            READY   STATUS    RESTARTS   AGE
    pod/hello-go-676b7c76cd-tqw2g   1/1     Running   0          23m

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   33h

    NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/hello-go   1/1     1            1           23m

    NAME                                  DESIRED   CURRENT   READY   AGE
    replicaset.apps/hello-go-676b7c76cd   1         1         1       23m

At this point, our Hello Go app is running in Kubernetes. However, we can't reach it from outside the cluster, because nothing exposes port 8181 to the outside world. With plain Docker, we would publish the port with -p 8181:8181. In Kubernetes, we expose a deployment to the outside world with a Service.

    $ kubectl expose deployment hello-go --type=LoadBalancer --port=8181
    service/hello-go exposed

    $ minikube service hello-go
    |-----------|----------|-------------|-------------------------|
    | NAMESPACE |   NAME   | TARGET PORT |           URL           |
    |-----------|----------|-------------|-------------------------|
    | default   | hello-go |        8181 | http://172.17.0.2:31294 |
    |-----------|----------|-------------|-------------------------|
    Starting tunnel for service hello-go.
    |-----------|----------|-------------|------------------------|
    | NAMESPACE |   NAME   | TARGET PORT |          URL           |
    |-----------|----------|-------------|------------------------|
    | default   | hello-go |             | http://127.0.0.1:57894 |
    |-----------|----------|-------------|------------------------|
    Opening service default/hello-go in default browser...
    Because you are using a Docker driver on darwin, the terminal needs to be open to run it.
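The tunnel URL can also be checked without a browser, using the minimal Go client below. fetch is a hypothetical helper name. The default URL is the tunnel address from the run above (http://127.0.0.1:57894), which will differ on every machine, so pass your own URL as the first argument.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// fetch performs a GET request and returns the response body.
// It is a hypothetical helper, not part of the original example.
func fetch(url string) (string, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("unexpected status: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	// Tunnel URL printed by `minikube service hello-go`; override it with an argument.
	url := "http://127.0.0.1:57894/hello"
	if len(os.Args) > 1 {
		url = os.Args[1]
	}
	body, err := fetch(url)
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	fmt.Println(body)
}
```

While the minikube service terminal stays open, requesting /hello this way should return "Hello, you requested: /hello".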

We can scale the deployment to run more pod replicas with the kubectl scale command:

    $ kubectl scale deployments hello-go --replicas=3
    deployment.apps/hello-go scaled

    $ kubectl get pods
    NAME                        READY   STATUS    RESTARTS   AGE
    hello-go-676b7c76cd-72t5q   1/1     Running   0          35s
    hello-go-676b7c76cd-cdb88   1/1     Running   0          35s
    hello-go-676b7c76cd-tqw2g   1/1     Running   0          38m

To run the same app in a plain Docker container instead of on a Kubernetes cluster, see Multistage Image Builds.

- Autoscaling:

- Horizontal Pod Autoscaling (HPA):

We can add or remove pod replicas in response to changes in demand for our applications. The Horizontal Pod Autoscaler (HPA) can manage scaling these workloads for us automatically.
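The Kubernetes documentation gives the core HPA calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Go sketch below applies that rule with the default 0.1 tolerance and clamps the result to the min/max replica bounds. desiredReplicas is a hypothetical helper. It ignores pod readiness, missing metrics, and the scale-down stabilization window, all of which the real controller also considers.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the core HPA scaling rule:
//   desired = ceil(current * currentMetric / targetMetric)
// It keeps the current count when the ratio is within the tolerance and
// clamps the result to [minReplicas, maxReplicas]. This is a simplified sketch.
func desiredReplicas(current int, currentMetric, targetMetric, tolerance float64, minReplicas, maxReplicas int) int {
	ratio := currentMetric / targetMetric
	desired := current
	if math.Abs(ratio-1.0) > tolerance {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// 3 pods averaging 90% CPU utilization against a 50% target.
	fmt.Println(desiredReplicas(3, 90, 50, 0.1, 1, 10)) // 6
	// 6 pods averaging 20% against a 50% target.
	fmt.Println(desiredReplicas(6, 20, 50, 0.1, 1, 10)) // 3
	// 4 pods at 52% against 50%: within tolerance, so no change.
	fmt.Println(desiredReplicas(4, 52, 50, 0.1, 1, 10)) // 4
}
```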

The HPA is ideal for scaling stateless applications, although it can also scale StatefulSets. Combining HPA with cluster autoscaling can cut costs for workloads whose demand changes regularly, because the number of active nodes shrinks as the number of pods decreases.

- Cluster Autoscaler:

While HPA scales the number of running pods in a cluster, the cluster autoscaler changes the number of nodes. It adds nodes when pods can't be scheduled for lack of resources and removes nodes that stay underutilized.

- Vertical Pod Autoscaling (VPA):

The default Kubernetes scheduler overcommits CPU and memory reservations on a node, on the assumption that most containers stay closer to their initial requests than to their upper limits. The Vertical Pod Autoscaler (VPA) can raise or lower the CPU and memory requests of pod containers so that the resources reserved in the cluster better match actual usage.


- Kubernetes compute resources:

When authoring pods, we can optionally specify how much CPU and memory (RAM) each container needs. This helps the scheduler place pods in the cluster and helps ensure satisfactory performance.

CPU is measured in cores and is usually expressed in millicores (m), where 1 core = 1000m.

Each node in the cluster queries the operating system to determine how many CPU cores it has, then multiplies that value by 1000 to express its total capacity. For example, if a node has 4 cores, its CPU capacity is 4 * 1000m = 4000m.
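A small parser makes the millicore arithmetic concrete. cpuToMillicores is a hypothetical helper. It understands only plain core counts and the m suffix, which is a subset of the real Kubernetes quantity format.

```go
package main

import (
	"fmt"
	"math"
	"strconv"
	"strings"
)

// cpuToMillicores converts a CPU quantity such as "100m", "0.5" or "4" into
// millicores. Simplified sketch: only plain numbers and the "m" suffix are
// supported, not the full Kubernetes quantity grammar.
func cpuToMillicores(q string) (int64, error) {
	if strings.HasSuffix(q, "m") {
		return strconv.ParseInt(strings.TrimSuffix(q, "m"), 10, 64)
	}
	cores, err := strconv.ParseFloat(q, 64)
	if err != nil {
		return 0, err
	}
	// 1 core = 1000 millicores.
	return int64(math.Round(cores * 1000)), nil
}

func main() {
	for _, q := range []string{"4", "0.5", "100m", "200m"} {
		m, err := cpuToMillicores(q)
		if err != nil {
			fmt.Println(q, "->", err)
			continue
		}
		fmt.Printf("%s -> %dm\n", q, m)
	}
}
```

For the 4-core example above, cpuToMillicores("4") returns 4000. The 100m request used later in nginx-deployment.yaml is a tenth of one core.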

- CPU requests:

Each container in a pod may specify the amount of CPU it requests on a node.

CPU requests are used by the scheduler to find a node with an appropriate fit for our container.

The CPU request represents a minimum amount of CPU that our container may consume, but if there is no contention for CPU, it may burst to use as much CPU as is available on the node.

If there is CPU contention on the node, CPU requests provide a relative weight across all containers on the system for how much CPU time each container may use.

- CPU limits:

Each container in a pod may specify the amount of CPU it is limited to use on a node.

CPU limits are used to control the maximum amount of CPU that our container may use independent of contention on the node. If a container attempts to use more than the specified limit, the system will throttle the container.

This gives our container a consistent level of service regardless of how many pods are scheduled to the node.

- Memory requests:

By default, a container can consume as much memory as is available on the node.

In order to improve placement of pods in the cluster, it is recommended to specify the amount of memory required for a container to run.

The scheduler will then take available node memory capacity into account prior to binding our pod to a node.

Specifying a request does not cap usage. A container with a memory request can still consume as much memory as is available on the node.

- Memory limits:

If we specify a memory limit, we can constrain the amount of memory our container can use.

For example, if we specify a limit of 200Mi, our container can use at most that much memory on the node. If it exceeds the limit, it is killed (reason OOMKilled) and may be restarted, depending on the pod's restartPolicy.
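Memory quantities use either binary suffixes (Ki, Mi, Gi, powers of 1024) or decimal ones (k, M, G, powers of 1000), so 200Mi and 200M are different amounts. memToBytes below is a hypothetical, simplified parser. The 250 MiB usage figure is made up to illustrate the limit comparison.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// memToBytes converts a memory quantity like "200Mi" or "200M" into bytes.
// Simplified sketch: binary suffixes (Ki, Mi, Gi) are powers of 1024 and
// decimal suffixes (k, M, G) are powers of 1000; no suffix means bytes.
func memToBytes(q string) (int64, error) {
	units := []struct {
		suffix string
		factor int64
	}{
		{"Ki", 1 << 10}, {"Mi", 1 << 20}, {"Gi", 1 << 30},
		{"k", 1000}, {"M", 1000 * 1000}, {"G", 1000 * 1000 * 1000},
	}
	for _, u := range units {
		if strings.HasSuffix(q, u.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(q, u.suffix), 10, 64)
			if err != nil {
				return 0, err
			}
			return n * u.factor, nil
		}
	}
	return strconv.ParseInt(q, 10, 64)
}

func main() {
	limit, _ := memToBytes("200Mi")
	fmt.Println("200Mi =", limit, "bytes") // 209715200
	decimal, _ := memToBytes("200M")
	fmt.Println("200M  =", decimal, "bytes") // 200000000

	// Hypothetical usage: 250 MiB is above a 200Mi limit, so the container
	// would be a candidate for an OOM kill.
	usage := int64(250) << 20
	fmt.Println("exceeds limit:", usage > limit) // true
}
```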

Note:

- Requests (guaranteed):

Requests are resources that Kubernetes guarantees to the container on a node. If no node has enough unreserved resources to satisfy a pod's requests, the pod is not scheduled. It stays in the Pending state until the resources become available, for example when the cluster autoscaler adds a node.

- Limits (maximum):

Limits are the maximum resources a container can use. A container that exceeds its memory limit is killed with the status OOMKilled, while a container that reaches its CPU limit is throttled rather than killed. Resources in limits beyond the requests are not guaranteed: whether the container actually gets them depends on how busy the node is.

nginx-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx-container
            image: nginx:1.14.2
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: 100m
                memory: 200Mi
              limits:
                cpu: 200m
                memory: 400Mi

- The container requests 100m of CPU (0.1 core).

- The container requests 200Mi of memory.

- The container is limited to 200m of CPU.

- The container is limited to 400Mi of memory.
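As noted above, the scheduler places pods according to their requests, and a pod whose requests can't be met stays Pending. The sketch below estimates how many nginx replicas fit on one node. The node's 2 CPUs and 2Gi of free allocatable memory are hypothetical figures. maxPodsThatFit is a hypothetical helper that ignores every other scheduling constraint (pod-count limits, taints, affinity, system pods).

```go
package main

import (
	"fmt"
	"math"
)

// Resources holds CPU in millicores and memory in MiB.
type Resources struct {
	CPUMilli int64
	MemMi    int64
}

// maxPodsThatFit returns how many pods with the given requests fit into the
// node's free allocatable resources. Only requests are compared, never limits.
func maxPodsThatFit(free, request Resources) int64 {
	fit := int64(math.MaxInt64) // a zero request does not constrain that resource
	if request.CPUMilli > 0 && free.CPUMilli/request.CPUMilli < fit {
		fit = free.CPUMilli / request.CPUMilli
	}
	if request.MemMi > 0 && free.MemMi/request.MemMi < fit {
		fit = free.MemMi / request.MemMi
	}
	return fit
}

func main() {
	// Hypothetical node: 2000m CPU and 2048Mi memory, all unreserved.
	node := Resources{CPUMilli: 2000, MemMi: 2048}
	// Requests from nginx-deployment.yaml: 100m CPU, 200Mi memory.
	nginx := Resources{CPUMilli: 100, MemMi: 200}

	fit := maxPodsThatFit(node, nginx)
	fmt.Println("replicas that fit:", fit) // 10 (memory is the bottleneck)

	const replicas = 5
	if replicas > fit {
		fmt.Println("some replicas would stay Pending")
	} else {
		fmt.Println("all", replicas, "replicas can be scheduled")
	}
}
```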

To deploy the pods:

    $ kubectl apply -f nginx-deployment.yaml
    deployment.apps/nginx-deployment created

    $ kubectl get pods
    NAME                               READY   STATUS    RESTARTS   AGE
    nginx-deployment-94c4794b4-22nbg   1/1     Running   0          85s
    nginx-deployment-94c4794b4-29wqn   1/1     Running   0          85s
    nginx-deployment-94c4794b4-2djk2   1/1     Running   0          85s
    nginx-deployment-94c4794b4-b7mgr   1/1     Running   0          85s
    nginx-deployment-94c4794b4-rjvbq   1/1     Running   0          85s

To view compute resources for a pod:

    $ kubectl describe pods nginx-deployment-94c4794b4-22nbg
    Name:         nginx-deployment-94c4794b4-22nbg
    Namespace:    default
    Priority:     0
    Node:         minikube/172.17.0.3
    Start Time:   Thu, 22 Apr 2021 18:14:16 -0700
    Labels:       app=nginx
                  pod-template-hash=94c4794b4
    Annotations:  <none>
    Status:       Running
    IP:           172.18.0.3
    IPs:
      IP:           172.18.0.3
    Controlled By:  ReplicaSet/nginx-deployment-94c4794b4
    Containers:
      nginx-container:
        Container ID:   docker://0aa6466b5be90df27d5ed405bed015bf0c0d8b2ca36873351b29581d438f4775
        Image:          nginx:1.14.2
        Image ID:       docker-pullable://nginx@sha256:f7988fb6c02e0ce69257d9bd9cf37ae20a60f1df7563c3a2a6abe24160306b8d
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Thu, 22 Apr 2021 18:14:27 -0700
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     200m
          memory:  400Mi
        Requests:
          cpu:        100m
          memory:     200Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-mqf72 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-mqf72:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-mqf72
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type    Reason     Age    From               Message
      ----    ------     ----   ----               -------
      Normal  Scheduled  3m56s  default-scheduler  Successfully assigned default/nginx-deployment-94c4794b4-22nbg to minikube
      Normal  Pulled     3m47s  kubelet, minikube  Container image "nginx:1.14.2" already present on machine
      Normal  Created    3m47s  kubelet, minikube  Created container nginx-container
      Normal  Started    3m46s  kubelet, minikube  Started container nginx-container
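The "QoS Class: Burstable" line in this output follows from the container's resources: requests are set, but they differ from the limits. qosClass below is a simplified, hypothetical sketch of the documented QoS rules. Real Kubernetes compares parsed quantities (so "1" equals "1000m") and also considers init containers; this sketch compares plain strings and handles neither case.

```go
package main

import "fmt"

// Container captures the four resource values that decide the QoS class.
// Empty strings mean "not set".
type Container struct {
	CPURequest, CPULimit, MemRequest, MemLimit string
}

// qosClass is a simplified version of the documented QoS rules:
//   - BestEffort: no container sets any CPU or memory request or limit.
//   - Guaranteed: every container sets CPU and memory limits, and its
//     requests equal those limits (an unset request defaults to the limit).
//   - Burstable: anything else.
func qosClass(containers []Container) string {
	bestEffort, guaranteed := true, true
	for _, c := range containers {
		cpuReq, memReq := c.CPURequest, c.MemRequest
		// If only a limit is set, Kubernetes defaults the request to the limit.
		if cpuReq == "" {
			cpuReq = c.CPULimit
		}
		if memReq == "" {
			memReq = c.MemLimit
		}
		if cpuReq != "" || memReq != "" || c.CPULimit != "" || c.MemLimit != "" {
			bestEffort = false
		}
		if c.CPULimit == "" || c.MemLimit == "" || cpuReq != c.CPULimit || memReq != c.MemLimit {
			guaranteed = false
		}
	}
	switch {
	case bestEffort:
		return "BestEffort"
	case guaranteed:
		return "Guaranteed"
	default:
		return "Burstable"
	}
}

func main() {
	// Values from nginx-deployment.yaml.
	nginx := Container{CPURequest: "100m", CPULimit: "200m", MemRequest: "200Mi", MemLimit: "400Mi"}
	fmt.Println(qosClass([]Container{nginx})) // Burstable
}
```

Setting the requests equal to the limits (or setting only limits) would make this pod Guaranteed. Dropping all resources would make it BestEffort.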

