AWS : RDS Importing and Exporting SQL Server Data

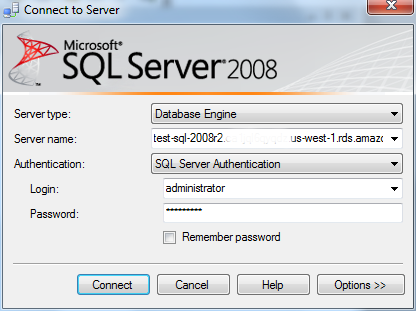

To demonstrate import/export, we'll use two RDS MS SQL instances on RDS as the first example. Next, we'll export a table from localhost (PC) to a RDS instance.

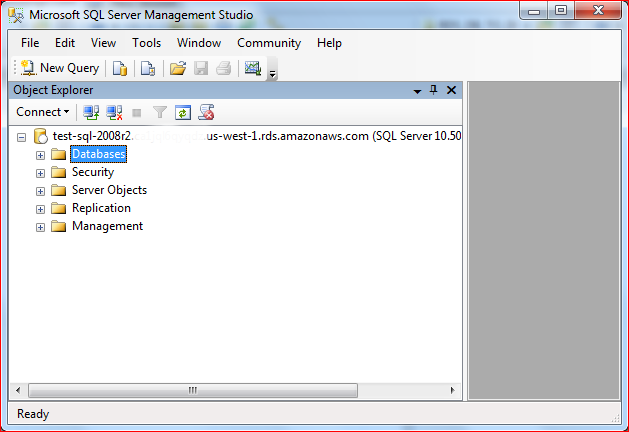

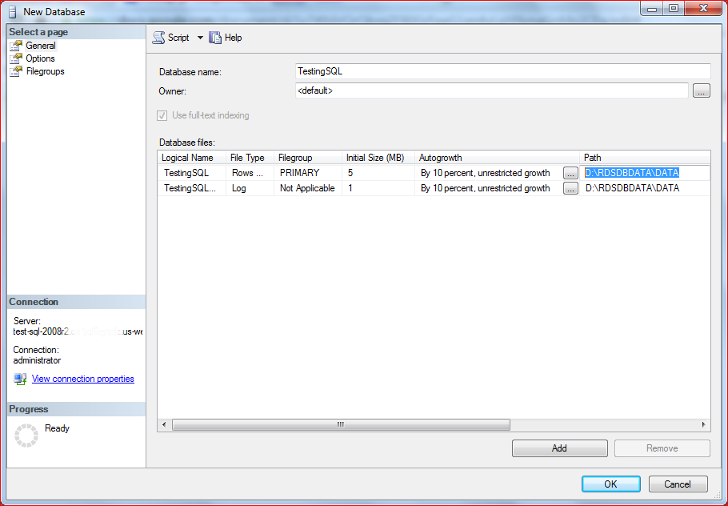

Before we learn how to import/export data, we want to create a database and table.

Select "New Database..." from "Databases":

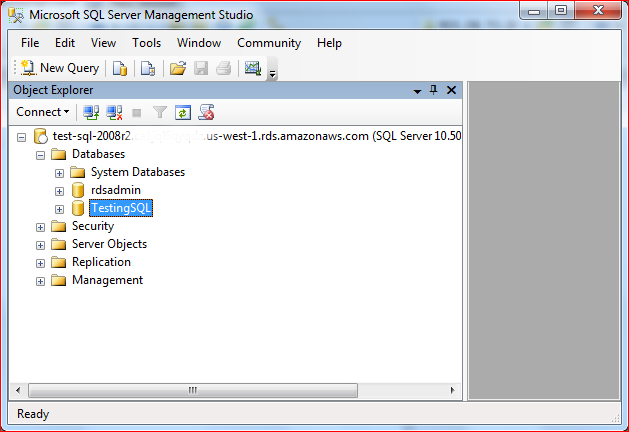

Now we've just created "TestingSQL" database:

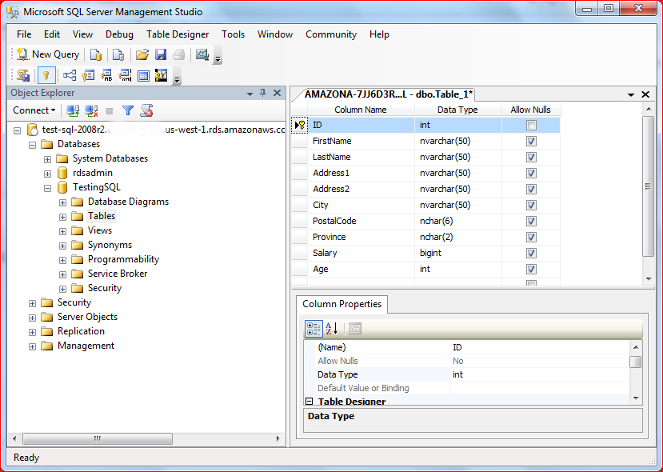

Let's create a table:

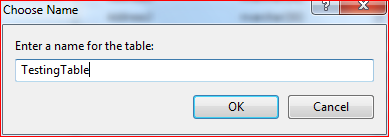

"Save":

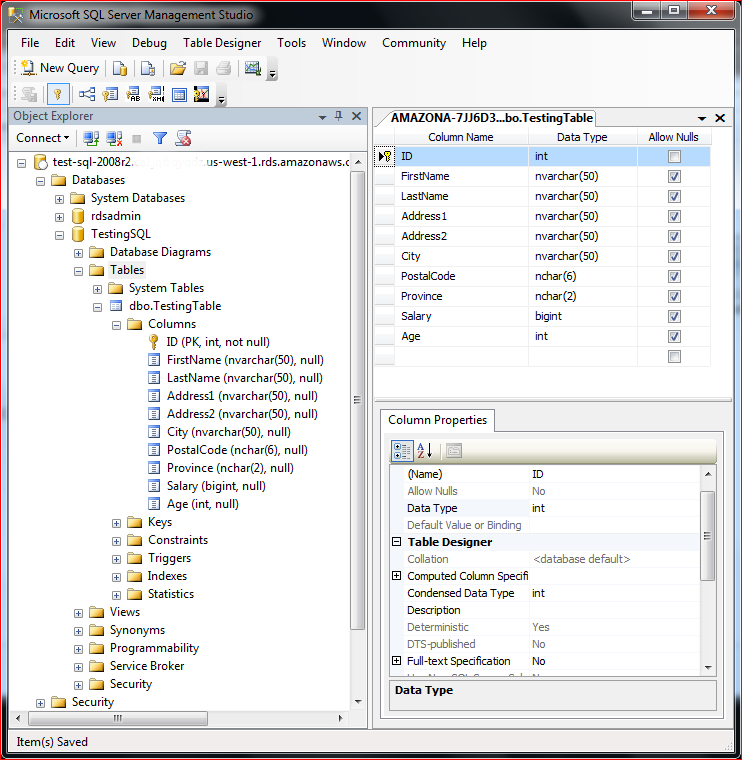

Put some data into the table:

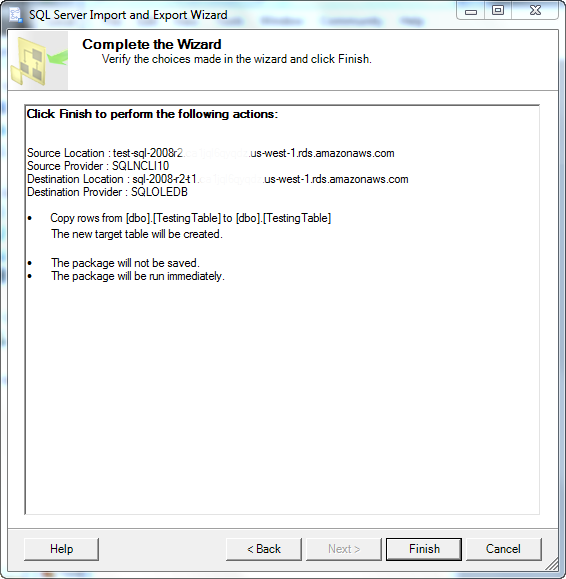

Now we want to export the table to another MS SQL server on RDS. We'll use SQL Server Management Studio (SSMS).

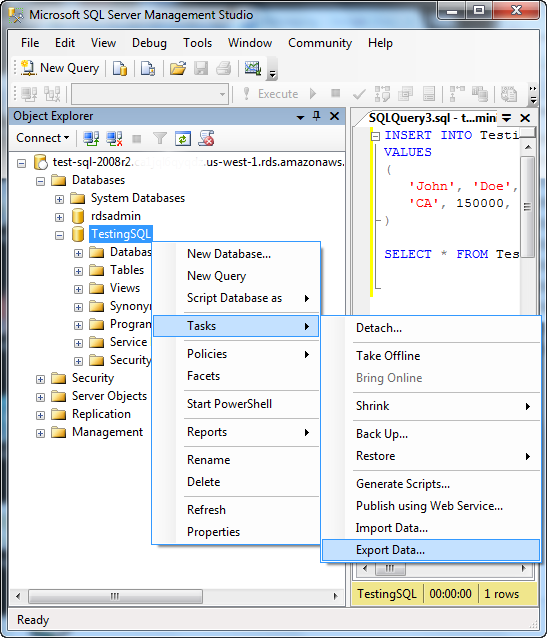

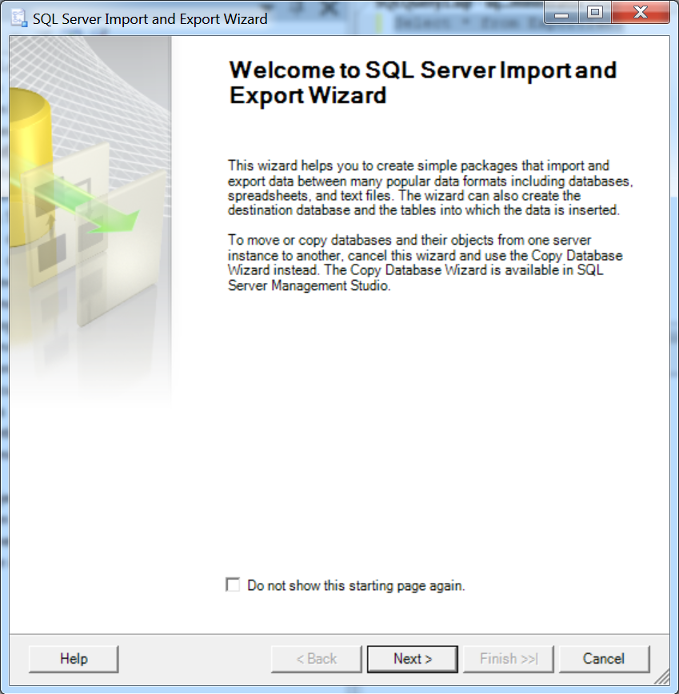

Right click on DB name => Tasks => Export Data...:

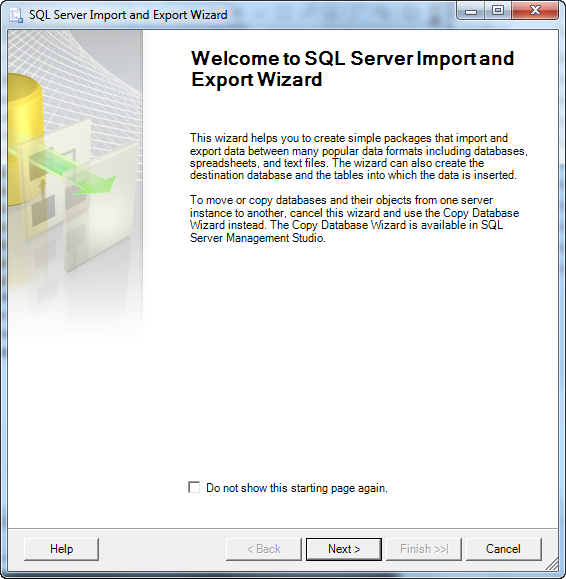

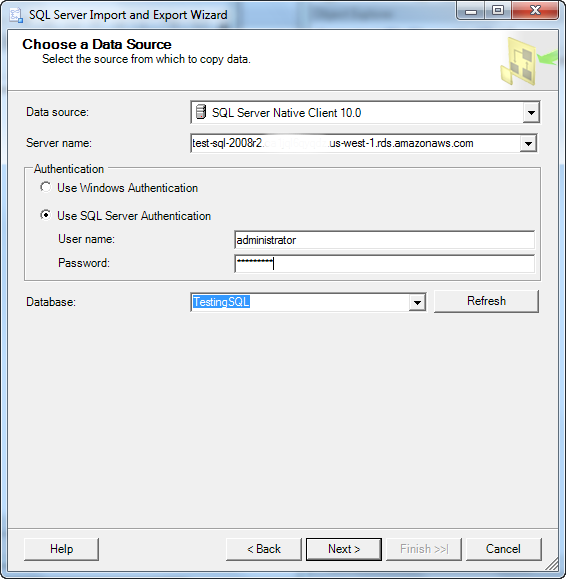

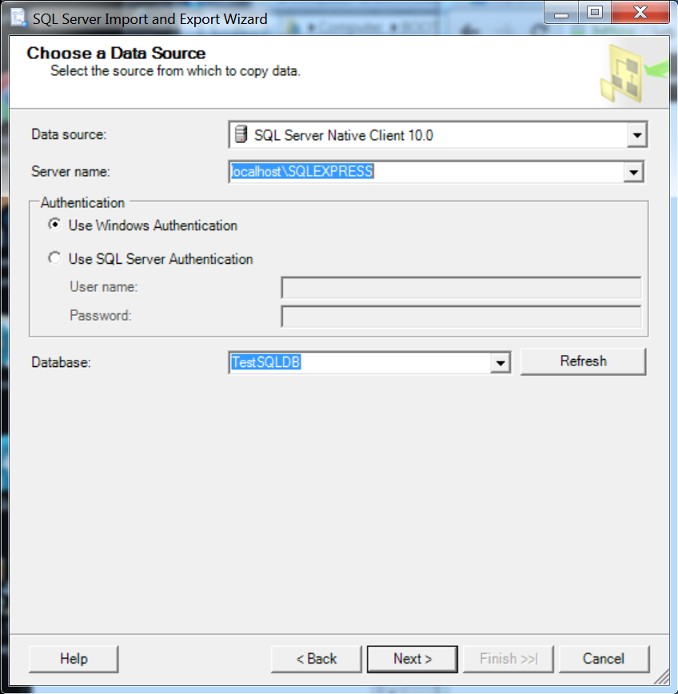

Click Next. Then, in the following dialog, we need to specify the data source:

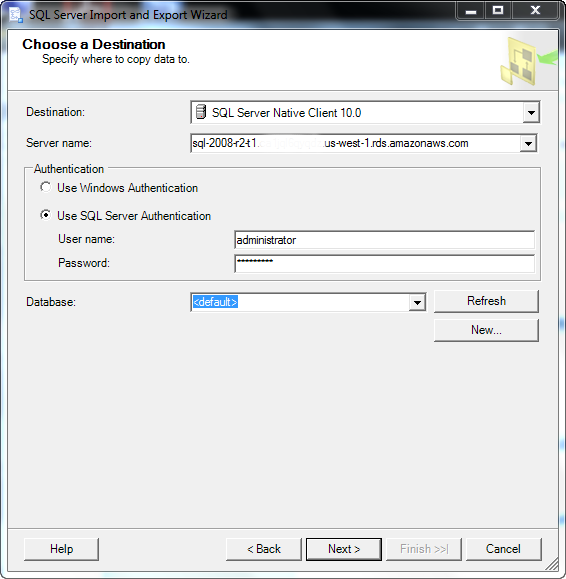

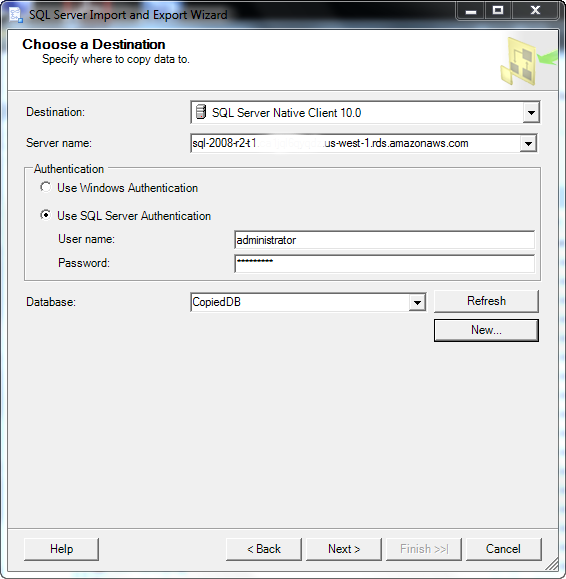

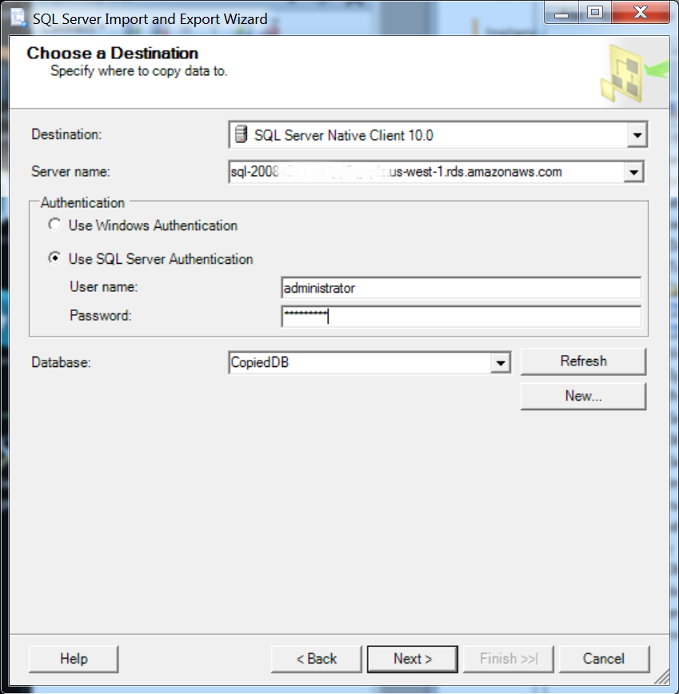

Then, target (destination):

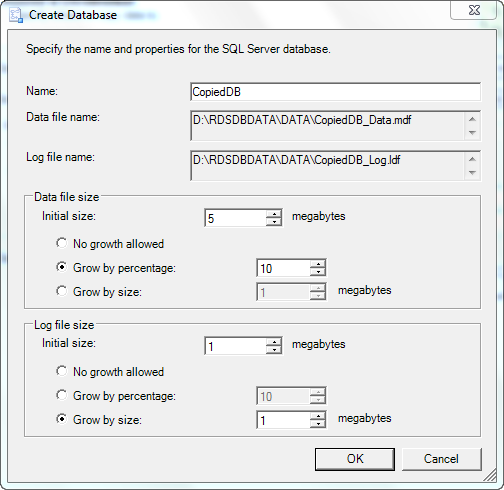

To create a new database in the destination SQL server, click New...:

Notice the wizard inserted the new database name:

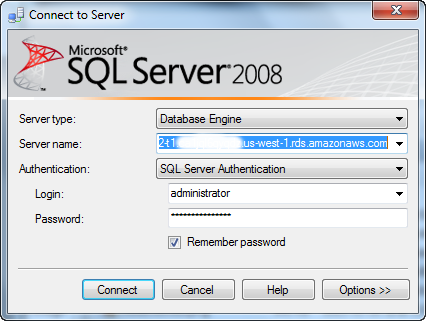

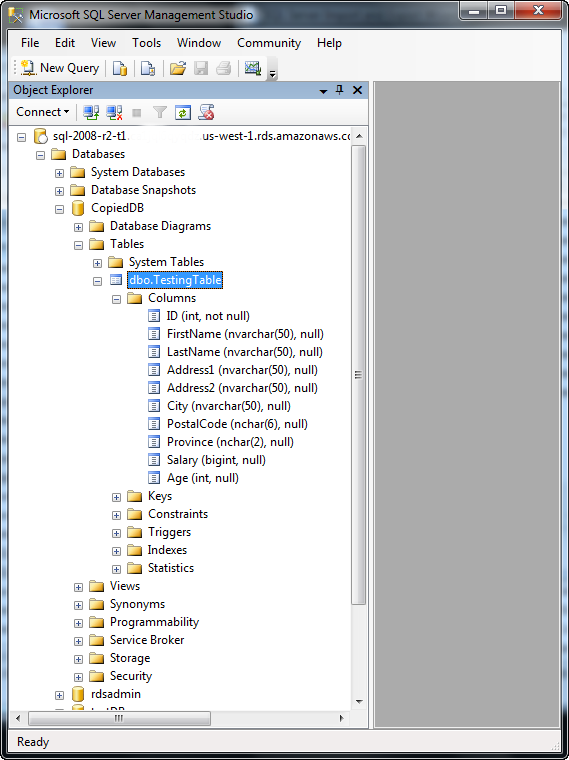

Now, let's open our destination SQL server on RDS:

We can see the target DB, "CopiedDB". It has been successfully exported from the source:

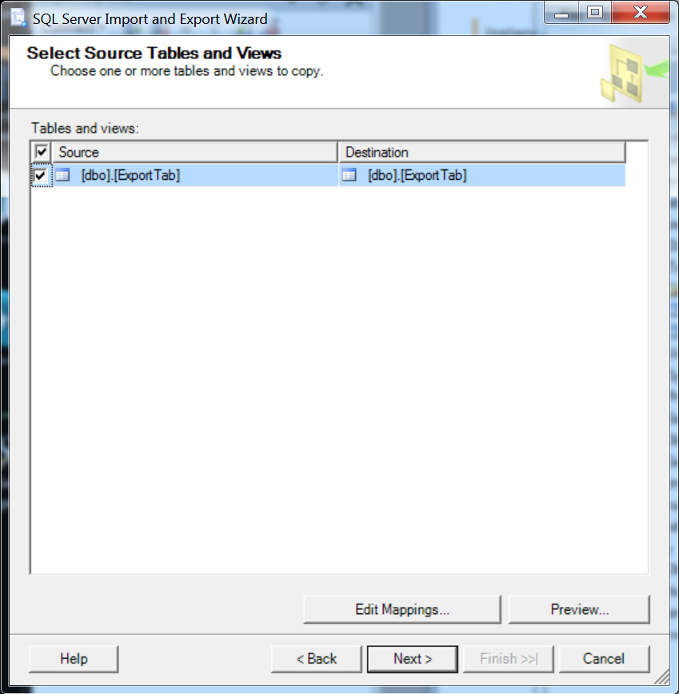

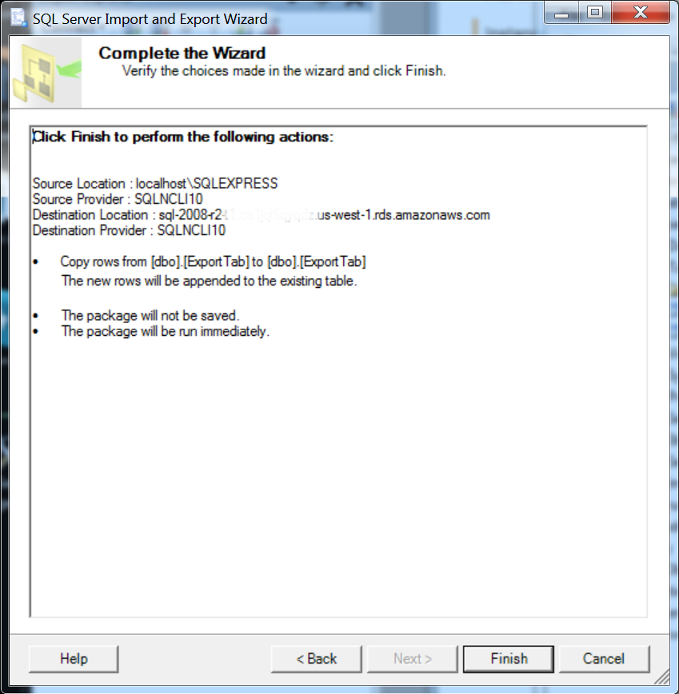

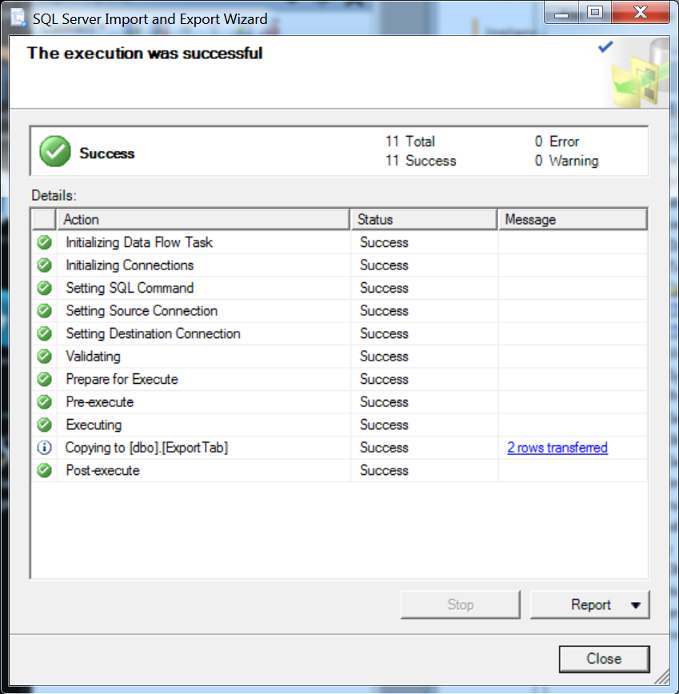

The process is almost the same as exporting from RDS to RDS:

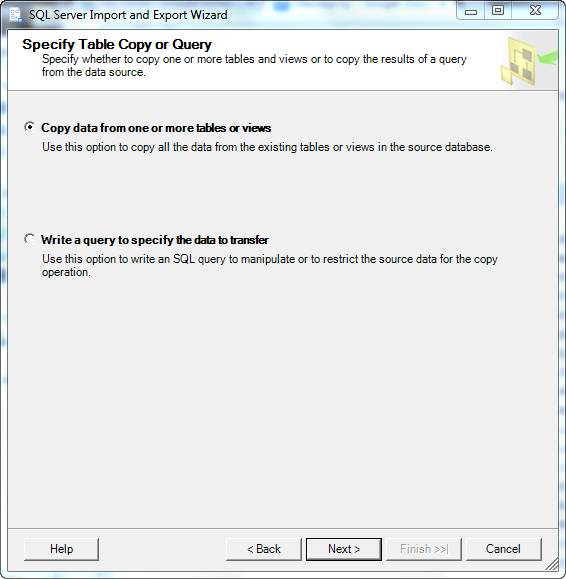

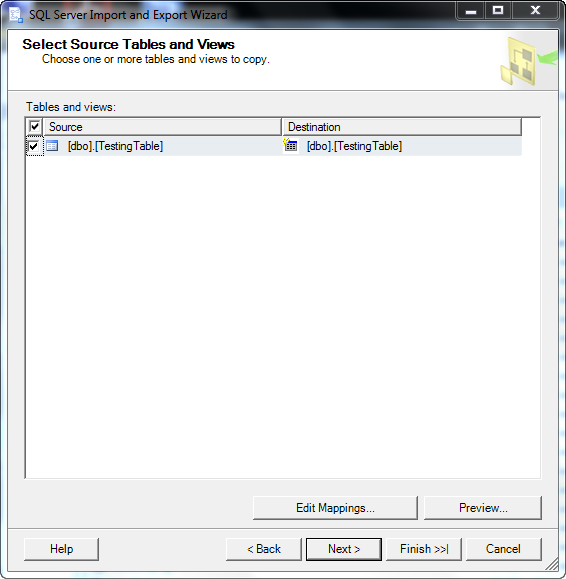

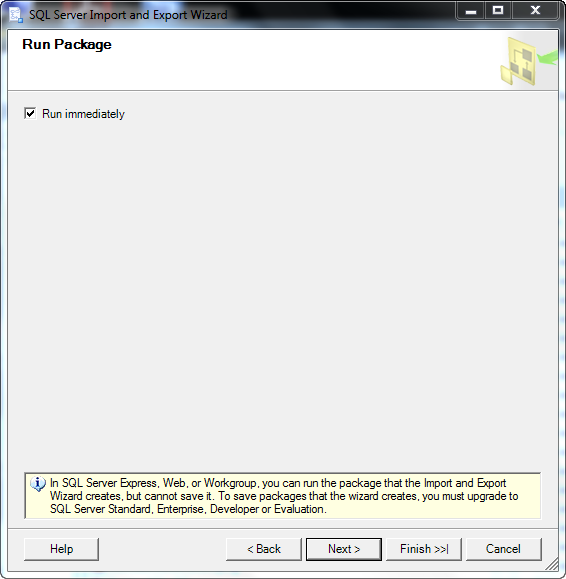

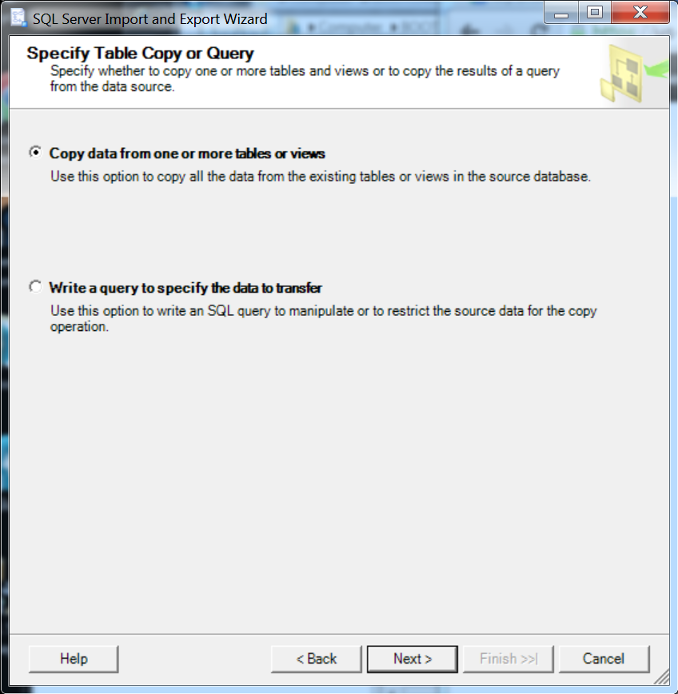

The Import and Export Wizard creates a special Integration Services package, which we can use to copy data from our local SQL Server database to the destination DB Instance. The wizard can filter which tables and even which tuples within a table are copied to the destination DB Instance.

Note:

The Import and Export Wizard works well for large datasets, but it may not be the fastest way to remotely export data from our local deployment. For an even faster way, we may want to consider the SQL Server bulk copy feature.

The SQL Server bulk copy feature is an efficient means of copying data from a source database to our DB Instance. Bulk copy writes the data that we specify to a data file, such as an ASCII file. We can then run bulk copy again to write the contents of the file to the destination DB Instance.

This section uses the bcp utility, which is included with all editions of SQL Server.

From the command line on a machine with SQL Server installed, type bcp and press Enter.:

C:\>bcp ?

usage: bcp {dbtable | query} {in | out | queryout | format} datafile

[-m maxerrors] [-f formatfile] [-e errfile]

[-F firstrow] [-L lastrow] [-b batchsize]

[-n native type] [-c character type] [-w wide character type]

[-N keep non-text native] [-V file format version] [-q quoted identifier]

[-C code page specifier] [-t field terminator] [-r row terminator]

[-i inputfile] [-o outfile] [-a packetsize]

[-S server name] [-U username] [-P password]

[-T trusted connection] [-v version] [-R regional enable]

[-k keep null values] [-E keep identity values]

[-h "load hints"] [-x generate xml format file]

[-d database name] [-K application intent]

C:\>

Data file download from codeplex.

On Query pane of SQL Server Management Studio, here are the scripts to create and populate a table:

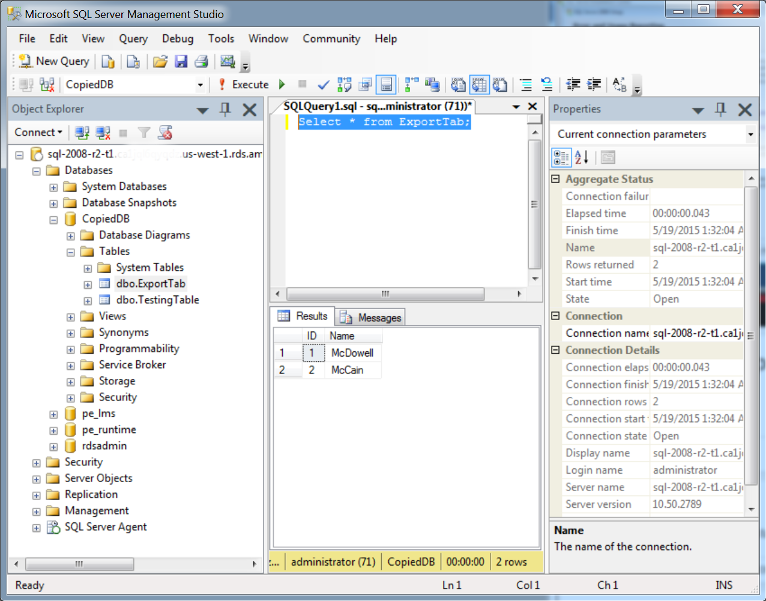

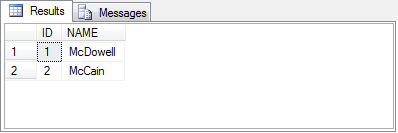

Create TABLE ExportTab(ID INT, Name varchar(20)) INSERT INTO ExportTab VALUES(1,'McDowell'), (2, 'McCain') select * from ExportTab

Execute one by one, and then, on command window, we use bcp to push data out:

PS C:\> bcp LocalSQLDB.dbo.ExportTab out ExportTab.txt -S localhost\SQLEXPRESS -c -T Starting copy... 2 rows copied. Network packet size (bytes): 4096 Clock Time (ms.) Total : 16 Average : (125.00 rows per sec.)

The "ExportTab.txt" looks like this:

1 McDowell 2 McCain

First, we need to drop the table, and then recreate the table:

DROP TABLE ExportTab; CREATE TABLE ExportTab(ID INT, NAME VARCHAR(10));

We can check there is no data in the table:

Select * from ExportTab;

Then, we use the bcp in reverse by using "in" instated of "out":

PS C:\> bcp LocalSQLDB.dbo.ExportTab in ExportTab.txt -S localhost\SQLEXPRESS -c -T Starting copy... 2 rows copied. Network packet size (bytes): 4096 Clock Time (ms.) Total : 250 Average : (8.00 rows per sec.)

Let's see if the data is back:

Select * from ExportTab;

Now we see there is our data back into the table:

We can use System administrator (sa) instead of Windows authentication.

The system administrator (sa) is a special login provided for backward compatibility. By default, it is assigned to the sysadmin fixed server role and cannot be changed. Although sa is a built-in administrator login, do not use it routinely.

PS C:\> bcp LocalSQLDB.dbo.ExportTab out ExportTab.txt -S localhost\SQLEXPRESS -U SAlogin -P password -c -T Starting copy... 2 rows copied. Network packet size (bytes): 4096 Clock Time (ms.) Total : 1 Average : (2000.00 rows per sec.)

After we generate the data file, if we have created the database and schema on the target DB Instance, we can upload the data to our DB Instance by using a similar command. In this case, we will use the in argument to specify an input file instead of out to specify an output file. Instead of using localhost to specify the local SQL Server instance, we will specify the endpoint of our DB Instance. If we use a port other than 1433, we will specify that, too. The user name and password will be those of the master user and password for our DB Instance. The syntax is as follows:

bcp dbname.schema_name.table_name in C:\table_name.txt -n -S endpoint,port -U master_user_name -P master_user_password -b 10000

In our case, the real bcp command looks like this:

bcp TestDB.dbo.ExportTab in C:\ExportTab.txt -n -S sql-2008-r2-t1.ca1jql6qyqdz.us-west-1.rds.amazonaws.com -U administrator -P Hodoo2015 -b 10000

AWS (Amazon Web Services)

- AWS : EKS (Elastic Container Service for Kubernetes)

- AWS : Creating a snapshot (cloning an image)

- AWS : Attaching Amazon EBS volume to an instance

- AWS : Adding swap space to an attached volume via mkswap and swapon

- AWS : Creating an EC2 instance and attaching Amazon EBS volume to the instance using Python boto module with User data

- AWS : Creating an instance to a new region by copying an AMI

- AWS : S3 (Simple Storage Service) 1

- AWS : S3 (Simple Storage Service) 2 - Creating and Deleting a Bucket

- AWS : S3 (Simple Storage Service) 3 - Bucket Versioning

- AWS : S3 (Simple Storage Service) 4 - Uploading a large file

- AWS : S3 (Simple Storage Service) 5 - Uploading folders/files recursively

- AWS : S3 (Simple Storage Service) 6 - Bucket Policy for File/Folder View/Download

- AWS : S3 (Simple Storage Service) 7 - How to Copy or Move Objects from one region to another

- AWS : S3 (Simple Storage Service) 8 - Archiving S3 Data to Glacier

- AWS : Creating a CloudFront distribution with an Amazon S3 origin

- AWS : Creating VPC with CloudFormation

- AWS : WAF (Web Application Firewall) with preconfigured CloudFormation template and Web ACL for CloudFront distribution

- AWS : CloudWatch & Logs with Lambda Function / S3

- AWS : Lambda Serverless Computing with EC2, CloudWatch Alarm, SNS

- AWS : Lambda and SNS - cross account

- AWS : CLI (Command Line Interface)

- AWS : CLI (ECS with ALB & autoscaling)

- AWS : ECS with cloudformation and json task definition

- AWS Application Load Balancer (ALB) and ECS with Flask app

- AWS : Load Balancing with HAProxy (High Availability Proxy)

- AWS : VirtualBox on EC2

- AWS : NTP setup on EC2

- AWS: jq with AWS

- AWS & OpenSSL : Creating / Installing a Server SSL Certificate

- AWS : OpenVPN Access Server 2 Install

- AWS : VPC (Virtual Private Cloud) 1 - netmask, subnets, default gateway, and CIDR

- AWS : VPC (Virtual Private Cloud) 2 - VPC Wizard

- AWS : VPC (Virtual Private Cloud) 3 - VPC Wizard with NAT

- DevOps / Sys Admin Q & A (VI) - AWS VPC setup (public/private subnets with NAT)

- AWS - OpenVPN Protocols : PPTP, L2TP/IPsec, and OpenVPN

- AWS : Autoscaling group (ASG)

- AWS : Setting up Autoscaling Alarms and Notifications via CLI and Cloudformation

- AWS : Adding a SSH User Account on Linux Instance

- AWS : Windows Servers - Remote Desktop Connections using RDP

- AWS : Scheduled stopping and starting an instance - python & cron

- AWS : Detecting stopped instance and sending an alert email using Mandrill smtp

- AWS : Elastic Beanstalk with NodeJS

- AWS : Elastic Beanstalk Inplace/Rolling Blue/Green Deploy

- AWS : Identity and Access Management (IAM) Roles for Amazon EC2

- AWS : Identity and Access Management (IAM) Policies, sts AssumeRole, and delegate access across AWS accounts

- AWS : Identity and Access Management (IAM) sts assume role via aws cli2

- AWS : Creating IAM Roles and associating them with EC2 Instances in CloudFormation

- AWS Identity and Access Management (IAM) Roles, SSO(Single Sign On), SAML(Security Assertion Markup Language), IdP(identity provider), STS(Security Token Service), and ADFS(Active Directory Federation Services)

- AWS : Amazon Route 53

- AWS : Amazon Route 53 - DNS (Domain Name Server) setup

- AWS : Amazon Route 53 - subdomain setup and virtual host on Nginx

- AWS Amazon Route 53 : Private Hosted Zone

- AWS : SNS (Simple Notification Service) example with ELB and CloudWatch

- AWS : Lambda with AWS CloudTrail

- AWS : SQS (Simple Queue Service) with NodeJS and AWS SDK

- AWS : Redshift data warehouse

- AWS : CloudFormation

- AWS : CloudFormation Bootstrap UserData/Metadata

- AWS : CloudFormation - Creating an ASG with rolling update

- AWS : Cloudformation Cross-stack reference

- AWS : OpsWorks

- AWS : Network Load Balancer (NLB) with Autoscaling group (ASG)

- AWS CodeDeploy : Deploy an Application from GitHub

- AWS EC2 Container Service (ECS)

- AWS EC2 Container Service (ECS) II

- AWS Hello World Lambda Function

- AWS Lambda Function Q & A

- AWS Node.js Lambda Function & API Gateway

- AWS API Gateway endpoint invoking Lambda function

- AWS API Gateway invoking Lambda function with Terraform

- AWS API Gateway invoking Lambda function with Terraform - Lambda Container

- Amazon Kinesis Streams

- AWS: Kinesis Data Firehose with Lambda and ElasticSearch

- Amazon DynamoDB

- Amazon DynamoDB with Lambda and CloudWatch

- Loading DynamoDB stream to AWS Elasticsearch service with Lambda

- Amazon ML (Machine Learning)

- Simple Systems Manager (SSM)

- AWS : RDS Connecting to a DB Instance Running the SQL Server Database Engine

- AWS : RDS Importing and Exporting SQL Server Data

- AWS : RDS PostgreSQL & pgAdmin III

- AWS : RDS PostgreSQL 2 - Creating/Deleting a Table

- AWS : MySQL Replication : Master-slave

- AWS : MySQL backup & restore

- AWS RDS : Cross-Region Read Replicas for MySQL and Snapshots for PostgreSQL

- AWS : Restoring Postgres on EC2 instance from S3 backup

- AWS : Q & A

- AWS : Security

- AWS : Security groups vs. network ACLs

- AWS : Scaling-Up

- AWS : Networking

- AWS : Single Sign-on (SSO) with Okta

- AWS : JIT (Just-in-Time) with Okta

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization