Docker & Kubernetes - Ingress controller on AWS with Kops

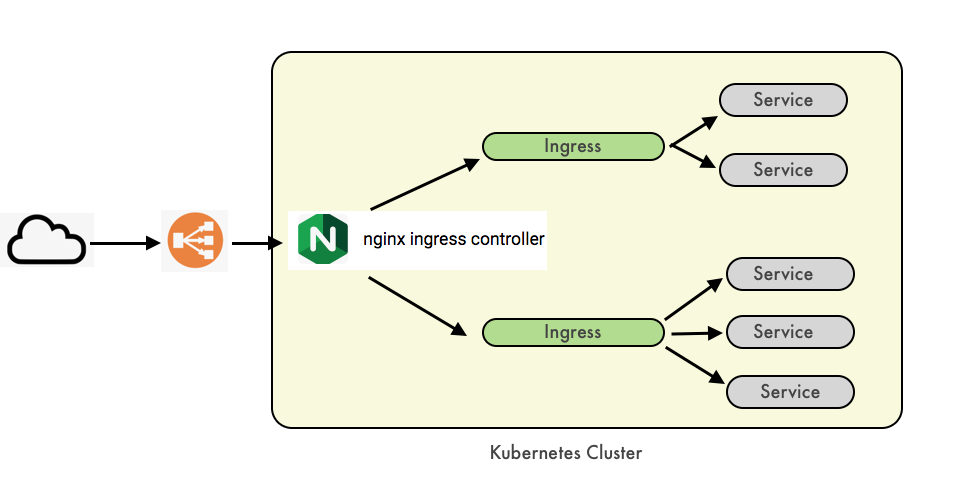

In this post, we'll use kops to create a Kubernetes cluster on AWS and set up an Ingress controller on it.

Then we'll deploy a demo service and expose it through an Ingress rule.

- Create an S3 bucket to store our cluster's state:

$ aws s3 mb s3://clusters.dev.pykey.com

- Export KOPS_STATE_STORE=s3://clusters.dev.pykey.com so that kops uses this location by default:

$ export KOPS_STATE_STORE=s3://clusters.dev.pykey.com
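The kops documentation recommends enabling versioning on the state bucket so that an earlier cluster state can be recovered. The following is a minimal sketch that wraps the two steps above and adds versioning. The function names state_store_url and setup_state_store are hypothetical helpers, not part of kops or the AWS CLI.

```shell
#!/bin/sh
# Hypothetical helpers (not part of kops or the AWS CLI).

# Build the s3:// URL that kops expects in KOPS_STATE_STORE.
state_store_url() {
  printf 's3://%s\n' "$1"
}

# Create the bucket, turn on versioning, and export KOPS_STATE_STORE.
setup_state_store() {
  bucket="$1"
  aws s3 mb "$(state_store_url "$bucket")" || return 1
  aws s3api put-bucket-versioning \
    --bucket "$bucket" \
    --versioning-configuration Status=Enabled || return 1
  KOPS_STATE_STORE="$(state_store_url "$bucket")"
  export KOPS_STATE_STORE
}

# Usage (requires configured AWS credentials):
#   setup_state_store clusters.dev.pykey.com
```

Keeping the URL construction in one place means the bucket name is typed only once, which avoids a mismatch between the bucket that was created and the one kops reads.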

- Create a cluster configuration. The following command does NOT create any cloud resources; it only writes the configuration, which gives us a chance to review or change it first:

$ kops create cluster --zones=us-east-1a,us-east-1b,us-east-1c useast1.dev.pykey.com

The kops create cluster command creates the configuration for our cluster (the default configuration has 1 master and 2 worker nodes). Note that it only creates the configuration; it does not create the cloud resources. We'll do that in a later step with kops update cluster. It also prints commands we can use to explore further:

- List clusters with kops get cluster:

$ kops get cluster --state s3://clusters.dev.pykey.com
NAME                    CLOUD   ZONES
useast1.dev.pykey.com   aws     us-east-1a,us-east-1b,us-east-1c

Or, after running export KOPS_STATE_STORE=s3://clusters.dev.pykey.com:

$ kops get cluster
NAME                    CLOUD   ZONES
useast1.dev.pykey.com   aws     us-east-1a,us-east-1b,us-east-1c

- Edit this cluster with kops edit cluster useast1.dev.pykey.com:

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: kops.k8s.io/v1alpha2
kind: Cluster
metadata:
  creationTimestamp: "2020-09-03T21:24:06Z"
  name: useast1.dev.pykey.com
spec:
  api:
    dns: {}
  authorization:
    rbac: {}
  channel: stable
  cloudProvider: aws
  configBase: s3://clusters.dev.pykey.com/useast1.dev.pykey.com
  containerRuntime: docker
  etcdClusters:
  - cpuRequest: 200m
    etcdMembers:
    - instanceGroup: master-us-east-1a
      name: a
    memoryRequest: 100Mi
    name: main
  - cpuRequest: 100m
    etcdMembers:
    - instanceGroup: master-us-east-1a
      name: a
    memoryRequest: 100Mi
    name: events
  iam:
    allowContainerRegistry: true
    legacy: false
  kubelet:
    anonymousAuth: false
  kubernetesApiAccess:
  - 0.0.0.0/0
  kubernetesVersion: 1.18.8
  masterInternalName: api.internal.useast1.dev.pykey.com
  masterPublicName: api.useast1.dev.pykey.com
  networkCIDR: 172.20.0.0/16
  networking:
    kubenet: {}
  nonMasqueradeCIDR: 100.64.0.0/10
  sshAccess:
  - 0.0.0.0/0
  subnets:
  - cidr: 172.20.32.0/19
    name: us-east-1a
    type: Public
    zone: us-east-1a
  - cidr: 172.20.64.0/19
    name: us-east-1b
    type: Public
    zone: us-east-1b
  - cidr: 172.20.96.0/19
    name: us-east-1c
    type: Public
    zone: us-east-1c
  topology:
    dns:
      type: Public
    masters: public
    nodes: public

- Edit the node instance group with kops edit ig --name=useast1.dev.pykey.com nodes:

apiVersion: kops.k8s.io/v1alpha2
kind: InstanceGroup
metadata:
  creationTimestamp: "2020-09-01T23:17:45Z"
  labels:
    kops.k8s.io/cluster: useast1.dev.pykey.com
  name: nodes
spec:
  image: 099720109477/ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-20200716
  machineType: t3.medium
  maxSize: 2
  minSize: 2
  nodeLabels:
    kops.k8s.io/instancegroup: nodes
  role: Node
  subnets:
  - us-east-1a
  - us-east-1b
  - us-east-1c

Modify it to use a smaller instance type and a single node:

  machineType: t3.medium
  maxSize: 2
  minSize: 2

=>

  machineType: t2.nano
  maxSize: 1
  minSize: 1

- Edit the master instance group with kops edit ig --name=useast1.dev.pykey.com master-us-east-1a:

apiVersion: kops.k8s.io/v1alpha2
kind: InstanceGroup
metadata:
  creationTimestamp: "2020-09-03T21:24:06Z"
  labels:
    kops.k8s.io/cluster: useast1.dev.pykey.com
  name: master-us-east-1a
spec:
  image: 099720109477/ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-20200716
  machineType: t3.medium
  maxSize: 1
  minSize: 1
  nodeLabels:
    kops.k8s.io/instancegroup: master-us-east-1a
  role: Master
  subnets:
  - us-east-1a
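As an alternative to editing the instance groups interactively, the same sizes can be passed as flags when the configuration is created. This is a sketch under the assumption that the --node-count, --node-size, and --master-size flags of kops create cluster behave as in the kops version used here; the wrapper name create_small_cluster is hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper: create the cluster configuration with the small
# instance sizes chosen above, so no interactive edit is needed.
#   $1 = cluster name, $2 = comma-separated list of zones
create_small_cluster() {
  name="$1"
  zones="$2"
  kops create cluster \
    --zones="$zones" \
    --node-count=1 \
    --node-size=t2.nano \
    --master-size=t3.medium \
    "$name"
}

# Usage (KOPS_STATE_STORE must be exported first):
#   create_small_cluster useast1.dev.pykey.com us-east-1a,us-east-1b,us-east-1c
```

Like the plain kops create cluster call, this only writes the configuration to the state store; nothing is created in AWS until kops update cluster is run with --yes.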

- Finally, configure the cluster with kops update cluster --name useast1.dev.pykey.com --yes (see the next item).

- Run kops update cluster without --yes to preview what it is going to do to our cluster in AWS:

$ kops update cluster useast1.dev.pykey.com
...

Now we'll use the same command, kops update cluster, but with --yes to actually create the cluster:

$ kops update cluster useast1.dev.pykey.com --yes

- Once the cluster is ready, let's validate it:

$ kops validate cluster
Using cluster from kubectl context: useast1.dev.pykey.com

Validating cluster useast1.dev.pykey.com

INSTANCE GROUPS
NAME                ROLE     MACHINETYPE   MIN   MAX   SUBNETS
master-us-east-1a   Master   t3.medium     1     1     us-east-1a
nodes               Node     t2.nano       1     1     us-east-1a,us-east-1b,us-east-1c

NODE STATUS
NAME                            ROLE     READY
ip-172-20-45-183.ec2.internal   master   True
ip-172-20-95-112.ec2.internal   node     True

Your cluster useast1.dev.pykey.com is ready
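Validation usually fails for the first few minutes while the instances boot and register, so it is convenient to poll. The following is a minimal sketch of a generic retry loop; retry_until is a hypothetical helper, and the attempt count and delay in the usage note are arbitrary values, not recommendations from kops.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds or attempts run out.
#   $1 = maximum attempts, $2 = seconds between attempts, rest = command
retry_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed" >&2
    # Sleep only if another attempt will follow.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage: poll for roughly 10 minutes (30 attempts, 20 s apart).
#   retry_until 30 20 kops validate cluster
```

The function returns the success of the last attempt, so it can gate the following steps in a script, for example with retry_until ... && kubectl get nodes.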

- List nodes:

$ kubectl get nodes
NAME                            STATUS   ROLES    AGE     VERSION
ip-172-20-45-183.ec2.internal   Ready    master   11m     v1.18.8
ip-172-20-95-112.ec2.internal   Ready    node     9m57s   v1.18.8

Next, let's deploy the base components of the NGINX Ingress controller, which are required on all Kubernetes cluster types. They are defined in the following manifest, https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.0/deploy/static/mandatory.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:master
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown

---

apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - default:
    min:
      memory: 90Mi
      cpu: 100m
    type: Container

To deploy it, first preview the result with a dry run, then apply the manifest:

$ kubectl apply --dry-run -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.0/deploy/static/mandatory.yaml
W0914 12:49:01.360238   33193 helpers.go:535] --dry-run is deprecated and can be replaced with --dry-run=client.
namespace/ingress-nginx created (dry run)
configmap/nginx-configuration created (dry run)
configmap/tcp-services created (dry run)
configmap/udp-services created (dry run)
serviceaccount/nginx-ingress-serviceaccount created (dry run)
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created (dry run)
role.rbac.authorization.k8s.io/nginx-ingress-role created (dry run)
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created (dry run)
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created (dry run)
deployment.apps/nginx-ingress-controller created (dry run)
limitrange/ingress-nginx created (dry run)

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.0/deploy/static/mandatory.yaml
namespace/ingress-nginx created
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-role created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.apps/nginx-ingress-controller created
limitrange/ingress-nginx created
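The deprecation warning in the dry-run output suggests --dry-run=client as the replacement flag. The preview-then-apply pattern can be sketched as a small helper; apply_with_preview is a hypothetical name, and the sketch assumes kubectl 1.18 or later, where --dry-run=client is available.

```shell
#!/bin/sh
# Hypothetical helper: preview a manifest client-side, then apply it.
#   $1 = path or URL of the manifest
apply_with_preview() {
  manifest="$1"
  # --dry-run=client replaces the deprecated bare --dry-run flag.
  kubectl apply --dry-run=client -f "$manifest" || return 1
  kubectl apply -f "$manifest"
}

# Usage:
#   apply_with_preview https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.0/deploy/static/mandatory.yaml
```

If the dry run fails, for example because the manifest cannot be parsed, the real apply is skipped.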

This manifest makes up the bulk of the Ingress setup: it creates the namespace, the ConfigMaps, the RBAC objects, the controller Deployment, and a LimitRange in the Kubernetes cluster.

We can watch the controller becoming available:

$ kubectl get pods --all-namespaces
NAMESPACE       NAME                                                    READY   STATUS    RESTARTS   AGE
ingress-nginx   nginx-ingress-controller-7fcb6cffc5-llmtb               1/1     Running   0          3m1s
kube-system     dns-controller-78f77977f-rt8bg                          1/1     Running   0          19m
kube-system     etcd-manager-events-ip-172-20-45-183.ec2.internal       1/1     Running   0          19m
kube-system     etcd-manager-main-ip-172-20-45-183.ec2.internal         1/1     Running   0          18m
kube-system     kops-controller-tckrf                                   1/1     Running   0          18m
kube-system     kube-apiserver-ip-172-20-45-183.ec2.internal            2/2     Running   0          18m
kube-system     kube-controller-manager-ip-172-20-45-183.ec2.internal   1/1     Running   0          19m
kube-system     kube-dns-64f86fb8dd-8xt5z                               3/3     Running   0          17m
kube-system     kube-dns-64f86fb8dd-p5s4v                               3/3     Running   0          19m
kube-system     kube-dns-autoscaler-cd7778b7b-n7pdm                     1/1     Running   0          19m
kube-system     kube-proxy-ip-172-20-45-183.ec2.internal                1/1     Running   0          19m
kube-system     kube-proxy-ip-172-20-95-112.ec2.internal                1/1     Running   0          17m
kube-system     kube-scheduler-ip-172-20-45-183.ec2.internal            1/1     Running   0          19m
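Instead of listing the pods repeatedly, we can block until the controller Deployment has finished rolling out. This is a minimal sketch using kubectl rollout status; the helper name wait_for_ingress_controller and the default timeout of 120s are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: block until the controller Deployment has rolled out.
#   $1 = timeout passed to kubectl (defaults to 120s)
wait_for_ingress_controller() {
  timeout="${1:-120s}"
  kubectl rollout status deployment/nginx-ingress-controller \
    --namespace ingress-nginx \
    --timeout="$timeout"
}

# Usage:
#   wait_for_ingress_controller        # wait up to 120s
#   wait_for_ingress_controller 5m     # wait up to 5 minutes
```

The command exits with a non-zero status if the rollout does not complete within the timeout, so it can be used as a gate in a script.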

The only missing piece is how the Ingress Controller is exposed. In the generic case, this is done with a service of type LoadBalancer, which is the last step in deploying the Ingress Controller:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.0/deploy/static/provider/cloud-generic.yaml
service/ingress-nginx created

Now the LoadBalancer service is deployed and will receive an external address. On AWS this is the DNS name of an ELB rather than a plain IP.

It might take a moment for the external address to be requested and assigned to our service. To check it, inspect the service in the ingress-nginx namespace:

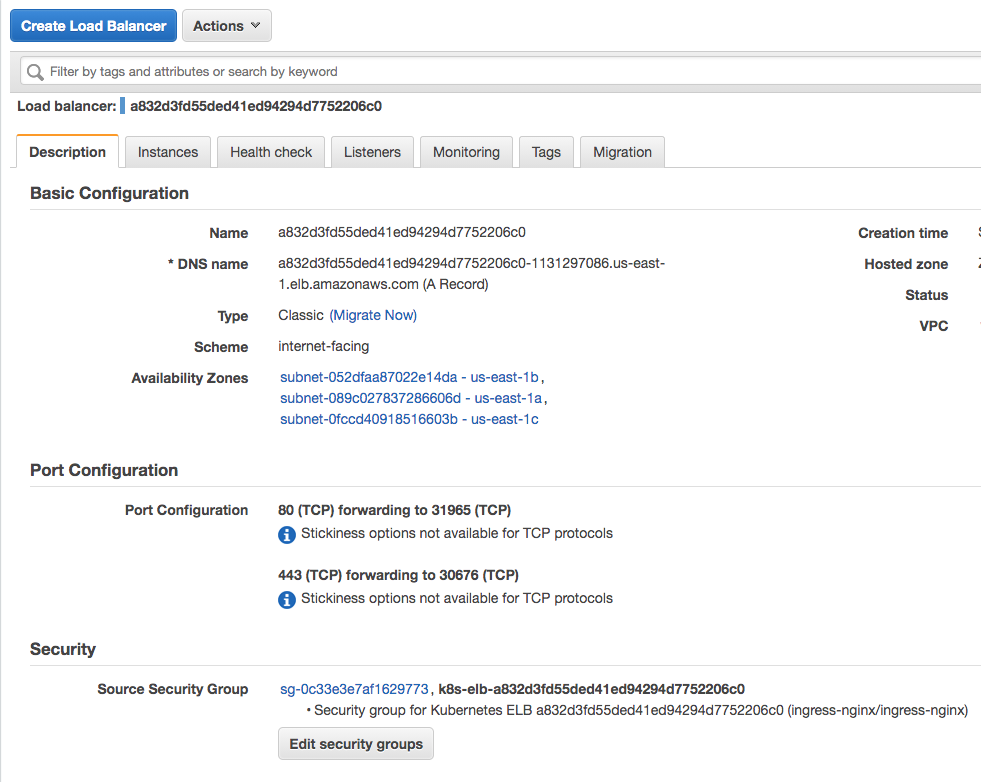

$ kubectl get service -n ingress-nginx
NAME            TYPE           CLUSTER-IP       EXTERNAL-IP                                                               PORT(S)                      AGE
ingress-nginx   LoadBalancer   100.64.222.123   a832d3fd55ded41ed94294d7752206c0-1131297086.us-east-1.elb.amazonaws.com   80:31965/TCP,443:30676/TCP   82s

Once the EXTERNAL-IP is assigned, the NGINX Ingress Controller is ready to serve.
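To use the load balancer address in a script rather than copying it from the table, it can be read with a jsonpath query. This is a sketch; lb_hostname is a hypothetical helper, and it assumes the address is published under the hostname field, as is the case for the ELB shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the load balancer hostname of a Service.
#   $1 = service name, $2 = namespace (defaults to "default")
lb_hostname() {
  kubectl get service "$1" \
    --namespace "${2:-default}" \
    --output jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Usage:
#   host="$(lb_hostname ingress-nginx ingress-nginx)"
#   curl -s -o /dev/null -w '%{http_code}\n' "http://$host/"
```

The function prints an empty string while the address is still pending, so a caller may want to retry until the output is non-empty.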

Next, we define a demo application, a Deployment and a Service, in demo-deploy.yaml:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: "nginxdemos/hello"
---
kind: Service
apiVersion: v1
metadata:
  name: nginx-demo
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30008

Deploy the pod and the service:

$ kubectl apply -f demo-deploy.yaml
deployment.apps/nginx created
service/nginx-demo created

$ kubectl get svc
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP                                                              PORT(S)        AGE
kubernetes   ClusterIP      100.64.0.1      <none>                                                                   443/TCP        121m
nginx-demo   LoadBalancer   100.71.139.63   a077ecbc0ac8249c99a1d7d139839d45-517528641.us-east-1.elb.amazonaws.com   80:30008/TCP   10m

The service named nginx-demo should be made available under the domain "dev.pykey.com". This would require an Ingress resource definition like the following:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-test
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path:
        backend:
          serviceName: nginx-demo
          servicePort: 80

Deploy the Ingress rule:

$ kubectl apply -f ingress.yaml
ingress.extensions/ingress-test created
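The rule above has no host entry, so it matches requests for any host name. To restrict it to a single domain, a host field can be added to the rule. The following sketch writes such a manifest; it assumes the networking.k8s.io/v1beta1 API group, which is served by the Kubernetes 1.18 cluster used here, and write_host_ingress and the output file name are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write an Ingress manifest that routes one host to
# one Service. Function name and output file name are illustrative.
#   $1 = host, $2 = service name, $3 = service port, $4 = output file
write_host_ingress() {
  cat > "$4" <<EOF
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-test
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: $1
    http:
      paths:
      - path: /
        backend:
          serviceName: $2
          servicePort: $3
EOF
}

# Usage:
#   write_host_ingress dev.pykey.com nginx-demo 80 ingress-host.yaml
#   kubectl apply -f ingress-host.yaml
```

With a host rule in place, a request only reaches nginx-demo if its Host header matches, which can be checked before DNS is set up with curl -H 'Host: dev.pykey.com' against the load balancer address of the ingress-nginx service.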

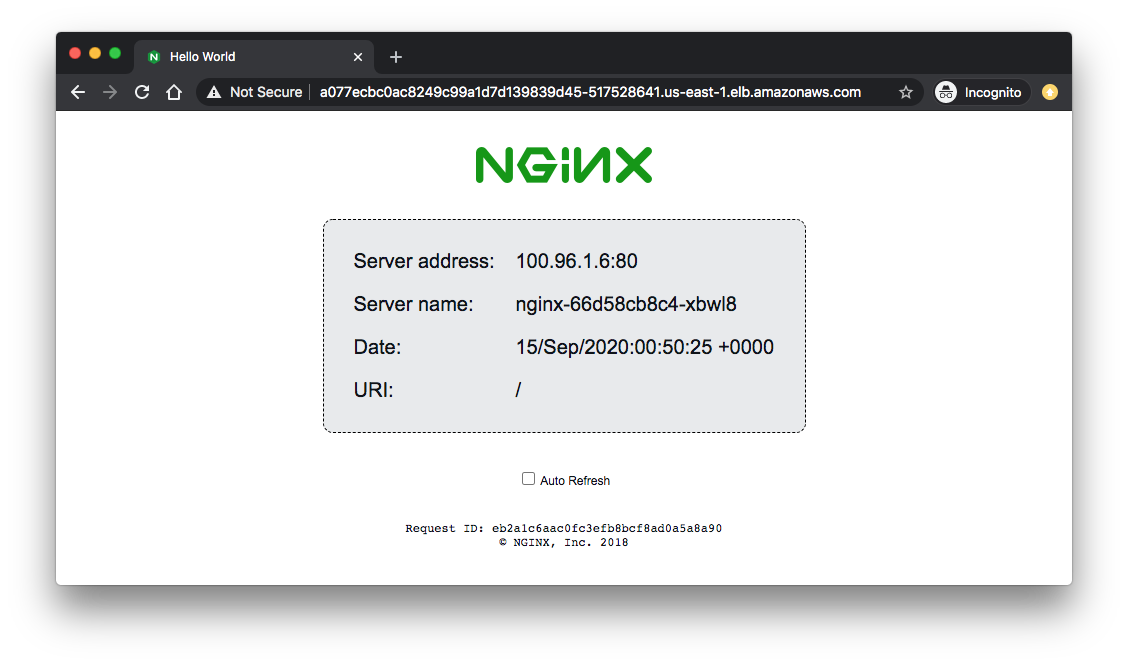

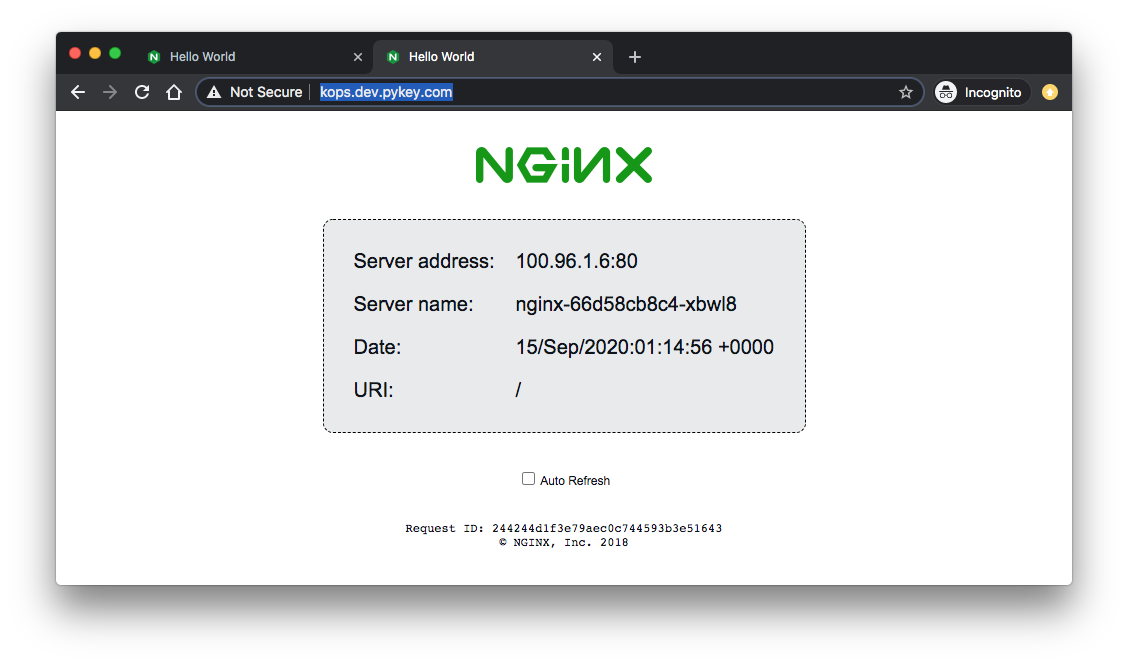

Copy the EXTERNAL-IP of the nginx-demo service into the browser to verify:

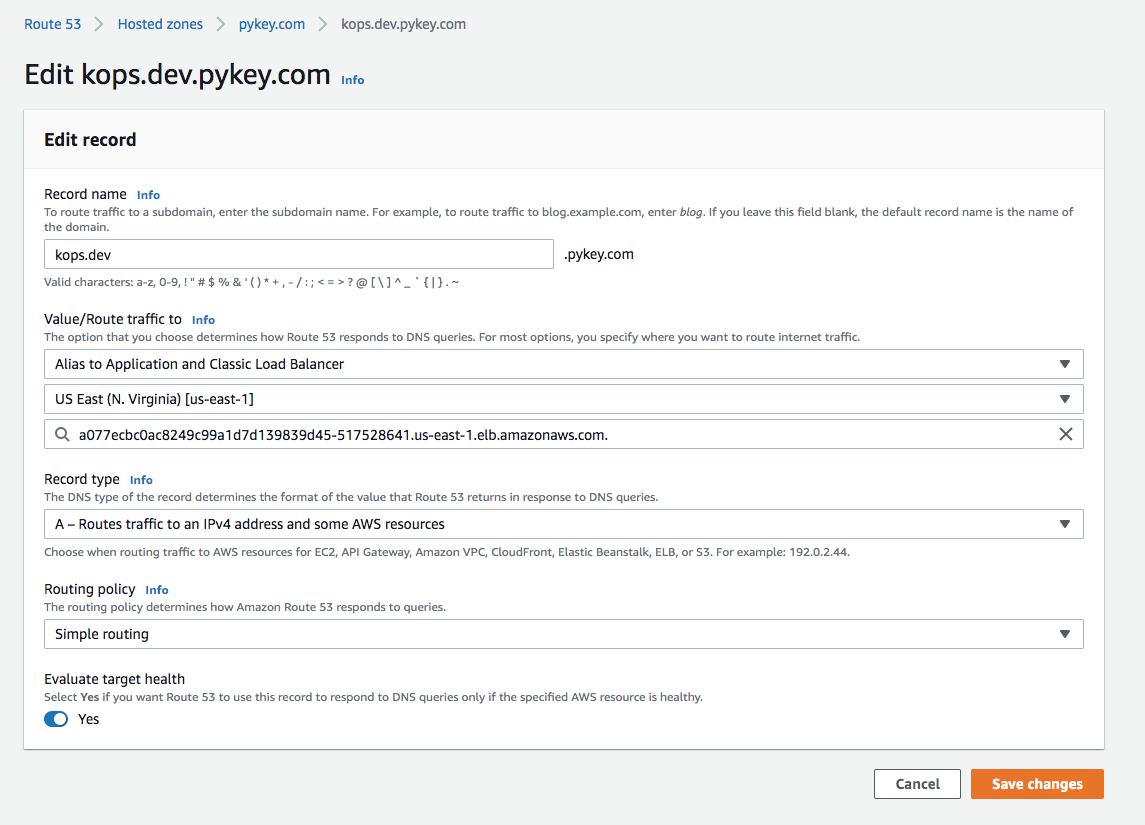

To use a domain name instead of the LB address, we define a new record in Route53:
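One way to create such a record from the command line is a CNAME that points at the load balancer's DNS name. This is a sketch only: cname_change_batch is a hypothetical helper, the record name and ZONE_ID in the usage note are placeholders, and a CNAME cannot be used at the apex of a hosted zone (an alias record is needed there instead).

```shell
#!/bin/sh
# Hypothetical helper: print a Route 53 change batch that upserts a CNAME.
#   $1 = record name, $2 = target hostname (for example the ELB address)
cname_change_batch() {
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "$2" }]
      }
    }
  ]
}
EOF
}

# Usage (ZONE_ID and the record name are placeholders):
#   cname_change_batch app.dev.pykey.com "$elb_host" > change.json
#   aws route53 change-resource-record-sets \
#     --hosted-zone-id "$ZONE_ID" --change-batch file://change.json
```

UPSERT creates the record if it does not exist and updates it otherwise, so the command can be re-run when the load balancer address changes.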

Now, we can use the domain name:

To delete our cluster:

$ kops delete cluster useast1.dev.pykey.com --yes

Delete S3 bucket:

$ aws s3 rb s3://clusters.dev.pykey.com

