Install and Configure Mesos Cluster

We will install Mesos on Ubuntu from the Mesosphere package repositories. These up-to-date official repositories connect directly to the native package management tools of our Linux distribution.

Add the Mesosphere repositories to the sources list.

First, we need to download the Mesosphere project's key from the Ubuntu keyserver and then set the correct URL for our Ubuntu release:

# Setup

$ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv E56151BF

$ DISTRO=$(lsb_release -is | tr '[:upper:]' '[:lower:]')

$ CODENAME=$(lsb_release -cs)

# Add the repository

$ echo "deb http://repos.mesosphere.com/${DISTRO} ${CODENAME} main" | sudo tee /etc/apt/sources.list.d/mesosphere.list

$ sudo apt-get -y update

$ sudo apt-get -y install mesos marathon
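Before writing the repository file, it can help to preview the line that the commands above produce. The following is a minimal sketch; the function name mesosphere_repo_line is our own, and the trusty codename in the example is only an assumption about the release in use:

```shell
# Print the APT source line for the Mesosphere repository.
# Arguments: distro ID (any case) and release codename.
# mesosphere_repo_line is a hypothetical helper, not part of any package.
mesosphere_repo_line() {
    distro=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    codename=$2
    printf 'deb http://repos.mesosphere.com/%s %s main\n' "$distro" "$codename"
}

# Example: preview the line for an Ubuntu host whose codename is "trusty".
mesosphere_repo_line Ubuntu trusty
```

On a real host we would pass in the output of lsb_release -is and lsb_release -cs instead of the literal values.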

For master hosts, we need the mesosphere meta package. This includes the zookeeper, mesos, marathon, and chronos applications:

$ sudo apt-get install mesosphere

For slave hosts, we only need the mesos package, which also pulls in zookeeper as a dependency:

$ sudo apt-get install mesos

The Mesosphere package ships with a default configuration, outlined below:

- /etc/default/mesos: sets the master and slave log dir to /var/log/mesos
- /etc/default/mesos-master: sets port to 5050 and sets zk to the value in the file /etc/mesos/zk
- /etc/default/mesos-slave: sets master as the value of /etc/mesos/zk
- /etc/mesos/zk: sets the ZooKeeper instance to zk://localhost:2181/mesos
- /etc/mesos-master/work_dir: sets working_dir to /var/lib/mesos
- /etc/mesos-master/quorum: sets quorum to 1

This default configuration allows Mesos to start immediately on a single node.

Let's configure our zookeeper connection info (/etc/mesos/zk).

This allows all of our hosts to connect to the correct master servers.

The default value points to a single local ZooKeeper instance:

zk://localhost:2181/mesos

To point to our three master servers, we need to replace localhost with their IP addresses:

zk://172.17.0.1:2181,172.17.0.2:2181,172.17.0.3:2181/mesos

Do the same on all of our masters and slaves. This lets each individual server connect to the correct master servers to communicate with the cluster.
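Since the same string has to be written on every node, we could generate it from the list of master IPs instead of typing it by hand. This is only a sketch: build_zk_url is a hypothetical helper, and it assumes every master uses the client port 2181 shown above:

```shell
# Build a ZooKeeper connection URL from a znode path and a list of hosts.
# Usage: build_zk_url /mesos host1 host2 ...
# build_zk_url is a hypothetical helper written for this walkthrough.
build_zk_url() {
    znode=$1
    shift
    hosts=""
    for host in "$@"; do
        # Join host:port pairs with commas; 2181 is assumed for every host.
        hosts="${hosts:+$hosts,}${host}:2181"
    done
    printf 'zk://%s%s\n' "$hosts" "$znode"
}

build_zk_url /mesos 172.17.0.1 172.17.0.2 172.17.0.3
```

The output can then be piped to sudo tee /etc/mesos/zk on each node.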

Define a unique ID number, from 1 to 255, for each of our master servers (/etc/zookeeper/conf/myid).

Our first server will just have this in the file:

1
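One way to keep the IDs consistent is to derive each one from the server's position in the ordered list of masters. The sketch below assumes that convention; zk_myid_for is a hypothetical helper, and nothing in ZooKeeper requires IDs to follow list order:

```shell
# Print the 1-based position of an IP in the ordered master list.
# Usage: zk_myid_for <this-ip> <master1> <master2> ...
# zk_myid_for is a hypothetical helper written for this walkthrough.
zk_myid_for() {
    self=$1
    shift
    id=1
    for master in "$@"; do
        if [ "$master" = "$self" ]; then
            printf '%s\n' "$id"
            return 0
        fi
        id=$((id + 1))
    done
    # The IP was not found, so report the problem and fail.
    echo "IP $self is not in the master list" >&2
    return 1
}

# Second master in the list, so this prints 2.
zk_myid_for 172.17.0.2 172.17.0.1 172.17.0.2 172.17.0.3
```

The printed number is what would go into /etc/zookeeper/conf/myid on that server.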

Next, we need to modify our zookeeper configuration file to map our zookeeper IDs to actual hosts. This will ensure that the service can correctly resolve each host from the ID system that it uses.

Append the following values to /etc/zookeeper/conf/zoo.cfg on each node:

server.1=172.17.0.1:2888:3888

server.2=172.17.0.2:2888:3888

server.3=172.17.0.3:2888:3888

The first port is used by followers to connect to the leader. The second one is used for leader election.

Do the same mappings for all master servers' configuration files.
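Because the mapping lines follow a fixed pattern, they could be generated from the same ordered list of master IPs. A minimal sketch, assuming the ports 2888 and 3888 used above; zk_server_lines is a hypothetical helper:

```shell
# Print one "server.N=host:2888:3888" line per master, numbering from 1.
# zk_server_lines is a hypothetical helper written for this walkthrough.
zk_server_lines() {
    id=1
    for host in "$@"; do
        printf 'server.%s=%s:2888:3888\n' "$id" "$host"
        id=$((id + 1))
    done
}

zk_server_lines 172.17.0.1 172.17.0.2 172.17.0.3
```

Appending the output to /etc/zookeeper/conf/zoo.cfg (for example with sudo tee -a) gives every master an identical mapping.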

Now, configure Mesos on the three master servers (/etc/mesos-master/quorum).

Let's adjust the quorum necessary to make decisions. This will determine the number of hosts necessary for the cluster to be in a functioning state.

A replicated group of servers in the same application is called a quorum, and in replicated mode, all servers in the quorum have copies of the same configuration file.

The quorum should be set so that over 50 percent of the master members must be present to make decisions. However, we also want to build in some fault tolerance so that the cluster can still function if not all of our masters are present.

We have three masters, so the only setting that satisfies both of these requirements is a quorum of two. Since the initial configuration assumes a single server setup, the quorum is currently set to one.

Change the value from 1 to 2:

2

The quorum should be greater than the number of masters divided by 2. For example, the optimal quorum size for a five-node master cluster is 3. In our case, there are three masters, so the quorum size should be set to 2 on each node.
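The rule above amounts to taking the smallest integer strictly greater than half the number of masters. As a quick sketch of that arithmetic (quorum_size is a hypothetical helper):

```shell
# Smallest integer strictly greater than half the number of masters.
# quorum_size is a hypothetical helper written for this walkthrough.
quorum_size() {
    masters=$1
    # Integer division rounds down, so adding 1 gives a strict majority.
    echo $(( masters / 2 + 1 ))
}

quorum_size 3   # our three-master cluster
quorum_size 5   # the five-node example
```

For three masters this gives 2, and for five masters it gives 3, matching the values in the text.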

Next, we want to configure the hostname and IP address.

For our master servers, the IP address needs to be placed in these files:

/etc/mesos-master/ip

/etc/mesos-master/hostname

Put each server's own IP into both files:

172.17.0.1 (1st server)

172.17.0.2 (2nd server)

172.17.0.3 (3rd server)
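Writing the two files can be scripted. The sketch below uses a hypothetical helper, write_mesos_identity, which takes the target directory as an optional argument so that we can try it against a scratch directory first; writing to the real /etc/mesos-master would require root:

```shell
# Write the same IP into the "ip" and "hostname" files of a Mesos config directory.
# Usage: write_mesos_identity <ip> [config-dir]
# write_mesos_identity is a hypothetical helper written for this walkthrough.
write_mesos_identity() {
    ip=$1
    conf_dir=${2:-/etc/mesos-master}
    mkdir -p "$conf_dir" || return 1
    printf '%s\n' "$ip" > "$conf_dir/ip" || return 1
    cp "$conf_dir/ip" "$conf_dir/hostname"
}

# Dry run against a scratch directory so nothing under /etc is touched.
scratch=$(mktemp -d)
write_mesos_identity 172.17.0.1 "$scratch"
cat "$scratch/ip" "$scratch/hostname"
```

Passing a different directory as the second argument lets the same helper be reused for other Mesos configuration directories.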

Marathon is Mesosphere's clustered init system.

Let's configure Marathon.

Marathon will be running on each of our master hosts, but only the leading master server will be able to actually schedule jobs while the other Marathon instances will transparently proxy requests to the master server.

We need to set the hostname again for each server's Marathon instance:

$ sudo mkdir -p /etc/marathon/conf

$ sudo cp /etc/mesos-master/hostname /etc/marathon/conf/hostname

Next, we need to define the list of zookeeper masters that Marathon connects to for information and scheduling. This is the same zookeeper connection string that we've been using for Mesos, so we can just copy the file. We need to place it in a file called master:

$ sudo cp /etc/mesos/zk /etc/marathon/conf/master

This will allow our Marathon service to connect to the Mesos cluster. However, we also want Marathon to store its own state information in zookeeper. For this, we will use the other zookeeper connection file as a base, and just modify the endpoint.

First, copy the file to the Marathon zookeeper location:

$ sudo cp /etc/marathon/conf/master /etc/marathon/conf/zk

Next, edit the file (/etc/marathon/conf/zk) and change the endpoint from /mesos to /marathon:

zk://172.17.0.1:2181,172.17.0.2:2181,172.17.0.3:2181/marathon
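Instead of editing the file by hand, the endpoint could be swapped with shell parameter expansion. This sketch assumes the URL ends in a single-segment znode path such as /mesos; marathon_zk_url is a hypothetical helper:

```shell
# Replace the trailing znode path of a zk:// URL with /marathon.
# marathon_zk_url is a hypothetical helper written for this walkthrough.
marathon_zk_url() {
    mesos_url=$1
    # Strip everything from the last "/" onward, then append the new znode.
    printf '%s/marathon\n' "${mesos_url%/*}"
}

marathon_zk_url zk://172.17.0.1:2181,172.17.0.2:2181,172.17.0.3:2181/mesos
```

On a master, we could feed it the contents of /etc/mesos/zk and pipe the result to sudo tee /etc/marathon/conf/zk.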

We need to bring each service up on the set of master nodes at roughly the same time.

First, however, we need to make sure that our master servers are only running the Mesos master process, and not the slave process. We can ensure that the server doesn't start the slave process at boot by creating an override file:

$ echo manual | sudo tee /etc/init/mesos-slave.override

Now, all we need to do is restart zookeeper, which will set up our master elections. We can then start our Mesos master and Marathon processes:

$ sudo service zookeeper restart

$ sudo service mesos-master restart

$ sudo service marathon restart

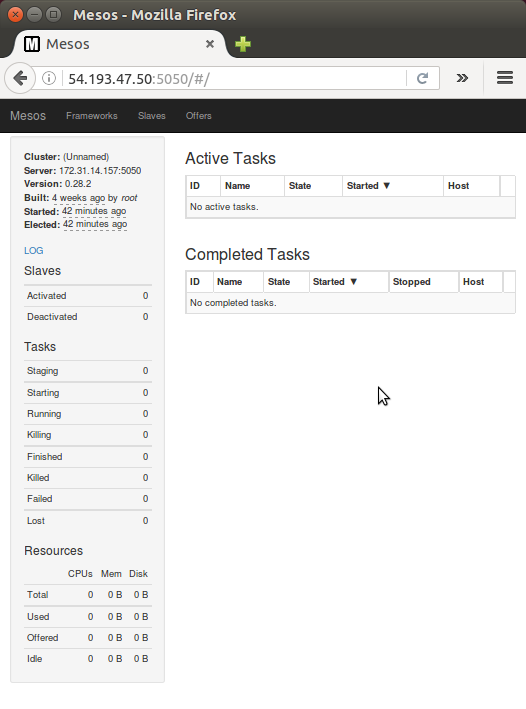

If the packages were installed and configured correctly, we should be able to access the Mesos console at http://<master-ip>:5050:

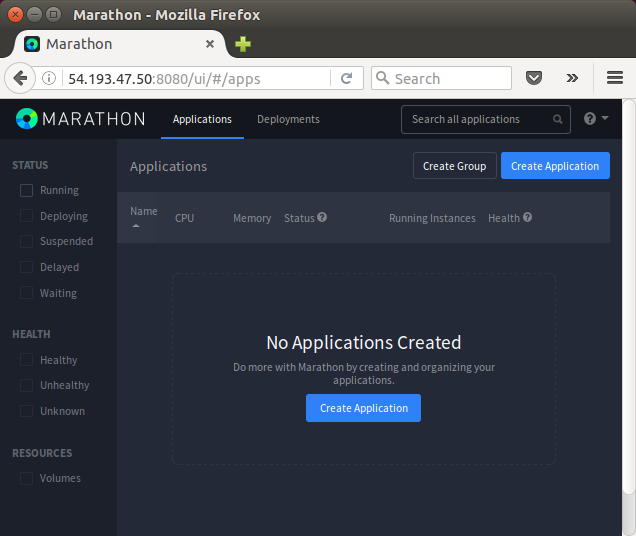

The Marathon console is at http://<master-ip>:8080:

So far we've configured the master servers. Now we want to configure slave servers.

Since the slaves do not need to run their own zookeeper instances, we may want to stop any zookeeper process currently running on our slave nodes and create an override file so that it won't automatically start when the server reboots:

$ sudo service zookeeper stop

$ sudo sh -c "echo manual > /etc/init/zookeeper.override"

Then, we want to create another override file to make sure the Mesos master process doesn't start on our slave servers. We will also ensure that it is stopped currently:

$ sudo service mesos-master stop

$ sudo sh -c "echo manual > /etc/init/mesos-master.override"
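Both override files follow the same pattern: a file named after the job, containing the single word manual. A small sketch of that pattern, using a hypothetical helper, disable_upstart_job, that takes the init directory as an optional argument so it can be tried in a scratch directory before touching /etc/init:

```shell
# Create "<job>.override" containing "manual" so the job is not started at boot.
# Usage: disable_upstart_job <job> [init-dir]
# disable_upstart_job is a hypothetical helper written for this walkthrough.
disable_upstart_job() {
    job=$1
    init_dir=${2:-/etc/init}
    echo manual > "$init_dir/$job.override"
}

# Dry run in a scratch directory; the real /etc/init requires root.
scratch_init=$(mktemp -d)
for name in zookeeper mesos-master; do
    disable_upstart_job "$name" "$scratch_init"
done
ls "$scratch_init"
```

The helper only writes the override file. Stopping a service that is already running still needs the service commands shown above.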

Next, we need to set the IP address and hostname, just as we did for our master servers. This involves putting each node's IP address into a file, this time under the /etc/mesos-slave directory. We will use this as the hostname as well, for easy access to services through the web interface:

$ echo 172.31.9.1 | sudo tee /etc/mesos-slave/ip

$ sudo cp /etc/mesos-slave/ip /etc/mesos-slave/hostname

Again, use each slave server's individual IP address for the first command. This will ensure that it is being bound to the correct interface.

Now, we have all of the pieces in place to start our Mesos slaves. We just need to turn on the service:

$ sudo service mesos-slave restart

Do this on each of our slave machines.

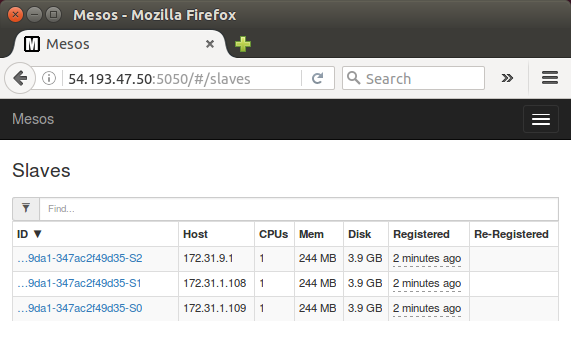

To see whether our slaves are successfully registering themselves in our cluster, go back to one of the master servers at port 5050:

http://54.193.47.50:5050

Click on the "Slaves" link at the top of the interface. This will give us an overview of each machine's resource contribution, as well as links to a page for each slave:

Marathon schedules long-running tasks and acts as a sort of init system for a Mesosphere cluster. It handles starting and stopping services, scheduling tasks, and making sure applications come back up if they go down.

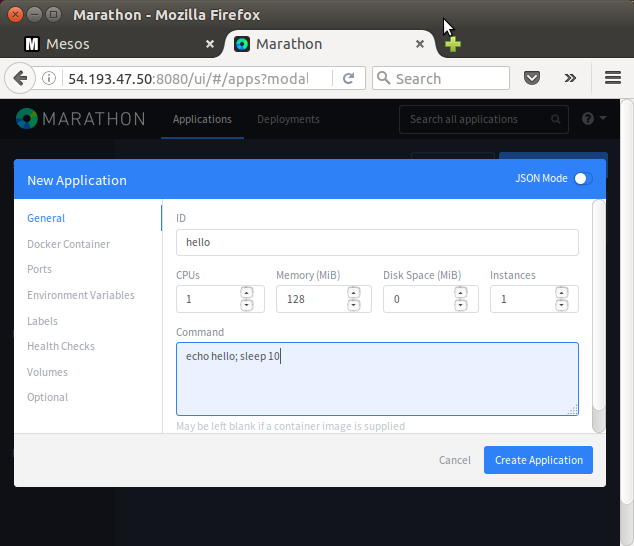

The easiest way of getting a service running on the cluster is to add an application through the Marathon web interface.

Let's visit the Marathon web interface (http://<master-ip>:8080) on the master servers.

Click on the "Create Application" button in the upper-right corner. This will pop up an overlay where we can add information about our new application:

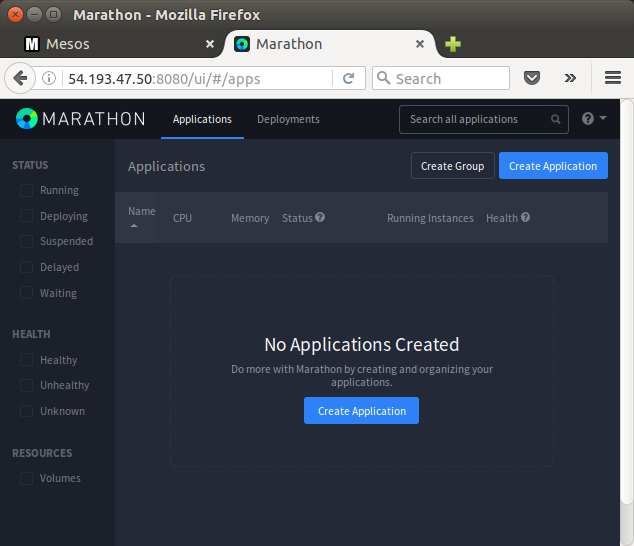

Click "Create Application":
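An application can also be described as JSON and submitted to Marathon's REST API (POST /v2/apps) rather than through the form. The following is only a sketch: marathon_app_json is a hypothetical helper, and the ID, command, and resource values are made-up examples, not the values used in the form above:

```shell
# Print a minimal Marathon application definition as JSON.
# Usage: marathon_app_json <app-id> <command>
# marathon_app_json is a hypothetical helper; the cpus, mem, and instances
# values are illustrative assumptions. The command must not contain double
# quotes, because no JSON escaping is done here.
marathon_app_json() {
    app_id=$1
    app_cmd=$2
    cat <<EOF
{
  "id": "$app_id",
  "cmd": "$app_cmd",
  "cpus": 0.1,
  "mem": 32,
  "instances": 1
}
EOF
}

marathon_app_json hello "echo hello; sleep 10"

# To submit it, pipe the JSON to curl, for example:
#   marathon_app_json hello "echo hello; sleep 10" | \
#     curl -X POST -H "Content-Type: application/json" -d @- http://<master-ip>:8080/v2/apps
```

The curl call is left as a comment because it needs a reachable Marathon instance at <master-ip>.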
