Docker : Envoy - Front Proxy

Designed from the ground up for microservices, Envoy is one of the newest proxies and it's been deployed in production at Lyft, Apple, Salesforce, and Google.

For more information, check Front Proxy.

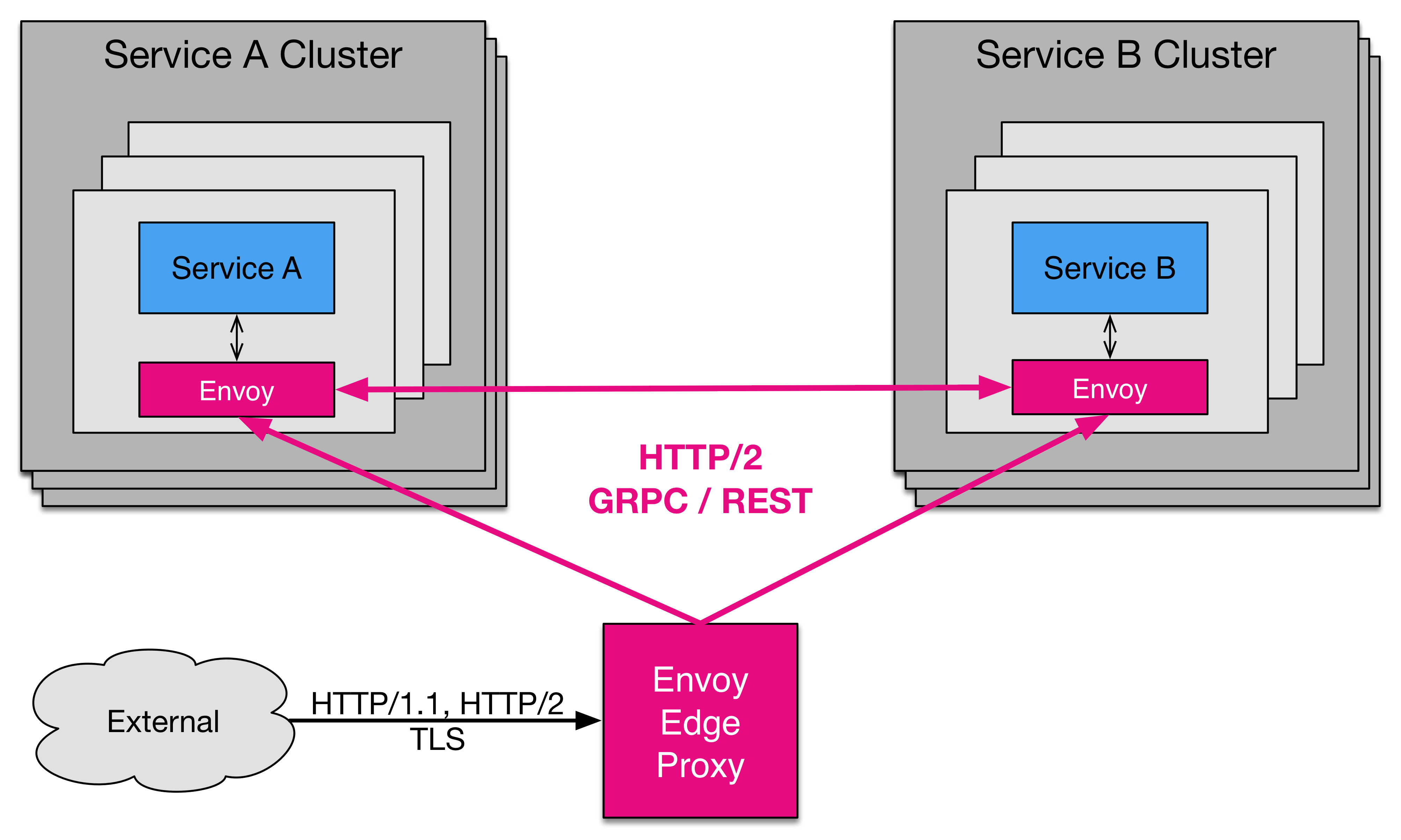

Envoy has the following three deployment models (https://www.learnenvoy.io/):

- Front Proxy: Envoy acts as the primary load balancer for customer requests from the public internet. This deployment style is also called an edge proxy or a load balancer.

- Service Mesh: Envoy acts as the primary load balancer for requests between internal services. By co-locating Envoy with our code, we can let Envoy handle the complexities of the network. This makes service-to-service communication safer and more reliable, while alleviating the need to re-implement this functionality within each service.

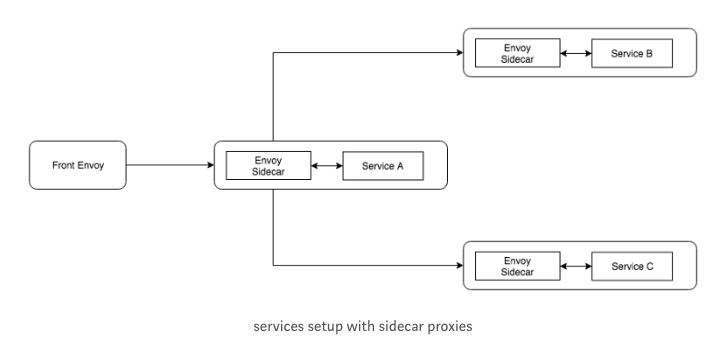

- Sidecar: A basic Service Mesh uses Envoy sidecars to handle outbound traffic for each service instance. This allows Envoy to handle load balancing and resilience strategies for all internal calls, as well as providing a coherent layer for observability. Services are still exposed to the internal network, and all network calls pass through an Envoy on localhost.

From Service Proxying with Matt Klein Holiday Repeat

In practice, we usually end up running a combination of these models.

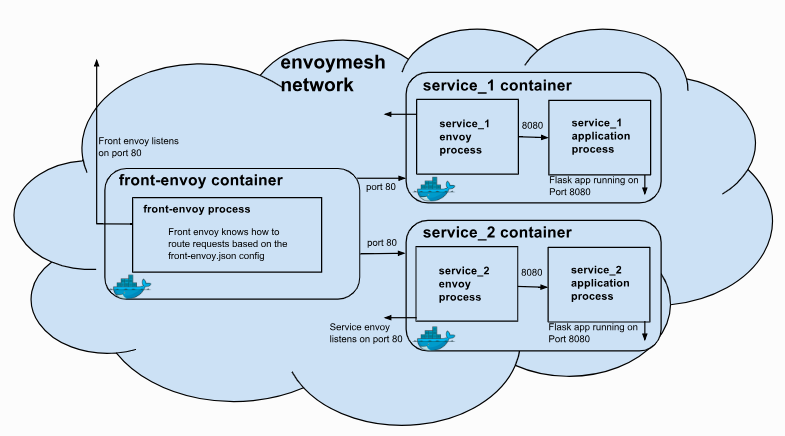

In this post, we'll deploy a front envoy and two services (simple Flask apps), each colocated with a running service envoy. The three containers will be deployed inside a virtual network called envoymesh.

From Front Proxy

The diagram shows our deployment, which is defined by the following envoy/examples/front-proxy/docker-compose.yml:

    version: '2'
    services:

      front-envoy:
        build:
          context: .
          dockerfile: Dockerfile-frontenvoy
        volumes:
          - ./front-envoy.yaml:/etc/front-envoy.yaml
        networks:
          - envoymesh
        expose:
          - "80"
          - "8001"
        ports:
          - "8000:80"
          - "8001:8001"

      service1:
        build:
          context: .
          dockerfile: Dockerfile-service
        volumes:
          - ./service-envoy.yaml:/etc/service-envoy.yaml
        networks:
          envoymesh:
            aliases:
              - service1
        environment:
          - SERVICE_NAME=1
        expose:
          - "80"

      service2:
        build:
          context: .
          dockerfile: Dockerfile-service
        volumes:
          - ./service-envoy.yaml:/etc/service-envoy.yaml
        networks:
          envoymesh:
            aliases:
              - service2
        environment:
          - SERVICE_NAME=2
        expose:
          - "80"

    networks:
      envoymesh: {}

All incoming requests are routed via the front envoy, which acts as a reverse proxy sitting on the edge of the envoymesh network. The docker-compose.yml publishes the front envoy's container port 80 on host port 8000, and its admin port 8001 on host port 8001.

All traffic routed by the front envoy to the service containers is actually routed to the service envoys (the routes are set up in envoy/examples/front-proxy/front-envoy.yaml):

    static_resources:
      listeners:
      - address:
          socket_address:
            address: 0.0.0.0
            port_value: 80
        filter_chains:
        - filters:
          - name: envoy.http_connection_manager
            config:
              codec_type: auto
              stat_prefix: ingress_http
              route_config:
                name: local_route
                virtual_hosts:
                - name: backend
                  domains:
                  - "*"
                  routes:
                  - match:
                      prefix: "/service/1"
                    route:
                      cluster: service1
                  - match:
                      prefix: "/service/2"
                    route:
                      cluster: service2
              http_filters:
              - name: envoy.router
                config: {}
      clusters:
      - name: service1
        connect_timeout: 0.25s
        type: strict_dns
        lb_policy: round_robin
        http2_protocol_options: {}
        hosts:
        - socket_address:
            address: service1
            port_value: 80
      - name: service2
        connect_timeout: 0.25s
        type: strict_dns
        lb_policy: round_robin
        http2_protocol_options: {}
        hosts:
        - socket_address:
            address: service2
            port_value: 80
    admin:
      access_log_path: "/dev/null"
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 8001
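The routing part of this configuration can be pictured with a small Python sketch. It is only an illustration of prefix matching where the first matching route wins, not Envoy's implementation; the `ROUTES` table and the `pick_cluster` helper are hypothetical names that mirror the two routes in front-envoy.yaml.

```python
# Hypothetical route table mirroring the two prefix routes in front-envoy.yaml.
ROUTES = [
    ("/service/1", "service1"),
    ("/service/2", "service2"),
]


def pick_cluster(path, routes=ROUTES):
    """Return the cluster of the first route whose prefix matches, else None."""
    for prefix, cluster in routes:
        if path.startswith(prefix):
            return cluster
    return None  # no route matched; a real proxy would answer 404 here


print(pick_cluster("/service/1"))  # service1
print(pick_cluster("/service/2"))  # service2
print(pick_cluster("/other"))      # None
```

Because the match is a plain prefix test, a path such as /service/10 would also land on service1 in this sketch, which is worth keeping in mind when choosing prefixes.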

In turn, the service envoys route the request to the Flask app via the loopback address (the routes are set up in envoy/examples/front-proxy/service-envoy.yaml).

This setup illustrates the advantage of running service envoys colocated with our services: all requests are handled by the service envoy, and efficiently routed to our services.
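To make the last hop more concrete, here is a rough standard-library stand-in for the app that sits behind each service envoy. It is not the repository's service.py (which uses Flask); the `hello_body`, `Handler`, and `serve` names and the port 8080 default are assumptions for illustration. The response text follows the format seen in the curl output later in this post, and `SERVICE_NAME` is the environment variable set in docker-compose.yml.

```python
import os
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer


def hello_body(service_name, hostname=None):
    """Build the greeting in the same shape as the responses shown in this post."""
    hostname = hostname or socket.gethostname()
    try:
        resolved = socket.gethostbyname(hostname)
    except OSError:
        resolved = "unknown"  # name resolution may fail outside a container network
    return "Hello from behind Envoy (service {})! hostname: {} resolvedhostname: {}\n".format(
        service_name, hostname, resolved
    )


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Only paths under /service/ are answered, mirroring the proxy routes.
        if not self.path.startswith("/service/"):
            self.send_error(404)
            return
        body = hello_body(os.environ.get("SERVICE_NAME", "?")).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Listen on the loopback address only; the port number is an assumption."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` starts the stand-in app. Binding to 127.0.0.1 means only a proxy running in the same container can reach it, which is the point of the colocated service envoy.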

Let's clone the repo with git clone https://github.com/envoyproxy/envoy.git.

    $ cd envoy/examples/front-proxy
    $ ls -1
    Dockerfile-frontenvoy
    Dockerfile-service
    README.md
    deprecated_v1
    docker-compose.yml
    front-envoy.yaml
    service-envoy.yaml
    service.py
    start_service.sh

Let's build containers with Dockerfile-frontenvoy & Dockerfile-service files and run them in detached mode:

    $ docker-compose up --build -d
    $ docker-compose ps
              Name                         Command               State                            Ports
    ----------------------------------------------------------------------------------------------------------------------------
    front-proxy_front-envoy_1   /usr/bin/dumb-init -- /bin ...   Up      10000/tcp, 0.0.0.0:8000->80/tcp, 0.0.0.0:8001->8001/tcp
    front-proxy_service1_1      /bin/sh -c /usr/local/bin/ ...   Up      10000/tcp, 80/tcp
    front-proxy_service2_1      /bin/sh -c /usr/local/bin/ ...   Up      10000/tcp, 80/tcp

Docker for Mac uses HyperKit, a lightweight macOS virtualization solution built on top of the Hypervisor.framework.

Currently, there is no docker-machine create driver for HyperKit, so use the virtualbox driver to create local machines. (See the Docker Machine driver for Oracle VirtualBox.)

We can use docker-machine create with the virtualbox driver to create additional local machines.

    $ docker-machine create --driver virtualbox default
    ...
    (default) Creating VirtualBox VM...
    (default) Creating SSH key...
    (default) Starting the VM...
    (default) Check network to re-create if needed...
    (default) Waiting for an IP...
    Waiting for machine to be running, this may take a few minutes...
    Detecting operating system of created instance...
    Waiting for SSH to be available...
    Detecting the provisioner...
    Provisioning with boot2docker...
    Copying certs to the local machine directory...
    Copying certs to the remote machine...
    Setting Docker configuration on the remote daemon...
    Checking connection to Docker...
    Docker is up and running!
    To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env default

    $ docker-machine env default
    export DOCKER_TLS_VERIFY="1"
    export DOCKER_HOST="tcp://192.168.99.100:2376"
    export DOCKER_CERT_PATH="/Users/kihyuckhong/.docker/machine/machines/default"
    export DOCKER_MACHINE_NAME="default"
    # Run this command to configure your shell:
    # eval $(docker-machine env default)

    $ eval $(docker-machine env default)

We can run both HyperKit and Oracle VirtualBox on the same system!

We need to make sure we have the latest VirtualBox correctly installed on our system (either as part of an earlier Toolbox install, or manual install).

Let's test Envoy's routing capabilities by sending a request to both services via the front envoy.

For service1:

    $ docker-machine ip default
    192.168.99.100

    $ curl -v $(docker-machine ip default):8000/service/1
    *   Trying 192.168.99.100...
    * TCP_NODELAY set
    * Connected to 192.168.99.100 (192.168.99.100) port 8000 (#0)
    > GET /service/1 HTTP/1.1
    > Host: 192.168.99.100:8000
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-type: text/html; charset=utf-8
    < content-length: 89
    < server: envoy
    < date: Tue, 25 Dec 2018 09:29:02 GMT
    < x-envoy-upstream-service-time: 9
    <
    Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4
    * Connection #0 to host 192.168.99.100 left intact

For service2:

    $ curl -v $(docker-machine ip default):8000/service/2
    *   Trying 192.168.99.100...
    * TCP_NODELAY set
    * Connected to 192.168.99.100 (192.168.99.100) port 8000 (#0)
    > GET /service/2 HTTP/1.1
    > Host: 192.168.99.100:8000
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-type: text/html; charset=utf-8
    < content-length: 89
    < server: envoy
    < date: Tue, 25 Dec 2018 09:31:50 GMT
    < x-envoy-upstream-service-time: 5
    <
    Hello from behind Envoy (service 2)! hostname: 37d6498ee463 resolvedhostname: 172.18.0.3
    * Connection #0 to host 192.168.99.100 left intact

We can see that each request, while sent to the front envoy, was correctly routed to the respective application.

Now let's scale up our service1 nodes to see the clustering capabilities of envoy:

    $ docker-compose scale service1=3
    WARNING: The scale command is deprecated. Use the up command with the --scale flag instead.
    Starting front-proxy_service1_1 ... done
    Creating front-proxy_service1_2 ... done
    Creating front-proxy_service1_3 ... done
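The front envoy picks up the new containers without any config change because the service1 cluster is of type strict_dns: Envoy resolves the configured name and treats each returned address as an upstream host. My understanding is that Docker's DNS answers the service1 alias with the address of every container of the scaled service. The sketch below illustrates that idea only; `resolve_hosts` is a hypothetical helper, and the `resolver` parameter exists so the lookup can be swapped out when no Docker network is available.

```python
import socket


def resolve_hosts(name, port, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPv4 addresses that `name` resolves to."""
    infos = resolver(name, port, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4 the sockaddr is an (address, port) pair.
    return sorted({info[4][0] for info in infos})
```

Run from a container attached to envoymesh, `resolve_hosts("service1", 80)` would be expected to list one address per service1 container, though that depends on the Docker DNS behavior assumed above.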

Now if we send multiple requests to service1, the front envoy load balances them round-robin across the three service1 containers:

    $ curl -v $(docker-machine ip default):8000/service/1
    *   Trying 192.168.99.100...
    * TCP_NODELAY set
    * Connected to 192.168.99.100 (192.168.99.100) port 8000 (#0)
    > GET /service/1 HTTP/1.1
    > Host: 192.168.99.100:8000
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-type: text/html; charset=utf-8
    < content-length: 89
    < server: envoy
    < date: Tue, 25 Dec 2018 09:42:05 GMT
    < x-envoy-upstream-service-time: 6
    <
    Hello from behind Envoy (service 1)! hostname: 18476f2c5373 resolvedhostname: 172.18.0.6
    * Connection #0 to host 192.168.99.100 left intact

    $ curl -v $(docker-machine ip default):8000/service/1
    *   Trying 192.168.99.100...
    * TCP_NODELAY set
    * Connected to 192.168.99.100 (192.168.99.100) port 8000 (#0)
    > GET /service/1 HTTP/1.1
    > Host: 192.168.99.100:8000
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-type: text/html; charset=utf-8
    < content-length: 89
    < server: envoy
    < date: Tue, 25 Dec 2018 09:43:51 GMT
    < x-envoy-upstream-service-time: 2
    <
    Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4
    * Connection #0 to host 192.168.99.100 left intact

    $ curl -v $(docker-machine ip default):8000/service/1
    *   Trying 192.168.99.100...
    * TCP_NODELAY set
    * Connected to 192.168.99.100 (192.168.99.100) port 8000 (#0)
    > GET /service/1 HTTP/1.1
    > Host: 192.168.99.100:8000
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-type: text/html; charset=utf-8
    < content-length: 89
    < server: envoy
    < date: Tue, 25 Dec 2018 09:44:00 GMT
    < x-envoy-upstream-service-time: 3
    <
    Hello from behind Envoy (service 1)! hostname: 5356b5f172f7 resolvedhostname: 172.18.0.5
    * Connection #0 to host 192.168.99.100 left intact
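The rotation visible in these responses can be mimicked with a few lines of Python. This is a deliberately simplified picture of a round_robin policy (Envoy's own load balancer also deals with weights and host health); the `round_robin` helper is a hypothetical name and the addresses are the three service1 containers from this run.

```python
from itertools import cycle


def round_robin(hosts):
    """Return an endless iterator that hands out hosts in turn."""
    return cycle(hosts)


# Addresses of the three service1 containers seen in this post.
hosts = ["172.18.0.4", "172.18.0.6", "172.18.0.5"]
picker = round_robin(hosts)

# Six consecutive picks walk through the list twice.
first_six = [next(picker) for _ in range(6)]
print(first_six)
```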

In addition to using curl from our host machine, we can also enter the containers themselves and curl from inside them. To enter a container we can use docker-compose exec <service_name> /bin/bash, where the name is the service name defined in docker-compose.yml.

For example, we can enter the front-envoy container and curl the services locally:

    $ docker-compose exec front-envoy /bin/bash
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4
    root@cfb6469dda16:/#
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: 18476f2c5373 resolvedhostname: 172.18.0.6
    root@cfb6469dda16:/#
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: 5356b5f172f7 resolvedhostname: 172.18.0.5
    root@cfb6469dda16:/#
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4
    root@cfb6469dda16:/#
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: 18476f2c5373 resolvedhostname: 172.18.0.6
    root@cfb6469dda16:/#
    root@cfb6469dda16:/# curl localhost:80/service/1
    Hello from behind Envoy (service 1)! hostname: 5356b5f172f7 resolvedhostname: 172.18.0.5
    root@cfb6469dda16:/#
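Rather than eyeballing the hostnames, the distribution can be checked by tallying the response bodies. The following is a small sketch under the assumption that every body follows the "hostname: ... resolvedhostname: ..." format shown above; `tally_backends` is a hypothetical helper and the sample bodies are copied from the session.

```python
import re
from collections import Counter

# Matches the container hostname and its resolved address in a response body.
HOSTNAME_RE = re.compile(r"hostname: (\S+) resolvedhostname: (\S+)")


def tally_backends(bodies):
    """Count how many responses each backend hostname produced."""
    counts = Counter()
    for body in bodies:
        match = HOSTNAME_RE.search(body)
        if match:
            counts[match.group(1)] += 1
    return counts


bodies = [
    "Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4",
    "Hello from behind Envoy (service 1)! hostname: 18476f2c5373 resolvedhostname: 172.18.0.6",
    "Hello from behind Envoy (service 1)! hostname: 5356b5f172f7 resolvedhostname: 172.18.0.5",
    "Hello from behind Envoy (service 1)! hostname: bd416e27da09 resolvedhostname: 172.18.0.4",
    "Hello from behind Envoy (service 1)! hostname: 18476f2c5373 resolvedhostname: 172.18.0.6",
    "Hello from behind Envoy (service 1)! hostname: 5356b5f172f7 resolvedhostname: 172.18.0.5",
]
counts = tally_backends(bodies)
print(counts)
```

An even count per hostname, as in this sample, is what a round-robin policy over three healthy hosts should produce.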

When Envoy runs, it also attaches an admin interface to the configured port. In the example configs, the admin interface is bound to port 8001. We can curl it to gain useful information: /server_info returns information about the Envoy version we are running, and /stats returns statistics. For example, inside the front-envoy container we can get:

    $ docker-compose exec front-envoy /bin/bash
    root@cfb6469dda16:/# curl localhost:8001/server_info
    {
     "version": "7b6d7a2706ac5290e5e87535c23c11def49275db/1.10.0-dev/Clean/RELEASE/BoringSSL",
     "state": "LIVE",
     "command_line_options": {
      "base_id": "0",
      "concurrency": 1,
      "config_path": "/etc/front-envoy.yaml",
      "config_yaml": "",
      "allow_unknown_fields": false,
      "admin_address_path": "",
      "local_address_ip_version": "v4",
      "log_level": "info",
      "component_log_level": "",
      "log_format": "[%Y-%m-%d %T.%e][%t][%l][%n] %v",
      "log_path": "",
      "hot_restart_version": false,
      "service_cluster": "front-proxy",
      "service_node": "",
      "service_zone": "",
      "mode": "Serve",
      "max_stats": "16384",
      "max_obj_name_len": "60",
      "disable_hot_restart": false,
      "enable_mutex_tracing": false,
      "restart_epoch": 0,
      "file_flush_interval": "10s",
      "drain_time": "600s",
      "parent_shutdown_time": "900s"
     },
     "uptime_current_epoch": "1952s",
     "uptime_all_epochs": "1952s"
    }
    root@cfb6469dda16:/#

root@cfb6469dda16:/# curl localhost:8001/stats

cluster.service1.bind_errors: 0

cluster.service1.circuit_breakers.default.cx_open: 0

cluster.service1.circuit_breakers.default.rq_open: 0

cluster.service1.circuit_breakers.default.rq_pending_open: 0

cluster.service1.circuit_breakers.default.rq_retry_open: 0

cluster.service1.circuit_breakers.high.cx_open: 0

cluster.service1.circuit_breakers.high.rq_open: 0

cluster.service1.circuit_breakers.high.rq_pending_open: 0

cluster.service1.circuit_breakers.high.rq_retry_open: 0

cluster.service1.external.upstream_rq_200: 15

cluster.service1.external.upstream_rq_2xx: 15

cluster.service1.external.upstream_rq_completed: 15

cluster.service1.http2.header_overflow: 0

cluster.service1.http2.headers_cb_no_stream: 0

cluster.service1.http2.rx_messaging_error: 0

cluster.service1.http2.rx_reset: 0

cluster.service1.http2.too_many_header_frames: 0

cluster.service1.http2.trailers: 0

cluster.service1.http2.tx_reset: 0

cluster.service1.lb_healthy_panic: 0

cluster.service1.lb_local_cluster_not_ok: 0

cluster.service1.lb_recalculate_zone_structures: 0

cluster.service1.lb_subsets_active: 0

cluster.service1.lb_subsets_created: 0

cluster.service1.lb_subsets_fallback: 0

cluster.service1.lb_subsets_removed: 0

cluster.service1.lb_subsets_selected: 0

cluster.service1.lb_zone_cluster_too_small: 0

cluster.service1.lb_zone_no_capacity_left: 0

cluster.service1.lb_zone_number_differs: 0

cluster.service1.lb_zone_routing_all_directly: 0

cluster.service1.lb_zone_routing_cross_zone: 0

cluster.service1.lb_zone_routing_sampled: 0

cluster.service1.max_host_weight: 1

cluster.service1.membership_change: 2

cluster.service1.membership_healthy: 3

cluster.service1.membership_total: 3

cluster.service1.original_dst_host_invalid: 0

cluster.service1.retry_or_shadow_abandoned: 0

cluster.service1.update_attempt: 378

cluster.service1.update_empty: 0

cluster.service1.update_failure: 0

cluster.service1.update_no_rebuild: 376

cluster.service1.update_success: 378

cluster.service1.upstream_cx_active: 3

cluster.service1.upstream_cx_close_notify: 0

cluster.service1.upstream_cx_connect_attempts_exceeded: 0

cluster.service1.upstream_cx_connect_fail: 0

cluster.service1.upstream_cx_connect_timeout: 0

cluster.service1.upstream_cx_destroy: 0

cluster.service1.upstream_cx_destroy_local: 0

cluster.service1.upstream_cx_destroy_local_with_active_rq: 0

cluster.service1.upstream_cx_destroy_remote: 0

cluster.service1.upstream_cx_destroy_remote_with_active_rq: 0

cluster.service1.upstream_cx_destroy_with_active_rq: 0

cluster.service1.upstream_cx_http1_total: 0

cluster.service1.upstream_cx_http2_total: 3

cluster.service1.upstream_cx_idle_timeout: 0

cluster.service1.upstream_cx_max_requests: 0

cluster.service1.upstream_cx_none_healthy: 0

cluster.service1.upstream_cx_overflow: 0

cluster.service1.upstream_cx_protocol_error: 0

cluster.service1.upstream_cx_rx_bytes_buffered: 405

cluster.service1.upstream_cx_rx_bytes_total: 2505

cluster.service1.upstream_cx_total: 3

cluster.service1.upstream_cx_tx_bytes_buffered: 0

cluster.service1.upstream_cx_tx_bytes_total: 1301

cluster.service1.upstream_flow_control_backed_up_total: 0

cluster.service1.upstream_flow_control_drained_total: 0

cluster.service1.upstream_flow_control_paused_reading_total: 0

cluster.service1.upstream_flow_control_resumed_reading_total: 0

cluster.service1.upstream_rq_200: 15

cluster.service1.upstream_rq_2xx: 15

cluster.service1.upstream_rq_active: 0

cluster.service1.upstream_rq_cancelled: 0

cluster.service1.upstream_rq_completed: 15

cluster.service1.upstream_rq_maintenance_mode: 0

cluster.service1.upstream_rq_pending_active: 0

cluster.service1.upstream_rq_pending_failure_eject: 0

cluster.service1.upstream_rq_pending_overflow: 0

cluster.service1.upstream_rq_pending_total: 3

cluster.service1.upstream_rq_per_try_timeout: 0

cluster.service1.upstream_rq_retry: 0

cluster.service1.upstream_rq_retry_overflow: 0

cluster.service1.upstream_rq_retry_success: 0

cluster.service1.upstream_rq_rx_reset: 0

cluster.service1.upstream_rq_timeout: 0

cluster.service1.upstream_rq_total: 15

cluster.service1.upstream_rq_tx_reset: 0

cluster.service1.version: 0

cluster.service2.bind_errors: 0

cluster.service2.circuit_breakers.default.cx_open: 0

cluster.service2.circuit_breakers.default.rq_open: 0

cluster.service2.circuit_breakers.default.rq_pending_open: 0

cluster.service2.circuit_breakers.default.rq_retry_open: 0

cluster.service2.circuit_breakers.high.cx_open: 0

cluster.service2.circuit_breakers.high.rq_open: 0

cluster.service2.circuit_breakers.high.rq_pending_open: 0

cluster.service2.circuit_breakers.high.rq_retry_open: 0

cluster.service2.external.upstream_rq_200: 1

cluster.service2.external.upstream_rq_2xx: 1

cluster.service2.external.upstream_rq_completed: 1

cluster.service2.http2.header_overflow: 0

cluster.service2.http2.headers_cb_no_stream: 0

cluster.service2.http2.rx_messaging_error: 0

cluster.service2.http2.rx_reset: 0

cluster.service2.http2.too_many_header_frames: 0

cluster.service2.http2.trailers: 0

cluster.service2.http2.tx_reset: 0

cluster.service2.lb_healthy_panic: 0

cluster.service2.lb_local_cluster_not_ok: 0

cluster.service2.lb_recalculate_zone_structures: 0

cluster.service2.lb_subsets_active: 0

cluster.service2.lb_subsets_created: 0

cluster.service2.lb_subsets_fallback: 0

cluster.service2.lb_subsets_removed: 0

cluster.service2.lb_subsets_selected: 0

cluster.service2.lb_zone_cluster_too_small: 0

cluster.service2.lb_zone_no_capacity_left: 0

cluster.service2.lb_zone_number_differs: 0

cluster.service2.lb_zone_routing_all_directly: 0

cluster.service2.lb_zone_routing_cross_zone: 0

cluster.service2.lb_zone_routing_sampled: 0

cluster.service2.max_host_weight: 1

cluster.service2.membership_change: 1

cluster.service2.membership_healthy: 1

cluster.service2.membership_total: 1

cluster.service2.original_dst_host_invalid: 0

cluster.service2.retry_or_shadow_abandoned: 0

cluster.service2.update_attempt: 348

cluster.service2.update_empty: 0

cluster.service2.update_failure: 0

cluster.service2.update_no_rebuild: 347

cluster.service2.update_success: 348

cluster.service2.upstream_cx_active: 1

cluster.service2.upstream_cx_close_notify: 0

cluster.service2.upstream_cx_connect_attempts_exceeded: 0

cluster.service2.upstream_cx_connect_fail: 0

cluster.service2.upstream_cx_connect_timeout: 0

cluster.service2.upstream_cx_destroy: 0

cluster.service2.upstream_cx_destroy_local: 0

cluster.service2.upstream_cx_destroy_local_with_active_rq: 0

cluster.service2.upstream_cx_destroy_remote: 0

cluster.service2.upstream_cx_destroy_remote_with_active_rq: 0

cluster.service2.upstream_cx_destroy_with_active_rq: 0

cluster.service2.upstream_cx_http1_total: 0

cluster.service2.upstream_cx_http2_total: 1

cluster.service2.upstream_cx_idle_timeout: 0

cluster.service2.upstream_cx_max_requests: 0

cluster.service2.upstream_cx_none_healthy: 0

cluster.service2.upstream_cx_overflow: 0

cluster.service2.upstream_cx_protocol_error: 0

cluster.service2.upstream_cx_rx_bytes_buffered: 197

cluster.service2.upstream_cx_rx_bytes_total: 234

cluster.service2.upstream_cx_total: 1

cluster.service2.upstream_cx_tx_bytes_buffered: 0

cluster.service2.upstream_cx_tx_bytes_total: 204

cluster.service2.upstream_flow_control_backed_up_total: 0

cluster.service2.upstream_flow_control_drained_total: 0

cluster.service2.upstream_flow_control_paused_reading_total: 0

cluster.service2.upstream_flow_control_resumed_reading_total: 0

cluster.service2.upstream_rq_200: 1

cluster.service2.upstream_rq_2xx: 1

cluster.service2.upstream_rq_active: 0

cluster.service2.upstream_rq_cancelled: 0

cluster.service2.upstream_rq_completed: 1

cluster.service2.upstream_rq_maintenance_mode: 0

cluster.service2.upstream_rq_pending_active: 0

cluster.service2.upstream_rq_pending_failure_eject: 0

cluster.service2.upstream_rq_pending_overflow: 0

cluster.service2.upstream_rq_pending_total: 1

cluster.service2.upstream_rq_per_try_timeout: 0

cluster.service2.upstream_rq_retry: 0

cluster.service2.upstream_rq_retry_overflow: 0

cluster.service2.upstream_rq_retry_success: 0

cluster.service2.upstream_rq_rx_reset: 0

cluster.service2.upstream_rq_timeout: 0

cluster.service2.upstream_rq_total: 1

cluster.service2.upstream_rq_tx_reset: 0

cluster.service2.version: 0

cluster_manager.active_clusters: 2

cluster_manager.cluster_added: 2

cluster_manager.cluster_modified: 0

cluster_manager.cluster_removed: 0

cluster_manager.cluster_updated: 1

cluster_manager.cluster_updated_via_merge: 0

cluster_manager.update_merge_cancelled: 0

cluster_manager.update_out_of_merge_window: 0

cluster_manager.warming_clusters: 0

filesystem.flushed_by_timer: 2

filesystem.reopen_failed: 0

filesystem.write_buffered: 1

filesystem.write_completed: 1

filesystem.write_total_buffered: 0

http.admin.downstream_cx_active: 1

http.admin.downstream_cx_delayed_close_timeout: 0

http.admin.downstream_cx_destroy: 1

http.admin.downstream_cx_destroy_active_rq: 0

http.admin.downstream_cx_destroy_local: 0

http.admin.downstream_cx_destroy_local_active_rq: 0

http.admin.downstream_cx_destroy_remote: 1

http.admin.downstream_cx_destroy_remote_active_rq: 0

http.admin.downstream_cx_drain_close: 0

http.admin.downstream_cx_http1_active: 1

http.admin.downstream_cx_http1_total: 2

http.admin.downstream_cx_http2_active: 0

http.admin.downstream_cx_http2_total: 0

http.admin.downstream_cx_idle_timeout: 0

http.admin.downstream_cx_overload_disable_keepalive: 0

http.admin.downstream_cx_protocol_error: 0

http.admin.downstream_cx_rx_bytes_buffered: 83

http.admin.downstream_cx_rx_bytes_total: 172

http.admin.downstream_cx_ssl_active: 0

http.admin.downstream_cx_ssl_total: 0

http.admin.downstream_cx_total: 2

http.admin.downstream_cx_tx_bytes_buffered: 0

http.admin.downstream_cx_tx_bytes_total: 1098

http.admin.downstream_cx_upgrades_active: 0

http.admin.downstream_cx_upgrades_total: 0

http.admin.downstream_flow_control_paused_reading_total: 0

http.admin.downstream_flow_control_resumed_reading_total: 0

http.admin.downstream_rq_1xx: 0

http.admin.downstream_rq_2xx: 1

http.admin.downstream_rq_3xx: 0

http.admin.downstream_rq_4xx: 0

http.admin.downstream_rq_5xx: 0

http.admin.downstream_rq_active: 1

http.admin.downstream_rq_completed: 1

http.admin.downstream_rq_http1_total: 2

http.admin.downstream_rq_http2_total: 0

http.admin.downstream_rq_idle_timeout: 0

http.admin.downstream_rq_non_relative_path: 0

http.admin.downstream_rq_overload_close: 0

http.admin.downstream_rq_response_before_rq_complete: 0

http.admin.downstream_rq_rx_reset: 0

http.admin.downstream_rq_timeout: 0

http.admin.downstream_rq_too_large: 0

http.admin.downstream_rq_total: 2

http.admin.downstream_rq_tx_reset: 0

http.admin.downstream_rq_ws_on_non_ws_route: 0

http.admin.rs_too_large: 0

http.async-client.no_cluster: 0

http.async-client.no_route: 0

http.async-client.rq_direct_response: 0

http.async-client.rq_redirect: 0

http.async-client.rq_total: 0

http.ingress_http.downstream_cx_active: 0

http.ingress_http.downstream_cx_delayed_close_timeout: 0

http.ingress_http.downstream_cx_destroy: 16

http.ingress_http.downstream_cx_destroy_active_rq: 0

http.ingress_http.downstream_cx_destroy_local: 0

http.ingress_http.downstream_cx_destroy_local_active_rq: 0

http.ingress_http.downstream_cx_destroy_remote: 16

http.ingress_http.downstream_cx_destroy_remote_active_rq: 0

http.ingress_http.downstream_cx_drain_close: 0

http.ingress_http.downstream_cx_http1_active: 0

http.ingress_http.downstream_cx_http1_total: 16

http.ingress_http.downstream_cx_http2_active: 0

http.ingress_http.downstream_cx_http2_total: 0

http.ingress_http.downstream_cx_idle_timeout: 0

http.ingress_http.downstream_cx_overload_disable_keepalive: 0

http.ingress_http.downstream_cx_protocol_error: 0

http.ingress_http.downstream_cx_rx_bytes_buffered: 0

http.ingress_http.downstream_cx_rx_bytes_total: 1412

http.ingress_http.downstream_cx_ssl_active: 0

http.ingress_http.downstream_cx_ssl_total: 0

http.ingress_http.downstream_cx_total: 16

http.ingress_http.downstream_cx_tx_bytes_buffered: 0

http.ingress_http.downstream_cx_tx_bytes_total: 4065

http.ingress_http.downstream_cx_upgrades_active: 0

http.ingress_http.downstream_cx_upgrades_total: 0

http.ingress_http.downstream_flow_control_paused_reading_total: 0

http.ingress_http.downstream_flow_control_resumed_reading_total: 0

http.ingress_http.downstream_rq_1xx: 0

http.ingress_http.downstream_rq_2xx: 16

http.ingress_http.downstream_rq_3xx: 0

http.ingress_http.downstream_rq_4xx: 0

http.ingress_http.downstream_rq_5xx: 0

http.ingress_http.downstream_rq_active: 0

http.ingress_http.downstream_rq_completed: 16

http.ingress_http.downstream_rq_http1_total: 16

http.ingress_http.downstream_rq_http2_total: 0

http.ingress_http.downstream_rq_idle_timeout: 0

http.ingress_http.downstream_rq_non_relative_path: 0

http.ingress_http.downstream_rq_overload_close: 0

http.ingress_http.downstream_rq_response_before_rq_complete: 0

http.ingress_http.downstream_rq_rx_reset: 0

http.ingress_http.downstream_rq_timeout: 0

http.ingress_http.downstream_rq_too_large: 0

http.ingress_http.downstream_rq_total: 16

http.ingress_http.downstream_rq_tx_reset: 0

http.ingress_http.downstream_rq_ws_on_non_ws_route: 0

http.ingress_http.no_cluster: 0

http.ingress_http.no_route: 0

http.ingress_http.rq_direct_response: 0

http.ingress_http.rq_redirect: 0

http.ingress_http.rq_total: 16

http.ingress_http.rs_too_large: 0

http.ingress_http.tracing.client_enabled: 0

http.ingress_http.tracing.health_check: 0

http.ingress_http.tracing.not_traceable: 0

http.ingress_http.tracing.random_sampling: 0

http.ingress_http.tracing.service_forced: 0

listener.0.0.0.0_80.downstream_cx_active: 0

listener.0.0.0.0_80.downstream_cx_destroy: 16

listener.0.0.0.0_80.downstream_cx_total: 16

listener.0.0.0.0_80.downstream_pre_cx_active: 0

listener.0.0.0.0_80.downstream_pre_cx_timeout: 0

listener.0.0.0.0_80.http.ingress_http.downstream_rq_1xx: 0

listener.0.0.0.0_80.http.ingress_http.downstream_rq_2xx: 16

listener.0.0.0.0_80.http.ingress_http.downstream_rq_3xx: 0

listener.0.0.0.0_80.http.ingress_http.downstream_rq_4xx: 0

listener.0.0.0.0_80.http.ingress_http.downstream_rq_5xx: 0

listener.0.0.0.0_80.http.ingress_http.downstream_rq_completed: 16

listener.0.0.0.0_80.no_filter_chain_match: 0

listener.admin.downstream_cx_active: 1

listener.admin.downstream_cx_destroy: 1

listener.admin.downstream_cx_total: 2

listener.admin.downstream_pre_cx_active: 0

listener.admin.downstream_pre_cx_timeout: 0

listener.admin.http.admin.downstream_rq_1xx: 0

listener.admin.http.admin.downstream_rq_2xx: 1

listener.admin.http.admin.downstream_rq_3xx: 0

listener.admin.http.admin.downstream_rq_4xx: 0

listener.admin.http.admin.downstream_rq_5xx: 0

listener.admin.http.admin.downstream_rq_completed: 1

listener.admin.no_filter_chain_match: 0

listener_manager.listener_added: 1

listener_manager.listener_create_failure: 0

listener_manager.listener_create_success: 1

listener_manager.listener_modified: 0

listener_manager.listener_removed: 0

listener_manager.total_listeners_active: 1

listener_manager.total_listeners_draining: 0

listener_manager.total_listeners_warming: 0

runtime.admin_overrides_active: 0

runtime.load_error: 0

runtime.load_success: 0

runtime.num_keys: 0

runtime.override_dir_exists: 0

runtime.override_dir_not_exists: 0

server.concurrency: 1

server.days_until_first_cert_expiring: 2147483647

server.hot_restart_epoch: 0

server.live: 1

server.memory_allocated: 3588744

server.memory_heap_size: 4194304

server.parent_connections: 0

server.total_connections: 0

server.uptime: 1975

server.version: 8088954

server.watchdog_mega_miss: 0

server.watchdog_miss: 8

stats.overflow: 0

...

We see we can get the number of members of upstream clusters, number of requests fulfilled by them, information about http ingress, and other useful stats.
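Since /stats returns plain "name: value" lines, it is easy to load into a dictionary for quick checks. The sketch below assumes that line format and keeps only integer-valued counters and gauges; `parse_stats` is a hypothetical helper, and the sample text is a handful of lines taken from the output above.

```python
def parse_stats(text):
    """Parse 'name: value' lines into a dict, keeping integer values only."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(": ")
        if not sep:
            continue  # blank lines, '...', or anything without a 'name: value' shape
        try:
            stats[name.strip()] = int(value)
        except ValueError:
            continue  # skip values that are not plain integers
    return stats


sample = """\
cluster.service1.upstream_rq_total: 15
cluster.service2.upstream_rq_total: 1
cluster.service2.membership_healthy: 1
http.ingress_http.downstream_rq_total: 16
...
"""
stats = parse_stats(sample)

# Requests seen at the listener should equal the sum sent to both clusters.
upstream_total = (
    stats["cluster.service1.upstream_rq_total"]
    + stats["cluster.service2.upstream_rq_total"]
)
print(upstream_total, stats["http.ingress_http.downstream_rq_total"])
```

In this run the 15 requests to service1 and the single request to service2 add up to the 16 requests counted on the ingress listener.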

Once we're done, let's stop/remove the containers as well as any networks that were created:

$ docker-compose down


- Docker & Kubernetes : Horizontal pod autoscaler on minikube

- Docker & Kubernetes : From a monolithic app to micro services on GCP Kubernetes

- Docker & Kubernetes : Rolling updates

- Docker & Kubernetes : Deployments to GKE (Rolling update, Canary and Blue-green deployments)

- Docker & Kubernetes : Slack Chat Bot with NodeJS on GCP Kubernetes

- Docker & Kubernetes : Continuous Delivery with Jenkins Multibranch Pipeline for Dev, Canary, and Production Environments on GCP Kubernetes

- Docker & Kubernetes : NodePort vs LoadBalancer vs Ingress

- Docker & Kubernetes : MongoDB / MongoExpress on Minikube

- Docker & Kubernetes : Load Testing with Locust on GCP Kubernetes

- Docker & Kubernetes : MongoDB with StatefulSets on GCP Kubernetes Engine

- Docker & Kubernetes : Nginx Ingress Controller on Minikube

- Docker & Kubernetes : Setting up Ingress with NGINX Controller on Minikube (Mac)

- Docker & Kubernetes : Nginx Ingress Controller for Dashboard service on Minikube

- Docker & Kubernetes : Nginx Ingress Controller on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Ingress with AWS ALB Ingress Controller in EKS

- Docker & Kubernetes : Setting up a private cluster on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Namespaces (default, kube-public, kube-system) and switching namespaces (kubens)

- Docker & Kubernetes : StatefulSets on minikube

- Docker & Kubernetes : RBAC

- Docker & Kubernetes Service Account, RBAC, and IAM

- Docker & Kubernetes - Kubernetes Service Account, RBAC, IAM with EKS ALB, Part 1

- Docker & Kubernetes : Helm Chart

- Docker & Kubernetes : My first Helm deploy

- Docker & Kubernetes : Readiness and Liveness Probes

- Docker & Kubernetes : Helm chart repository with Github pages

- Docker & Kubernetes : Deploying WordPress and MariaDB with Ingress to Minikube using Helm Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 2 Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 3 Chart

- Docker & Kubernetes : Helm Chart for Node/Express and MySQL with Ingress

- Docker & Kubernetes : Deploy Prometheus and Grafana using Helm and Prometheus Operator - Monitoring Kubernetes node resources out of the box

- Docker & Kubernetes : Deploy Prometheus and Grafana using kube-prometheus-stack Helm Chart

- Docker & Kubernetes : Istio (service mesh) sidecar proxy on GCP Kubernetes

- Docker & Kubernetes : Istio on EKS

- Docker & Kubernetes : Istio on Minikube with AWS EC2 for Bookinfo Application

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part I)

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part II - Prometheus, Grafana, pin a service, split traffic, and inject faults)

- Docker & Kubernetes : Helm Package Manager with MySQL on GCP Kubernetes Engine

- Docker & Kubernetes : Deploying Memcached on Kubernetes Engine

- Docker & Kubernetes : EKS Control Plane (API server) Metrics with Prometheus

- Docker & Kubernetes : Spinnaker on EKS with Halyard

- Docker & Kubernetes : Continuous Delivery Pipelines with Spinnaker and Kubernetes Engine

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-dind (docker-in-docker)

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-kind (k8s-in-docker)

- Docker & Kubernetes : nodeSelector, nodeAffinity, taints/tolerations, pod affinity and anti-affinity - Assigning Pods to Nodes

- Docker & Kubernetes : Jenkins-X on EKS

- Docker & Kubernetes : ArgoCD App of Apps with Helm on Kubernetes

- Docker & Kubernetes : ArgoCD on Kubernetes cluster

- Docker & Kubernetes : GitOps with ArgoCD for Continuous Delivery to Kubernetes clusters (minikube) - guestbook

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization