Docker & Kubernetes : Persistent Volumes - Dynamic volume provisioning

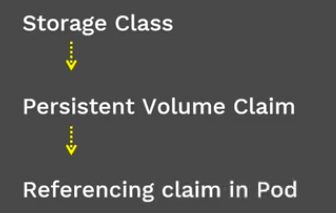

The PersistentVolume subsystem provides an API for users and administrators that abstracts details of how storage is provided from how it is consumed.

To do this, Kubernetes introduces two API resources: PersistentVolume (PV) and PersistentVolumeClaim (PVC).

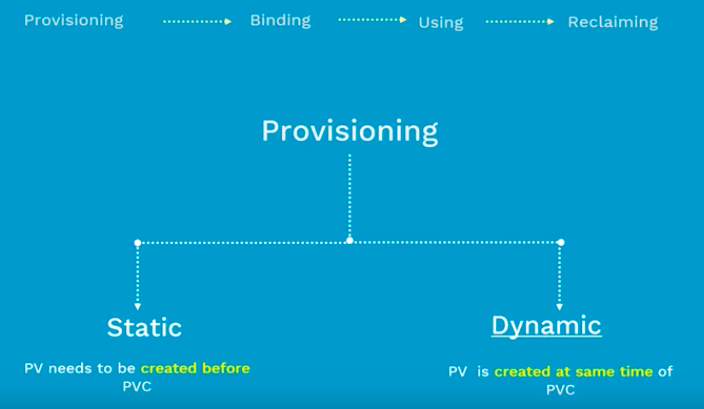

There are two ways PVs may be provisioned: statically or dynamically.

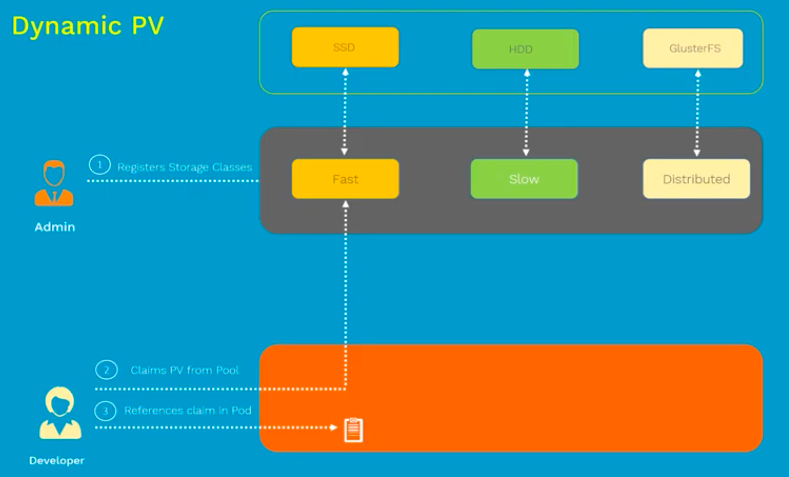

When none of the static PVs the administrator created matches a user's PersistentVolumeClaim, the cluster may try to dynamically provision a volume specially for the PVC. This provisioning is based on StorageClasses: the PVC must request a storage class and the administrator must have created and configured that class in order for dynamic provisioning to occur.
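For contrast with the dynamic case, here is a minimal sketch of what a statically provisioned PV could look like. The PV name, the disk name, and the size are hypothetical, and the sketch assumes the administrator has already created the underlying GCE disk by hand:

```yaml
# Illustrative static PV; all names and sizes here are assumptions.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: static-pv-example        # hypothetical name
spec:
  capacity:
    storage: 8Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""           # no class: binds only to claims that request no class
  gcePersistentDisk:
    pdName: my-existing-disk     # hypothetical disk, created beforehand
    fsType: ext4
```

With dynamic provisioning, nobody writes this object by hand: the provisioner named in the StorageClass creates both the disk and the PV when a matching claim appears.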

Pictures from Dynamic Provisioning in Kubernetes | Kubernetes Concepts & Demos | Kubernetes Tutorial

To enable dynamic provisioning, a cluster administrator needs to pre-create one or more StorageClass objects for users. StorageClass objects define which provisioner should be used and what parameters should be passed to that provisioner when dynamic provisioning is invoked. The following manifest creates a storage class "slow" which provisions standard disk-like persistent disks.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: slow
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-standard

The name of a StorageClass object is significant, and is how users can request a particular class. Administrators set the name and other parameters of a class when first creating StorageClass objects, and the objects cannot be updated once they are created.

Note that each StorageClass has a provisioner that determines which volume plugin is used for provisioning PVs.

The following manifest (storage-class.yaml) creates a storage class "fast" which provisions SSD-like persistent disks:

    # storage-class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: fast
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-ssd

Note that we're using the GCEPersistentDisk provisioner (kubernetes.io/gce-pd). To use AWS EBS instead, the provisioner would be kubernetes.io/aws-ebs. For the "type" parameter, we're using pd-ssd; the default is pd-standard.
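As a rough sketch of the AWS variant, the same idea with the in-tree EBS provisioner might look like this. The class name is hypothetical, and gp2 is just one of the EBS volume types that could be chosen:

```yaml
# Illustrative AWS EBS equivalent of the "fast" class; not used in this walkthrough.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-aws                 # hypothetical name
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2                      # general-purpose SSD
```

On newer clusters the CSI drivers (pd.csi.storage.gke.io on GKE, ebs.csi.aws.com on AWS) typically take the place of these in-tree provisioner names, so check which provisioner your cluster actually runs.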

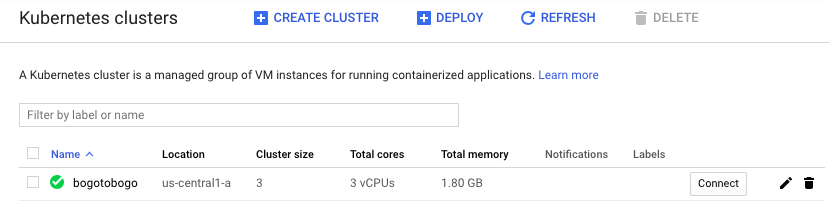

GKE:

Create the StorageClass:

    $ gcloud config set compute/zone us-central1-a
    Updated property [compute/zone].
    $ gcloud container clusters get-credentials bogotobogo
    Fetching cluster endpoint and auth data.
    kubeconfig entry generated for bogotobogo.
    $ kubectl create -f storage-class.yaml
    storageclass.storage.k8s.io/fast created
    $ kubectl get storageclass
    NAME                 PROVISIONER            AGE
    fast                 kubernetes.io/gce-pd   10m
    standard (default)   kubernetes.io/gce-pd   20m
    $ kubectl describe storageclass fast
    Name:                  fast
    IsDefaultClass:        No
    Annotations:           <none>
    Provisioner:           kubernetes.io/gce-pd
    Parameters:            type=pd-ssd
    AllowVolumeExpansion:  <unset>
    MountOptions:          <none>
    ReclaimPolicy:         Delete
    VolumeBindingMode:     Immediate
    Events:                <none>
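The describe output shows the defaults our class picked up: ReclaimPolicy Delete, VolumeBindingMode Immediate, and AllowVolumeExpansion unset. A sketch of a class that sets those fields explicitly could look like the following. The class name is hypothetical, and the values are only one possible choice:

```yaml
# Illustrative variant of "fast" with the optional fields spelled out.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-retain                        # hypothetical name
provisioner: kubernetes.io/gce-pd
parameters:
  type: pd-ssd
reclaimPolicy: Retain                      # keep the PV and disk after the claim is deleted
volumeBindingMode: WaitForFirstConsumer    # provision only once a pod uses the claim
allowVolumeExpansion: true                 # allow claims of this class to be resized
```

With Retain, deleting a claim leaves the disk in place, so cleanup of the underlying storage becomes a manual step.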

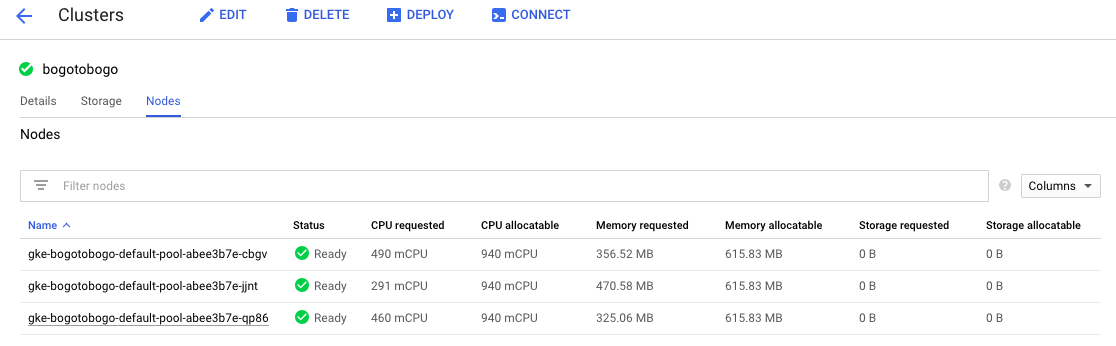

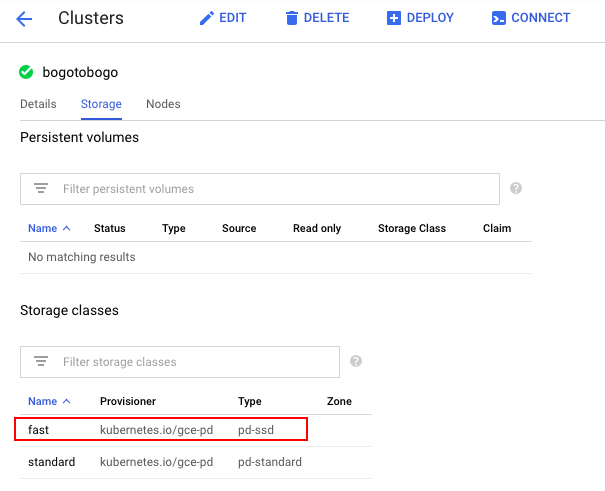

We can see the StorageClass just created from the Console as well:

Pods access storage by using the claim as a volume. Claims must exist in the same namespace as the pod using the claim. The cluster finds the claim in the pod’s namespace and uses it to get the PersistentVolume backing the claim. The volume is then mounted to the host and into the pod.

Here is the PVC configuration file, pvc-1.yaml:

    # pvc-1.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: myclaim-1
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 8Gi
      storageClassName: fast

Also, almost the same but with a bigger request, 16Gi, pvc-2.yaml:

    # pvc-2.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: myclaim-2
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 16Gi
      storageClassName: fast
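Both claims name the "fast" class explicitly. As a sketch of the alternative, a claim that leaves storageClassName out would normally be served by the cluster's default class (the one listed as "standard (default)" earlier), assuming the cluster has default-class assignment enabled. The claim name here is hypothetical:

```yaml
# Illustrative claim that relies on the cluster's default StorageClass.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: myclaim-default          # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi
  # storageClassName is omitted on purpose, so the default class applies
```

Setting storageClassName to an empty string ("") is different from omitting it: that requests a PV with no class and turns dynamic provisioning off for the claim.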

Let's create a PVC:

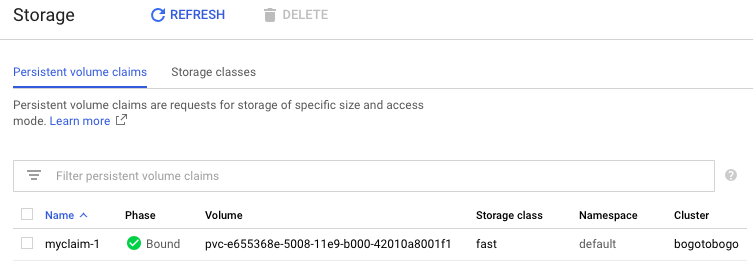

    $ kubectl create -f pvc-1.yaml
    persistentvolumeclaim/myclaim-1 created
    $ kubectl get pvc
    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    myclaim-1   Bound    pvc-e655368e-5008-11e9-b000-42010a8001f1   8Gi        RWO            fast           1m

We can create another PVC:

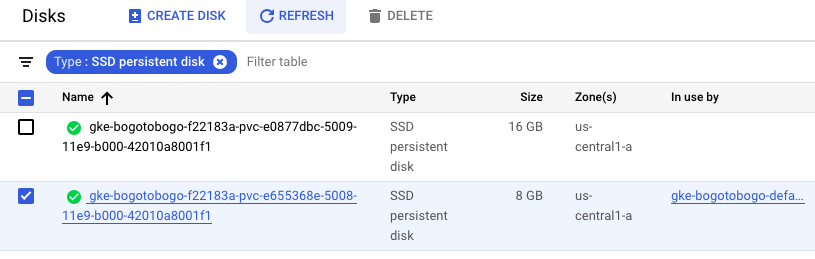

    $ kubectl create -f pvc-2.yaml
    persistentvolumeclaim/myclaim-2 created
    $ kubectl get pvc
    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    myclaim-1   Bound    pvc-e655368e-5008-11e9-b000-42010a8001f1   8Gi        RWO            fast           7m
    myclaim-2   Bound    pvc-e0877dbc-5009-11e9-b000-42010a8001f1   16Gi       RWO            fast           6s

Now we have our storage reserved, so let's use it from a pod. As mentioned earlier, the pod references the claim as a volume, and the claim must exist in the same namespace as the pod.

Here is our pod definition (nginx-pod.yaml):

    # nginx-pod.yaml
    kind: Pod
    apiVersion: v1
    metadata:
      name: pv-pod
    spec:
      containers:
        - name: pv-test-container
          image: nginx
          volumeMounts:
            - mountPath: "/test-pd"
              name: my-test-volume
      volumes:
        - name: my-test-volume
          persistentVolumeClaim:
            claimName: myclaim-1

Let's create the pod:

    $ kubectl create -f nginx-pod.yaml
    pod/pv-pod created
    $ kubectl get po -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP          NODE                                        NOMINATED NODE
    pv-pod   1/1     Running   0          35s   10.40.1.5   gke-bogotobogo-default-pool-abee3b7e-jjnt   <none>

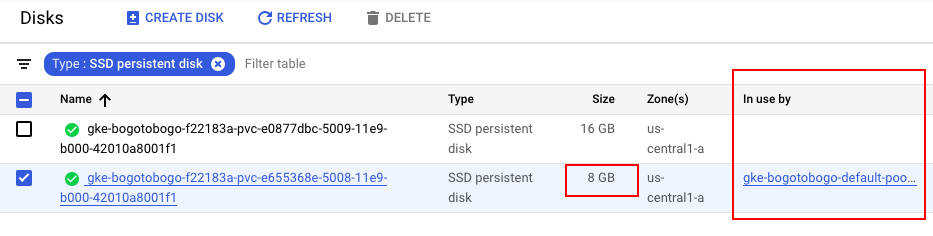

We can see the disk space (8GB) is being used now:

Now we need to verify that the data written to the volume does not go away even after the pod is gone.

Let's go into the pod and write a file:

    $ kubectl get pod
    NAME     READY   STATUS    RESTARTS   AGE
    pv-pod   1/1     Running   0          13m
    $ kubectl exec -it pv-pod -- /bin/sh
    # ls
    bin  boot  dev  etc  home  lib  lib64  media  mnt  opt  proc  root  run  sbin  srv  sys  test-pd  tmp  usr  var
    # cd /test-pd
    # echo "I was here" > i_was_here.txt
    # ls
    i_was_here.txt  lost+found
    # exit
    $

Delete the pod and recreate a new pod with the same configuration:

    $ kubectl delete -f nginx-pod.yaml
    pod "pv-pod" deleted
    $ kubectl get pod
    No resources found.
    $ kubectl create -f nginx-pod.yaml
    pod/pv-pod created
    $ kubectl get po -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP          NODE                                        NOMINATED NODE
    pv-pod   1/1     Running   0          50s   10.40.1.6   gke-bogotobogo-default-pool-abee3b7e-jjnt   <none>

Because the new pod uses the same claim, and therefore the same 8Gi disk, we expect the file we wrote earlier to still be there. Let's check:

    $ kubectl exec pv-pod -- df /test-pd
    Filesystem     1K-blocks   Used  Available  Use%  Mounted on
    /dev/sdb         8191416  36856    8138176    1%  /test-pd
    $ kubectl exec pv-pod -- ls /test-pd
    i_was_here.txt
    lost+found
    $ kubectl exec pv-pod -- cat /test-pd/i_was_here.txt
    I was here

Yes, the file is there. Our PersistentVolumes are working great!
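The second claim, myclaim-2, was created but never mounted. As a sketch only, a pod could mount both claims side by side like this. The pod name, the second volume name, and the second mount path are hypothetical:

```yaml
# Illustrative pod that mounts both claims; not part of the walkthrough above.
kind: Pod
apiVersion: v1
metadata:
  name: pv-pod-two-claims        # hypothetical name
spec:
  containers:
    - name: pv-test-container
      image: nginx
      volumeMounts:
        - mountPath: "/test-pd"
          name: my-test-volume
        - mountPath: "/test-pd-2"      # hypothetical mount path
          name: my-second-volume
  volumes:
    - name: my-test-volume
      persistentVolumeClaim:
        claimName: myclaim-1
    - name: my-second-volume
      persistentVolumeClaim:
        claimName: myclaim-2
```

Both claims are ReadWriteOnce, which restricts each volume to a single node. That is fine here because one pod always runs on one node.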

We need to delete the objects we created:

    $ kubectl delete -f nginx-pod.yaml
    pod "pv-pod" deleted
    $ kubectl delete -f pvc-1.yaml
    persistentvolumeclaim "myclaim-1" deleted
    $ kubectl delete -f pvc-2.yaml
    persistentvolumeclaim "myclaim-2" deleted
    $ kubectl delete -f storage-class.yaml
    storageclass.storage.k8s.io "fast" deleted


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization