Docker - ELK 7.6: Elasticsearch


Elasticsearch is available as Docker images. The images use centos:7 as the base image.

A list of all published Docker images and tags is available at www.docker.elastic.co. The source files are in https://github.com/elastic/elasticsearch/blob/7.6/distribution/docker.

Issue a docker pull command against the Elastic Docker registry:

$ docker pull docker.elastic.co/elasticsearch/elasticsearch:7.6.2

To start a single-node Elasticsearch cluster for development or testing, we need to specify single-node discovery (by setting discovery.type to single-node). With single-node discovery, the node elects itself as master and does not join a cluster with any other node.

$ docker run -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:7.6.2

By default, Elasticsearch uses port 9200 for HTTP (REST API) requests and port 9300 for communication between nodes within the cluster.

To see if it works, simply issue the following:

$ curl -XGET 'localhost:9200'

{

"name" : "caa1097bc4af",

"cluster_name" : "docker-cluster",

"cluster_uuid" : "WcBnCZzNS_WR2_0J5H1cdg",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "aa751e09be0a5072e8570670309b1f12348f023b",

"build_date" : "2020-02-29T00:15:25.529771Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

To check the cluster health, we will be using the cat API:

$ curl 'localhost:9200/_cat/health?v'
epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1585433442 22:10:42 docker-cluster green 1 1 0 0 0 0 0 0 - 100.0%

We can also get a list of nodes in our cluster as follows:

$ curl 'localhost:9200/_cat/nodes?v'
ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
172.17.0.2 13 96 1 0.01 0.01 0.00 dilm * caa1097bc4af

Here, we can see the single node currently in our cluster, "caa1097bc4af"; the * in the master column shows that it is the elected master.
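Because the cat APIs print whitespace-separated columns, their ?v output is easy to turn into records in a script. The sketch below is a minimal, hypothetical helper (parse_cat_table is our own name, not part of any Elasticsearch client). It assumes that no cell contains spaces, which holds for the health and nodes output above but is not guaranteed for every cat endpoint; for programmatic use, the format=json parameter shown later in this article is the more robust choice.

```python
def parse_cat_table(text):
    """Parse verbose (?v) _cat output into a list of dicts keyed by column header.

    Assumes whitespace-separated cells that contain no spaces themselves.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    headers = lines[0].split()
    # zip pairs each header with the cell in the same position.
    return [dict(zip(headers, line.split())) for line in lines[1:]]


if __name__ == "__main__":
    # Output of `curl 'localhost:9200/_cat/nodes?v'` from above.
    sample = (
        "ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name\n"
        "172.17.0.2 13 96 1 0.01 0.01 0.00 dilm * caa1097bc4af\n"
    )
    for node in parse_cat_table(sample):
        print(node["name"], node["node.role"], node["master"])  # caa1097bc4af dilm *
```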

Adding data to Elasticsearch is called indexing, because when we feed data into Elasticsearch, it is placed into Apache Lucene indexes, which are used to store and retrieve it.

The easiest and most familiar layout clones what we would expect from a relational database. We can (very roughly) think of an index like a database:

- MySQL => Databases => Tables => Columns/Rows

- Elasticsearch => Indices => Types => Documents with Properties

| RDBMS (MySQL) | Elasticsearch |
|---|---|
| Databases | Indices |
| Tables | Types |
| Rows | Documents |
| Columns | Fields (Properties of Documents) |
| Schema | Mapping |

In Elasticsearch, the term document has a specific meaning. It refers to the top-level, or root object that is serialized into JSON and stored in Elasticsearch under a unique ID.

A document consists not only of its data but also of metadata (information about the document). The three required metadata elements are as follows:

- _index: where the document lives

- _type: the class of object that the document represents

- _id: the unique identifier for the document

So, a request URL has the following components:

- Index

An index is the equivalent of a database in a relational database. The index is the top-most level, found at http://localhost:9200/<index>.

- Types

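Types are objects that are contained within indexes. They are like tables. Being a child of the index, they can be found at http://localhost:9200/<index>/<type>. Note, however, that mapping types are deprecated in Elasticsearch 7.x: each index has a single type, and requests use the _doc endpoint in its place (for example, http://localhost:9200/<index>/_doc/<id>), as the examples in this article do.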

- ID

To index our first JSON object, we make a PUT request to the REST API, to a URL made up of the index name, type name, and ID:

http://localhost:9200/<index>/<type>/[<id>]

Index and type are required, while the ID is optional. We can use either the POST or the PUT method to add data. If we don't specify an ID, Elasticsearch generates one for us, so without an ID we must use POST instead of PUT.
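The PUT-versus-POST rule can be captured in a small helper. This is a hypothetical sketch (index_request is our own name): it only builds the HTTP method and path, uses the 7.x _doc endpoint in place of a custom type, URL-encodes the index name and ID, and sends nothing.

```python
from urllib.parse import quote


def index_request(index, doc_id=None):
    """Return the (method, path) pair for indexing one document into `index`.

    With an explicit ID we PUT to /<index>/_doc/<id>; without one we POST to
    /<index>/_doc and let Elasticsearch generate the ID.
    """
    base = f"/{quote(index, safe='')}/_doc"
    if doc_id is None:
        return "POST", base
    return "PUT", f"{base}/{quote(str(doc_id), safe='')}"


if __name__ == "__main__":
    print(index_request("twitter"))      # auto-generated ID
    print(index_request("customer", 1))  # explicit ID
```

A practical consequence: repeating the PUT re-indexes the same document (its _version goes up), whereas repeating the POST creates a new document with a new ID each time.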

Let's create an index, "twitter":

$ curl -X PUT "localhost:9200/twitter?pretty"

{

"acknowledged" : true,

"shards_acknowledged" : true,

"index" : "twitter"

}

Check that the index has been created:

$ curl "localhost:9200/twitter?pretty"

{

"twitter" : {

"aliases" : { },

"mappings" : { },

"settings" : {

"index" : {

"creation_date" : "1585580705116",

"number_of_shards" : "1",

"number_of_replicas" : "1",

"uuid" : "wYmyP-t6QFq5eHHGpT_bzg",

"version" : {

"created" : "7060199"

},

"provided_name" : "twitter"

}

}

}

}

When creating an index, we can optionally specify a request body containing:

- Index aliases

- Mappings for fields in the index

- Settings for the index
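These three optional parts map directly onto top-level keys of the JSON body. The sketch below (create_index_body is a hypothetical helper of ours) assembles such a body with the standard json module and omits any part that is not supplied; the settings and field names in the example are the ones used elsewhere in this article.

```python
import json


def create_index_body(settings=None, mappings=None, aliases=None):
    """Build the optional JSON body for PUT /<index>.

    Each argument is a plain dict; parts left as None are omitted, so calling
    it with no arguments yields "{}", i.e. an index with default settings.
    """
    body = {}
    if aliases is not None:
        body["aliases"] = aliases
    if mappings is not None:
        body["mappings"] = mappings
    if settings is not None:
        body["settings"] = settings
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    print(create_index_body(
        settings={"index": {"number_of_shards": 1, "number_of_replicas": 0}},
        mappings={"properties": {
            "age": {"type": "integer"},
            "email": {"type": "keyword"},
            "name": {"type": "text"},
        }},
    ))
```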

To delete the index:

$ curl -X DELETE "localhost:9200/twitter?pretty"

{

"acknowledged" : true

}

Let's make sure the index has been deleted:

$ curl "localhost:9200/twitter?pretty"

{

"error" : {

"root_cause" : [

{

"type" : "index_not_found_exception",

"reason" : "no such index [twitter]",

"resource.type" : "index_or_alias",

"resource.id" : "twitter",

"index_uuid" : "_na_",

"index" : "twitter"

}

],

"type" : "index_not_found_exception",

"reason" : "no such index [twitter]",

"resource.type" : "index_or_alias",

"resource.id" : "twitter",

"index_uuid" : "_na_",

"index" : "twitter"

},

"status" : 404

}

As expected, we get an error because we don't have the "twitter" index any more.

Now we want more control over how the index is created, so we will create it again. Because we are using a test setup on our local machine, we probably want a minimal index with just one shard and no replicas, like this:

$ curl -X PUT "localhost:9200/twitter?pretty" -H 'Content-Type: application/json' -d'

{

"settings" : {

"index" : {

"number_of_shards" : 1,

"number_of_replicas" : 0

}

}

}

'

{

"acknowledged" : true,

"shards_acknowledged" : true,

"index" : "twitter"

}

Check what we've done:

$ curl "localhost:9200/twitter?pretty"

{

"twitter" : {

"aliases" : { },

"mappings" : { },

"settings" : {

"index" : {

"creation_date" : "1585585646565",

"number_of_shards" : "1",

"number_of_replicas" : "0",

"uuid" : "zPuLa5kMTGiq2A16FD4zMg",

"version" : {

"created" : "7060199"

},

"provided_name" : "twitter"

}

}

}

}

Internally, Elasticsearch uses Apache Lucene, a powerful search library. Each shard is a self-contained Lucene index, stored on disk as a set of files, and a shard cannot itself be divided further.

Shards are used to distribute data over multiple nodes. That's why we only need one shard on a single-node system. Note that the number of primary shards of an index cannot be changed after the index is created (the number of replicas, by contrast, can be updated at any time).

A replica is a copy of a shard. The shard being copied is called the primary shard, and it can have 0 or more replicas. When we insert data into Elasticsearch, it is stored in the primary shard first, and then in the replicas.
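The fixed shard count follows from how documents are routed. In simplified form, Elasticsearch picks a document's shard as hash(_routing) % number_of_primary_shards, where _routing defaults to the document's _id (the exact 7.x computation is somewhat more involved). The sketch below is illustrative only: pick_shard is our own name, and it uses zlib.crc32 as a stand-in for the Murmur3 hash Elasticsearch actually uses, so its shard numbers will not match a real cluster. What it does show is that changing the number of shards sends the same ID to a different shard, so existing documents could no longer be located.

```python
import zlib


def pick_shard(routing, num_primary_shards):
    """Return the primary shard number for a routing value (by default the _id).

    Stand-in hash: zlib.crc32 instead of Elasticsearch's Murmur3.
    """
    return zlib.crc32(routing.encode("utf-8")) % num_primary_shards


if __name__ == "__main__":
    ids = ["1", "2", "3", "4", "5"]
    for n in (1, 3, 5):
        # With one shard every document lands on shard 0.
        print(n, "shards:", [pick_shard(doc_id, n) for doc_id in ids])
```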

Mapping is the process of defining how a document, and the fields it contains, are stored and indexed.

$ curl -X PUT "localhost:9200/twitter/_mapping?pretty" -H 'Content-Type: application/json' -d'

{

"properties": {

"age": { "type": "integer" },

"email": { "type": "keyword" },

"name": { "type": "text" }

}

}

'

{

"acknowledged" : true

}

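We can see three field types here: an integer field, a keyword field, and a text field. A keyword field is indexed as a single exact value, which suits structured content such as email addresses, while a text field is analyzed (broken into individual, lowercased terms) for full-text search.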

Creating an explicit mapping is optional: we can load data into Elasticsearch without one, and Elasticsearch will guess the field types for us (dynamic mapping).
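Roughly, dynamic mapping chooses a type from each JSON value. The sketch below approximates the default rules for illustration only (infer_mapping and infer_field are our own names): booleans become boolean, whole numbers long, other numbers float, objects nested properties, and strings text with a keyword sub-field (ignore_above 256). It deliberately ignores date detection, which in a real cluster turns date-like strings into date fields, and any non-default mapping settings. The keyword sub-field is why the aggregations later in this article use state.keyword rather than state.

```python
def infer_field(value):
    """Approximate the default dynamic-mapping type for one JSON value.

    Returns None for values that add no field (null, all-null arrays).
    Date detection and numeric detection are deliberately not modelled.
    """
    if value is None:
        return None
    if isinstance(value, list):
        # Arrays take the type of their first non-null element.
        for item in value:
            if item is not None:
                return infer_field(item)
        return None
    if isinstance(value, bool):  # check before int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "long"}
    if isinstance(value, float):
        return {"type": "float"}
    if isinstance(value, dict):
        return {"properties": infer_mapping(value)}
    if isinstance(value, str):
        return {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}},
        }
    raise TypeError(f"unsupported JSON value: {value!r}")


def infer_mapping(doc):
    """Return a properties dict for a JSON document (a Python dict)."""
    props = {}
    for name, value in doc.items():
        field = infer_field(value)
        if field is not None:
            props[name] = field
    return props


if __name__ == "__main__":
    import json
    sample = {"account_number": 0, "balance": 16623, "state": "CO"}
    print(json.dumps(infer_mapping(sample), indent=2))
```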

To view the mapping of the "twitter" index:

$ curl -X GET "localhost:9200/twitter/_mapping?pretty"

{

"twitter" : {

"mappings" : {

"properties" : {

"age" : {

"type" : "integer"

},

"email" : {

"type" : "keyword"

},

"name" : {

"type" : "text"

}

}

}

}

}

To view the mapping of a specific field:

$ curl -X GET "localhost:9200/twitter/_mapping/field/email?pretty"

{

"twitter" : {

"mappings" : {

"email" : {

"full_name" : "email",

"mapping" : {

"email" : {

"type" : "keyword"

}

}

}

}

}

}

Now that we have our index and a mapping, we may want to load some data into Elasticsearch.

There are several ways to load data (for example, via Kibana, Beats/Logstash, or a client library), but here we'll use the Index API to insert a document into the index:

$ curl -X POST "localhost:9200/twitter/_doc?pretty" -H 'Content-Type: application/json' -d'

{

"age": "25",

"email": "JohnDoe@gmail.com",

"name": "John_Doe"

}

'

{

"_index" : "twitter",

"_type" : "_doc",

"_id" : "DFWFLHEBKxEZJmQe-laZ",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 1,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

Because we used POST without an ID, Elasticsearch generated the document ID (_id) automatically; we could have chosen our own _id instead.

We can query the twitter index through its _search endpoint, passing the query in the q URL parameter:

$ curl -X GET "localhost:9200/twitter/_search?q=name:John_Doe&pretty"

{

"took" : 9,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 1.3940738,

"hits" : [

{

"_index" : "twitter",

"_type" : "_doc",

"_id" : "DFWFLHEBKxEZJmQe-laZ",

"_score" : 1.3940738,

"_source" : {

"age" : "25",

"email" : "JohnDoe@gmail.com",

"name" : "John_Doe"

}

}

]

}

}
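The q parameter uses the Lucene query string syntax (field:value), and its value must be URL-encoded once it contains spaces or other special characters, which is easy to forget on the command line. A small stdlib sketch, where uri_search_path is a hypothetical helper of ours:

```python
from urllib.parse import quote, urlencode


def uri_search_path(index, q, **params):
    """Build a URI-search path such as /twitter/_search?q=name%3AJohn_Doe.

    Extra keyword arguments (for example size=3) become more query parameters.
    quote_via=quote encodes spaces as %20 rather than '+'.
    """
    query = urlencode({"q": q, **params}, quote_via=quote)
    return f"/{quote(index, safe='')}/_search?{query}"


if __name__ == "__main__":
    print(uri_search_path("twitter", "name:John_Doe"))
    print(uri_search_path("bank", "address:(mill lane)", size=3))
```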

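Another way of searching is to perform a request body search. Here we use a term query, which looks for an exact value and therefore suits the keyword-typed email field: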

$ curl -X GET "localhost:9200/twitter/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query" : {

"term" : {"email" : "JohnDoe@gmail.com" }

}

}

'

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 0.35667494,

"hits" : [

{

"_index" : "twitter",

"_type" : "_doc",

"_id" : "DFWFLHEBKxEZJmQe-laZ",

"_score" : 0.35667494,

"_source" : {

"age" : "25",

"email" : "JohnDoe@gmail.com",

"name" : "John_Doe"

}

}

]

}

}

Next, we'll play with more queries.

Because we may want to use the Kibana console, let's install Kibana along with the rest of the stack using docker-compose. Just clone Einsteinish-ELK-Stack-with-docker-compose.

Before we do that, let's modify the X-Pack setup in "elasticsearch/config/elasticsearch.yml" to set "xpack.security.enabled: false". Otherwise, our unauthenticated curl requests will fail with the following error:

{"error":{"root_cause":[{"type":"security_exception","reason":"missing authentication credentials for REST request [/]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}}],"type":"security_exception","reason":"missing authentication credentials for REST request [/]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}},"status":401}

Now, let's run the ELK stack with docker-compose:

$ docker-compose up -d

Creating network "einsteinish-elk-stack-with-docker-compose_elk" with driver "bridge"

Creating einsteinish-elk-stack-with-docker-compose_elasticsearch_1 ... done

Creating einsteinish-elk-stack-with-docker-compose_kibana_1 ... done

Creating einsteinish-elk-stack-with-docker-compose_logstash_1 ... done

$ docker-compose ps

Name Command State Ports

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

einsteinish-elk-stack-with-docker-compose_elasticsearch_1 /usr/local/bin/docker-entr ... Up 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp

einsteinish-elk-stack-with-docker-compose_kibana_1 /usr/local/bin/dumb-init - ... Up 0.0.0.0:5601->5601/tcp

einsteinish-elk-stack-with-docker-compose_logstash_1 /usr/local/bin/docker-entr ... Up 0.0.0.0:5000->5000/tcp, 0.0.0.0:5000->5000/udp, 5044/tcp, 0.0.0.0:9600->9600/tcp


We'll start with the cat APIs, which are intended only for human consumption through the Kibana console or the command line; they are not meant for use by applications.

To list all available commands:

$ curl -X GET "localhost:9200/_cat"

=^.^=

/_cat/allocation

/_cat/shards

/_cat/shards/{index}

/_cat/master

/_cat/nodes

/_cat/tasks

/_cat/indices

/_cat/indices/{index}

/_cat/segments

/_cat/segments/{index}

/_cat/count

/_cat/count/{index}

/_cat/recovery

/_cat/recovery/{index}

/_cat/health

/_cat/pending_tasks

/_cat/aliases

/_cat/aliases/{alias}

/_cat/thread_pool

/_cat/thread_pool/{thread_pools}

/_cat/plugins

/_cat/fielddata

/_cat/fielddata/{fields}

/_cat/nodeattrs

/_cat/repositories

/_cat/snapshots/{repository}

/_cat/templates

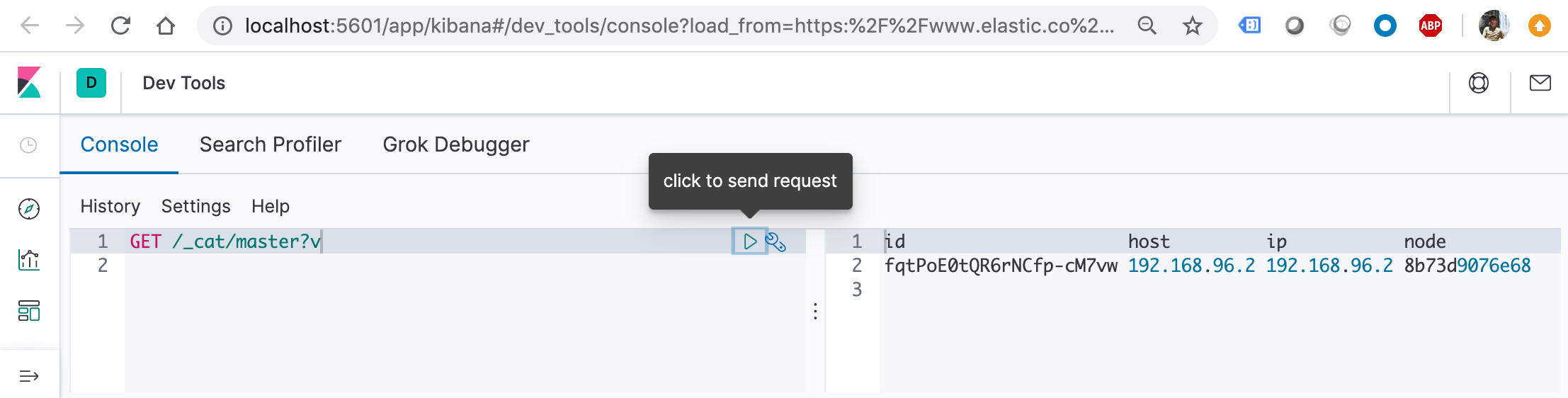

Each of the _cat commands accepts a query string parameter v to turn on verbose output. For example:

where we used Kibana console.

$ curl -X GET "localhost:9200/_cat/nodes?" 192.168.96.2 60 92 5 0.35 0.32 0.41 dilm * 8b73d9076e68

The h query string parameter forces only the listed columns to appear:

$ curl -X GET "localhost:9200/_cat/nodes?h=ip,port,heapPercent,name&pretty"
192.168.96.2 9300 67 8b73d9076e68

We can also request multiple columns using simple wildcards, like /_cat/thread_pool?h=ip,queue* to get all headers (or aliases) starting with queue:

$ curl -X GET "localhost:9200/_cat/thread_pool?h=ip,queue*"
192.168.96.2 0 16
192.168.96.2 0 100
...
192.168.96.2 0 -1
192.168.96.2 0 4
192.168.96.2 0 -1
192.168.96.2 0 1000
192.168.96.2 0 200

To find the largest index in our cluster (by storage used by all of its shards, not by number of documents), the /_cat/indices API is ideal. We only need to add three things to the API request:

- The bytes query string parameter with a value of b to get byte-level resolution.

- The s (sort) parameter with a value of store.size:desc to sort the output by shard storage in descending order.

- The v (verbose) parameter to include column headings in the response.

$ curl -X GET "localhost:9200/_cat/indices?bytes=b&s=store.size:desc&v"
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open .monitoring-es-7-2020.04.06 XQSHRxs7RsOZ3ZeLsa0Y_Q 1 0 77410 55160 34506536 34506536
green open .monitoring-es-7-2020.04.10 Gjs4h4dqTIWKC3owN0cjqQ 1 0 9435 1443 10628315 10628315
green open .monitoring-logstash-7-2020.04.06 6itFo78lShiWIb1e5i6GKg 1 0 43969 0 3007501 3007501
green open .monitoring-kibana-7-2020.04.06 LWbl13UVQLq_cWwkCccatA 1 0 5522 0 1220685 1220685
green open .monitoring-logstash-7-2020.04.10 myGRrPNMRlebzYfOdBbHUQ 1 0 2577 0 542115 542115
green open .monitoring-kibana-7-2020.04.10 knd52K_vSTellI_qhGPYpA 1 0 544 0 269953 269953
green open .security-7 e21FT4JoQ2WML_oax2GFYA 1 0 36 0 99098 99098
green open .kibana_1 2yJ-CzinQ-Czv0H3Rg4mQg 1 0 10 1 39590 39590
yellow open logstash-2020.04.06-000001 YShJ9NKUQO-4TuwhS0MlXA 1 1 100 0 36727 36727
green open ilm-history-1-000001 rVfV3nLQSXOM7c6yN68dbg 1 0 18 0 32919 32919
green open .kibana_task_manager_1 y29CTX98TEuZt3pb6lnXhA 1 0 2 0 6823 6823
green open .apm-agent-configuration zC5fg2AhSVK0TvV3WUcv_Q 1 0 0 0 283 283

The following two requests give the same response in JSON format, one using the format=json parameter and the other an Accept header:

$ curl 'localhost:9200/_cat/indices?format=json&pretty'

[

{

"health" : "green",

"status" : "open",

"index" : ".security-7",

"uuid" : "e21FT4JoQ2WML_oax2GFYA",

"pri" : "1",

"rep" : "0",

"docs.count" : "36",

"docs.deleted" : "0",

"store.size" : "96.7kb",

"pri.store.size" : "96.7kb"

},

...

$ curl 'localhost:9200/_cat/indices?pretty' -H "Accept: application/json"

[

{

"health" : "green",

"status" : "open",

"index" : ".security-7",

"uuid" : "e21FT4JoQ2WML_oax2GFYA",

"pri" : "1",

"rep" : "0",

"docs.count" : "36",

"docs.deleted" : "0",

"store.size" : "96.7kb",

"pri.store.size" : "96.7kb"

},

...

The s query string parameter sorts the table by the columns specified as the parameter value. Columns are specified either by name or by alias, as a comma-separated string. By default, sorting is done in ascending order; appending :desc to a column inverts the ordering for that column. :asc is also accepted but behaves the same as the default sort order.

For example, with a sort string s=column1,column2:desc,column3, the table will be sorted in ascending order by column1, in descending order by column2, and in ascending order by column3.
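The s semantics can be mimicked client-side, which is a handy way to check what a given sort string will do. The sketch below (parse_sort and sort_rows are hypothetical names of ours) parses s into (column, descending) pairs and applies them to rows such as those returned with format=json, using Python's stable sort one key at a time, least significant first. Comparing numeric-looking strings as numbers and everything else as text is our own assumption; Elasticsearch sorts each column according to its own data type.

```python
def parse_sort(s):
    """Parse a cat-API sort string like 'column1,column2:desc' into pairs."""
    keys = []
    for part in s.split(","):
        name, _, direction = part.strip().partition(":")
        if direction not in ("", "asc", "desc"):
            raise ValueError(f"bad sort direction in {part!r}")
        keys.append((name, direction == "desc"))
    return keys


def _sort_value(value):
    # Assumption: numeric-looking strings compare as numbers, others as text.
    try:
        return (0, float(value))
    except (TypeError, ValueError):
        return (1, str(value))


def sort_rows(rows, s):
    """Sort a list of dicts the way ?s=... would, using stable multi-pass sorting."""
    result = list(rows)
    # Least significant key first; Python's sort is stable, even with reverse=True.
    for name, descending in reversed(parse_sort(s)):
        result.sort(key=lambda row: _sort_value(row[name]), reverse=descending)
    return result


if __name__ == "__main__":
    rows = [
        {"index": "b", "docs.count": "10"},
        {"index": "a", "docs.count": "9"},
        {"index": "c", "docs.count": "10"},
    ]
    print([r["index"] for r in sort_rows(rows, "docs.count:desc,index")])
```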

Let's put JSON documents into an Elasticsearch index.

We can do this directly with a simple PUT request that specifies the index we want to add the document to, a unique document ID, and one or more "field": "value" pairs in the request body:

$ curl -X PUT "localhost:9200/customer/_doc/1?pretty" -H 'Content-Type: application/json' -d'

{

"name": "John Doe"

}

'

{

"_index" : "customer",

"_type" : "_doc",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

This request automatically creates the customer index if it doesn’t already exist, adds a new document that has an ID of 1, and stores and indexes the name field.

The new document is available immediately from any node in the cluster. We can retrieve it with a GET request that specifies its document ID:

$ curl -X GET "localhost:9200/customer/_doc/1?pretty"

{

"_index" : "customer",

"_type" : "_doc",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "John Doe"

}

}

If we have a lot of documents to index, we can submit them in batches with the bulk API (https://www.elastic.co/guide/en/elasticsearch/reference/7.6/docs-bulk.html).

Let's download the accounts.json sample data set, a randomly generated data set of user accounts, each with the following information:

{

"account_number": 0,

"balance": 16623,

"firstname": "Bradshaw",

"lastname": "Mckenzie",

"age": 29,

"gender": "F",

"address": "244 Columbus Place",

"employer": "Euron",

"email": "bradshawmckenzie@euron.com",

"city": "Hobucken",

"state": "CO"

}

We're going to index the account data into the bank index with the following _bulk request:

$ curl -H "Content-Type: application/json" -XPOST "localhost:9200/bank/_bulk?pretty&refresh" --data-binary "@accounts.json"

{

"took" : 711,

"errors" : false,

"items" : [

{

"index" : {

"_index" : "bank",

"_type" : "_doc",

"_id" : "1",

"_version" : 1,

"result" : "created",

"forced_refresh" : true,

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1,

"status" : 201

}

},

...

{

"index" : {

"_index" : "bank",

"_type" : "_doc",

"_id" : "995",

"_version" : 1,

"result" : "created",

"forced_refresh" : true,

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 999,

"_primary_term" : 1,

"status" : 201

}

}

]

}

The --data-binary option posts data exactly as specified, with no extra processing whatsoever. By contrast, --data (or -d) sends the data in a POST request the same way a browser does when a user fills in an HTML form and presses the submit button, by default with the content type application/x-www-form-urlencoded; and when -d reads from a file with @, curl strips out carriage returns and newlines. Since the bulk API relies on newlines to separate its lines, we use --data-binary.
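The _bulk body is newline-delimited JSON: each action line (here an "index" action) is followed by the document source on the next line, and the body must end with a newline. The sketch below, with to_bulk_ndjson as a hypothetical helper of ours, builds such a body from Python dicts. We assume accounts.json is laid out the same way, with each account's account_number used as its _id, which is consistent with the IDs in the responses in this article.

```python
import json


def to_bulk_ndjson(docs, id_field=None):
    """Serialize documents into a _bulk request body (NDJSON).

    Each document becomes an "index" action line followed by its source line.
    If id_field is given, that field's value is used as the document _id.
    """
    lines = []
    for doc in docs:
        action = {"index": {}}
        if id_field is not None:
            action["index"]["_id"] = str(doc[id_field])
        lines.append(json.dumps(action))
        lines.append(json.dumps(doc))
    if not lines:
        return ""
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    accounts = [
        {"account_number": 0, "balance": 16623, "firstname": "Bradshaw"},
        {"account_number": 1, "balance": 39225, "firstname": "Amber"},
    ]
    print(to_bulk_ndjson(accounts, id_field="account_number"), end="")
```

Written to a file, such a body can be posted with the same curl --data-binary "@file" command used above.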

We can check if the 1,000 documents were indexed successfully:

$ curl -X GET "localhost:9200/_cat/indices?v&s=index&pretty"
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open .apm-agent-configuration zC5fg2AhSVK0TvV3WUcv_Q 1 0 0 0 283b 283b
green open .kibana_1 2yJ-CzinQ-Czv0H3Rg4mQg 1 0 10 1 38.6kb 38.6kb
green open .kibana_task_manager_1 y29CTX98TEuZt3pb6lnXhA 1 0 2 0 32kb 32kb
green open .monitoring-es-7-2020.04.06 XQSHRxs7RsOZ3ZeLsa0Y_Q 1 0 77410 55160 32.9mb 32.9mb
green open .monitoring-es-7-2020.04.10 Gjs4h4dqTIWKC3owN0cjqQ 1 0 30096 0 10.7mb 10.7mb
green open .monitoring-es-7-2020.04.11 FMSpb4JKScGYhu8nEzXt1A 1 0 95 18 695.5kb 695.5kb
green open .monitoring-kibana-7-2020.04.06 LWbl13UVQLq_cWwkCccatA 1 0 5522 0 1.1mb 1.1mb
green open .monitoring-kibana-7-2020.04.10 knd52K_vSTellI_qhGPYpA 1 0 1752 0 534.6kb 534.6kb
green open .monitoring-kibana-7-2020.04.11 GxM1BDKvRkGTv0gWyt8U_A 1 0 3 0 42.9kb 42.9kb
green open .monitoring-logstash-7-2020.04.06 6itFo78lShiWIb1e5i6GKg 1 0 43969 0 2.8mb 2.8mb
green open .monitoring-logstash-7-2020.04.10 myGRrPNMRlebzYfOdBbHUQ 1 0 8617 0 1.1mb 1.1mb
green open .monitoring-logstash-7-2020.04.11 TAjxbas0Rd-5mb2JOmvr0A 1 0 15 0 95.5kb 95.5kb
green open .security-7 e21FT4JoQ2WML_oax2GFYA 1 0 36 0 96.7kb 96.7kb
yellow open bank bDhhObs0SMiHpPJti21rZA 1 1 1000 0 414.1kb 414.1kb
yellow open customer Q68qN_NBSOqz3dnWG6P0yQ 1 1 1 0 3.4kb 3.4kb
green open ilm-history-1-000001 rVfV3nLQSXOM7c6yN68dbg 1 0 18 0 32.1kb 32.1kb
yellow open logstash-2020.04.06-000001 YShJ9NKUQO-4TuwhS0MlXA 1 1 100 0 35.8kb 35.8kb

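To see only the bank index:

$ curl -X GET "localhost:9200/_cat/indices/bank?v&pretty"
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
yellow open bank bDhhObs0SMiHpPJti21rZA 1 1 1000 0 414.1kb 414.1kb

The bank index (like customer) is yellow because it was created with the default of one replica, and a replica is never allocated to the same node as its primary, so on our single-node cluster it remains unassigned.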

Now that we have ingested some data into an Elasticsearch index, we can search it by sending requests to the _search endpoint. To access the full suite of search capabilities, we use the Elasticsearch Query DSL to specify the search criteria in the request body. We specify the name of the index we want to search in the request URI.

The following request, for example, retrieves all documents in the bank index sorted by account number:

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_all": {} },

"sort": [

{ "account_number": "asc" }

]

}

'

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "0",

"_score" : null,

"_source" : {

"account_number" : 0,

"balance" : 16623,

"firstname" : "Bradshaw",

"lastname" : "Mckenzie",

"age" : 29,

"gender" : "F",

"address" : "244 Columbus Place",

"employer" : "Euron",

"email" : "bradshawmckenzie@euron.com",

"city" : "Hobucken",

"state" : "CO"

},

"sort" : [

0

]

},

...

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "9",

"_score" : null,

"_source" : {

"account_number" : 9,

"balance" : 24776,

"firstname" : "Opal",

"lastname" : "Meadows",

"age" : 39,

"gender" : "M",

"address" : "963 Neptune Avenue",

"employer" : "Cedward",

"email" : "opalmeadows@cedward.com",

"city" : "Olney",

"state" : "OH"

},

"sort" : [

9

]

}

]

}

}

As we can see from the output above, by default the hits section of the response includes the first 10 documents that match the search criteria.

The response also provides the following information about the search request:

- took – how long it took Elasticsearch to run the query, in milliseconds

- timed_out – whether or not the search request timed out

- _shards – how many shards were searched and a breakdown of how many shards succeeded, failed, or were skipped.

- hits.total.value - how many matching documents were found

- hits.max_score – the score of the most relevant document found

- hits.sort - the document’s sort position (when not sorting by relevance score)

- hits._score - the document’s relevance score (not applicable when using match_all)

To page through the search hits, specify the from and size parameters in our request. For example, the following request gets hits 10 through 12:

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_all": {} },

"sort": [

{ "account_number": "asc" }

],

"from": 10,

"size": 3

}

'

{

"took" : 15,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "10",

"_score" : null,

"_source" : {

"account_number" : 10,

"balance" : 46170,

"firstname" : "Dominique",

"lastname" : "Park",

"age" : 37,

"gender" : "F",

"address" : "100 Gatling Place",

"employer" : "Conjurica",

"email" : "dominiquepark@conjurica.com",

"city" : "Omar",

"state" : "NJ"

},

"sort" : [

10

]

},

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "11",

"_score" : null,

"_source" : {

"account_number" : 11,

"balance" : 20203,

"firstname" : "Jenkins",

"lastname" : "Haney",

"age" : 20,

"gender" : "M",

"address" : "740 Ferry Place",

"employer" : "Qimonk",

"email" : "jenkinshaney@qimonk.com",

"city" : "Steinhatchee",

"state" : "GA"

},

"sort" : [

11

]

},

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "12",

"_score" : null,

"_source" : {

"account_number" : 12,

"balance" : 37055,

"firstname" : "Stafford",

"lastname" : "Brock",

"age" : 20,

"gender" : "F",

"address" : "296 Wythe Avenue",

"employer" : "Uncorp",

"email" : "staffordbrock@uncorp.com",

"city" : "Bend",

"state" : "AL"

},

"sort" : [

12

]

}

]

}

}
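Since from is a zero-based offset, paging arithmetic is simple: page n (counting from 0) with page size k starts at from = n * k. The hypothetical helper below (page_body is our own name) builds the match_all body used above for any page. By default, from + size may not exceed 10,000 (the index.max_result_window setting), so the sketch rejects deeper pages; Elasticsearch offers search_after or scroll for those.

```python
import json

MAX_RESULT_WINDOW = 10_000  # default value of index.max_result_window


def page_body(page, size, sort_field="account_number"):
    """Return a _search body for zero-based `page` of `size` hits, sorted ascending."""
    if page < 0 or size < 1:
        raise ValueError("page must be >= 0 and size >= 1")
    start = page * size  # zero-based offset of the first hit on this page
    if start + size > MAX_RESULT_WINDOW:
        raise ValueError("from + size exceeds the default max_result_window")
    return {
        "query": {"match_all": {}},
        "sort": [{sort_field: "asc"}],
        "from": start,
        "size": size,
    }


if __name__ == "__main__":
    print(json.dumps(page_body(2, 5), indent=2))  # hits 10 through 14
```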

Now we can start to construct queries that are a bit more interesting than match_all.

To search for specific terms within a field, we can use a match query. For example, the following request searches the address field to find customers whose addresses contain mill or lane:

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match": { "address": "mill lane" } }

}

'

{

"took" : 18,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 19,

"relation" : "eq"

},

"max_score" : 9.507477,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "136",

"_score" : 9.507477,

"_source" : {

"account_number" : 136,

"balance" : 45801,

"firstname" : "Winnie",

"lastname" : "Holland",

"age" : 38,

"gender" : "M",

"address" : "198 Mill Lane",

"employer" : "Neteria",

"email" : "winnieholland@neteria.com",

"city" : "Urie",

"state" : "IL"

}

},

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "970",

"_score" : 5.4032025,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

},

...

To perform a phrase search rather than matching individual terms, we use match_phrase instead of match. For example, the following request only matches addresses that contain the phrase mill lane:

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_phrase": { "address": "mill lane" } }

}

'

{

"took" : 45,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 9.507477,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "136",

"_score" : 9.507477,

"_source" : {

"account_number" : 136,

"balance" : 45801,

"firstname" : "Winnie",

"lastname" : "Holland",

"age" : 38,

"gender" : "M",

"address" : "198 Mill Lane",

"employer" : "Neteria",

"email" : "winnieholland@neteria.com",

"city" : "Urie",

"state" : "IL"

}

}

]

}

}
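The difference between the two queries shows up even with a toy analyzer. The sketch below is a deliberate simplification, not Elasticsearch's implementation: analyze lowercases and splits on non-alphanumeric characters (roughly what the standard analyzer does for these addresses), match is modelled as "any query term present" (the default OR operator), and match_phrase as "all terms present at consecutive positions". Scoring is ignored entirely, and all three function names are our own.

```python
import re


def analyze(text):
    """Toy analyzer: lowercase, then split on runs of non-alphanumeric characters."""
    return [tok for tok in re.split(r"[^0-9a-z]+", text.lower()) if tok]


def match(field_text, query):
    """match with the default OR operator: any query term appears in the field."""
    terms = set(analyze(field_text))
    return any(term in terms for term in analyze(query))


def match_phrase(field_text, query):
    """match_phrase: all query terms appear in order at consecutive positions."""
    tokens, phrase = analyze(field_text), analyze(query)
    n = len(phrase)
    if n == 0:
        return False
    return any(tokens[i:i + n] == phrase for i in range(len(tokens) - n + 1))


if __name__ == "__main__":
    for address in ["198 Mill Lane", "990 Mill Road", "880 Holmes Lane"]:
        print(address, match(address, "mill lane"), match_phrase(address, "mill lane"))
```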

To construct more complex queries, we can use a bool query to combine multiple query criteria. We can designate criteria as required (must match), desirable (should match), or undesirable (must not match).

For example, the following request searches the bank index for accounts that belong to customers who are 33 years old, but excludes anyone who lives in Idaho (ID):

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": {

"bool": {

"must": [

{ "match": { "age": "33" } }

],

"must_not": [

{ "match": { "state": "ID" } }

]

}

}

}

'

{

"took" : 6,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 50,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "18",

"_score" : 1.0,

"_source" : {

"account_number" : 18,

"balance" : 4180,

"firstname" : "Dale",

"lastname" : "Adams",

"age" : 33,

"gender" : "M",

"address" : "467 Hutchinson Court",

"employer" : "Boink",

"email" : "daleadams@boink.com",

"city" : "Orick",

"state" : "MD"

}

},

...

Each must, should, and must_not element in a Boolean query is referred to as a query clause. How well a document meets the criteria in each must or should clause contributes to the document’s relevance score. The higher the score, the better the document matches our search criteria. By default, Elasticsearch returns documents ranked by these relevance scores.

The criteria in a must_not clause are treated as a filter: they affect whether or not a document is included in the results, but do not contribute to how documents are scored. We can also explicitly specify arbitrary filters to include or exclude documents based on structured data.
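Because a bool query is just nested JSON, it is convenient to assemble in code. The sketch below (bool_query is a hypothetical helper of ours) puts scoring clauses under must and should, non-scoring ones under filter and must_not, and drops empty sections. The example combines the age/Idaho query above with the balance range used in the next request as a filter.

```python
import json


def bool_query(must=(), should=(), must_not=(), filter_=()):
    """Assemble a bool query body, omitting clause lists that are empty.

    must/should clauses contribute to the relevance score; filter and
    must_not clauses only include or exclude documents.
    (filter_ avoids shadowing Python's built-in filter.)
    """
    clauses = {
        "must": list(must),
        "should": list(should),
        "must_not": list(must_not),
        "filter": list(filter_),
    }
    return {"query": {"bool": {k: v for k, v in clauses.items() if v}}}


if __name__ == "__main__":
    body = bool_query(
        must=[{"match": {"age": "33"}}],
        must_not=[{"match": {"state": "ID"}}],
        filter_=[{"range": {"balance": {"gte": 20000, "lte": 30000}}}],
    )
    print(json.dumps(body, indent=2))
```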

For example, the following request uses a range filter to limit the results to accounts with a balance between $20,000 and $30,000 (inclusive).

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": {

"bool": {

"must": { "match_all": {} },

"filter": {

"range": {

"balance": {

"gte": 20000,

"lte": 30000

}

}

}

}

}

}

'

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 217,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "bank",

"_type" : "_doc",

"_id" : "49",

"_score" : 1.0,

"_source" : {

"account_number" : 49,

"balance" : 29104,

"firstname" : "Fulton",

"lastname" : "Holt",

"age" : 23,

"gender" : "F",

"address" : "451 Humboldt Street",

"employer" : "Anocha",

"email" : "fultonholt@anocha.com",

"city" : "Sunriver",

"state" : "RI"

}

},

...

Elasticsearch aggregations enable us to get meta-information about our search results and answer questions like, "How many account holders are in Texas?" or "What’s the average balance of accounts in Tennessee?" We can search documents, filter hits, and use aggregations to analyze the results all in one request.

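For example, the following request uses a terms aggregation to group all of the accounts in the bank index by state, and returns the ten states with the most accounts in descending order. Because state was mapped dynamically as a text field with a keyword sub-field, we aggregate on state.keyword; text fields are not available for aggregations by default: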

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 0,

"aggs": {

"group_by_state": {

"terms": {

"field": "state.keyword"

}

}

}

}

'

{

"took" : 11,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"group_by_state" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 743,

"buckets" : [

{

"key" : "TX",

"doc_count" : 30

},

{

"key" : "MD",

"doc_count" : 28

},

{

"key" : "ID",

"doc_count" : 27

},

{

"key" : "AL",

"doc_count" : 25

},

{

"key" : "ME",

"doc_count" : 25

},

{

"key" : "TN",

"doc_count" : 25

},

{

"key" : "WY",

"doc_count" : 25

},

{

"key" : "DC",

"doc_count" : 24

},

{

"key" : "MA",

"doc_count" : 24

},

{

"key" : "ND",

"doc_count" : 24

}

]

}

}

}

The buckets in the response are the values of the state field, and doc_count shows the number of accounts in each state. For example, we can see that there are 27 accounts in ID (Idaho). Because the request set size to 0, the response contains only the aggregation results and omits the matching documents, which would otherwise appear like this:

    "hits" : [
      {
        "_index" : "bank",
        "_type" : "_doc",
        "_id" : "1",
        "_score" : 1.0,
        "_source" : {
          "account_number" : 1,
          "balance" : 39225,
          "firstname" : "Amber",
          "lastname" : "Duke",
          "age" : 32,
          "gender" : "M",
          "address" : "880 Holmes Lane",
          "employer" : "Pyrami",
          "email" : "amberduke@pyrami.com",
          "city" : "Brogan",
          "state" : "IL"
        }
      },
      ...
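To make the bucket arithmetic concrete, here is a small Python sketch that mimics locally what a terms aggregation computes: count documents per term, keep the top `size` terms by count, and add up the rest as sum_other_doc_count. It is an illustration only, not how Elasticsearch implements it; the function name terms_agg and the sample accounts are made up. Ties are broken alphabetically, matching the tie order in the response above (AL, ME, TN, WY). Counting here is exact, as it is for this single-shard index (_shards.total is 1); on a multi-shard index Elasticsearch merges per-shard top terms, so counts can be approximate, and doc_count_error_upper_bound gives an upper bound on that error.

```python
from collections import Counter


def terms_agg(docs, field, size=10):
    """Count documents per value of `field` and keep the top `size` values.

    Buckets are ordered by doc count (descending), ties by key (ascending).
    Returns (buckets, sum_other_doc_count).
    """
    # Documents without the field fall into no bucket.
    counts = Counter(doc[field] for doc in docs if field in doc)
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    buckets = [{"key": key, "doc_count": count} for key, count in ordered[:size]]
    # Documents whose value did not make it into the returned buckets.
    sum_other_doc_count = sum(count for _, count in ordered[size:])
    return buckets, sum_other_doc_count


# Made-up sample accounts; only the grouping field matters here.
# (Against Elasticsearch the request groups on "state.keyword", the keyword sub-field.)
accounts = [
    {"state": "TX"}, {"state": "TX"}, {"state": "TX"},
    {"state": "MD"}, {"state": "MD"},
    {"state": "ID"}, {"state": "AL"},
]

buckets, other = terms_agg(accounts, "state", size=2)
print(buckets)  # [{'key': 'TX', 'doc_count': 3}, {'key': 'MD', 'doc_count': 2}]
print(other)    # 2  (the AL and ID accounts)
```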

We can combine aggregations to build more complex summaries of our data. For example, the following request nests an avg aggregation within the previous group_by_state aggregation to calculate the average account balances for each state.

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'
{
  "size": 0,
  "aggs": {
    "group_by_state": {
      "terms": {
        "field": "state.keyword"
      },
      "aggs": {
        "average_balance": {
          "avg": {
            "field": "balance"
          }
        }
      }
    }
  }
}
'

{
  "took" : 38,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 1000,
      "relation" : "eq"
    },
    "max_score" : null,
    "hits" : [ ]
  },
  "aggregations" : {
    "group_by_state" : {
      "doc_count_error_upper_bound" : 0,
      "sum_other_doc_count" : 743,
      "buckets" : [
        {
          "key" : "TX",
          "doc_count" : 30,
          "average_balance" : {
            "value" : 26073.3
          }
        },
        {
          "key" : "MD",
          "doc_count" : 28,
          "average_balance" : {
            "value" : 26161.535714285714
          }
        },
        {
          "key" : "ID",
          "doc_count" : 27,
          "average_balance" : {
            "value" : 24368.777777777777
          }
        },
        {
          "key" : "AL",
          "doc_count" : 25,
          "average_balance" : {
            "value" : 25739.56
          }
        },
        {
          "key" : "ME",
          "doc_count" : 25,
          "average_balance" : {
            "value" : 21663.0
          }
        },
        {
          "key" : "TN",
          "doc_count" : 25,
          "average_balance" : {
            "value" : 28365.4
          }
        },
        {
          "key" : "WY",
          "doc_count" : 25,
          "average_balance" : {
            "value" : 21731.52
          }
        },
        {
          "key" : "DC",
          "doc_count" : 24,
          "average_balance" : {
            "value" : 23180.583333333332
          }
        },
        {
          "key" : "MA",
          "doc_count" : 24,
          "average_balance" : {
            "value" : 29600.333333333332
          }
        },
        {
          "key" : "ND",
          "doc_count" : 24,
          "average_balance" : {
            "value" : 26577.333333333332
          }
        }
      ]
    }
  }
}
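The nested avg aggregation can be sketched locally the same way: group the documents by state, then average the balance within each group. This is again a hypothetical illustration (terms_with_avg and the sample data are made up), not Elasticsearch's implementation. Like the avg aggregation, it skips documents that lack the averaged field, and it reports None (JSON null) for a bucket with no values to average.

```python
from collections import defaultdict


def terms_with_avg(docs, group_field, avg_field, agg_name="average_balance", size=10):
    """Mimic terms(group_field) with a nested avg(avg_field) sub-aggregation.

    Buckets are ordered by doc count (descending), ties by key (ascending).
    """
    counts = defaultdict(int)
    values = defaultdict(list)
    for doc in docs:
        if group_field not in doc:
            continue  # no bucket for documents without the grouping field
        key = doc[group_field]
        counts[key] += 1
        if avg_field in doc:  # avg ignores documents missing the field
            values[key].append(doc[avg_field])
    buckets = []
    for key, count in counts.items():
        vals = values[key]
        avg = sum(vals) / len(vals) if vals else None  # None plays the role of JSON null
        buckets.append({"key": key, "doc_count": count, agg_name: {"value": avg}})
    buckets.sort(key=lambda b: (-b["doc_count"], b["key"]))
    return buckets[:size]


# Made-up sample accounts, for illustration only.
accounts = [
    {"state": "TX", "balance": 1000},
    {"state": "TX", "balance": 3000},
    {"state": "MD", "balance": 2500},
]
for bucket in terms_with_avg(accounts, "state", "balance"):
    print(bucket["key"], bucket["doc_count"], bucket["average_balance"]["value"])
# TX 2 2000.0
# MD 1 2500.0
```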

Instead of ordering the buckets by document count, we can order them by the result of the nested aggregation. To do that, specify an order within the terms aggregation:

$ curl -X GET "localhost:9200/bank/_search?pretty" -H 'Content-Type: application/json' -d'
{
  "size": 0,
  "aggs": {
    "group_by_state": {
      "terms": {
        "field": "state.keyword",
        "order": {
          "average_balance": "desc"
        }
      },
      "aggs": {
        "average_balance": {
          "avg": {
            "field": "balance"
          }
        }
      }
    }
  }
}
'

{
  "took" : 37,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 1000,
      "relation" : "eq"
    },
    "max_score" : null,
    "hits" : [ ]
  },
  "aggregations" : {
    "group_by_state" : {
      "doc_count_error_upper_bound" : -1,
      "sum_other_doc_count" : 827,
      "buckets" : [
        {
          "key" : "CO",
          "doc_count" : 14,
          "average_balance" : {
            "value" : 32460.35714285714
          }
        },
        {
          "key" : "NE",
          "doc_count" : 16,
          "average_balance" : {
            "value" : 32041.5625
          }
        },
        {
          "key" : "AZ",
          "doc_count" : 14,
          "average_balance" : {
            "value" : 31634.785714285714
          }
        },
        {
          "key" : "MT",
          "doc_count" : 17,
          "average_balance" : {
            "value" : 31147.41176470588
          }
        },
        {
          "key" : "VA",
          "doc_count" : 16,
          "average_balance" : {
            "value" : 30600.0625
          }
        },
        {
          "key" : "GA",
          "doc_count" : 19,
          "average_balance" : {
            "value" : 30089.0
          }
        },
        {
          "key" : "MA",
          "doc_count" : 24,
          "average_balance" : {
            "value" : 29600.333333333332
          }
        },
        {
          "key" : "IL",
          "doc_count" : 22,
          "average_balance" : {
            "value" : 29489.727272727272
          }
        },
        {
          "key" : "NM",
          "doc_count" : 14,
          "average_balance" : {
            "value" : 28792.64285714286
          }
        },
        {
          "key" : "LA",
          "doc_count" : 17,
          "average_balance" : {
            "value" : 28791.823529411766
          }
        }
      ]
    }
  }
}
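The same request can also be sent from a program instead of curl. The sketch below uses only Python's standard library (urllib.request and json); the helper names build_query, summarize, and search are hypothetical, and it assumes the single-node container started earlier is listening on localhost:9200 with the bank index loaded. It sends the body with POST, which the _search endpoint accepts as well as GET.

```python
import json
import urllib.request

# Assumption: the single-node Elasticsearch container is reachable here.
ES_URL = "http://localhost:9200/bank/_search"


def build_query(order_by_avg=True):
    """Build the terms + avg request body used above as a Python dict."""
    terms = {"field": "state.keyword"}
    if order_by_avg:
        # Order buckets by the nested aggregation instead of by doc count.
        terms["order"] = {"average_balance": "desc"}
    return {
        "size": 0,
        "aggs": {
            "group_by_state": {
                "terms": terms,
                "aggs": {"average_balance": {"avg": {"field": "balance"}}},
            }
        },
    }


def summarize(response):
    """Return (state, doc_count, average_balance) tuples from a parsed response."""
    buckets = response["aggregations"]["group_by_state"]["buckets"]
    return [(b["key"], b["doc_count"], b["average_balance"]["value"]) for b in buckets]


def search(body, url=ES_URL):
    """POST a JSON body to the _search endpoint and return the parsed response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

With the container running, `summarize(search(build_query()))` should list CO, NE, AZ, and so on in the same order as the curl output above. Keeping the body builder and the response parsing separate from the network call makes both easy to check without a running cluster.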

