Docker & Kubernetes - Deploying WordPress and MariaDB with Ingress to Minikube using Helm Chart

In this post, we'll deploy WordPress on Minikube using Helm. The WordPress chart also packages the Bitnami MariaDB chart, which is required to bootstrap a MariaDB deployment for the database requirements of the WordPress application.

This post is based on https://github.com/bitnami/charts/tree/master/bitnami/wordpress.

The file structure looks like this:

    .
    ├── Chart.lock
    ├── Chart.yaml
    ├── README.md
    ├── ci
    │   ├── ct-values.yaml
    │   ├── ingress-wildcard-values.yaml
    │   ├── values-hpa-pdb.yaml
    │   └── values-metrics-and-ingress.yaml
    ├── templates
    │   ├── NOTES.txt
    │   ├── _helpers.tpl
    │   ├── configmap.yaml
    │   ├── deployment.yaml
    │   ├── externaldb-secrets.yaml
    │   ├── extra-list.yaml
    │   ├── hpa.yaml
    │   ├── ingress.yaml
    │   ├── metrics-svc.yaml
    │   ├── pdb.yaml
    │   ├── pvc.yaml
    │   ├── secrets.yaml
    │   ├── servicemonitor.yaml
    │   ├── svc.yaml
    │   ├── tests
    │   │   └── test-mariadb-connection.yaml
    │   └── tls-secrets.yaml
    ├── values.schema.json
    └── values.yaml

Minikube start:

    $ minikube start --vm=true --cpus 2 --memory 2492
    minikube v1.13.0 on Darwin 10.13.3
    - KUBECONFIG=/Users/kihyuckhong/.kube/config
    Automatically selected the docker driver. Other choices: hyperkit, virtualbox
    Starting control plane node minikube in cluster minikube
    Creating hyperkit VM (CPUs=2, Memory=2492MB, Disk=20000MB) ...
    Preparing Kubernetes v1.19.0 on Docker 19.03.8 ...
    Verifying Kubernetes components...
    Enabled addons: default-storageclass, storage-provisioner
    Done! kubectl is now configured to use "minikube" by default

With Helm 2, Tiller must first be installed on the Minikube cluster:

    $ helm init
    $HELM_HOME has been configured at /Users/kihyuckhong/.helm.
    Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
    ...

Because the stable repository has been deprecated, the Bitnami-maintained WordPress Helm chart is now located at bitnami/charts.

The Bitnami repository can be added using helm repo add:

    $ helm repo add bitnami https://charts.bitnami.com/bitnami
    $ helm repo list
    NAME      URL
    bitnami   https://charts.bitnami.com/bitnami
    stable    https://charts.helm.sh/stable

To deploy the WordPress chart, we can use either one of the following depending on the Helm version:

    $ helm install my-release bitnami/<chart>          # Helm 3
    $ helm install --name my-release bitnami/<chart>   # Helm 2

Ref: bitnami / charts

In this post, we'll use Helm 3, so issue the following command from the ~/charts/bitnami/wordpress directory:

    $ helm install my-release \
        --set wordpressUsername=admin \
        --set wordpressPassword=password \
        --set mariadb.auth.rootPassword=secretpassword \
        bitnami/wordpress

NAME: my-release

LAST DEPLOYED: Wed Mar 17 16:42:40 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

** Please be patient while the chart is being deployed **

Your WordPress site can be accessed through the following DNS name from within your cluster:

my-release-wordpress.default.svc.cluster.local (port 80)

To access your WordPress site from outside the cluster follow the steps below:

1. Get the WordPress URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w my-release-wordpress'

export SERVICE_IP=$(kubectl get svc --namespace default my-release-wordpress --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo "WordPress URL: http://$SERVICE_IP/"

echo "WordPress Admin URL: http://$SERVICE_IP/admin"

2. Open a browser and access WordPress using the obtained URL.

3. Login with the following credentials below to see your blog:

echo Username: admin

echo Password: $(kubectl get secret --namespace default my-release-wordpress -o jsonpath="{.data.wordpress-password}" | base64 --decode)
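The last command in the notes works because Kubernetes stores Secret values base64-encoded. As a minimal sketch, the two steps (fetch, then decode) can be wrapped in small shell functions. The function names `decode_secret_value` and `wordpress_password` are made up for this example, and the second one assumes kubectl is pointed at the Minikube cluster used above.

```shell
#!/bin/sh

# Decode a single base64-encoded Secret value passed as the first argument.
decode_secret_value() {
    printf '%s' "$1" | base64 --decode
}

# Hypothetical wrapper: fetch and decode the WordPress password of a release.
# Defaults match the release name and namespace used in this post.
wordpress_password() {
    release="${1:-my-release}"
    namespace="${2:-default}"
    encoded=$(kubectl get secret --namespace "$namespace" "${release}-wordpress" \
        -o jsonpath="{.data.wordpress-password}") || return 1
    decode_secret_value "$encoded"
}
```

For the release installed above, `wordpress_password my-release default` should print the value that was passed with `--set wordpressPassword`.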

Although we don't do so here, if we want to install with just the default parameters defined in ./values.yaml, the command should look like this:

$ helm install wordpress bitnami/wordpress -f ./values.yaml

where the values.yaml is available from https://github.com/helm/charts/blob/master/stable/wordpress/values.yaml.
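The same `-f` flag can also carry the overrides that were passed with `--set` earlier. The following is only a sketch: the file name and the helper names `write_values_file` and `install_with_values` are arbitrary choices for illustration, while the keys are the ones used in the `helm install` command above.

```shell
#!/bin/sh

# Write the overrides used with --set above into a values file at path $1.
write_values_file() {
    cat > "$1" <<'EOF'
wordpressUsername: admin
wordpressPassword: password
mariadb:
  auth:
    rootPassword: secretpassword
EOF
}

# Install the chart with that file instead of repeated --set flags.
# Requires helm and a running cluster, so it is not called here.
install_with_values() {
    helm install my-release bitnami/wordpress -f "$1"
}
```

Keeping overrides in a file makes them easier to review and reuse than a long command line, though passwords in a plain file deserve the same care as passwords in shell history.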

Wait a couple of minutes and then check the following:

    $ kubectl get pods
    NAME                                    READY   STATUS    RESTARTS   AGE
    my-release-mariadb-0                    1/1     Running   0          5m42s
    my-release-wordpress-76d8bb6c6c-jz2xj   1/1     Running   1          5m42s

    $ kubectl get svc
    NAME                   TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
    kubernetes             ClusterIP      10.96.0.1       <none>        443/TCP                      10m
    my-release-mariadb     ClusterIP      10.110.165.71   <none>        3306/TCP                     6m5s
    my-release-wordpress   LoadBalancer   10.99.101.249   <pending>     80:32233/TCP,443:30204/TCP   6m5s

    $ kubectl get deployment
    NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
    my-release-wordpress   1/1     1            1           6m52s

    $ kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                               STORAGECLASS   REASON   AGE
    pvc-29cce85e-7beb-443b-84f8-ecfef87121a0   10Gi       RWO            Delete           Bound    default/my-release-wordpress        standard                8m53s
    pvc-f9545501-2cc9-419d-9184-fba15c194d3f   8Gi        RWO            Delete           Bound    default/data-my-release-mariadb-0   standard                8m52s

    $ kubectl get pvc
    NAME                        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    data-my-release-mariadb-0   Bound    pvc-f9545501-2cc9-419d-9184-fba15c194d3f   8Gi        RWO            standard       8m33s
    my-release-wordpress        Bound    pvc-29cce85e-7beb-443b-84f8-ecfef87121a0   10Gi       RWO            standard       8m34s

    $ kubectl get configmap
    NAME                 DATA   AGE
    my-release-mariadb   1      9m23s

    $ kubectl get secrets
    NAME                               TYPE                                  DATA   AGE
    default-token-bqx9f                kubernetes.io/service-account-token   3      14m
    my-release-mariadb                 Opaque                                2      9m43s
    my-release-mariadb-token-vvlgt     kubernetes.io/service-account-token   3      9m43s
    my-release-wordpress               Opaque                                1      9m43s
    sh.helm.release.v1.my-release.v1   helm.sh/release.v1                    1      9m43s

    $ kubectl get statefulsets
    NAME                 READY   AGE
    my-release-mariadb   1/1     10m

    $ kubectl get pods --all-namespaces
    NAMESPACE     NAME                                    READY   STATUS    RESTARTS   AGE
    default       my-release-mariadb-0                    1/1     Running   0          10m
    default       my-release-wordpress-76d8bb6c6c-jz2xj   1/1     Running   1          10m
    kube-system   coredns-f9fd979d6-4wgt6                 1/1     Running   0          14m
    kube-system   etcd-minikube                           1/1     Running   0          14m
    kube-system   kube-apiserver-minikube                 1/1     Running   0          14m
    kube-system   kube-controller-manager-minikube        1/1     Running   0          14m
    kube-system   kube-proxy-wv878                        1/1     Running   0          14m
    kube-system   kube-scheduler-minikube                 1/1     Running   0          14m
    kube-system   storage-provisioner                     1/1     Running   0          14m

    $ helm list
    NAME         NAMESPACE   REVISION   UPDATED                                STATUS     CHART               APP VERSION
    my-release   default     1          2021-03-17 16:52:39.698742 -0700 PDT   deployed   wordpress-10.6.13   5.7.0
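Instead of waiting a fixed couple of minutes, one option is to let kubectl block until both workloads have rolled out. This sketch assumes the resource names follow the `<release>-mariadb` and `<release>-wordpress` pattern seen in the listings above; `wait_for_release` is a hypothetical helper and the 300s timeout is an arbitrary choice.

```shell
#!/bin/sh

# Block until the MariaDB StatefulSet and the WordPress Deployment are rolled out.
# Returns non-zero if either rollout does not finish within the timeout.
wait_for_release() {
    release="${1:-my-release}"
    kubectl rollout status "statefulset/${release}-mariadb" --timeout=300s &&
        kubectl rollout status "deployment/${release}-wordpress" --timeout=300s
}
```

Calling `wait_for_release my-release` right after `helm install` would then return once both workloads report ready, or fail after the timeout.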

    $ kubectl exec -it my-release-mariadb-0 -- dash
    $ ps aux
    USER   PID %CPU %MEM     VSZ   RSS TTY    STAT START TIME COMMAND
    1001     1  0.5  3.3 1474720 84700 ?      Ssl  Mar17 0:03 /opt/bitnami/mariadb/sbin/mysqld --defaul
    1001   733  0.0  0.0    2388   764 pts/0  Ss   00:03 0:00 dash
    1001   784  0.0  0.1    7640  2712 pts/0  R+   00:04 0:00 ps aux
    $ mysql -u root -p
    Enter password:
    Welcome to the MariaDB monitor. Commands end with ; or \g.
    Your MariaDB connection id is 213
    Server version: 10.5.9-MariaDB Source distribution

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MariaDB [(none)]> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | bitnami_wordpress  |
    | information_schema |
    | mysql              |
    | performance_schema |
    | test               |
    +--------------------+
    5 rows in set (0.012 sec)

    MariaDB [(none)]> use bitnami_wordpress;
    Reading table information for completion of table and column names
    You can turn off this feature to get a quicker startup with -A

    Database changed
    MariaDB [bitnami_wordpress]> show tables;
    +-----------------------------+
    | Tables_in_bitnami_wordpress |
    +-----------------------------+
    | wp_commentmeta              |
    | wp_comments                 |
    | wp_links                    |
    | wp_options                  |
    | wp_postmeta                 |
    | wp_posts                    |
    | wp_term_relationships       |
    | wp_term_taxonomy            |
    | wp_termmeta                 |
    | wp_terms                    |
    | wp_usermeta                 |
    | wp_users                    |
    +-----------------------------+
    12 rows in set (0.001 sec)

    MariaDB [bitnami_wordpress]>

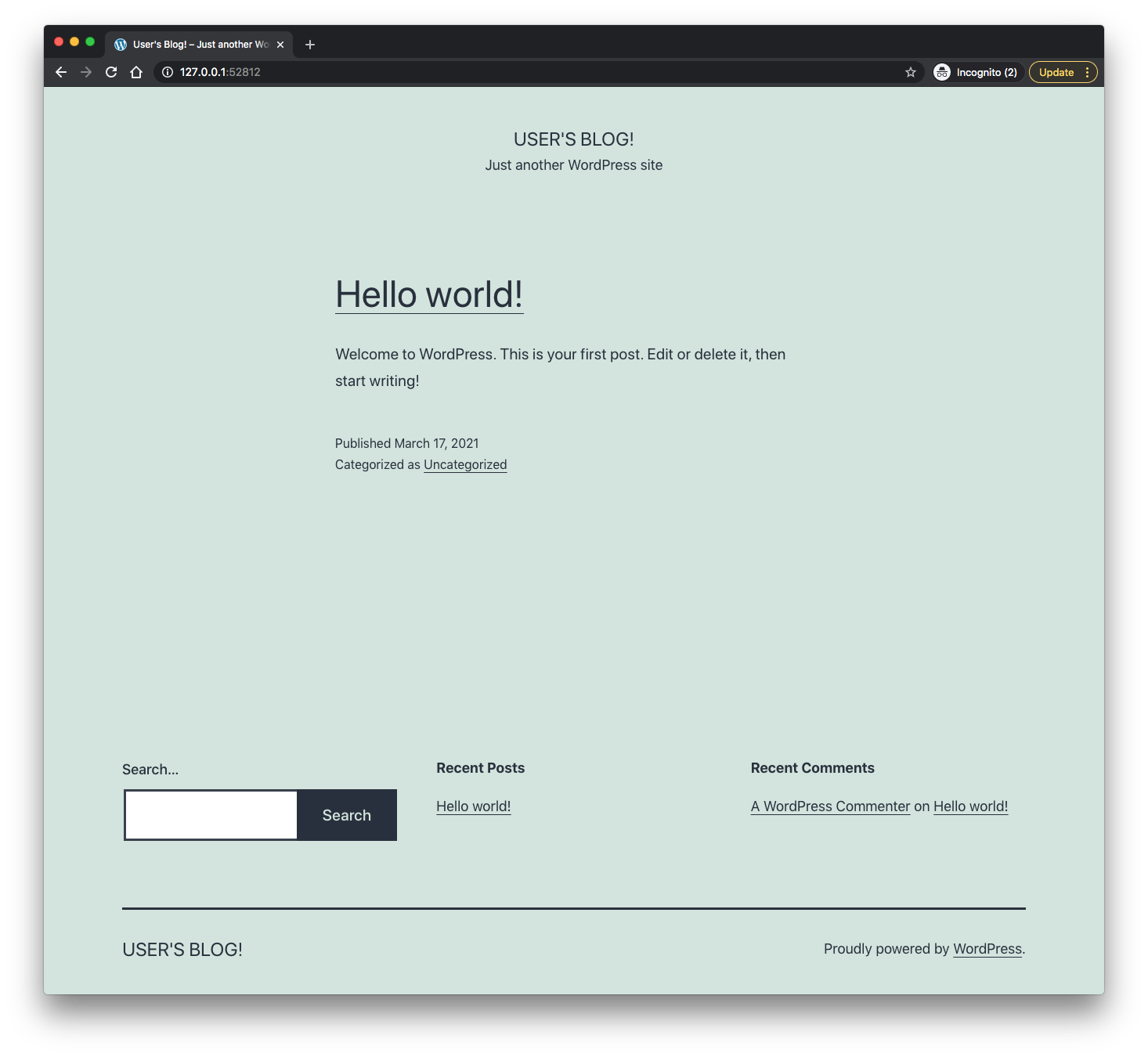

    $ minikube service my-release-wordpress --url
    Starting tunnel for service my-release-wordpress.
    |-----------|----------------------|-------------|------------------------|
    | NAMESPACE |         NAME         | TARGET PORT |          URL           |
    |-----------|----------------------|-------------|------------------------|
    | default   | my-release-wordpress |             | http://127.0.0.1:52812 |
    |           |                      |             | http://127.0.0.1:52813 |
    |-----------|----------------------|-------------|------------------------|
    http://127.0.0.1:52812
    http://127.0.0.1:52813
    Because you are using a Docker driver on darwin, the terminal needs to be open to run it.

In the previous section, we connected to the WordPress service on an ephemeral port. That is not ideal: we should be able to reach the site on port 80.

We'll use an ingress resource to manage external access. An ingress resource defines rules that allow inbound connections to access the services within the cluster. Those rules require an ingress controller to be running inside the cluster.
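To make the idea of an ingress rule concrete, here is a hand-written sketch of a minimal Ingress that sends all HTTP traffic for one host to the WordPress service. It is illustration only: the chart generates its own Ingress from values.yaml, the resource name `wordpress-example` and the helper `write_example_ingress` are hypothetical, the host matches the hostname configured later in this post, and the manifest assumes a cluster that serves `networking.k8s.io/v1` (Kubernetes 1.19 or newer).

```shell
#!/bin/sh

# Write a minimal Ingress manifest to the path given as $1.
# It routes every path on wordpress.local to port 80 of my-release-wordpress.
write_example_ingress() {
    cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wordpress-example
spec:
  rules:
    - host: wordpress.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-release-wordpress
                port:
                  number: 80
EOF
}

# The manifest could then be applied with: kubectl apply -f <file>
# (this only has an effect once an ingress controller is running).
```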

Minikube, by default, uses the ingress-nginx controller which is the one we're going to use. Since it's Minikube's default, we could use it implicitly by enabling the ingress addon:

    $ kubectl get pod -n kube-system
    NAME                               READY   STATUS    RESTARTS   AGE
    coredns-f9fd979d6-9vd7z            1/1     Running   0          7m56s
    etcd-minikube                      1/1     Running   0          7m49s
    kube-apiserver-minikube            1/1     Running   0          7m49s
    kube-controller-manager-minikube   1/1     Running   0          7m49s
    kube-proxy-m8wrd                   1/1     Running   0          7m56s
    kube-scheduler-minikube            1/1     Running   0          7m49s
    storage-provisioner                1/1     Running   1          7m54s

    $ minikube addons enable ingress
    Verifying ingress addon...
    The 'ingress' addon is enabled

    $ kubectl get pod -n kube-system
    NAME                                       READY   STATUS      RESTARTS   AGE
    coredns-f9fd979d6-9vd7z                    1/1     Running     0          10m
    etcd-minikube                              1/1     Running     0          10m
    ingress-nginx-admission-create-vhz42       0/1     Completed   0          106s
    ingress-nginx-admission-patch-p89cc        0/1     Completed   0          106s
    ingress-nginx-controller-789d9c4dc-wrpth   1/1     Running     0          106s
    kube-apiserver-minikube                    1/1     Running     0          10m
    kube-controller-manager-minikube           1/1     Running     0          10m
    kube-proxy-m8wrd                           1/1     Running     0          10m
    kube-scheduler-minikube                    1/1     Running     0          10m
    storage-provisioner                        1/1     Running     1          10m

We'll install an additional ingress controller as a chart dependency, so that when we later deploy to a cloud provider we can rest assured that the same controller is used in all our environments.

~/charts/bitnami/wordpress/Chart.yaml (note that with the move from Helm 2 to Helm 3, dependency information was transferred from requirements.yaml to the Chart.yaml file):

    annotations:
      category: CMS
    apiVersion: v2
    appVersion: 5.7.0
    dependencies:
      - condition: mariadb.enabled
        name: mariadb
        repository: https://charts.bitnami.com/bitnami
        version: 9.x.x
      - name: nginx-ingress
        repository: https://helm.nginx.com/stable
        version: 0.8.0
      - name: common
        repository: https://charts.bitnami.com/bitnami
        tags:
          - bitnami-common
        version: 1.x.x
    ...

Once we have defined dependencies, we can run helm dependency update and it will use our dependency file to download all the specified charts into our charts/ directory for us:

    $ helm dependency update
    Getting updates for unmanaged Helm repositories...
    ...Successfully got an update from the "https://helm.nginx.com/stable" chart repository
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "bitnami" chart repository
    ...Successfully got an update from the "stable" chart repository
    Update Complete. ⎈Happy Helming!⎈
    Saving 3 charts
    Downloading mariadb from repo https://charts.bitnami.com/bitnami
    Downloading nginx-ingress from repo https://helm.nginx.com/stable
    Downloading common from repo https://charts.bitnami.com/bitnami
    Deleting outdated charts

When helm dependency update retrieves charts, it will store them as chart archives in the charts/ directory. So in our case, one would expect to see the following files in the charts directory:

    ~/charts/bitnami/wordpress $ tree
    .
    ├── Chart.lock
    ├── Chart.yaml
    ├── Chart.yaml.SAVED
    ├── README.md
    ├── charts
    │   ├── common-1.4.1.tgz
    │   ├── mariadb-9.3.5.tgz
    │   └── nginx-ingress-0.8.0.tgz
    ├── ci
    │   ├── ct-values.yaml
    │   ├── ingress-wildcard-values.yaml
    │   ├── values-hpa-pdb.yaml
    │   └── values-metrics-and-ingress.yaml
    ├── templates
    │   ├── NOTES.txt
    │   ├── _helpers.tpl
    │   ├── configmap.yaml
    │   ├── deployment.yaml
    │   ├── externaldb-secrets.yaml
    │   ├── extra-list.yaml
    │   ├── hpa.yaml
    │   ├── ingress.yaml
    │   ├── metrics-svc.yaml
    │   ├── pdb.yaml
    │   ├── pvc.yaml
    │   ├── secrets.yaml
    │   ├── servicemonitor.yaml
    │   ├── svc.yaml
    │   ├── tests
    │   │   └── test-mariadb-connection.yaml
    │   └── tls-secrets.yaml
    ├── values.schema.json
    └── values.yaml
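A quick way to confirm that every dependency archive actually landed in charts/ is to look for a `<name>-<version>.tgz` file per dependency. The helper below is a small sketch of that check; `missing_dependencies` is a hypothetical name, and it only inspects file names, not archive contents.

```shell
#!/bin/sh

# Print the name of every dependency that has no <name>-*.tgz archive
# under <chart_dir>/charts. Usage: missing_dependencies <chart_dir> <name>...
missing_dependencies() {
    chart_dir=$1
    shift
    for name in "$@"; do
        found=no
        # An unmatched glob stays literal, so the -e test guards against it.
        for archive in "$chart_dir"/charts/"$name"-*.tgz; do
            if [ -e "$archive" ]; then
                found=yes
            fi
        done
        if [ "$found" = no ]; then
            printf '%s\n' "$name"
        fi
    done
}
```

Run from the chart directory as `missing_dependencies . mariadb nginx-ingress common`; empty output means all three archives are present.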

Now we need to define ingress rules specifying where to route incoming traffic. Our routing rules are simple — route all HTTP traffic to our wordpress service. Note that the nginx-ingress controller provides capabilities such as routing traffic to different services based on path (layer-7 routing), adding sticky sessions, doing TLS termination, etc.

values.yaml:

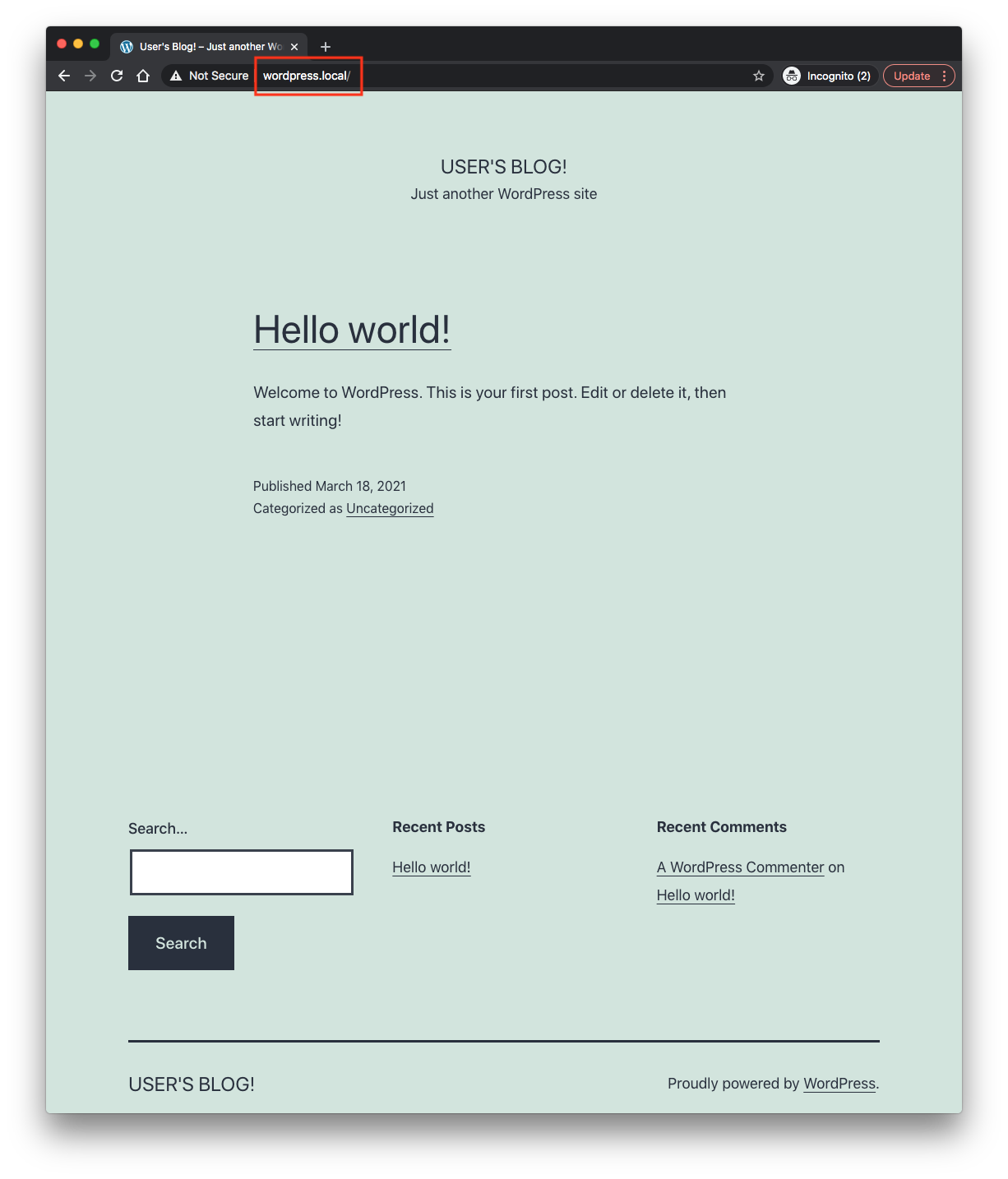

    ...
    ingress:
      ## Set to true to enable ingress record generation
      ##
      enabled: true
      ## Set this to true in order to add the corresponding annotations for cert-manager
      ##
      certManager: false
      ## When the ingress is enabled, a host pointing to this will be created
      ##
      hostname: wordpress.local
    ...
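Before touching the running release, it can help to render the chart locally and inspect the Ingress that these values produce. The sketch below uses `helm template` with `--show-only` to print just templates/ingress.yaml; `preview_ingress` is a hypothetical helper, and the two `--set` flags simply repeat the values shown above so the command also works against an unmodified values.yaml.

```shell
#!/bin/sh

# Render only the Ingress template of the chart in directory $1 (default: .).
# Nothing is sent to the cluster; the manifest is printed to stdout.
preview_ingress() {
    chart_dir="${1:-.}"
    helm template my-release "$chart_dir" \
        --set ingress.enabled=true \
        --set ingress.hostname=wordpress.local \
        --show-only templates/ingress.yaml
}
```

Running `preview_ingress ./` from the chart directory should print a single Ingress manifest whose host is wordpress.local, assuming the dependencies have already been downloaded.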

To apply the change to the running release, we use the helm upgrade command:

$ helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

my-release default 1 2021-03-17 17:57:59.11862 -0700 PDT deployed wordpress-10.6.13 5.7.0

    $ helm upgrade \
        --set wordpressUsername=admin \
        --set wordpressPassword=password \
        --set mariadb.auth.rootPassword=secretpassword \
        --set mariadb.auth.password=secretpassword \
        my-release ./

W0317 22:31:01.745835 52531 warnings.go:70] networking.k8s.io/v1beta1 IngressClass is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 IngressClassList

W0317 22:31:04.680630 52531 warnings.go:70] networking.k8s.io/v1beta1 IngressClass is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 IngressClassList

W0317 22:31:04.694483 52531 warnings.go:70] networking.k8s.io/v1beta1 IngressClass is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 IngressClassList

Release "my-release" has been upgraded. Happy Helming!

NAME: my-release

LAST DEPLOYED: Wed Mar 17 22:31:01 2021

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

** Please be patient while the chart is being deployed **

Your WordPress site can be accessed through the following DNS name from within your cluster:

my-release-wordpress.default.svc.cluster.local (port 80)

To access your WordPress site from outside the cluster follow the steps below:

1. Get the WordPress URL and associate WordPress hostname to your cluster external IP:

export CLUSTER_IP=$(minikube ip) # On Minikube. Use: `kubectl cluster-info` on others K8s clusters

echo "WordPress URL: http://wordpress.local/"

echo "$CLUSTER_IP wordpress.local" | sudo tee -a /etc/hosts

2. Open a browser and access WordPress using the obtained URL.

3. Login with the following credentials below to see your blog:

echo Username: admin

echo Password: $(kubectl get secret --namespace default my-release-wordpress -o jsonpath="{.data.wordpress-password}" | base64 --decode)

Following the steps from the output:

$ helm get values my-release

USER-SUPPLIED VALUES:

mariadb:

auth:

password: secretpassword

rootPassword: secretpassword

wordpressPassword: password

wordpressUsername: admin

$ kubectl cluster-info

Kubernetes master is running at https://192.168.64.12:8443

KubeDNS is running at https://192.168.64.12:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ echo "192.168.64.12 wordpress.local" | sudo tee -a /etc/hosts

Password:

192.168.64.12 wordpress.local

$ cat /etc/hosts

...

192.168.64.12 wordpress.local

...
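Because `tee -a` appends unconditionally, running the command twice leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper, it takes the file as an optional third argument so it can be tried on a scratch file first, and writing to the real /etc/hosts still needs root privileges.

```shell
#!/bin/sh

# Append "IP HOSTNAME" to a hosts file unless a non-comment line
# already lists HOSTNAME. Usage: add_host_entry <ip> <hostname> [file]
add_host_entry() {
    ip=$1
    host=$2
    file="${3:-/etc/hosts}"
    # awk exits 0 when the hostname already appears as an alias on some line.
    if awk -v h="$host" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }
    ' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$host" >> "$file"
}
```

On Minikube the call could look like `add_host_entry "$(minikube ip)" wordpress.local`, run from a root shell.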

The source code is available from the "ingress" branch: https://github.com/Einsteinish/Helm-Chart-for-Wordpress-and-MariaDB.git.

To uninstall/delete the my-release deployment:

    $ helm uninstall my-release
    W0317 23:05:15.806918 52851 warnings.go:70] networking.k8s.io/v1beta1 IngressClass is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 IngressClassList
    release "my-release" uninstalled
    $ helm ls
    NAME   NAMESPACE   REVISION   UPDATED   STATUS   CHART   APP VERSION

