GitOps with ArgoCD for Continuous Delivery to Kubernetes clusters (minikube) - guestbook

ArgoCD follows the GitOps pattern of using Git repositories as the source of truth for the desired application state. In other words, ArgoCD automatically synchronizes the cluster to the desired state defined in a Git repository.

Each workload is defined declaratively through a resource manifest in a YAML file. Argo CD checks whether the state defined in the Git repository matches what is running on the cluster and synchronizes the cluster if it detects a difference.

Kubernetes manifests can be specified in several ways:

- Kustomize applications

- Helm Charts

- Plain directory of YAML/JSON manifests

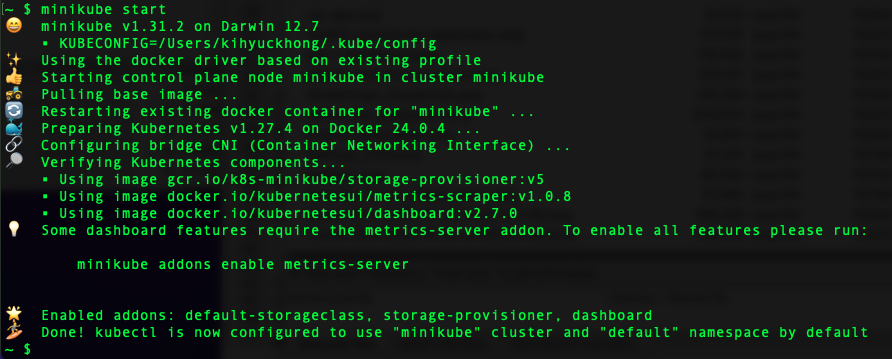

In this post, we'll use the third option (a plain directory of YAML manifests) to install the guestbook app on a Minikube cluster.

ArgoCD is a declarative, GitOps continuous delivery (CD) tool for Kubernetes!

Ref: Argo CD - Declarative GitOps CD for Kubernetes: Getting Started.

The Argo CD project can be found at https://github.com/argoproj/argo-cd.

Create a separate namespace for ArgoCD:

$ kubectl create namespace argocd
namespace/argocd created

$ kubectl get ns
NAME                   STATUS   AGE
argocd                 Active   3s
default                Active   11d
kube-node-lease        Active   11d
kube-public            Active   11d
kube-system            Active   11d
kubernetes-dashboard   Active   11d

Then, we can install ArgoCD into the argocd namespace:

$ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/master/manifests/install.yaml
customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io created
...
networkpolicy.networking.k8s.io/argocd-server-network-policy created

$ kubectl get all -n argocd
NAME                                                   READY   STATUS    RESTARTS   AGE
pod/argocd-application-controller-0                    1/1     Running   0          2m5s
pod/argocd-applicationset-controller-d9b4bb7cc-9j9rv   1/1     Running   0          2m11s
pod/argocd-dex-server-78f56465b-hb9d6                  1/1     Running   0          2m11s
pod/argocd-notifications-controller-7fb8c4c777-7pczk   1/1     Running   0          2m11s
pod/argocd-redis-b5d6bf5f5-mwb62                       1/1     Running   0          2m11s
pod/argocd-repo-server-78fd68f9f5-qllr7                1/1     Running   0          2m8s
pod/argocd-server-6fd9d5cfcd-4kl8c                     1/1     Running   0          2m7s

NAME                                              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
service/argocd-applicationset-controller          ClusterIP   10.104.194.111   <none>        7000/TCP,8080/TCP            2m12s
service/argocd-dex-server                         ClusterIP   10.106.180.122   <none>        5556/TCP,5557/TCP,5558/TCP   2m12s
service/argocd-metrics                            ClusterIP   10.103.194.74    <none>        8082/TCP                     2m12s
service/argocd-notifications-controller-metrics   ClusterIP   10.97.222.111    <none>        9001/TCP                     2m12s
service/argocd-redis                              ClusterIP   10.99.2.148      <none>        6379/TCP                     2m12s
service/argocd-repo-server                        ClusterIP   10.104.159.44    <none>        8081/TCP,8084/TCP            2m11s
service/argocd-server                             ClusterIP   10.105.204.172   <none>        80/TCP,443/TCP               2m11s
service/argocd-server-metrics                     ClusterIP   10.101.58.118    <none>        8083/TCP                     2m11s

NAME                                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/argocd-applicationset-controller   1/1     1            1           2m11s
deployment.apps/argocd-dex-server                  1/1     1            1           2m11s
deployment.apps/argocd-notifications-controller    1/1     1            1           2m11s
deployment.apps/argocd-redis                       1/1     1            1           2m11s
deployment.apps/argocd-repo-server                 1/1     1            1           2m10s
deployment.apps/argocd-server                      1/1     1            1           2m9s

NAME                                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/argocd-applicationset-controller-d9b4bb7cc    1         1         1       2m12s
replicaset.apps/argocd-dex-server-78f56465b                   1         1         1       2m12s
replicaset.apps/argocd-notifications-controller-7fb8c4c777    1         1         1       2m12s
replicaset.apps/argocd-redis-b5d6bf5f5                        1         1         1       2m12s
replicaset.apps/argocd-repo-server-78fd68f9f5                 1         1         1       2m10s
replicaset.apps/argocd-server-6fd9d5cfcd                      1         1         1       2m8s

NAME                                             READY   AGE
statefulset.apps/argocd-application-controller   1/1     2m7s

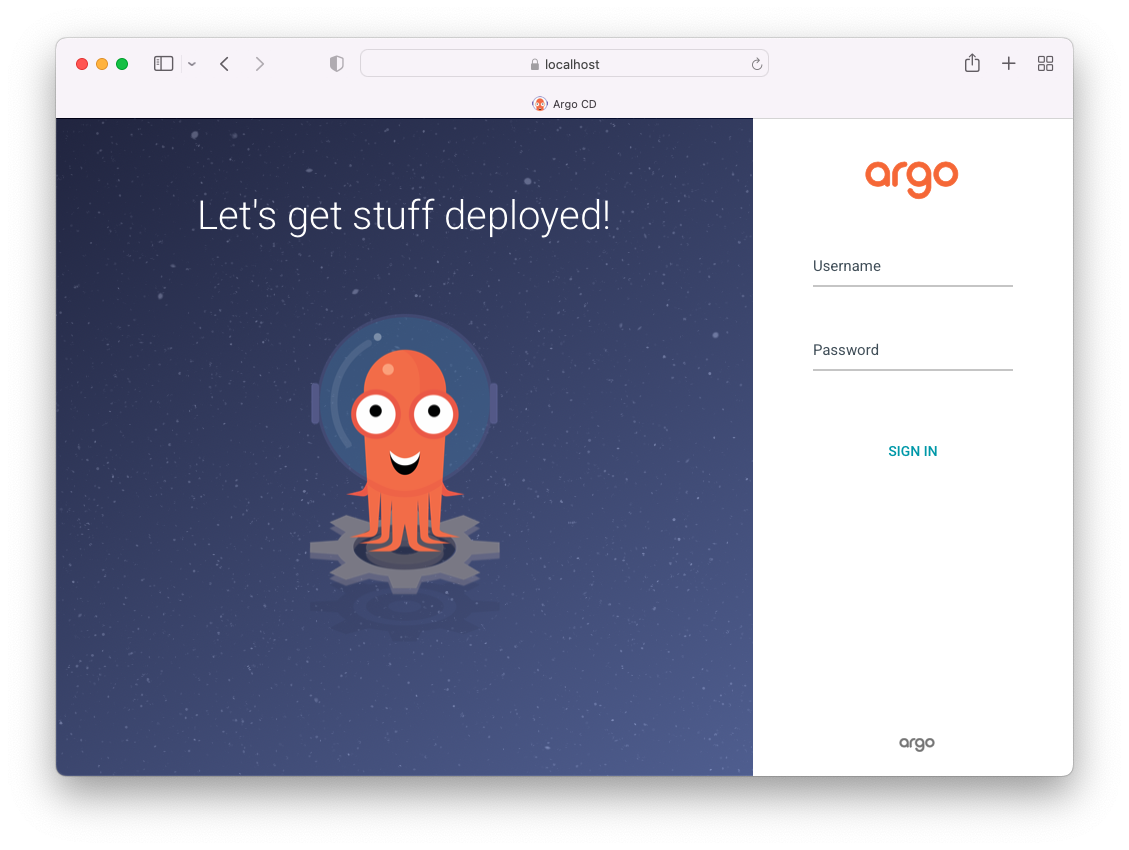
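Instead of re-running kubectl get all until every pod shows Running, the wait can be scripted with kubectl wait. The following is a minimal sketch: the helper name wait_for_argocd and the 300s default timeout are assumptions for illustration, not part of the original walkthrough.

```shell
# Hypothetical helper: block until every pod in the namespace reports Ready.
# Arguments (both optional): namespace (default: argocd), timeout (default: 300s).
wait_for_argocd() {
  ns="${1:-argocd}"
  timeout="${2:-300s}"
  kubectl wait --for=condition=Ready pods --all -n "$ns" --timeout="$timeout"
}
```

Calling wait_for_argocd with no arguments waits on the argocd namespace; the command exits non-zero if the timeout expires first.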

Once the pods are ready, ArgoCD is running. However, the ArgoCD API server (which also serves the web UI) is not accessible from outside the cluster. We'll use kubectl port-forward to forward a local port to the service:

$ kubectl port-forward svc/argocd-server -n argocd 8080:443
Forwarding from 127.0.0.1:8080 -> 8080
Forwarding from [::1]:8080 -> 8080

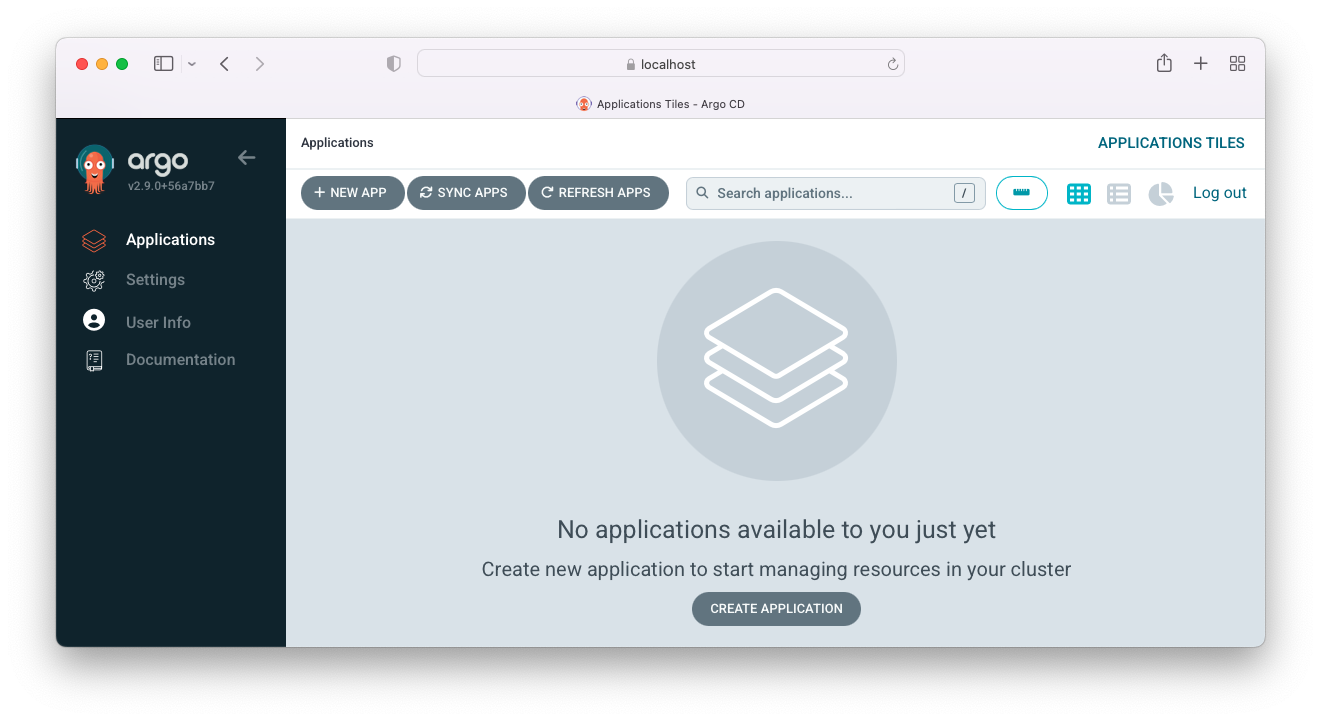

Now we can access the API server at https://localhost:8080:

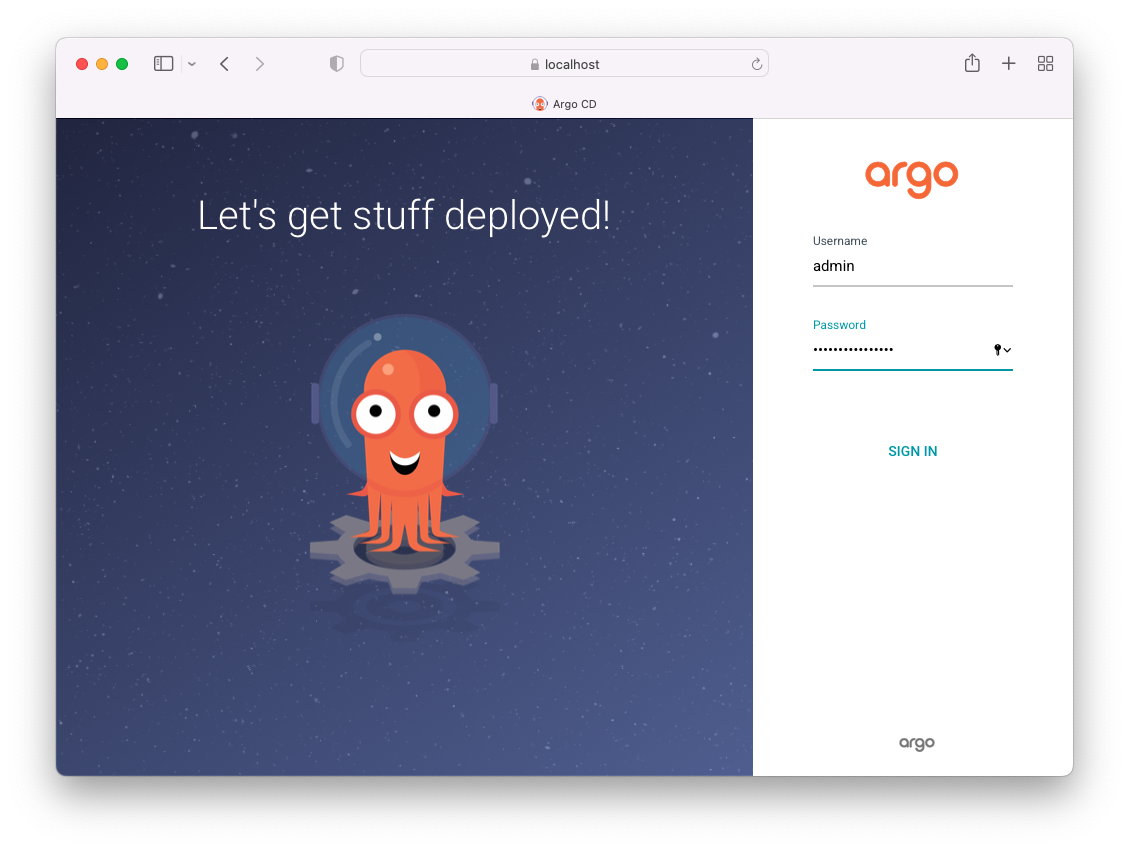

The username for Argo CD is admin. The initial password is stored in a Secret named argocd-initial-admin-secret in the "argocd" namespace. Kubernetes stores Secret values base64-encoded, so we read the password field (directly or with a jsonpath query) and decode it with base64. Note that base64 is an encoding, not encryption: anyone who can read the Secret can recover the password.

$ kubectl get secret argocd-initial-admin-secret -n argocd -o yaml
apiVersion: v1
data:
  password: UGNKNnNwRXFENXlUQjVhUQ==
kind: Secret
metadata:
  creationTimestamp: "2023-10-23T20:33:14Z"
  name: argocd-initial-admin-secret
  namespace: argocd
  resourceVersion: "198446"
  uid: 9d7e04a9-408e-4e11-aeae-1f0bfdd0cbb7
type: Opaque

$ echo UGNKNnNwRXFENXlUQjVhUQ== | base64 --decode
PcJ6spEqD5yTB5aQ

Or

$ kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

PcJ6spEqD5yTB5aQ
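To avoid copying the password by hand, the jsonpath one-liner above can be wrapped in a small function and captured in a variable. This is only a sketch; the function name argocd_initial_password is hypothetical.

```shell
# Hypothetical helper: print the decoded initial admin password.
# Reads the base64-encoded .data.password field from the Secret and decodes it.
argocd_initial_password() {
  kubectl -n argocd get secret argocd-initial-admin-secret \
    -o jsonpath='{.data.password}' | base64 -d
  echo   # the decoded value has no trailing newline, so add one
}
```

For example, ARGOCD_PASSWORD="$(argocd_initial_password)" keeps the value out of the terminal scrollback.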

So, in our case, username = admin and password = PcJ6spEqD5yTB5aQ. Log in with these credentials:

Now we want to create an application for our project on ArgoCD.

Note that we're going to deploy the application internally, that is, into the same cluster (minikube) that Argo CD is running in.

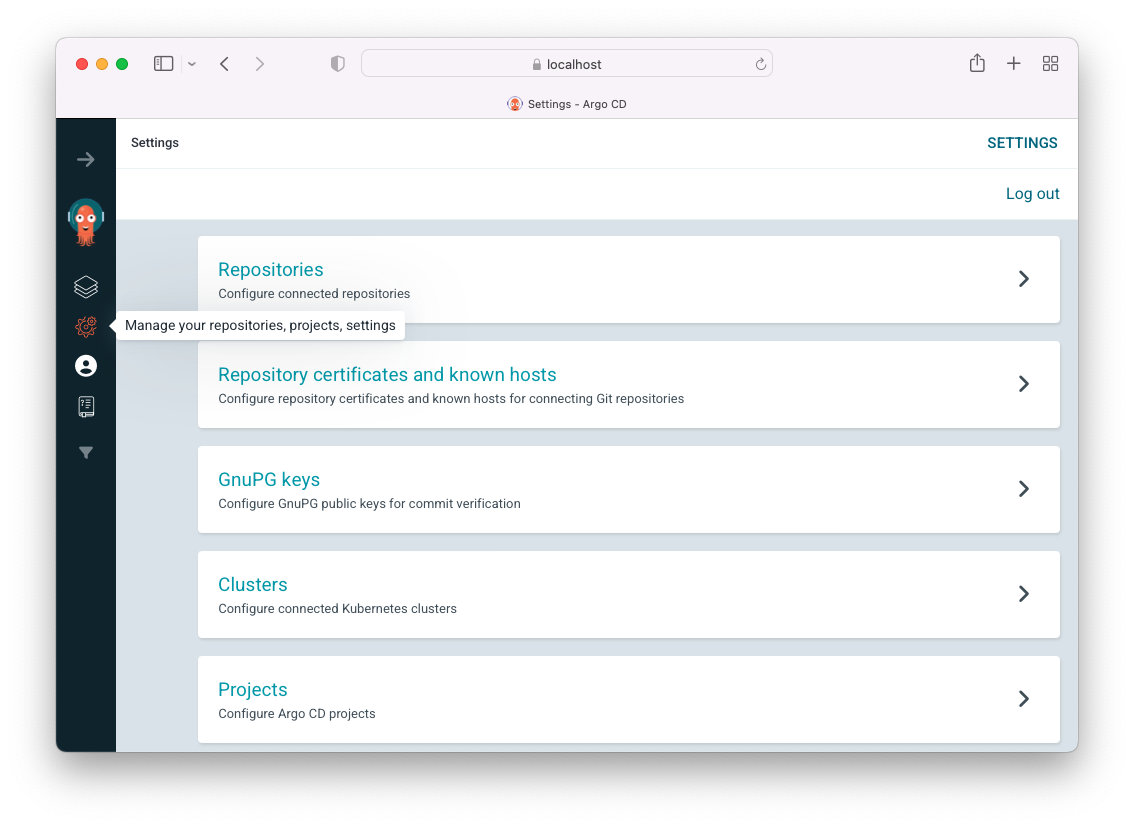

After logging in, click "Manage your repositories, projects, settings" in the left panel:

Click on Repositories.

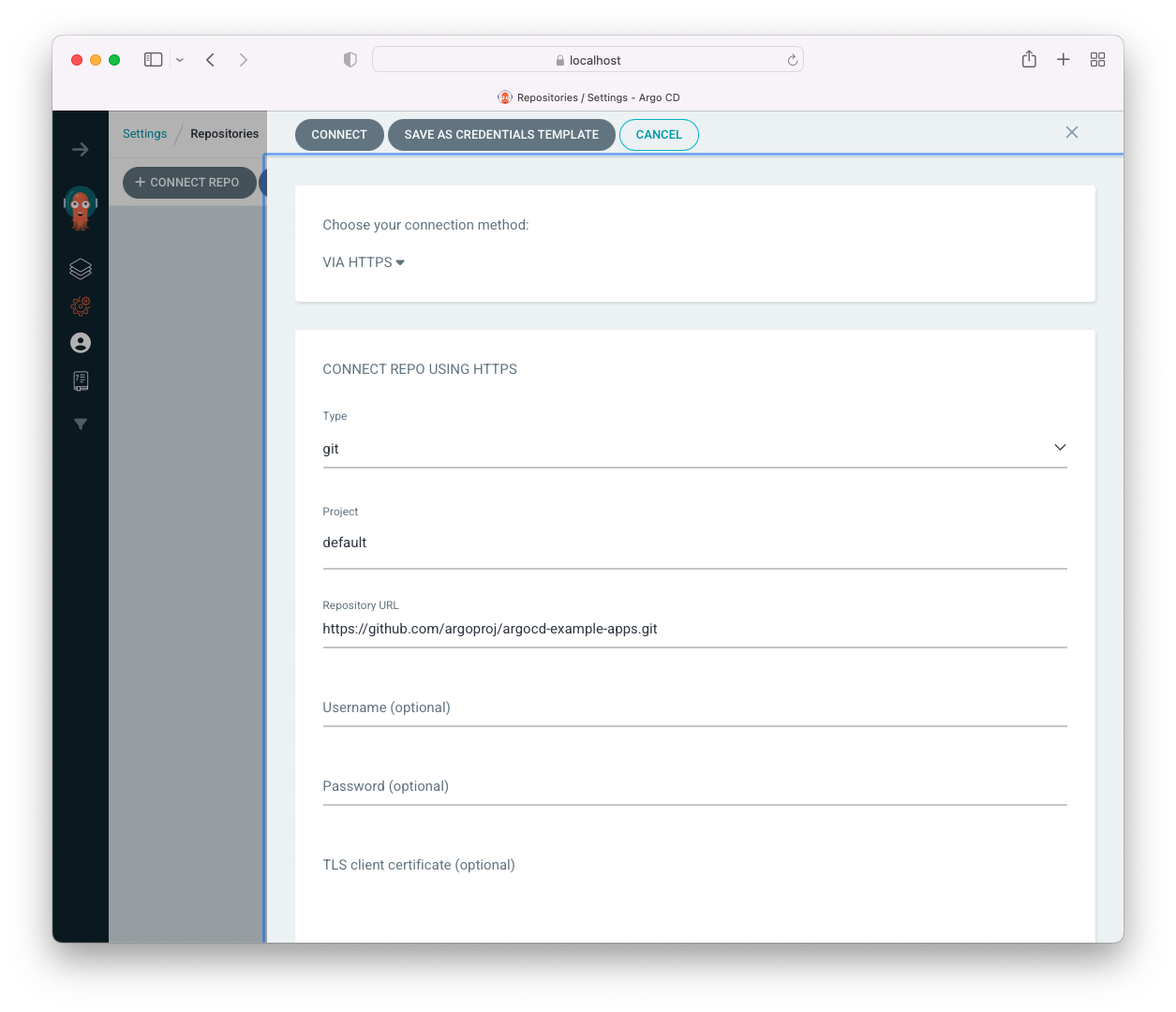

We can use either an HTTPS or an SSH link to connect our repository to ArgoCD, but for a public repository we just add the repo URL (https://github.com/argoproj/argocd-example-apps.git):

Click "Connect":

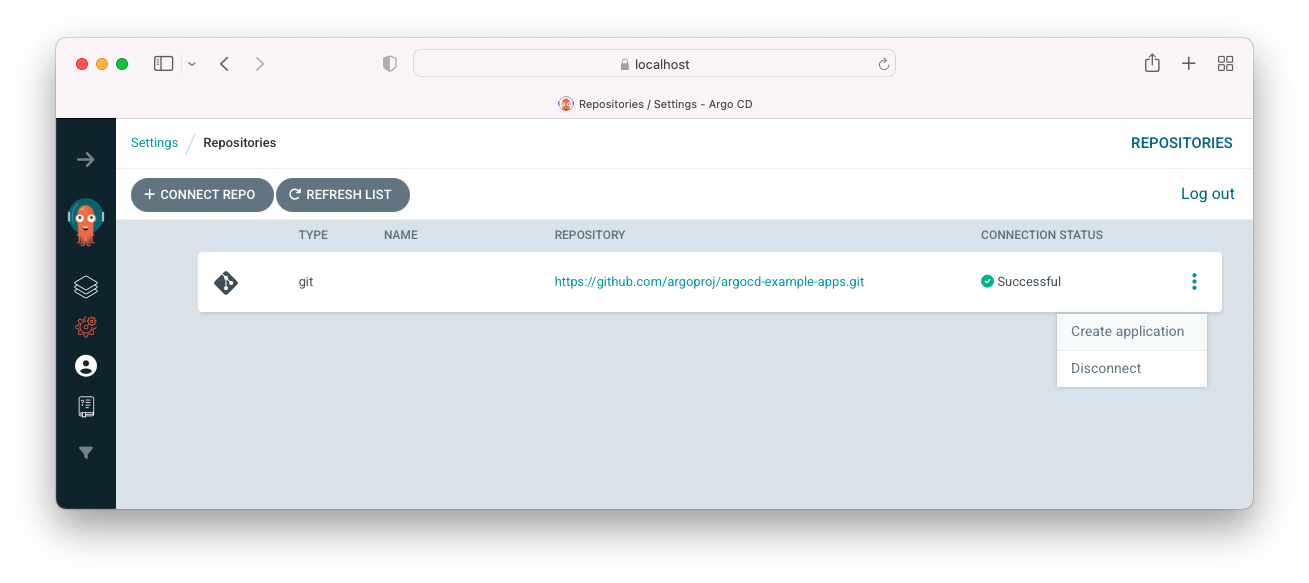

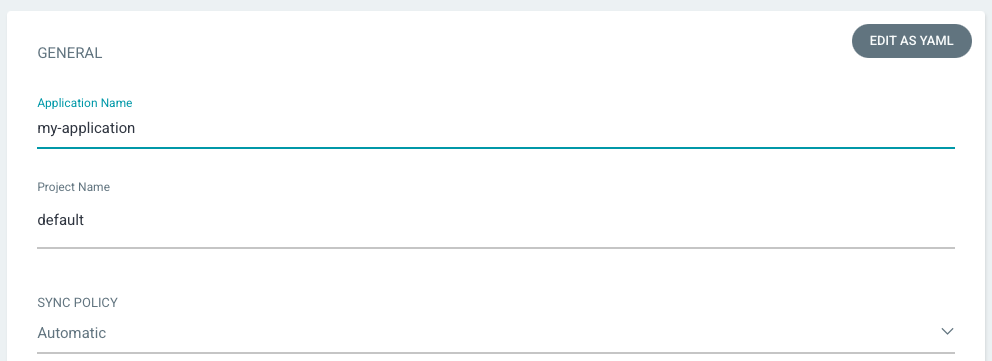

We should get a "Successful" message for the "Connection Status". Now that we're connected to the GitHub repository, we want to create a new app from it. Click on the three dots and then "Create application". Enter "my-application" as the application name, choose "default" for Project Name, and choose "Automatic" for Sync Policy:

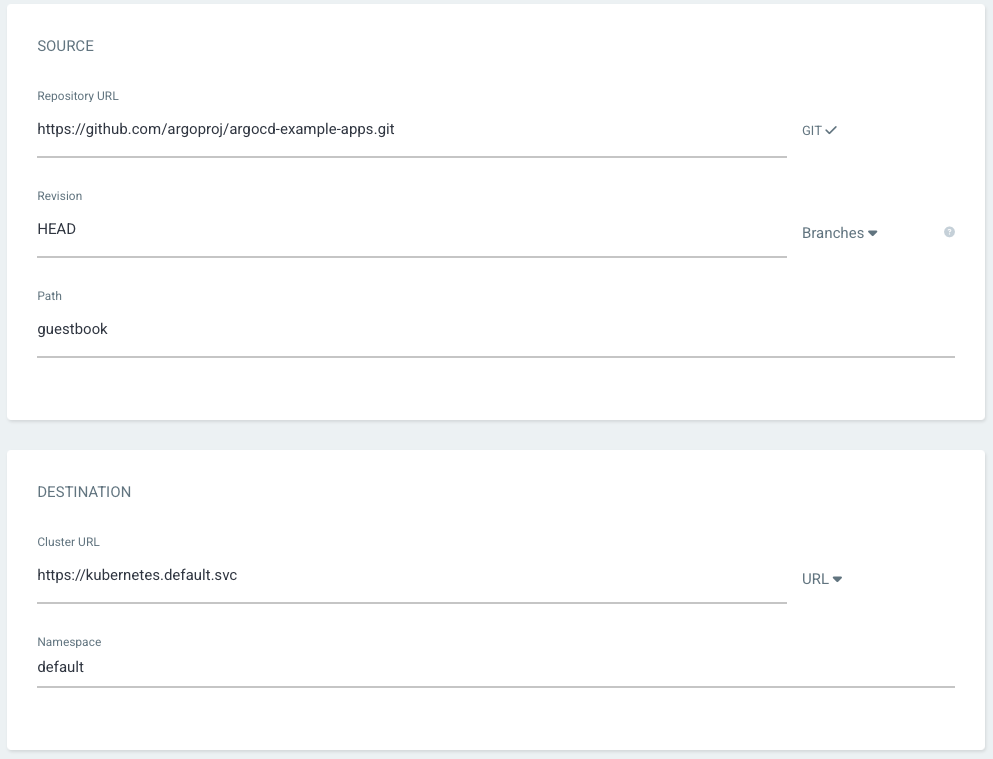

Leave the revision as "HEAD" and set the path to "guestbook". For Destination, set the cluster URL to https://kubernetes.default.svc (or in-cluster for the cluster name) and the namespace to default:

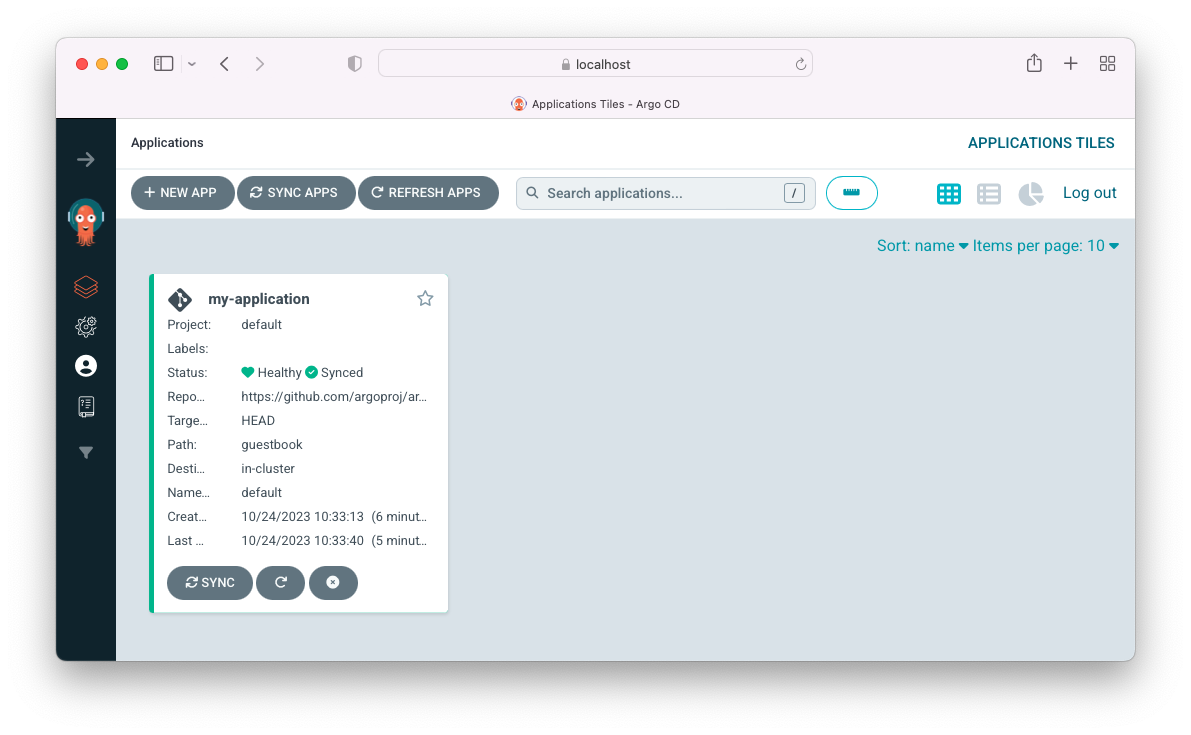

After filling out the information above, click Create at the top of the UI to create the guestbook application:

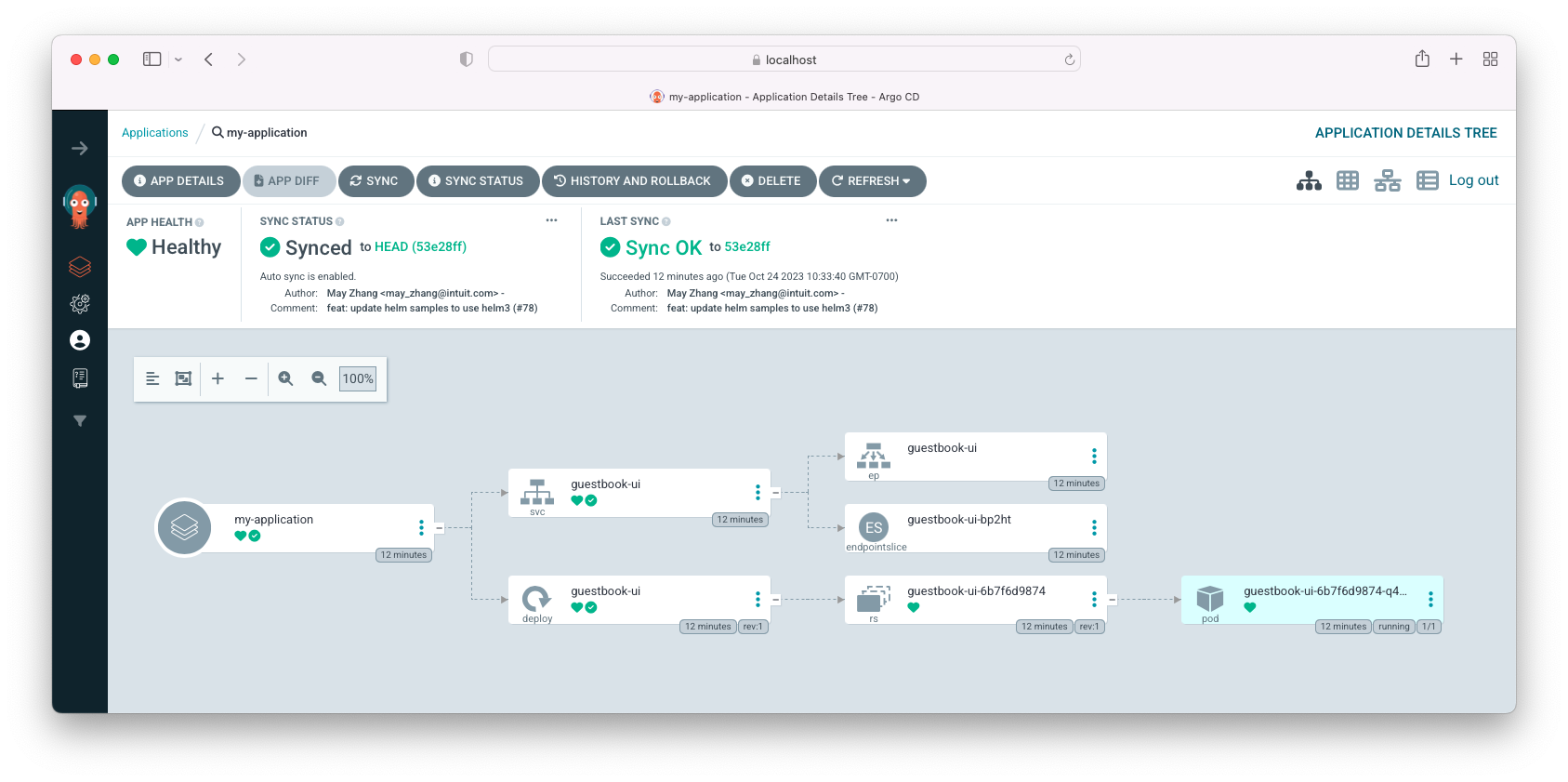

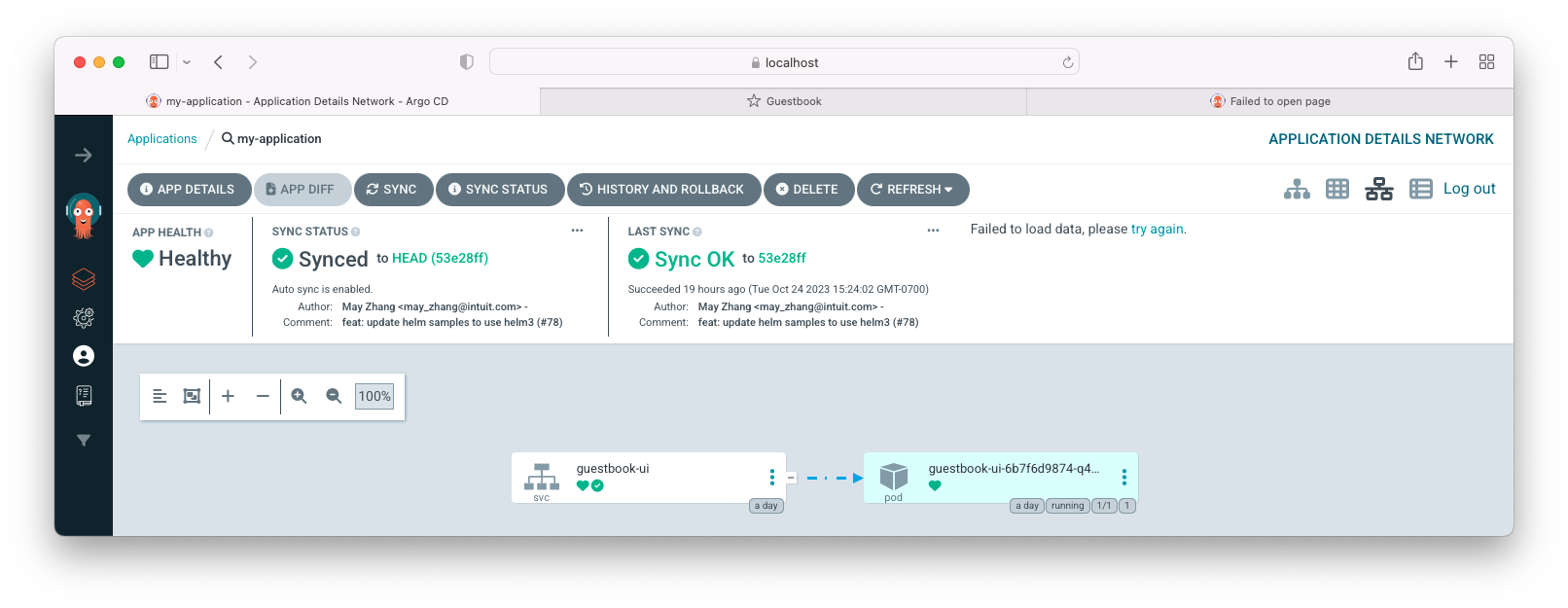

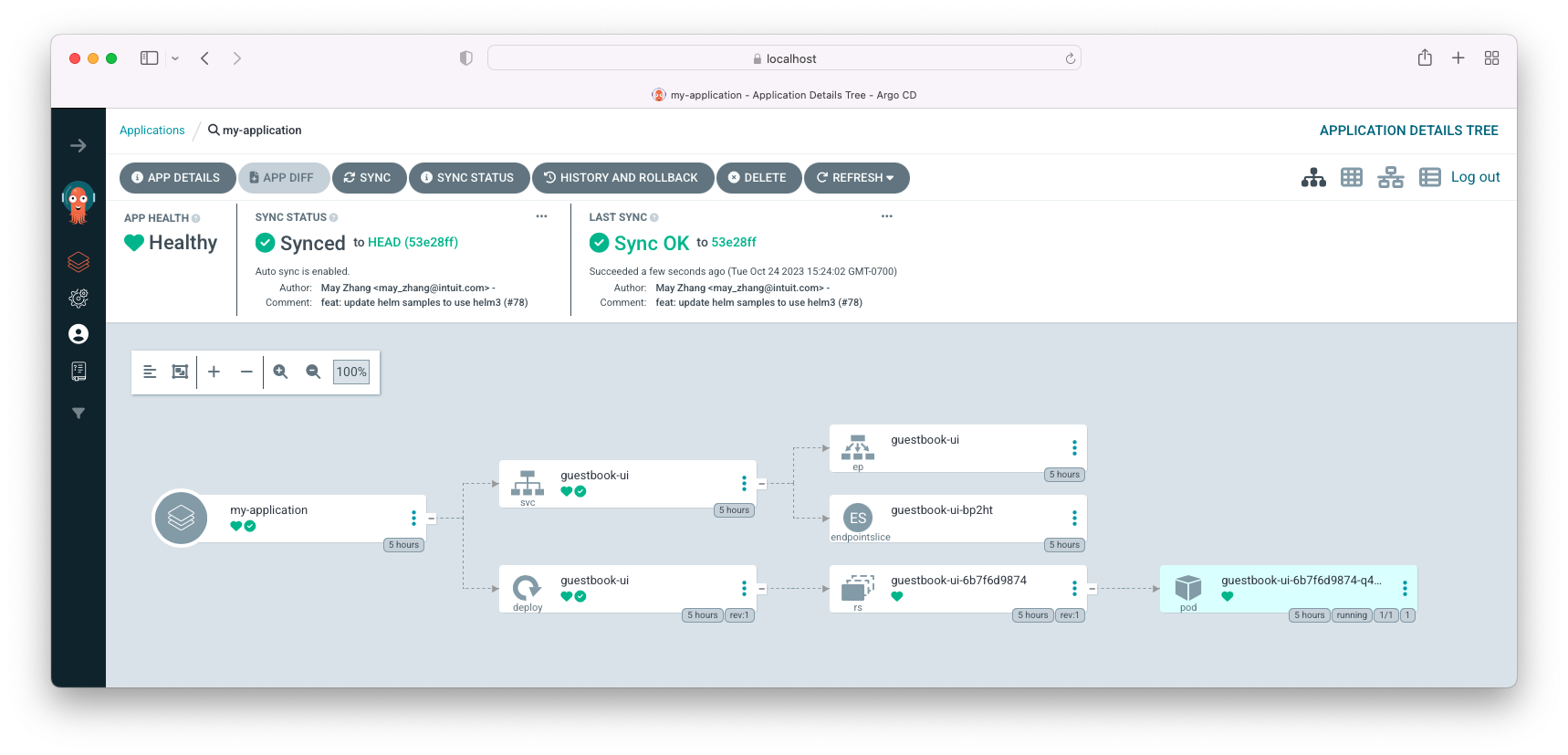
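The same form values can also be passed to the argocd CLI instead of the UI. The sketch below assumes the argocd CLI is already installed and logged in to localhost:8080; the wrapper name create_guestbook_app is hypothetical, while the values are the ones entered in the form above.

```shell
# Hypothetical wrapper around "argocd app create" using the values from the UI form.
create_guestbook_app() {
  argocd app create my-application \
    --project default \
    --repo https://github.com/argoproj/argocd-example-apps.git \
    --revision HEAD \
    --path guestbook \
    --dest-server https://kubernetes.default.svc \
    --dest-namespace default \
    --sync-policy automated
}
```

Running create_guestbook_app should leave an application equivalent to the one created through the UI.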

To get a detailed view of the application, click anywhere on the app:

We created the app using the ArgoCD UI, but we could have created it from a configuration file (application.yaml) instead, with kubectl apply -f application.yaml.

Curious about the YAML for "my-application"?
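A minimal sketch of what such an application.yaml could look like, written out with a shell heredoc. The spec values mirror what was entered in the UI (and what the CLI reports for the app later in this post); treat the file as an illustration rather than an exact export.

```shell
# Write a declarative Application manifest equivalent to the UI form.
cat > application.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-application
  namespace: argocd          # Application objects live in the Argo CD namespace
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    server: https://kubernetes.default.svc   # same cluster Argo CD runs in
    namespace: default
  syncPolicy:
    automated: {}            # "Automatic" sync policy from the UI
EOF
```

Because metadata.namespace is set to argocd in the manifest, kubectl apply -f application.yaml creates the Application where Argo CD looks for it.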

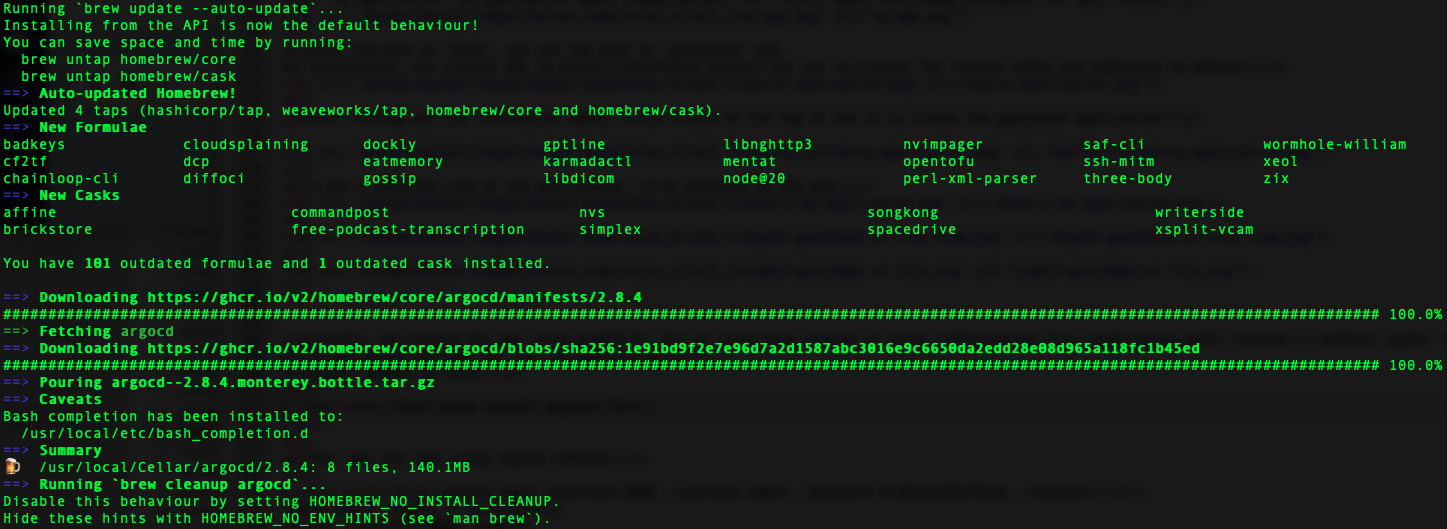

Install the ArgoCD CLI locally:

$ brew install argocd

Then, get the YAML using the argocd command:

$ argocd login localhost:8080 --username admin --password PcJ6spEqD5yTB5aQ --insecure

'admin:login' logged in successfully

Context 'localhost:8080' updated

$ argocd app get my-application -o yaml --server localhost:8080 --insecure

metadata:
  creationTimestamp: "2023-10-24T17:33:13Z"
  generation: 76
  managedFields:
  - apiVersion: argoproj.io/v1alpha1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        .: {}
        f:destination:
          .: {}
          f:namespace: {}
          f:server: {}
        f:project: {}
        f:source:
          .: {}
          f:path: {}
          f:repoURL: {}
          f:targetRevision: {}
        f:syncPolicy:
          .: {}
          f:automated: {}
      f:status:
        .: {}
        f:health: {}
        f:summary: {}
        f:sync:
          .: {}
          f:comparedTo:
            .: {}
            f:destination: {}
            f:source: {}
    manager: argocd-server
    operation: Update
    time: "2023-10-24T17:33:13Z"
  - apiVersion: argoproj.io/v1alpha1
    fieldsType: FieldsV1
    fieldsV1:
      f:status:
        f:controllerNamespace: {}
        f:health:
          f:status: {}
        f:history: {}
        f:operationState:
          .: {}
          f:finishedAt: {}
          f:message: {}
          f:operation:
            .: {}
            f:initiatedBy:
              .: {}
              f:automated: {}
            f:retry:
              .: {}
              f:limit: {}
            f:sync:
              .: {}
              f:revision: {}
          f:phase: {}
          f:startedAt: {}
          f:syncResult:
            .: {}
            f:resources: {}
            f:revision: {}
            f:source:
              .: {}
              f:path: {}
              f:repoURL: {}
              f:targetRevision: {}
        f:reconciledAt: {}
        f:resources: {}
        f:sourceType: {}
        f:summary:
          f:images: {}
        f:sync:
          f:comparedTo:
            f:destination:
              f:namespace: {}
              f:server: {}
            f:source:
              f:path: {}
              f:repoURL: {}
              f:targetRevision: {}
          f:revision: {}
          f:status: {}
    manager: argocd-application-controller
    operation: Update
    time: "2023-10-24T21:23:10Z"
  name: my-application
  namespace: argocd
  resourceVersion: "234760"
  uid: 3b8d2e9b-37ad-4bfd-9db2-557f44bf08f5
spec:
  destination:
    namespace: default
    server: https://kubernetes.default.svc
  project: default
  source:
    path: guestbook
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
  syncPolicy:
    automated: {}
status:
  controllerNamespace: argocd
  health:
    status: Healthy
  history:
  - deployStartedAt: "2023-10-24T17:33:36Z"
    deployedAt: "2023-10-24T17:33:39Z"
    id: 0
    revision: 53e28ff20cc530b9ada2173fbbd64d48338583ba
    source:
      path: guestbook
      repoURL: https://github.com/argoproj/argocd-example-apps.git
      targetRevision: HEAD
  operationState:
    finishedAt: "2023-10-24T17:33:40Z"
    message: successfully synced (all tasks run)
    operation:
      initiatedBy:
        automated: true
      retry:
        limit: 5
      sync:
        revision: 53e28ff20cc530b9ada2173fbbd64d48338583ba
    phase: Succeeded
    startedAt: "2023-10-24T17:33:36Z"
    syncResult:
      resources:
      - group: ""
        hookPhase: Running
        kind: Service
        message: service/guestbook-ui created
        name: guestbook-ui
        namespace: default
        status: Synced
        syncPhase: Sync
        version: v1
      - group: apps
        hookPhase: Running
        kind: Deployment
        message: deployment.apps/guestbook-ui created
        name: guestbook-ui
        namespace: default
        status: Synced
        syncPhase: Sync
        version: v1
      revision: 53e28ff20cc530b9ada2173fbbd64d48338583ba
      source:
        path: guestbook
        repoURL: https://github.com/argoproj/argocd-example-apps.git
        targetRevision: HEAD
  reconciledAt: "2023-10-24T21:23:10Z"
  resources:
  - health:
      status: Healthy
    kind: Service
    name: guestbook-ui
    namespace: default
    status: Synced
    version: v1
  - group: apps
    health:
      status: Healthy
    kind: Deployment
    name: guestbook-ui
    namespace: default
    status: Synced
    version: v1
  sourceType: Directory
  summary:
    images:
    - gcr.io/heptio-images/ks-guestbook-demo:0.2
  sync:
    comparedTo:
      destination:
        namespace: default
        server: https://kubernetes.default.svc
      source:
        path: guestbook
        repoURL: https://github.com/argoproj/argocd-example-apps.git
        targetRevision: HEAD
    revision: 53e28ff20cc530b9ada2173fbbd64d48338583ba
    status: Synced

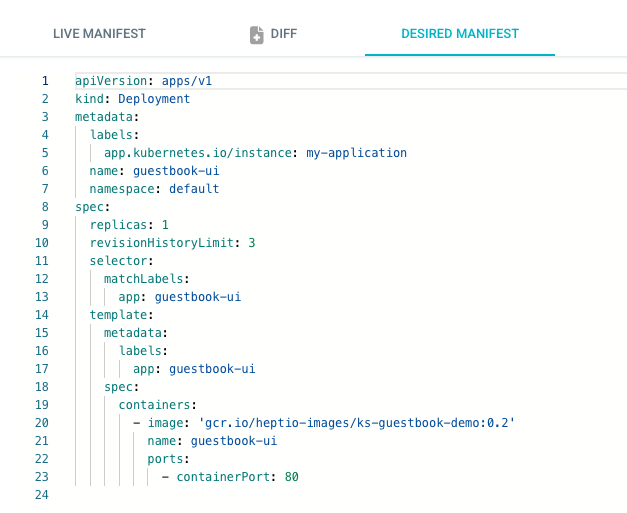

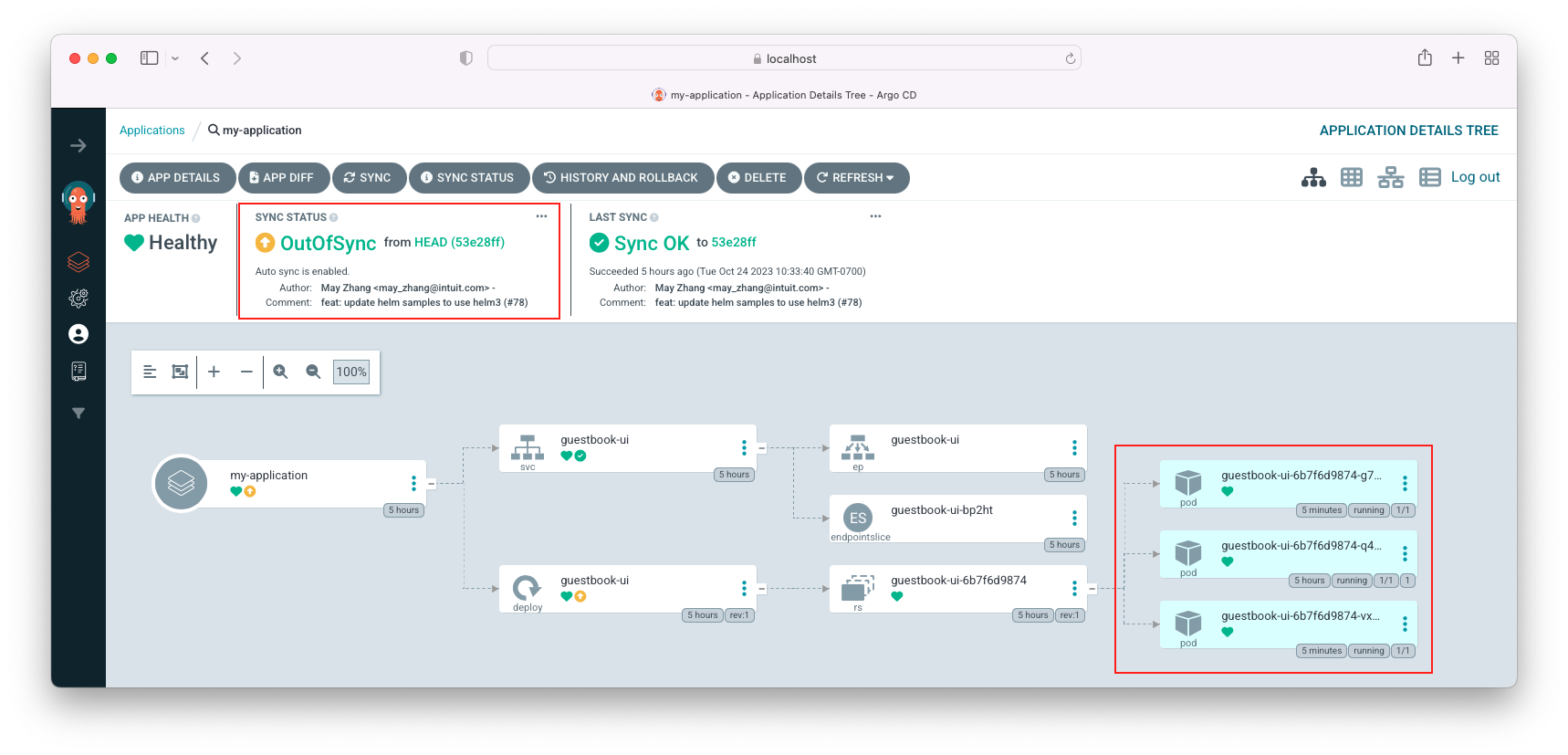

To see how self-healing works, we'll modify the live manifest.

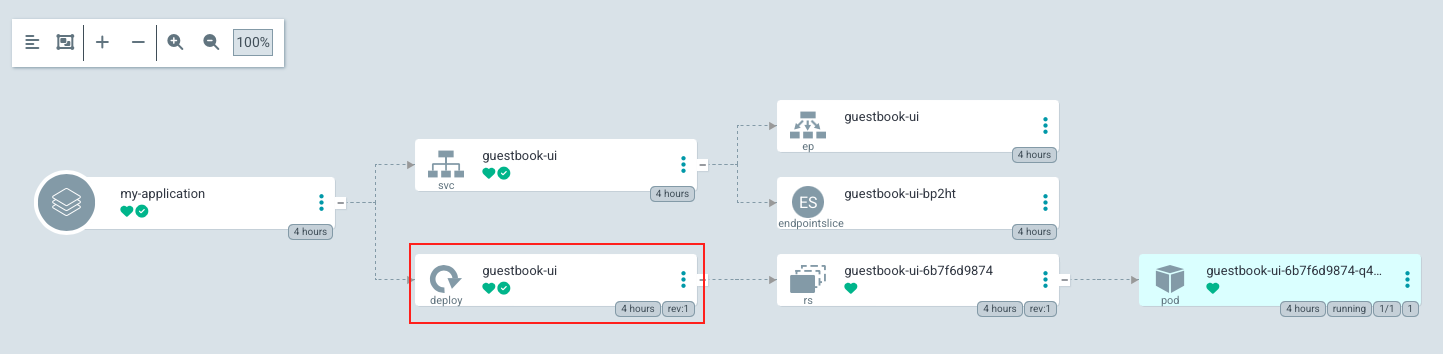

First, click the "deployment" for guestbook-ui:

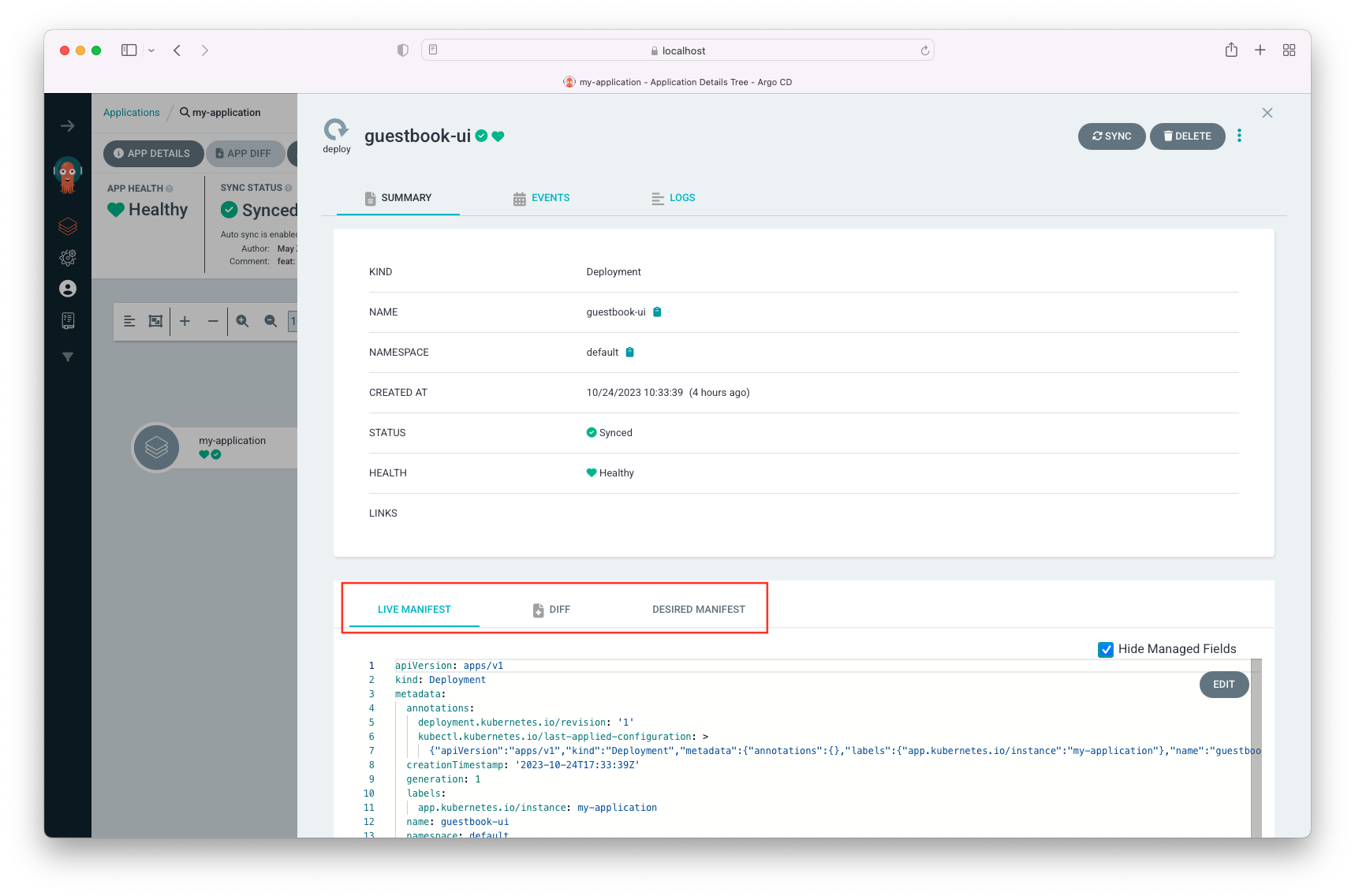

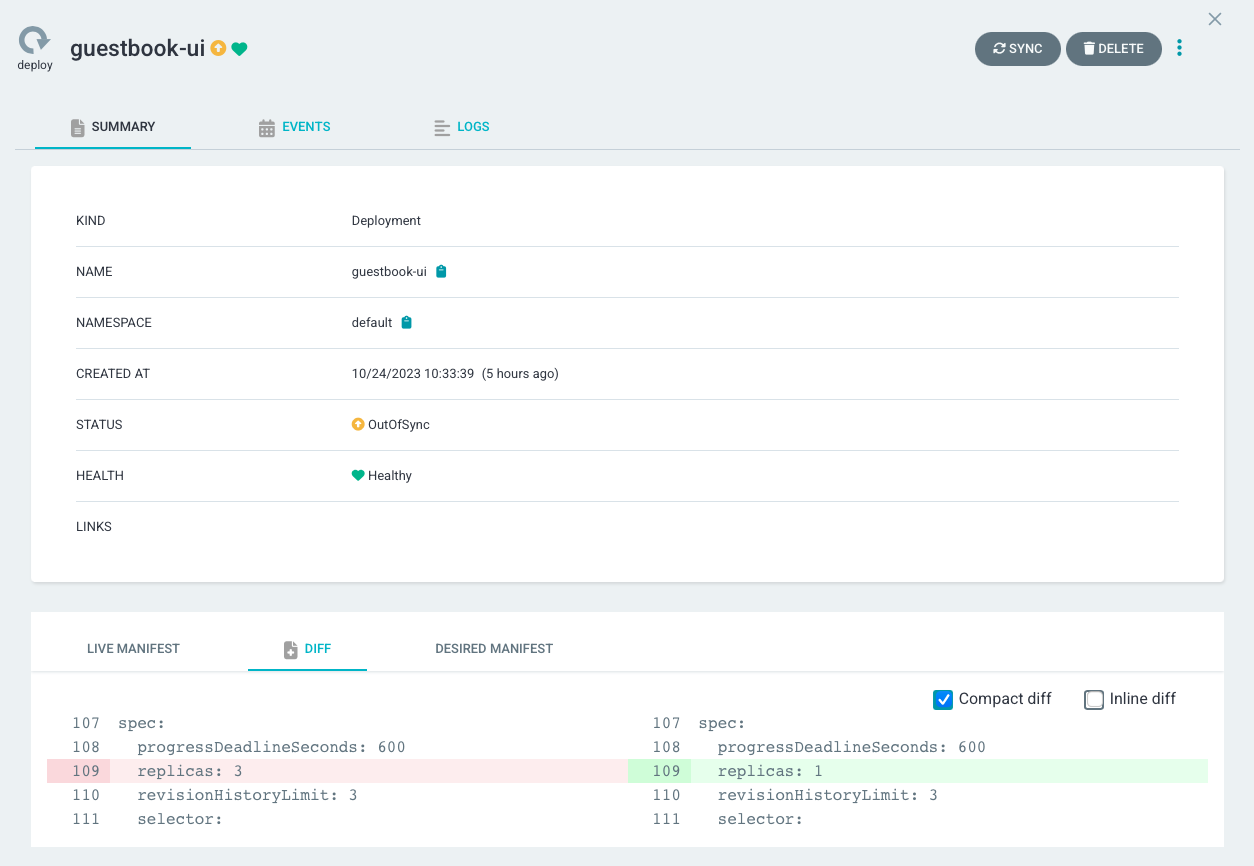

Then, we see two manifests (LIVE and DESIRED) with the DIFF in the middle:

Because the DESIRED MANIFEST is the source of truth, if we modify the LIVE manifest so that it differs from the DESIRED MANIFEST, ArgoCD's self-heal should restore it to what is declared in the DESIRED MANIFEST.

Let's set replicas = 3 on the live deployment:

$ kubectl scale deployment -n default guestbook-ui --replicas=3
deployment.apps/guestbook-ui scaled

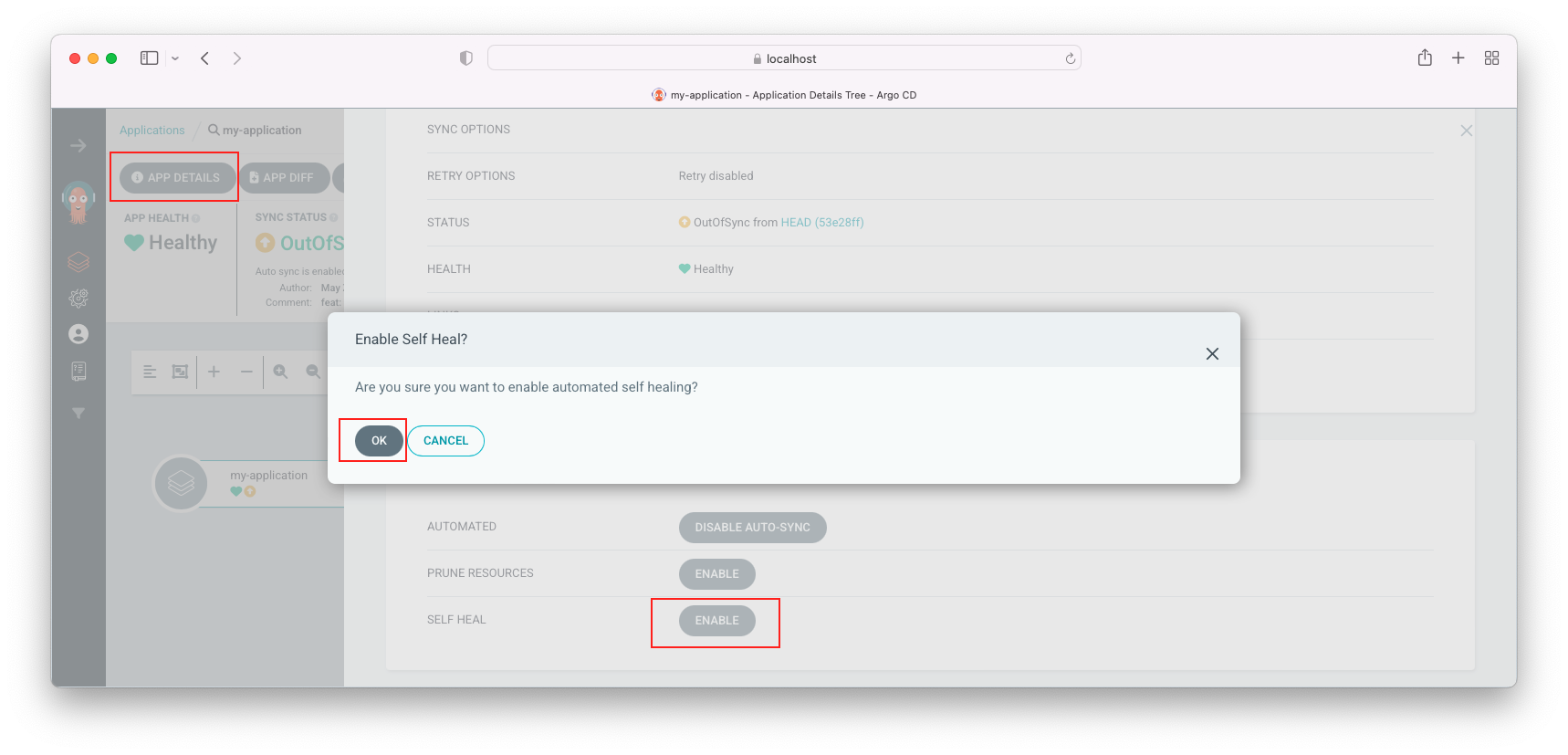

Click "Enable" => "OK":

We can see that our deployment is synced back to our Git repository (desired manifest, replicas = 1)!

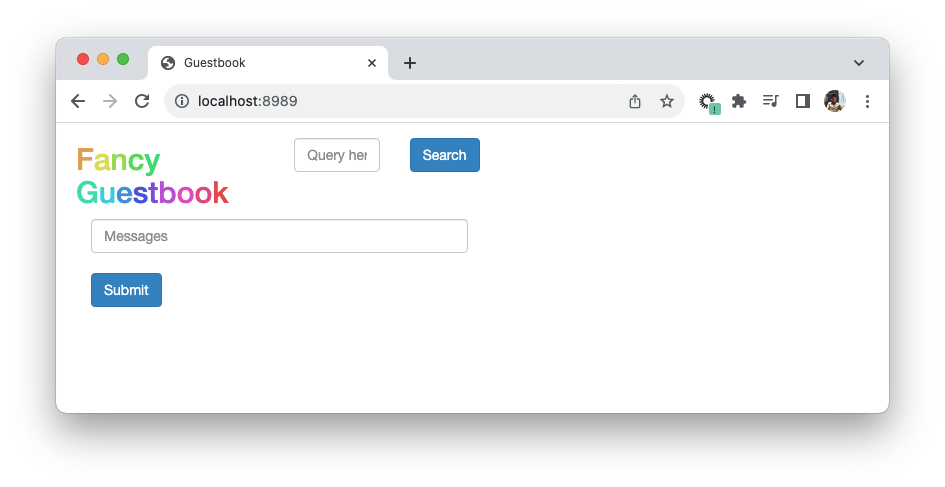

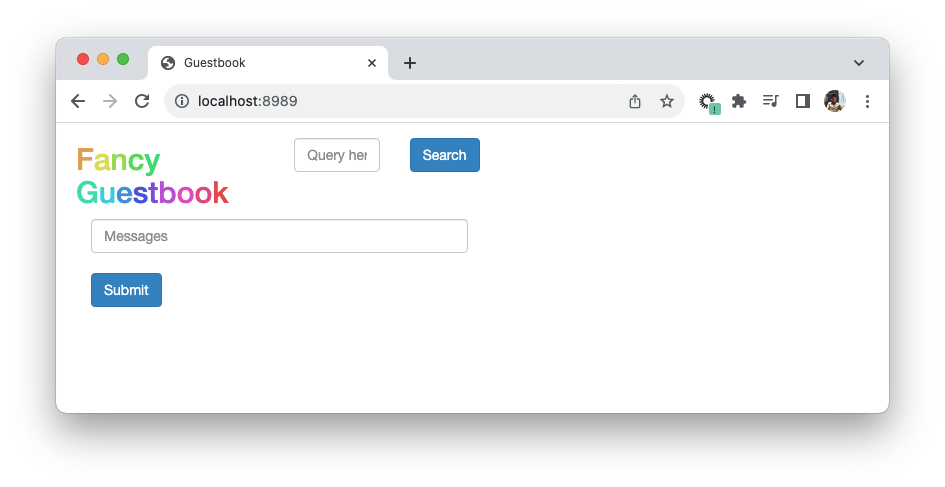
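Self-heal can also be switched on and verified from the command line. The sketch below assumes the argocd CLI is installed and logged in; the helper names enable_self_heal and guestbook_replicas are hypothetical.

```shell
# Hypothetical helper: turn on self-heal for the automated sync policy.
enable_self_heal() {
  argocd app set my-application --self-heal
}

# Hypothetical helper: print the replica count of the live guestbook-ui deployment.
guestbook_replicas() {
  kubectl get deployment guestbook-ui -n default -o jsonpath='{.spec.replicas}'
}
```

After enable_self_heal, scaling the deployment to 3 replicas and then calling guestbook_replicas a little later should show the count back at the value declared in Git.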

By the way, how can we access the guestbook?

We can use kubectl port-forward to create a tunnel that forwards traffic from our local machine to the ClusterIP service in the Minikube cluster:

$ kubectl get svc | grep guestbook
guestbook-ui   ClusterIP   10.107.98.229   <none>   80/TCP   2h

$ kubectl get pods --all-namespaces | grep guestbook-ui
default   guestbook-ui-6b7f6d9874-q4nz7   1/1   Running   1 (18h ago)   2h

$ kubectl port-forward -n default svc/guestbook-ui 8989:80

Open a web browser and go to http://localhost:8989:

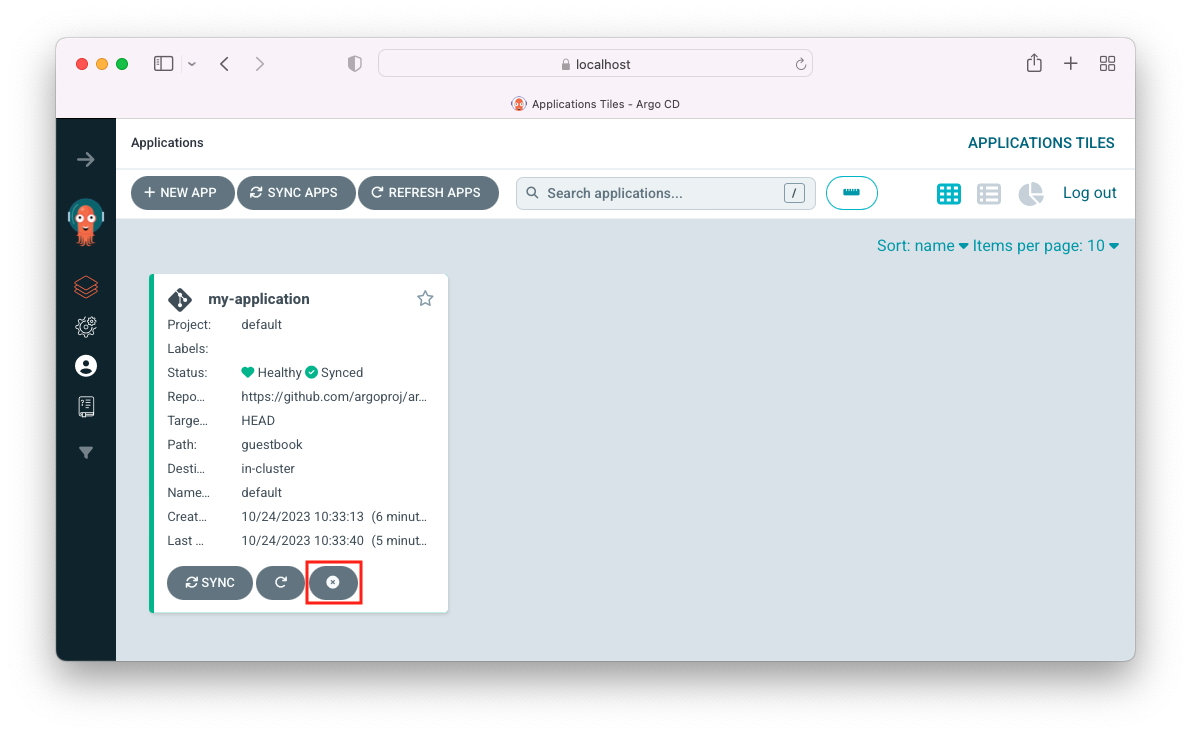

To delete the application, click (x):

As we saw in the previous section, we can delete the app using the UI.

In this section, we'll delete it using the ArgoCD CLI.

$ argocd login localhost:8080
WARNING: server certificate had error: tls: failed to verify certificate: x509: certificate signed by unknown authority. Proceed insecurely (y/n)? y
Username: admin
Password:
'admin:login' logged in successfully
Context 'localhost:8080' updated

$ argocd app list
NAME                   CLUSTER                         NAMESPACE  PROJECT  STATUS  HEALTH   SYNCPOLICY  CONDITIONS  REPO                                                 PATH       TARGET
argocd/my-application  https://kubernetes.default.svc  default    default  Synced  Healthy  Auto        <none>      https://github.com/argoproj/argocd-example-apps.git  guestbook  HEAD

$ argocd app delete my-application
Are you sure you want to delete 'my-application' and all its resources? [y/n] y
application 'my-application' deleted
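For scripted cleanup, the confirmation prompt can be skipped. A minimal sketch, assuming the argocd CLI is already logged in; the wrapper name delete_guestbook_app is hypothetical.

```shell
# Hypothetical wrapper: delete the application and its resources without prompting.
delete_guestbook_app() {
  argocd app delete my-application --yes
}
```

Since this removes the guestbook Service and Deployment along with the Application, it is best kept for throwaway clusters such as this Minikube setup.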


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization