Docker & Kubernetes : Nginx Ingress Controller on GCP Kubernetes

The NGINX Ingress Controller is built around the Kubernetes Ingress resource, using a ConfigMap to store the NGINX configuration.

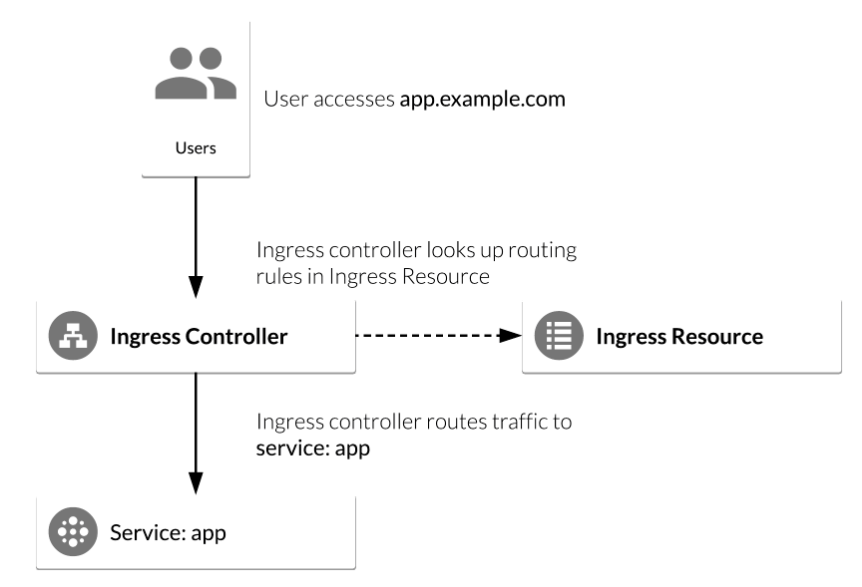

Ingress gives external users and outside client applications access to HTTP services. It consists of two components, an Ingress resource and an Ingress controller, and both must be properly configured so that traffic can be routed from an outside client to a Kubernetes Service.

Ingress with NGINX controller on Google Kubernetes Engine

- Ingress resource is a collection of rules for the inbound traffic to reach Services. These are Layer 7 (L7) rules that allow hostnames (and optionally paths) to be directed to specific Services in Kubernetes.

- Ingress controller acts upon the rules set by the Ingress Resource, typically via an HTTP or L7 load balancer.

In this post, we're going to configure a Kubernetes deployment with an Ingress Resource with NGINX as an Ingress Controller:

- Deploy a simple Kubernetes web application.

- Deploy an NGINX Ingress Controller using a stable Helm Chart.

- Deploy an Ingress Resource for the application that uses NGINX Ingress as the controller.

- Test NGINX Ingress functionality by accessing the Google Cloud L4 (TCP/UDP) Load Balancer frontend IP and ensure it can access the web application.

Google Cloud Shell comes loaded with development tools, offers a persistent 5GB home directory, and runs on Google Cloud. It provides command-line access to our GCP resources. To activate the shell, click the Open Cloud Shell button on the top right toolbar of the GCP console.

In the dialog box that opens, click "START CLOUD SHELL".

gcloud is the command-line tool for Google Cloud Platform. It comes pre-installed on Cloud Shell and supports tab-completion.

Set our zone:

$ gcloud config set compute/zone us-central1-a

Updated property [compute/zone].

Run the following command to create a Kubernetes cluster:

$ gcloud container clusters create nginx-demo --num-nodes 2

...

kubeconfig entry generated for nginx-demo.

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

nginx-demo us-central1-a 1.11.6-gke.2 35.224.213.155 n1-standard-1 1.11.6-gke.2 2 RUNNING

Helm is a tool that streamlines Kubernetes application installation and management. We can think of it as apt, yum, or homebrew for Kubernetes. In Helm 2, the version used in this post, it consists of two parts: Tiller, the server, which runs inside the Kubernetes cluster and manages releases (installations) of our Helm Charts, and Helm, the client.

The Helm project provides an installer script that automatically grabs the latest version of the Helm client and installs it locally. So, let's get the script:

$ curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get > get_helm.sh

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 7234 100 7234 0 0 37172 0 --:--:-- --:--:-- --:--:-- 37288

Install the Helm client:

$ chmod 700 get_helm.sh

$ ./get_helm.sh

Downloading https://kubernetes-helm.storage.googleapis.com/helm-v2.12.3-linux-amd64.tar.gz

Preparing to install helm and tiller into /usr/local/bin

helm installed into /usr/local/bin/helm

tiller installed into /usr/local/bin/tiller

Run 'helm init' to configure helm.

$ helm init

...

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

...

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

...

RBAC is enabled by default with Kubernetes v1.8+. Before Tiller can be used, we need to make sure that the correct "ServiceAccount" and "ClusterRoleBinding" are configured for the tiller service. This allows Tiller to install services in the default namespace.

Let's install the server-side tiller with RBAC enabled:

$ kubectl create serviceaccount --namespace kube-system tiller

serviceaccount "tiller" created

$ kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

clusterrolebinding.rbac.authorization.k8s.io "tiller-cluster-rule" created

$ kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

deployment.extensions "tiller-deploy" patched
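The ServiceAccount and ClusterRoleBinding created by the two `kubectl create` commands above can also be kept as a manifest, which is easier to review and put under version control. The following is a minimal sketch under that assumption; the file name `tiller-rbac.yaml` is a hypothetical choice, while the object names mirror the commands above.

```shell
# Write a manifest equivalent to the two `kubectl create` commands above.
# The file name tiller-rbac.yaml is an arbitrary choice for this sketch.
cat > tiller-rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller-cluster-rule
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system
EOF

# Apply it once kubectl points at the cluster:
#   kubectl apply -f tiller-rbac.yaml
```

Binding Tiller to `cluster-admin` is convenient for a demo cluster but very broad; a narrower role may be preferable elsewhere.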

Now, let's initialize Helm with our newly-created service account:

$ helm init --service-account tiller --upgrade

...

Tiller (the Helm server-side component) has been upgraded to the current version.

...

We can see if tiller is running by checking for the tiller-deploy Deployment in the kube-system namespace:

$ kubectl get deployments -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

event-exporter-v0.2.3 1 1 1 1 5m

fluentd-gcp-scaler 1 1 1 1 5m

heapster-v1.6.0-beta.1 1 1 1 1 5m

kube-dns 2 2 2 2 5m

kube-dns-autoscaler 1 1 1 1 5m

l7-default-backend 1 1 1 1 5m

metrics-server-v0.2.1 1 1 1 1 5m

tiller-deploy 1 1 1 1 1m
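The Tiller setup above applies to Helm 2 only. Helm 3 removed Tiller and `helm init`, dropped the `--name` flag of `helm install`, and the `stable` chart repository has since been deprecated. For readers on Helm 3, the controller install later in this post might look roughly like the sketch below. It assumes a Helm 3 client on the PATH and uses the community `ingress-nginx` chart repository; the function name is hypothetical.

```shell
# Sketch for Helm 3 (assumption: helm v3 is on PATH; no Tiller, no `helm init`).
# Helm 3 takes the release name as a positional argument instead of --name.
# $1 is the release name and defaults to the one used in this post.
install_nginx_ingress_helm3() {
  release="${1:-nginx-ingress}"
  helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx &&
    helm repo update &&
    helm install "$release" ingress-nginx/ingress-nginx
}

# Usage, once kubectl points at the cluster:
#   install_nginx_ingress_helm3
```

With this chart the Service and Deployment names will likely differ from the ones shown in this post, so it is worth checking them with `kubectl get services` before running the later commands.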

Now that we have Helm configured, let's deploy a simple web-based application from the Google Container Registry (gcr.io). This application will be used as the backend for the Ingress:

$ kubectl run hello-app --image=gcr.io/google-samples/hello-app:1.0 --port=8080

deployment.apps "hello-app" created

Now expose the hello-app Deployment as a Service:

$ kubectl expose deployment hello-app

service "hello-app" exposed
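On kubectl 1.18 and later, `kubectl run` creates a bare Pod rather than a Deployment, so the two commands above no longer behave as shown. A minimal sketch of an equivalent for newer clients follows; the function name is hypothetical, and the port is passed to `kubectl expose` explicitly because the Deployment created this way does not declare a container port.

```shell
# Sketch: create the Deployment and its ClusterIP Service on newer kubectl
# versions, where `kubectl run` no longer creates a Deployment.
# The Service forwards port 8080 to container port 8080 of hello-app.
deploy_hello_app() {
  kubectl create deployment hello-app \
    --image=gcr.io/google-samples/hello-app:1.0 &&
    kubectl expose deployment hello-app --port=8080 --target-port=8080
}

# Usage:
#   deploy_hello_app
```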

The NGINX controller must be exposed for external access. This is done using Service type: LoadBalancer on the NGINX controller service.

On Kubernetes Engine, this creates a Google Cloud Network (TCP/IP) Load Balancer with NGINX controller Service as a backend.

Google Cloud also creates the appropriate firewall rules within the Service's VPC to allow web HTTP(S) traffic to the load balancer frontend IP address.

Let's deploy the NGINX Ingress Controller:

$ helm install --name nginx-ingress stable/nginx-ingress --set rbac.create=true

NAME: nginx-ingress

LAST DEPLOYED: Mon Feb 18 16:26:00 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/ClusterRoleBinding

NAME AGE

nginx-ingress 0s

==> v1beta1/RoleBinding

NAME AGE

nginx-ingress 0s

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-ingress-controller 1 1 1 0 0s

nginx-ingress-default-backend 1 1 1 0 0s

==> v1beta1/ClusterRole

NAME AGE

nginx-ingress 0s

==> v1/ServiceAccount

NAME SECRETS AGE

nginx-ingress 1 0s

==> v1beta1/Role

NAME AGE

nginx-ingress 0s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-ingress-controller LoadBalancer 10.63.243.6 <pending> 80:32654/TCP,443:31594/TCP 1s

nginx-ingress-default-backend ClusterIP 10.63.252.116 <none> 80/TCP 1s

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-ingress-controller 1 1 1 0 1s

nginx-ingress-default-backend 1 1 1 0 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

nginx-ingress-controller-6b464c8b8-94b8g 0/1 ContainerCreating 0 0s

nginx-ingress-default-backend-544cfb69fc-skslh 0/1 ContainerCreating 0 0s

==> v1/ServiceAccount

NAME SECRETS AGE

nginx-ingress 1 1s

==> v1beta1/Role

NAME AGE

nginx-ingress 1s

NOTES:

The nginx-ingress controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace default get services -o wide -w nginx-ingress-controller'

An example Ingress that makes use of the controller:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
      name: example
      namespace: foo
    spec:
      rules:
        - host: www.example.com
          http:
            paths:
              - backend:
                  serviceName: exampleService
                  servicePort: 80
                path: /
      # This section is only required if TLS is to be enabled for the Ingress
      tls:
        - hosts:
            - www.example.com
          secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

    apiVersion: v1
    kind: Secret
    metadata:
      name: example-tls
      namespace: foo
    data:
      tls.crt: <base64 encoded cert>
      tls.key: <base64 encoded key>
    type: kubernetes.io/tls
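The `tls.crt` and `tls.key` values in the Secret above must be base64 encoded, each on a single line. A small sketch of producing such values follows; the helper name `b64_oneline` and the file names in the usage comment are assumptions made for illustration.

```shell
# Encode stdin as single-line base64, suitable for a Secret's `data` field.
# `tr -d '\n'` removes the line wrapping that some base64 implementations add.
b64_oneline() {
  base64 | tr -d '\n'
}

# Hypothetical usage with certificate files named tls.crt and tls.key:
#   b64_oneline < tls.crt
#   b64_oneline < tls.key
```

Alternatively, `kubectl create secret tls example-tls --cert=tls.crt --key=tls.key --namespace foo` builds the same kind of Secret without manual encoding.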

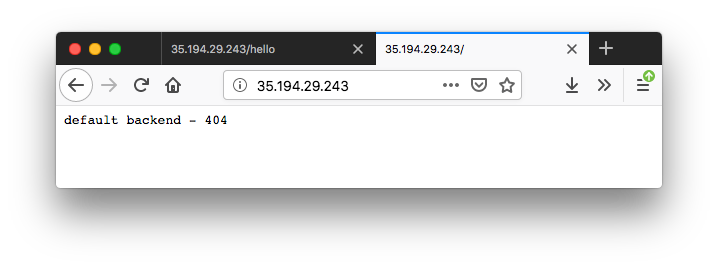

Note the second service, nginx-ingress-default-backend. The default backend is a Service which handles all URL paths and hosts that the NGINX controller doesn't understand (i.e., all requests that are not mapped by an Ingress). The default backend exposes two URLs:

- /healthz that returns 200

- / that returns 404

We may want to wait a few moments while the GCP L4 Load Balancer gets deployed. Confirm that the nginx-ingress-controller Service has been deployed and that we have an external IP address associated with the service by running the following command:

$ kubectl get service nginx-ingress-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-ingress-controller LoadBalancer 10.63.243.6 35.194.29.243 80:32654/TCP,443:31594/TCP 5m
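Rather than re-running the command by hand until the EXTERNAL-IP column changes from `<pending>`, the wait can be scripted. The sketch below polls the Service status with a JSONPath query; the function names, the 10 second interval, and the default of 30 attempts are assumptions, and it relies on the query printing nothing while the address is still pending.

```shell
# Print the external IP of a LoadBalancer Service, or nothing while pending.
lb_ip() {
  kubectl get service "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll until the IP is assigned; gives up after $2 attempts (default 30).
wait_for_lb_ip() {
  attempts="${2:-30}"
  while [ "$attempts" -gt 0 ]; do
    ip="$(lb_ip "$1")"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 10
  done
  return 1
}

# Usage:
#   wait_for_lb_ip nginx-ingress-controller
```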

An Ingress Resource object is a collection of L7 rules for routing inbound traffic to Kubernetes Services. Multiple rules can be defined in one Ingress Resource or they can be split up into multiple Ingress Resource manifests.

The Ingress Resource also determines which controller to utilize to serve traffic.

This can be set with an annotation, kubernetes.io/ingress.class, in the metadata section of the Ingress Resource.

For the NGINX controller, we will use the nginx value as shown below:

    annotations:
      kubernetes.io/ingress.class: nginx

On Kubernetes Engine, if no annotation is defined under the metadata section, the Ingress Resource uses the GCP GCLB L7 load balancer to serve traffic.

Let's create a simple Ingress Resource YAML file which uses the NGINX Ingress Controller and has one path rule defined in ingress-resource.yaml:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: ingress-resource
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/ssl-redirect: "false"
    spec:
      rules:
      - http:
          paths:
          - path: /hello
            backend:
              serviceName: hello-app
              servicePort: 8080

In the file, kind: Ingress declares that this is an Ingress Resource object. This Ingress Resource defines an inbound L7 rule that routes the path /hello to the service hello-app on port 8080.
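The `extensions/v1beta1` Ingress API used in this post was removed in Kubernetes 1.22. On newer clusters the same rule can be written against `networking.k8s.io/v1`, where the backend is nested under `service`, each path needs a `pathType`, and the controller is usually selected with `spec.ingressClassName`. The following is a sketch under those assumptions; the file name is hypothetical, and it presumes an IngressClass named `nginx` exists in the cluster.

```shell
# Write a networking.k8s.io/v1 version of the same /hello rule.
# Assumptions: the file name is arbitrary, and an IngressClass named "nginx"
# exists in the cluster (check with `kubectl get ingressclass`).
cat > ingress-resource-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-resource
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /hello
        pathType: Prefix
        backend:
          service:
            name: hello-app
            port:
              number: 8080
EOF

# Apply it in place of the v1beta1 manifest:
#   kubectl apply -f ingress-resource-v1.yaml
```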

To apply those rules to our Kubernetes application:

$ kubectl apply -f ingress-resource.yaml

ingress.extensions "ingress-resource" created

Verify that Ingress Resource has been created:

$ kubectl get ingress ingress-resource

NAME HOSTS ADDRESS PORTS AGE

ingress-resource * 35.202.54.69 80 55s

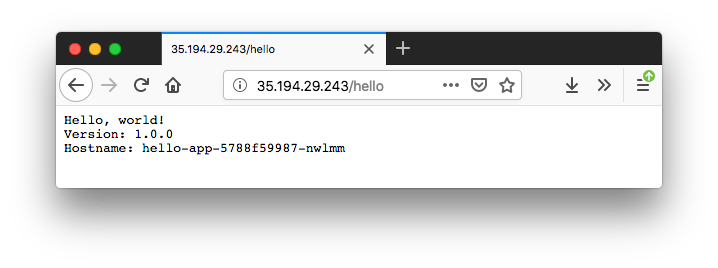

Now we should be able to access the web application by going to the EXTERNAL-IP/hello address of the NGINX ingress controller:

$ kubectl get service nginx-ingress-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-ingress-controller LoadBalancer 10.63.243.6 35.194.29.243 80:32654/TCP,443:31594/TCP 11m

To check if the default-backend service is working properly, access any path (other than the path /hello defined in the Ingress Resource) and ensure that we receive a 404 message.
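Both checks, the application at /hello and the default backend's 404 on any other path, can be run from the command line with curl. The sketch below assumes plain HTTP on port 80 and expects a 200 from hello-app; the function names and the sample path `/some-other-path` are hypothetical.

```shell
# Print only the HTTP status code for a URL.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Check both behaviours for a given load balancer IP:
# /hello is served by hello-app (200), any other path by the default backend (404).
check_ingress() {
  lb="$1"
  [ "$(http_status "http://$lb/hello")" = "200" ] || return 1
  [ "$(http_status "http://$lb/some-other-path")" = "404" ] || return 1
  echo "ingress OK"
}

# Usage, with the EXTERNAL-IP of the nginx-ingress-controller Service:
#   check_ingress 35.194.29.243
```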
