Docker: Load Testing with Locust on GCP Kubernetes

In this post, we'll use Locust, one of the most solidly proven performance testing frameworks (JMeter is another popular one). Locust is highly scalable thanks to its fully event-based implementation, and as a result it has a fast-growing community of users who prefer it over JMeter.

Locust is a distributed, Python-based load testing tool that is capable of distributing requests across multiple target paths. For example, Locust can distribute requests to the /login and /metrics target paths.
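Locust expresses this with weighted tasks: each simulated user repeatedly picks one of its tasks at random, and a task with a higher weight is picked more often. The stdlib-only sketch below imitates that selection without Locust itself; the weights (1 for /login, 9 for /metrics) and the helper names are hypothetical, chosen only for illustration.

```python
import random
from collections import Counter

# Hypothetical task weights: /metrics is requested 9x as often as /login.
TASK_WEIGHTS = {"/login": 1, "/metrics": 9}


def pick_path(weights, rng):
    """Pick one target path at random, proportionally to its weight."""
    paths = list(weights)
    return rng.choices(paths, weights=[weights[p] for p in paths], k=1)[0]


def simulate_user_requests(n_requests, weights=TASK_WEIGHTS, seed=0):
    """Count how often each path would be hit by one simulated user."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return Counter(pick_path(weights, rng) for _ in range(n_requests))


if __name__ == "__main__":
    counts = simulate_user_requests(10_000)
    for path, n in sorted(counts.items()):
        print(f"{path}: {n} requests ({n / 10_000:.1%})")
```

With these weights, roughly 90% of the requests go to /metrics and 10% to /login.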

Set the default compute zone in Cloud Shell:

```
$ gcloud config set compute/zone us-central1-a
Updated property [compute/zone].
```

Get the source code from the repository:

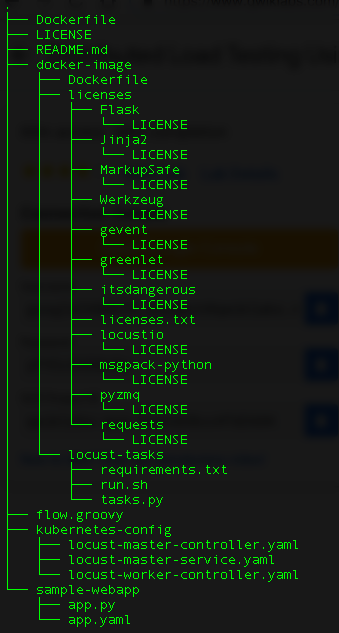

```
$ git clone https://github.com/GoogleCloudPlatform/distributed-load-testing-using-kubernetes.git
$ cd distributed-load-testing-using-kubernetes/
```

The sample-webapp folder contains a simple Google App Engine Python application that serves as the "system under test". To deploy the application, we can use the gcloud app deploy command:
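For trying out a locustfile on a laptop before anything is deployed, a local stand-in for the system under test can be handy. The sketch below is a hypothetical stdlib server (not the sample-webapp code from the repository) that answers POST requests to /login and /metrics with 200 and anything else with 404.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Paths the stand-in accepts (mirrors the /login and /metrics targets).
KNOWN_PATHS = {"/login", "/metrics"}


class StandInHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Drain the request body so the exchange stays well-formed.
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)
        status = 200 if self.path in KNOWN_PATHS else 404
        body = b"ok\n" if status == 200 else b"not found\n"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet while under load


def start_stand_in(port=0):
    """Serve in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), StandInHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 8080), StandInHandler)
    print("Stand-in listening on http://127.0.0.1:8080")
    server.serve_forever()
```

Pointing a local Locust run at http://127.0.0.1:8080 then exercises the same paths without touching App Engine.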

```
$ gcloud app deploy sample-webapp/app.yaml
[1] asia-east2 (supports standard and flexible)
[2] asia-northeast1 (supports standard and flexible)
[3] asia-south1 (supports standard and flexible)
[4] australia-southeast1 (supports standard and flexible)
[5] europe-west (supports standard and flexible)
[6] europe-west2 (supports standard and flexible)
[7] europe-west3 (supports standard and flexible)
[8] northamerica-northeast1 (supports standard and flexible)
[9] southamerica-east1 (supports standard and flexible)
[10] us-central (supports standard and flexible)
[11] us-east1 (supports standard and flexible)
[12] us-east4 (supports standard and flexible)
[13] us-west2 (supports standard and flexible)
[14] cancel
Please enter your numeric choice:
Please enter a value between 1 and 14: 10
...
You can stream logs from the command line by running:
  $ gcloud app logs tail -s default

To view your application in the web browser run:
  $ gcloud app browse
```

Let's create our cluster:

```
$ gcloud container clusters create locust-testing
kubeconfig entry generated for locust-testing.
NAME            LOCATION       MASTER_VERSION  MASTER_IP      MACHINE_TYPE   NODE_VERSION  NUM_NODES  STATUS
locust-testing  us-central1-a  1.11.6-gke.2    35.225.199.73  n1-standard-1  1.11.6-gke.2  3          RUNNING

$ cd kubernetes-config/
$ ls
locust-master-controller.yaml  locust-master-service.yaml  locust-worker-controller.yaml
```

The first component of the deployment is the Locust master, which is the entry point for executing the load testing tasks. The Locust master is deployed as a replication controller with a single replica because we need only one master. A replication controller is useful even for a single pod: if the pod dies or its node fails, the controller creates a replacement, which keeps the master available.
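What makes a replication controller useful even with one replica is its reconciliation loop: it keeps comparing the desired replica count with the Pods that actually match its selector, then creates or deletes Pods to close the gap. The sketch below is a simplified, hypothetical model of one pass of that loop (the `reconcile` function and its pod-naming scheme are illustrative, not the Kubernetes controller code).

```python
import secrets


def reconcile(desired, running, prefix="locust-master"):
    """Return (to_create, to_delete) that bring `running` to `desired` pods.

    `running` lists the names of live pods matching the controller's label selector.
    """
    missing = desired - len(running)
    if missing > 0:
        # Too few pods: name new ones with a random 5-character suffix (e.g. locust-master-kg482).
        return [f"{prefix}-{secrets.token_hex(3)[:5]}" for _ in range(missing)], []
    # Exactly right (nothing to do) or too many (delete the surplus).
    return [], running[desired:]


if __name__ == "__main__":
    # The master pod crashed, so nothing matches the selector: one pass recreates it.
    print(reconcile(1, []))
    # Scaling the workers from 10 to 20 creates 10 new pods.
    workers = [f"locust-worker-{i}" for i in range(10)]
    create, delete = reconcile(20, workers, prefix="locust-worker")
    print(len(create), delete)
```

The same loop explains the scaling step later in this post: changing the desired count is all it takes for new Pods to appear.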

The configuration for the replication controller specifies several elements, including the name of the controller (locust-master), labels for organization (name: locust, role: master), and the ports that need to be exposed by the container (8089 for the web interface, 5557 and 5558 for communicating with workers). This information is later used to configure the Locust worker controller.

The following file (locust-master-controller.yaml) contains this configuration, including the ports:

```yaml
kind: ReplicationController
apiVersion: v1
metadata:
  name: locust-master
  labels:
    name: locust
    role: master
spec:
  replicas: 1
  selector:
    name: locust
    role: master
  template:
    metadata:
      labels:
        name: locust
        role: master
    spec:
      containers:
        - name: locust
          image: gcr.io/cloud-solutions-images/locust-tasks:latest
          env:
            - name: LOCUST_MODE
              value: master
            - name: TARGET_HOST
              value: http://workload-simulation-webapp.appspot.com
          ports:
            - name: loc-master-web
              containerPort: 8089
              protocol: TCP
            - name: loc-master-p1
              containerPort: 5557
              protocol: TCP
            - name: loc-master-p2
              containerPort: 5558
              protocol: TCP
```

Let's deploy the locust-master-controller:

```
$ kubectl create -f locust-master-controller.yaml
replicationcontroller "locust-master" created

$ kubectl get rc
NAME            DESIRED   CURRENT   READY   AGE
locust-master   1         1         1       1m

$ kubectl get pods -l name=locust,role=master
NAME                  READY   STATUS    RESTARTS   AGE
locust-master-kg482   1/1     Running   0          1m
```

Let's deploy the locust-master-service:

```
$ kubectl create -f locust-master-service.yaml
service "locust-master" created
```

This exposes the Pod under an internal DNS name (locust-master) on ports 8089, 5557, and 5558. As part of this step, the type: LoadBalancer directive in locust-master-service.yaml tells Google Kubernetes Engine to create a Google Compute Engine forwarding rule from a publicly available IP address to the locust-master Pod.
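The contents of locust-master-service.yaml aren't shown here. To give a rough idea of what such a Service contains, the sketch below builds an equivalent manifest as a Python dictionary and prints it as JSON, which kubectl also accepts on stdin via `kubectl create -f -`. The field values are assumptions inferred from the master controller above (its labels and ports), not a copy of the repository's file.

```python
import json


def locust_master_service():
    """Build a LoadBalancer Service for the Locust master (assumed values)."""
    # (port name, port number); names reuse the container port names above.
    ports = [("loc-master-web", 8089), ("loc-master-p1", 5557), ("loc-master-p2", 5558)]
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "locust-master", "labels": {"name": "locust", "role": "master"}},
        "spec": {
            # LoadBalancer asks GKE to provision an externally reachable forwarding rule.
            "type": "LoadBalancer",
            # Send traffic to pods carrying the master's labels.
            "selector": {"name": "locust", "role": "master"},
            "ports": [
                # A string targetPort refers to the container port with that name.
                {"name": name, "port": port, "targetPort": name, "protocol": "TCP"}
                for name, port in ports
            ],
        },
    }


if __name__ == "__main__":
    # Usage (hypothetical script name): python master_service.py | kubectl create -f -
    print(json.dumps(locust_master_service(), indent=2))
```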

To see the external IP address of the newly created forwarding rule, inspect the Service:

```
$ kubectl get svc locust-master
NAME            TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                                        AGE
locust-master   LoadBalancer   10.35.253.55   35.202.16.162   8089:31521/TCP,5557:31809/TCP,5558:30194/TCP   58s
```
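When scripting a test run, the external IP can be pulled out of that output programmatically. A minimal sketch, assuming the column layout shown above (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE); the `parse_service` helper is hypothetical, and `kubectl get svc locust-master -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` is a sturdier way to get the same address.

```python
SAMPLE_OUTPUT = """\
NAME            TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                                        AGE
locust-master   LoadBalancer   10.35.253.55   35.202.16.162   8089:31521/TCP,5557:31809/TCP,5558:30194/TCP   58s
"""


def parse_service(output):
    """Return (external_ip, {service_port: node_port}) from `kubectl get svc` text."""
    header, row = [line.split() for line in output.strip().splitlines()[:2]]
    fields = dict(zip(header, row))
    ports = {}
    for entry in fields["PORT(S)"].split(","):
        # Each entry looks like "8089:31521/TCP" (service port:node port/protocol).
        mapping, _protocol = entry.split("/")
        port, node_port = mapping.split(":")
        ports[int(port)] = int(node_port)
    return fields["EXTERNAL-IP"], ports


if __name__ == "__main__":
    ip, ports = parse_service(SAMPLE_OUTPUT)
    print(f"Locust web UI: http://{ip}:8089")
    print(ports)
```

While GKE is still provisioning the load balancer, the EXTERNAL-IP column shows `<pending>`, so a script should retry until a real address appears.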

The Locust workers execute the load testing tasks. The Locust workers are deployed by a single replication controller that creates ten pods. The pods are spread out across the Kubernetes cluster. Each pod uses environment variables to control important configuration information such as the hostname of the system under test and the hostname of the Locust master.
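Inside the container, those environment variables have to become Locust command-line options. The image's startup script isn't shown in this post, so the sketch below is a hypothetical reconstruction of that logic. It uses the `-f`, `--host`, `--master`, `--worker`, and `--master-host` options of Locust 1.x and later (older releases used `--slave` instead of `--worker`); the locustfile path is also an assumption.

```python
import os

LOCUSTFILE = "/locust-tasks/tasks.py"  # hypothetical path inside the image


def locust_args(env):
    """Build the locust argv from LOCUST_MODE, LOCUST_MASTER, and TARGET_HOST."""
    args = ["locust", "-f", LOCUSTFILE, "--host", env["TARGET_HOST"]]
    mode = env.get("LOCUST_MODE", "standalone")
    if mode == "master":
        args.append("--master")
    elif mode == "worker":
        # Workers reach the master through the locust-master Service's DNS name.
        args += ["--worker", "--master-host", env["LOCUST_MASTER"]]
    elif mode != "standalone":
        raise ValueError(f"unknown LOCUST_MODE: {mode!r}")
    return args


if __name__ == "__main__":
    # An entrypoint would then exec the command, e.g. os.execvp(args[0], args).
    print(" ".join(locust_args(os.environ)))
```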

The worker controller's configuration, shown below, sets the name, labels, and number of replicas:

```yaml
kind: ReplicationController
apiVersion: v1
metadata:
  name: locust-worker
  labels:
    name: locust
    role: worker
spec:
  replicas: 10
  selector:
    name: locust
    role: worker
  template:
    metadata:
      labels:
        name: locust
        role: worker
    spec:
      containers:
        - name: locust
          image: gcr.io/cloud-solutions-images/locust-tasks:latest
          env:
            - name: LOCUST_MODE
              value: worker
            - name: LOCUST_MASTER
              value: locust-master
            - name: TARGET_HOST
              value: http://workload-simulation-webapp.appspot.com
```

Let's deploy locust-worker-controller:

```
$ kubectl create -f locust-worker-controller.yaml
replicationcontroller "locust-worker" created
```

The locust-worker-controller is set to deploy 10 locust-worker Pods. Let's check if they were deployed:

```
$ kubectl get pods -l name=locust,role=worker
NAME                  READY   STATUS    RESTARTS   AGE
locust-worker-4zwvz   1/1     Running   0          36s
locust-worker-7bgnb   1/1     Running   0          36s
locust-worker-7jpl8   1/1     Running   0          36s
locust-worker-7qtkl   1/1     Running   0          36s
locust-worker-bfv5r   1/1     Running   0          36s
locust-worker-qt4bm   1/1     Running   0          36s
locust-worker-w69ng   1/1     Running   0          36s
locust-worker-wdh99   1/1     Running   0          36s
locust-worker-xpwqh   1/1     Running   0          36s
locust-worker-zcz6m   1/1     Running   0          36s
```

To scale the number of locust-worker Pods, issue a replication controller scale command:

```
$ kubectl scale --replicas=20 replicationcontrollers locust-worker
replicationcontroller "locust-worker" scaled
```

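To confirm that the Pods have launched and are ready, get the list of locust-worker Pods. Pods still shown as Pending have not been scheduled onto a node yet, often because the three-node cluster has run out of free CPU or memory (kubectl describe pod with the Pod's name shows the exact reason):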

```
$ kubectl get pods -l name=locust,role=worker
NAME                  READY   STATUS    RESTARTS   AGE
locust-worker-2x8kv   0/1     Pending   0          3m
locust-worker-4zwvz   1/1     Running   0          4m
locust-worker-5bw4z   0/1     Pending   0          3m
locust-worker-6qgqc   0/1     Pending   0          3m
locust-worker-7bgnb   1/1     Running   0          4m
locust-worker-7jpl8   1/1     Running   0          4m
locust-worker-7qtkl   1/1     Running   0          4m
locust-worker-b6t6h   0/1     Pending   0          3m
locust-worker-bfv5r   1/1     Running   0          4m
locust-worker-clh56   0/1     Pending   0          3m
locust-worker-drt55   0/1     Pending   0          3m
locust-worker-nrs6t   1/1     Running   0          3m
locust-worker-qt4bm   1/1     Running   0          4m
locust-worker-vcxm2   0/1     Pending   0          3m
locust-worker-w69ng   1/1     Running   0          4m
locust-worker-wdh99   1/1     Running   0          4m
locust-worker-wh5sd   0/1     Pending   0          3m
locust-worker-wnpvk   1/1     Running   0          3m
locust-worker-xpwqh   1/1     Running   0          4m
locust-worker-zcz6m   1/1     Running   0          4m
```
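With twenty Pods the listing gets long, so a tally by status helps. A minimal sketch that counts the STATUS column of `kubectl get pods` output, using the listing above as input; the `count_statuses` helper is hypothetical, and it locates the column by its header name rather than by a fixed position.

```python
from collections import Counter

# Output of `kubectl get pods -l name=locust,role=worker` after scaling to 20.
PODS_OUTPUT = """\
NAME                  READY   STATUS    RESTARTS   AGE
locust-worker-2x8kv   0/1     Pending   0          3m
locust-worker-4zwvz   1/1     Running   0          4m
locust-worker-5bw4z   0/1     Pending   0          3m
locust-worker-6qgqc   0/1     Pending   0          3m
locust-worker-7bgnb   1/1     Running   0          4m
locust-worker-7jpl8   1/1     Running   0          4m
locust-worker-7qtkl   1/1     Running   0          4m
locust-worker-b6t6h   0/1     Pending   0          3m
locust-worker-bfv5r   1/1     Running   0          4m
locust-worker-clh56   0/1     Pending   0          3m
locust-worker-drt55   0/1     Pending   0          3m
locust-worker-nrs6t   1/1     Running   0          3m
locust-worker-qt4bm   1/1     Running   0          4m
locust-worker-vcxm2   0/1     Pending   0          3m
locust-worker-w69ng   1/1     Running   0          4m
locust-worker-wdh99   1/1     Running   0          4m
locust-worker-wh5sd   0/1     Pending   0          3m
locust-worker-wnpvk   1/1     Running   0          3m
locust-worker-xpwqh   1/1     Running   0          4m
locust-worker-zcz6m   1/1     Running   0          4m
"""


def count_statuses(output):
    """Count pods per STATUS value in `kubectl get pods` output."""
    lines = output.strip().splitlines()
    status_col = lines[0].split().index("STATUS")  # find the column by its header
    return Counter(line.split()[status_col] for line in lines[1:])


if __name__ == "__main__":
    print(count_statuses(PODS_OUTPUT))
```

For this listing the tally is 12 Running and 8 Pending. kubectl can also filter directly, e.g. `kubectl get pods -l name=locust,role=worker --field-selector=status.phase=Pending`.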

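To execute the Locust tests, get the external IP address with the following command, then open port 8089 on that address (here, http://35.202.16.162:8089) in a browser to reach the Locust web interface: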

```
$ kubectl get svc locust-master
NAME            TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                                        AGE
locust-master   LoadBalancer   10.35.253.55   35.202.16.162   8089:31521/TCP,5557:31809/TCP,5558:30194/TCP   11m
```
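Right after the Service is created, the load balancer may not accept connections yet. A small sketch that polls a TCP port until it answers or a timeout expires; the `wait_for_port` helper is hypothetical, and 35.202.16.162:8089 is simply the address from the output above.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=2.0):
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable, or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    host = "35.202.16.162"  # EXTERNAL-IP from `kubectl get svc locust-master`
    if wait_for_port(host, 8089):
        print(f"Locust web UI is up: http://{host}:8089")
    else:
        print("Timed out waiting for the load balancer")
```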

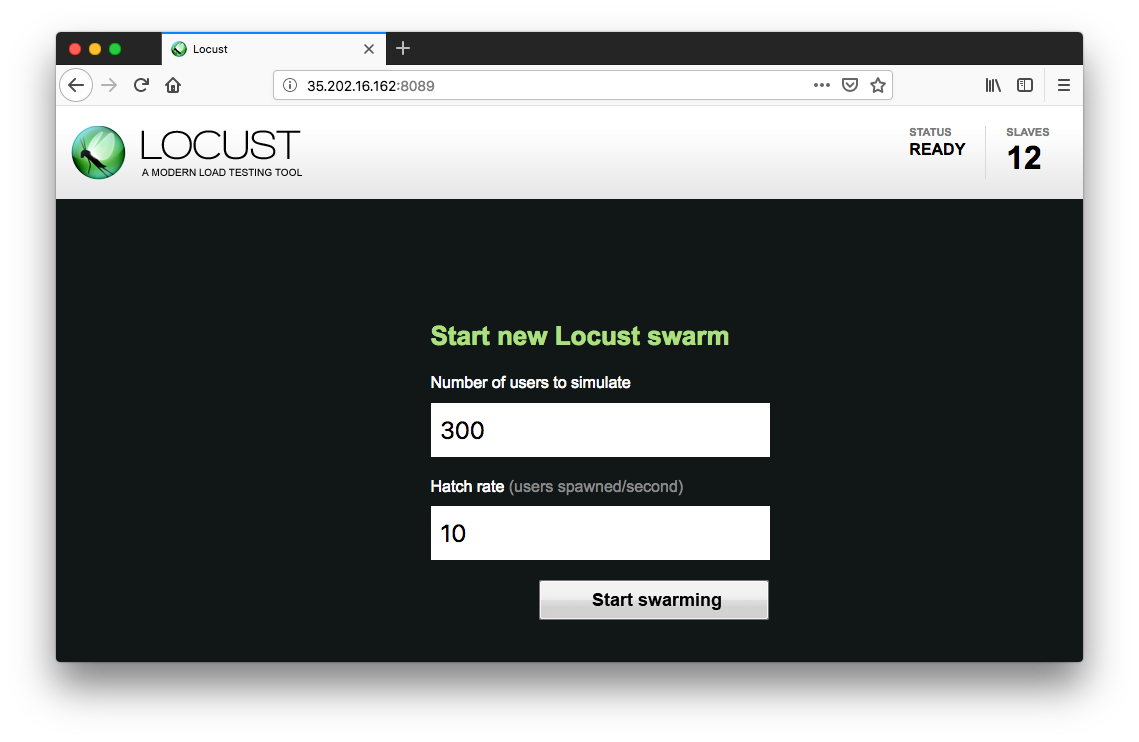

Specify the total number of users to simulate and the rate at which users are spawned (users per second).

For example, we can specify 300 users and a spawn rate of 10.
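The arithmetic behind those two numbers: at 10 users per second, all 300 users are running after 300 / 10 = 30 seconds. In distributed mode the master splits the users among the connected workers, so with all 20 workers running each would simulate 15 users (if only the 12 Running workers in the listing above were connected, 25 each). The sketch below just does that arithmetic; spreading the remainder one user at a time is a simplifying assumption, not a description of Locust's exact distribution algorithm.

```python
import math


def seconds_to_full_load(users, spawn_rate):
    """Time until all users are running when `spawn_rate` users start per second."""
    return math.ceil(users / spawn_rate)


def users_per_worker(users, workers):
    """Split users as evenly as possible; the first `extra` workers get one more."""
    base, extra = divmod(users, workers)
    return [base + 1 if i < extra else base for i in range(workers)]


if __name__ == "__main__":
    print(seconds_to_full_load(300, 10))   # 30
    print(set(users_per_worker(300, 20)))  # {15}
    print(users_per_worker(300, 12)[:3])   # [25, 25, 25]
```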

Click Start swarming:

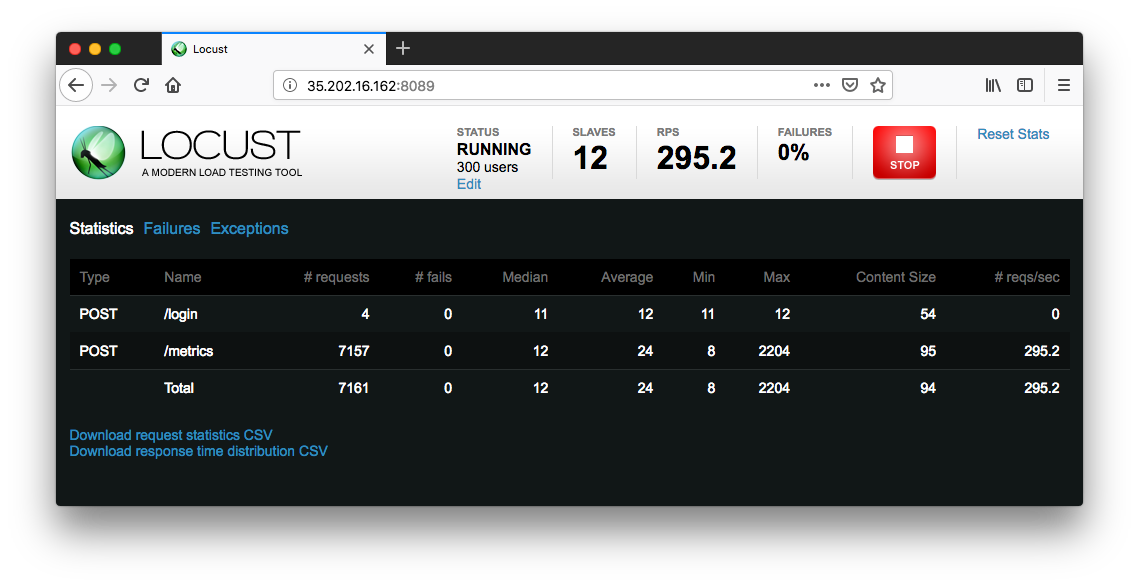

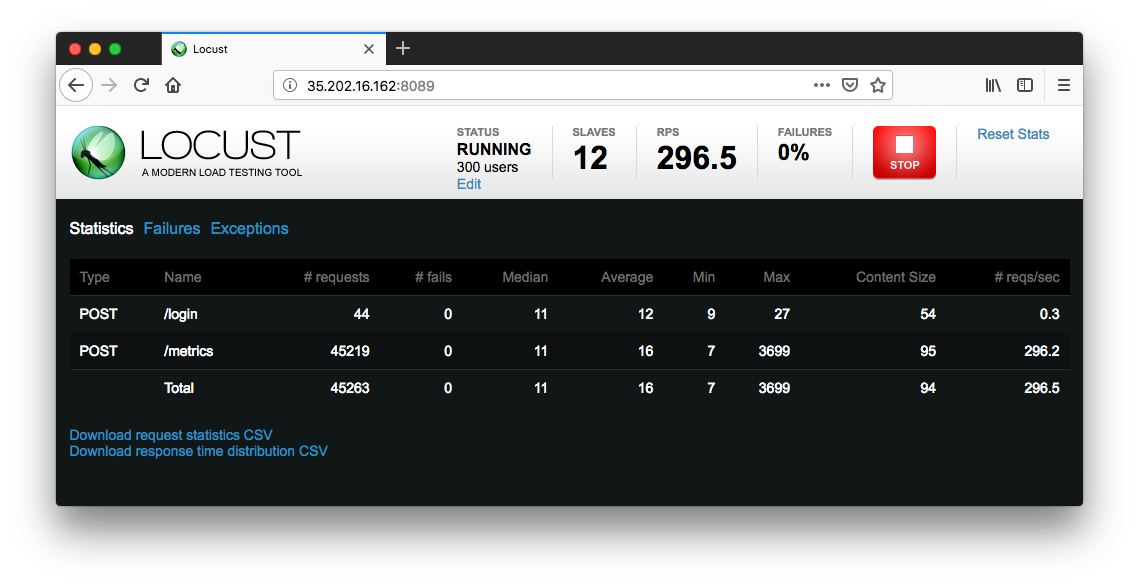

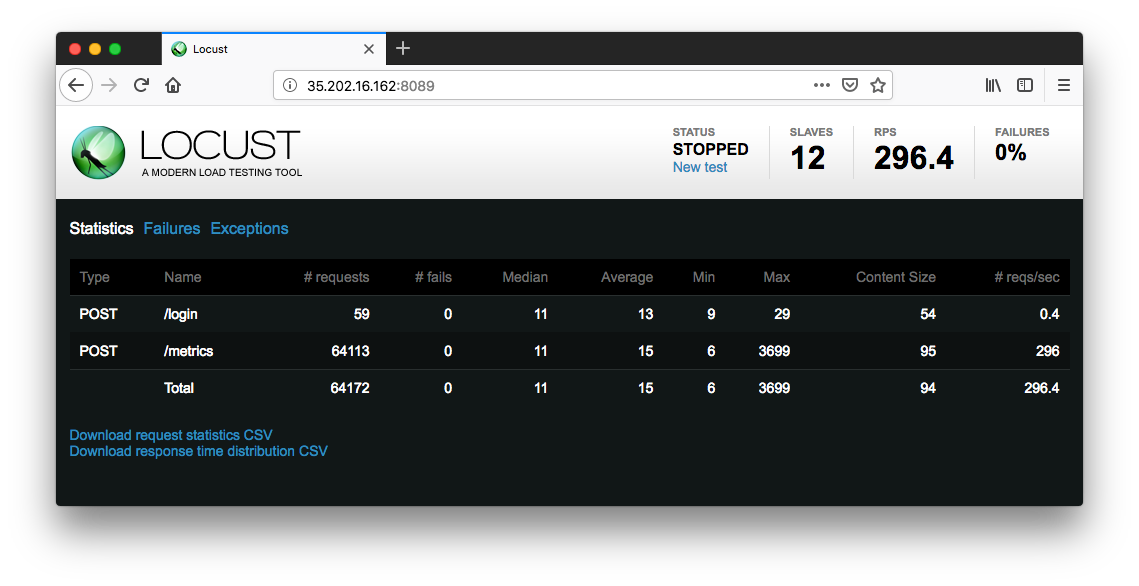

As time progresses and users are spawned, statistics for the simulation, such as the number of requests and requests per second, begin to aggregate.
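Those statistics are aggregates over individual requests. As a rough illustration of what the table reports, the sketch below computes per-path request counts, failure counts, median and average response times, and requests per second from a list of request records. The record format, the `summarize` helper, and the sample numbers are made up for the example; they are not Locust's internal data structures.

```python
from collections import defaultdict
from statistics import mean, median


def summarize(records, duration_s):
    """Aggregate (path, response_ms, ok) records collected over `duration_s` seconds."""
    by_path = defaultdict(list)
    for path, response_ms, ok in records:
        by_path[path].append((response_ms, ok))
    summary = {}
    for path, samples in by_path.items():
        times = [ms for ms, _ in samples]
        summary[path] = {
            "requests": len(samples),
            "failures": sum(1 for _, ok in samples if not ok),
            "median_ms": median(times),
            "avg_ms": mean(times),
            "rps": len(samples) / duration_s,
        }
    return summary


if __name__ == "__main__":
    # Made-up sample: six requests observed over a 2-second window.
    sample = [
        ("/metrics", 40, True), ("/metrics", 55, True), ("/metrics", 61, True),
        ("/metrics", 300, False), ("/login", 120, True), ("/login", 80, True),
    ]
    for path, stats in summarize(sample, duration_s=2.0).items():
        print(path, stats)
```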

To stop the simulation, click Stop and the test will terminate:

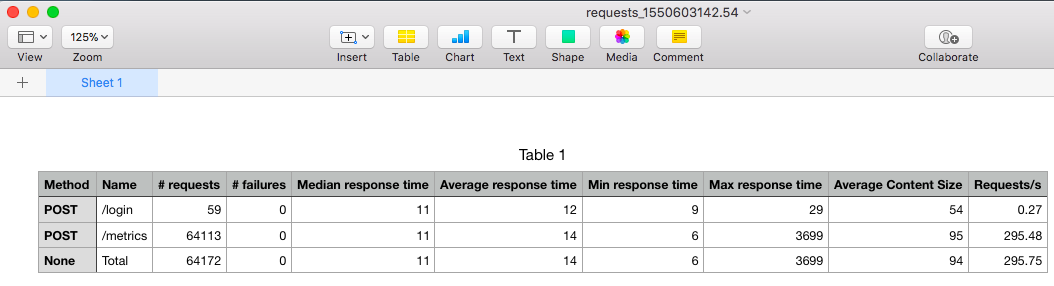

The complete results can be downloaded from the web interface as CSV files and opened in a spreadsheet.
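Because the downloaded request statistics are plain CSV, they can also be processed with a script instead of a spreadsheet. Column names differ between Locust versions, so the sketch below takes them as parameters; the defaults ("Name", "Request Count", "Failure Count") and the sample rows are assumptions for illustration, so check them against the header of the file you actually download.

```python
import csv
import io


def failure_rates(csv_text, name_col="Name", requests_col="Request Count",
                  failures_col="Failure Count"):
    """Return {endpoint: failure ratio} from a Locust-style request stats CSV."""
    rates = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        requests = int(row[requests_col])
        failures = int(row[failures_col])
        rates[row[name_col]] = failures / requests if requests else 0.0
    return rates


if __name__ == "__main__":
    # Made-up rows in an assumed column layout; to use a real download, read its text,
    # e.g. open("requests.csv", newline="").read()  (hypothetical file name).
    sample = (
        "Type,Name,Request Count,Failure Count\n"
        "POST,/login,200,0\n"
        "POST,/metrics,9800,49\n"
        ",Aggregated,10000,49\n"
    )
    for name, rate in failure_rates(sample).items():
        print(f"{name}: {rate:.2%} failed")
```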

Reference: Distributed Load Testing Using Kubernetes
