Docker - Deploy Elastic Cloud on Kubernetes (ECK) via Elasticsearch operator on minikube

This tutorial is based on:

- Elastic Cloud on Kubernetes [1.0] » Quickstart

- Getting started with Elastic Cloud on Kubernetes: Deployment

- elastic / cloud-on-k8s

- Elastic Cloud on Kubernetes

Supported versions:

- kubectl 1.11+

- Kubernetes 1.12+

- Elastic Stack: 6.8+, 7.1+

"Built on the Kubernetes Operator pattern, Elastic Cloud on Kubernetes (ECK) extends the basic Kubernetes orchestration capabilities to support the setup and management of Elasticsearch, Kibana and APM Server on Kubernetes."

In this tutorial, we'll do the following:

- Deploy ECK into a Kubernetes cluster (Minikube).

- Deploy the Elastic Stack into ECK.

- Scale and upgrade Elasticsearch and Kibana inside ECK.

- Deploy a sample application instrumented with Elastic APM and send APM data to the ECK-managed Elasticsearch cluster.

- Deploy Metricbeat to Kubernetes as a DaemonSet and securely connect Metricbeat to the ECK-managed Elasticsearch cluster.

The installation guide is available at Install Minikube.

On Mac:

$ brew install minikube

Start it up. My previous VirtualBox VM had only 2038MB of memory, so I had to delete the existing minikube VM and create a new one with more memory.

$ minikube delete

$ minikube config set memory 4196

$ minikube start

o minikube v1.0.1 on darwin (amd64)

$ Downloading Kubernetes v1.14.1 images in the background ...

> Creating virtualbox VM (CPUs=2, Memory=4196MB, Disk=20000MB) ...

- "minikube" IP address is 192.168.99.105

- Configuring Docker as the container runtime ...

- Version of container runtime is 18.06.3-ce

: Waiting for image downloads to complete ...

- Preparing Kubernetes environment ...

- Pulling images required by Kubernetes v1.14.1 ...

- Launching Kubernetes v1.14.1 using kubeadm ...

: Waiting for pods: apiserver proxy etcd scheduler controller dns

- Configuring cluster permissions ...

- Verifying component health .....

+ kubectl is now configured to use "minikube"

= Done! Thank you for using minikube!

Alternatively, we can pass the memory size directly as an argument to "minikube start" instead of using "minikube config":

$ minikube start --memory 4196

If the cluster is running, the output from minikube status should be similar to:

$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.102

Another way to verify our single-node Kubernetes cluster is up and running:

$ kubectl cluster-info

Kubernetes master is running at https://192.168.99.105:8443

KubeDNS is running at https://192.168.99.105:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Dashboard is a web-based Kubernetes user interface. We can use Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot our containerized application, and manage the cluster resources. To access the Kubernetes Dashboard, run this command:

$ minikube dashboard

The Elasticsearch Operator automates the process of managing Elasticsearch on Kubernetes. ECK simplifies deploying the whole Elastic Stack on Kubernetes, giving us tools to automate and streamline critical operations.

If we want to get up and running quickly, we can use the Operator. We may prefer a Helm chart instead if we are concerned about the additional Kubernetes resources the Operator brings, such as a separate namespace, or about having one more tool to learn.

Install custom resource definitions and the operator with its Role-based access control (RBAC) rules using the latest ECK 1.0.1 (as of this writing). Installing the Elasticsearch Operator is as simple as running one command:

$ kubectl apply -f https://download.elastic.co/downloads/eck/1.0.1/all-in-one.yaml

customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created

clusterrole.rbac.authorization.k8s.io/elastic-operator created

clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created

namespace/elastic-system created

statefulset.apps/elastic-operator created

serviceaccount/elastic-operator created

validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created

service/elastic-webhook-server created

secret/elastic-webhook-server-cert created

$ kubectl get namespaces

NAME STATUS AGE

default Active 5h2m

elastic-system Active 13m

kube-node-lease Active 5h2m

kube-public Active 5h2m

kube-system Active 5h2m

The Operator lives under the elastic-system namespace. We can check the resources by running this command:

$ kubectl -n elastic-system get all

NAME READY STATUS RESTARTS AGE

pod/elastic-operator-0 1/1 Running 0 6m18s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/elastic-webhook-server ClusterIP 10.101.26.29 <none> 443/TCP 6m18s

NAME READY AGE

statefulset.apps/elastic-operator 1/1 6m19s

To monitor the operator logs:

$ kubectl -n elastic-system logs -f statefulset.apps/elastic-operator

{"level":"info","@timestamp":"2020-04-14T20:18:05.380Z","logger":"manager","message":"Setting up client for manager","ver":"1.0.1-bcb74688"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.380Z","logger":"manager","message":"Setting up scheme","ver":"1.0.1-bcb74688"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.387Z","logger":"manager","message":"Setting up manager","ver":"1.0.1-bcb74688"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.387Z","logger":"manager","message":"Operator configured to manage all namespaces","ver":"1.0.1-bcb74688"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.892Z","logger":"controller-runtime.metrics","message":"metrics server is starting to listen","ver":"1.0.1-bcb74688","addr":":0"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.926Z","logger":"manager","message":"Setting up controllers","ver":"1.0.1-bcb74688","roles":["all"]}

{"level":"info","@timestamp":"2020-04-14T20:18:05.926Z","logger":"manager","message":"Automatic management of the webhook certificates enabled","ver":"1.0.1-bcb74688"}

{"level":"info","@timestamp":"2020-04-14T20:18:05.943Z","logger":"webhook-certificates-controller","message":"Creating new webhook certificates","ver":"1.0.1-bcb74688","webhook":"elastic-webhook.k8s.elastic.co","secret_namespace":"elastic-system","secret_name":"elastic-webhook-server-cert"}

...
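Because the operator writes one JSON object per line, ordinary text tools are enough to narrow the stream. The following is a minimal sketch, assuming the compact `"level":"..."` layout seen in the log lines above; the function name `filter_level` is hypothetical and not part of ECK or kubectl.

```shell
# filter_level LEVEL: read operator log lines on stdin and keep only
# those whose "level" field equals LEVEL (for example: info, error).
# Assumes the compact JSON layout shown above ("level":"info", no spaces).
filter_level() {
  grep -F "\"level\":\"$1\""
}
```

It could be used as `kubectl -n elastic-system logs statefulset.apps/elastic-operator | filter_level error`. A JSON-aware tool would be sturdier if the log layout ever changes.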

We may also want to take a look at the newly created custom resource definitions (CRDs):

$ kubectl get crd

NAME CREATED AT

apmservers.apm.k8s.elastic.co 2020-04-14T20:17:39Z

elasticsearches.elasticsearch.k8s.elastic.co 2020-04-14T20:17:39Z

kibanas.kibana.k8s.elastic.co 2020-04-14T20:17:39Z

Those are the APIs we'll have access to for streamlining the process of creating and managing Elasticsearch resources in our Kubernetes cluster.

Now that ECK is running, we have access to the elasticsearch.k8s.elastic.co/v1 API. Apply a simple Elasticsearch cluster specification with a single Elasticsearch node:

$ cat <<EOF | kubectl apply -f -

apiVersion: elasticsearch.k8s.elastic.co/v1

kind: Elasticsearch

metadata:

  name: quickstart

spec:

  version: 7.6.2

  nodeSets:

  - name: default

    count: 1

    config:

      node.master: true

      node.data: true

      node.ingest: true

      node.store.allow_mmap: false

EOF

elasticsearch.elasticsearch.k8s.elastic.co/quickstart created

Note: If the Kubernetes cluster does not have any Kubernetes nodes with at least 2GiB of free memory, the pod will be stuck in Pending state.
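One rough way to check this ahead of time is to look at the node's allocatable memory. The sketch below assumes the quantity is reported with a `Ki` suffix (other suffixes are not handled), and the helper names `ki_to_mib` and `node_has_memory` are hypothetical. Allocatable memory is an upper bound rather than the memory currently free, so a passing check does not guarantee that the pod will be scheduled.

```shell
# ki_to_mib QUANTITY: convert a Kubernetes memory quantity with a Ki suffix
# (e.g. "4194304Ki") to whole MiB. Other suffixes are not handled here.
ki_to_mib() {
  ki=${1%Ki}
  echo $(( ki / 1024 ))
}

# node_has_memory NODE MIN_MIB: succeed if the node's allocatable memory
# is at least MIN_MIB. Allocatable is an upper bound, not free memory.
node_has_memory() {
  allocatable=$(kubectl get node "$1" -o jsonpath='{.status.allocatable.memory}')
  [ "$(ki_to_mib "$allocatable")" -ge "$2" ]
}
```

For example, `node_has_memory minikube 2048 && echo "enough memory"` checks the single minikube node against the 2GiB requirement.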

The operator automatically creates and manages Kubernetes resources to achieve the desired state of the Elasticsearch cluster. It may take up to a few minutes until all the resources are created and the cluster is ready for use.

We can get an overview of the current Elasticsearch clusters in the Kubernetes cluster, including each cluster's name, health, number of nodes, version, and phase:

$ kubectl get elasticsearch

NAME HEALTH NODES VERSION PHASE AGE

quickstart green 1 7.6.2 Ready 2m25s
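Since the cluster can take a few minutes to become ready, it may help to poll instead of re-running the command by hand. This is a minimal sketch, assuming the health value is exposed at `.status.health` on the Elasticsearch resource; the function name `wait_for_es_green`, the 5-second interval, and the default 300-second timeout are all illustrative choices.

```shell
# wait_for_es_green NAME [TIMEOUT_SECONDS]: poll the Elasticsearch resource
# until its reported health is "green", or give up after the timeout.
# Assumes the health shown by "kubectl get elasticsearch" is exposed at
# .status.health on the resource.
wait_for_es_green() {
  name=$1
  timeout=${2:-300}
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    health=$(kubectl get elasticsearch "$name" -o jsonpath='{.status.health}' 2>/dev/null)
    if [ "$health" = "green" ]; then
      echo "$name is green after ${elapsed}s"
      return 0
    fi
    sleep 5
    elapsed=$(( elapsed + 5 ))
  done
  echo "timed out waiting for $name (last health: ${health:-unknown})" >&2
  return 1
}
```

Calling `wait_for_es_green quickstart` blocks until the cluster reports green or the timeout expires, which makes it convenient as a guard before the next step in a script.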

We can get more info:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready master 46m v1.14.1

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

quickstart-es-default-0 1/1 Running 0 36m

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 46m

quickstart-es-default ClusterIP None <none> <none> 37m

quickstart-es-http ClusterIP 10.100.184.98 <none> 9200/TCP 37m

$ kubectl get deployment

No resources found in default namespace.

$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-7d1de949-7ea5-11ea-9ee6-08002783d7bc 1Gi RWO Delete Bound default/elasticsearch-data-quickstart-es-default-0 standard 28m

More detailed info:

$ kubectl describe pods quickstart-es-default-0

Name: quickstart-es-default-0

Namespace: default

Priority: 0

Node: minikube/10.0.2.15

Start Time: Tue, 14 Apr 2020 16:13:48 -0700

Labels: common.k8s.elastic.co/type=elasticsearch

controller-revision-hash=quickstart-es-default-74746cb567

elasticsearch.k8s.elastic.co/cluster-name=quickstart

elasticsearch.k8s.elastic.co/config-hash=2034778696

elasticsearch.k8s.elastic.co/http-scheme=https

elasticsearch.k8s.elastic.co/node-data=true

elasticsearch.k8s.elastic.co/node-ingest=true

elasticsearch.k8s.elastic.co/node-master=true

elasticsearch.k8s.elastic.co/node-ml=true

elasticsearch.k8s.elastic.co/statefulset-name=quickstart-es-default

elasticsearch.k8s.elastic.co/version=7.6.2

statefulset.kubernetes.io/pod-name=quickstart-es-default-0

Annotations: update.k8s.elastic.co/timestamp: 2020-04-14T23:14:28.934985235Z

Status: Running

IP: 172.17.0.6

IPs: <none>

Controlled By: StatefulSet/quickstart-es-default

Init Containers:

elastic-internal-init-filesystem:

Container ID: docker://791103b7fbcdd03fdf34c07e52af368bf1aced6b7cb15c3e460feb592a6e231e

Image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2

Image ID: docker-pullable://docker.elastic.co/elasticsearch/elasticsearch@sha256:59342c577e2b7082b819654d119f42514ddf47f0699c8b54dc1f0150250ce7aa

Port: <none>

Host Port: <none>

Command:

bash

-c

/mnt/elastic-internal/scripts/prepare-fs.sh

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 14 Apr 2020 16:14:27 -0700

Finished: Tue, 14 Apr 2020 16:14:29 -0700

Ready: True

Restart Count: 0

Limits:

cpu: 100m

memory: 50Mi

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_IP: (v1:status.podIP)

POD_NAME: quickstart-es-default-0 (v1:metadata.name)

POD_IP: (v1:status.podIP)

POD_NAME: quickstart-es-default-0 (v1:metadata.name)

Mounts:

/mnt/elastic-internal/downward-api from downward-api (ro)

/mnt/elastic-internal/elasticsearch-bin-local from elastic-internal-elasticsearch-bin-local (rw)

/mnt/elastic-internal/elasticsearch-config from elastic-internal-elasticsearch-config (ro)

/mnt/elastic-internal/elasticsearch-config-local from elastic-internal-elasticsearch-config-local (rw)

/mnt/elastic-internal/elasticsearch-plugins-local from elastic-internal-elasticsearch-plugins-local (rw)

/mnt/elastic-internal/probe-user from elastic-internal-probe-user (ro)

/mnt/elastic-internal/scripts from elastic-internal-scripts (ro)

/mnt/elastic-internal/transport-certificates from elastic-internal-transport-certificates (ro)

/mnt/elastic-internal/unicast-hosts from elastic-internal-unicast-hosts (ro)

/mnt/elastic-internal/xpack-file-realm from elastic-internal-xpack-file-realm (ro)

/usr/share/elasticsearch/config/http-certs from elastic-internal-http-certificates (ro)

/usr/share/elasticsearch/data from elasticsearch-data (rw)

/usr/share/elasticsearch/logs from elasticsearch-logs (rw)

Containers:

elasticsearch:

Container ID: docker://a66de6f89a27319140ebd49cc65a69da8405b6544ea190596dc7feb067fe3f42

Image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2

Image ID: docker-pullable://docker.elastic.co/elasticsearch/elasticsearch@sha256:59342c577e2b7082b819654d119f42514ddf47f0699c8b54dc1f0150250ce7aa

Ports: 9200/TCP, 9300/TCP

Host Ports: 0/TCP, 0/TCP

State: Running

Started: Tue, 14 Apr 2020 16:14:29 -0700

Ready: True

Restart Count: 0

Limits:

memory: 2Gi

Requests:

memory: 2Gi

Readiness: exec [bash -c /mnt/elastic-internal/scripts/readiness-probe-script.sh] delay=10s timeout=5s period=5s #success=1 #failure=3

Environment:

HEADLESS_SERVICE_NAME: quickstart-es-default

NSS_SDB_USE_CACHE: no

POD_IP: (v1:status.podIP)

POD_NAME: quickstart-es-default-0 (v1:metadata.name)

PROBE_PASSWORD_PATH: /mnt/elastic-internal/probe-user/elastic-internal-probe

PROBE_USERNAME: elastic-internal-probe

READINESS_PROBE_PROTOCOL: https

Mounts:

/mnt/elastic-internal/downward-api from downward-api (ro)

/mnt/elastic-internal/elasticsearch-config from elastic-internal-elasticsearch-config (ro)

/mnt/elastic-internal/probe-user from elastic-internal-probe-user (ro)

/mnt/elastic-internal/scripts from elastic-internal-scripts (ro)

/mnt/elastic-internal/unicast-hosts from elastic-internal-unicast-hosts (ro)

/mnt/elastic-internal/xpack-file-realm from elastic-internal-xpack-file-realm (ro)

/usr/share/elasticsearch/bin from elastic-internal-elasticsearch-bin-local (rw)

/usr/share/elasticsearch/config from elastic-internal-elasticsearch-config-local (rw)

/usr/share/elasticsearch/config/http-certs from elastic-internal-http-certificates (ro)

/usr/share/elasticsearch/config/transport-certs from elastic-internal-transport-certificates (ro)

/usr/share/elasticsearch/data from elasticsearch-data (rw)

/usr/share/elasticsearch/logs from elasticsearch-logs (rw)

/usr/share/elasticsearch/plugins from elastic-internal-elasticsearch-plugins-local (rw)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

elasticsearch-data:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: elasticsearch-data-quickstart-es-default-0

ReadOnly: false

downward-api:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.labels -> labels

elastic-internal-elasticsearch-bin-local:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

elastic-internal-elasticsearch-config:

Type: Secret (a volume populated by a Secret)

SecretName: quickstart-es-default-es-config

Optional: false

elastic-internal-elasticsearch-config-local:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

elastic-internal-elasticsearch-plugins-local:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

elastic-internal-http-certificates:

Type: Secret (a volume populated by a Secret)

SecretName: quickstart-es-http-certs-internal

Optional: false

elastic-internal-probe-user:

Type: Secret (a volume populated by a Secret)

SecretName: quickstart-es-internal-users

Optional: false

elastic-internal-scripts:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: quickstart-es-scripts

Optional: false

elastic-internal-transport-certificates:

Type: Secret (a volume populated by a Secret)

SecretName: quickstart-es-transport-certificates

Optional: false

elastic-internal-unicast-hosts:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: quickstart-es-unicast-hosts

Optional: false

elastic-internal-xpack-file-realm:

Type: Secret (a volume populated by a Secret)

SecretName: quickstart-es-xpack-file-realm

Optional: false

elasticsearch-logs:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 39m default-scheduler Successfully assigned default/quickstart-es-default-0 to minikube

Normal Pulling 39m kubelet, minikube Pulling image "docker.elastic.co/elasticsearch/elasticsearch:7.6.2"

Normal Pulled 38m kubelet, minikube Successfully pulled image "docker.elastic.co/elasticsearch/elasticsearch:7.6.2"

Normal Created 38m kubelet, minikube Created container elastic-internal-init-filesystem

Normal Started 38m kubelet, minikube Started container elastic-internal-init-filesystem

Normal Pulled 38m kubelet, minikube Container image "docker.elastic.co/elasticsearch/elasticsearch:7.6.2" already present on machine

Normal Created 38m kubelet, minikube Created container elasticsearch

Normal Started 38m kubelet, minikube Started container elasticsearch

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:14:40+0000", "message": "readiness probe failed", "curl_rc": "7"}

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:14:45+0000", "message": "readiness probe failed", "curl_rc": "7"}

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:14:50+0000", "message": "readiness probe failed", "curl_rc": "7"}

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:14:55+0000", "message": "readiness probe failed", "curl_rc": "7"}

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:15:00+0000", "message": "readiness probe failed", "curl_rc": "7"}

Warning Unhealthy 38m kubelet, minikube Readiness probe failed: {"timestamp": "2020-04-14T23:15:05+0000", "message": "readiness probe failed", "curl_rc": "7"}

A ClusterIP Service is automatically created for our cluster:

$ kubectl get service quickstart-es-http

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

quickstart-es-http ClusterIP 10.100.184.98 <none> 9200/TCP 57m

The remaining steps are:

- Get the credentials.

- Request the Elasticsearch endpoint.

- Specify a Kibana instance and associate it with our Elasticsearch cluster.

- Monitor Kibana health and creation progress. As with Elasticsearch, we can retrieve details about Kibana instances.

- Access Kibana. A ClusterIP Service is automatically created for Kibana.

A default user named elastic is automatically created with the password stored in a Kubernetes secret:

$ kubectl get secret quickstart-es-elastic-user

NAME TYPE DATA AGE

quickstart-es-elastic-user Opaque 1 60m

$ PASSWORD=$(kubectl get secret quickstart-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode)

$ echo $PASSWORD

d5vzwgmkxq5g9k69m6q9xcwk
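The `base64 --decode` step is needed because Kubernetes stores each value in a Secret's data map base64-encoded; this is an encoding, not encryption. The sketch below illustrates the decoding on its own, using a made-up sample value rather than anything read from the cluster. The function name `decode_secret_value` is hypothetical.

```shell
# decode_secret_value VALUE: decode one base64-encoded field from a
# Secret's .data map. Base64 is an encoding, not encryption.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# Hypothetical sample value, not taken from a real cluster.
sample='Y2hhbmdlbWU='
decode_secret_value "$sample"; echo   # prints: changeme
```

Anyone who can read the Secret can recover the password this way, so access to Secrets in the namespace is worth restricting.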

From inside the Kubernetes cluster:

$ curl -u "elastic:$PASSWORD" -k "https://quickstart-es-http:9200"

From our local workstation, use the following command in a separate terminal:

$ kubectl port-forward service/quickstart-es-http 9200

Forwarding from 127.0.0.1:9200 -> 9200

Forwarding from [::1]:9200 -> 9200

Then request localhost:

$ curl -u "elastic:$PASSWORD" -k "https://localhost:9200"

{

"name" : "quickstart-es-default-0",

"cluster_name" : "quickstart",

"cluster_uuid" : "r0ifP1QyTe6p0geXP8l6fQ",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",

"build_date" : "2020-03-26T06:34:37.794943Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
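With the endpoint reachable, the same credentials can be used against other Elasticsearch APIs. The following is a minimal sketch that reads the `status` field from the `_cluster/health` API; the function name `es_cluster_status` is hypothetical, and the `sed` expression is a shortcut that assumes a single `"status"` key in the response (a JSON parser would be more robust).

```shell
# es_cluster_status URL USER PASSWORD: print the "status" field
# (green, yellow, or red) from the _cluster/health API.
# -k skips certificate verification, as in the curl commands above,
# because the default certificate is self-signed.
es_cluster_status() {
  curl -s -k -u "$2:$3" "$1/_cluster/health" |
    sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}
```

While the port-forward is running, `es_cluster_status https://localhost:9200 elastic "$PASSWORD"` should print the same health value that `kubectl get elasticsearch` reports.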

To deploy a Kibana instance, go through the following steps.

$ cat <<EOF | kubectl apply -f -

apiVersion: kibana.k8s.elastic.co/v1

kind: Kibana

metadata:

  name: quickstart

spec:

  version: 7.6.2

  count: 1

  elasticsearchRef:

    name: quickstart

EOF

kibana.kibana.k8s.elastic.co/quickstart created
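As with Elasticsearch, the Kibana resource itself can be queried, for example with `kubectl get kibana`. The sketch below wraps that in a small helper, assuming the health value is exposed at `.status.health` on the Kibana resource; the function name `kibana_health` is hypothetical.

```shell
# kibana_health NAME: print the health reported for a Kibana resource.
# Assumes ECK exposes the value at .status.health on the resource.
kibana_health() {
  kubectl get kibana "$1" -o jsonpath='{.status.health}'
}
```

Running `kibana_health quickstart` shortly after the apply may print nothing or a non-green value until the Kibana pod has started.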

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

quickstart-es-default-0 1/1 Running 0 4h8m

quickstart-kb-6f664c4f5c-6pknc 1/1 Running 0 7m25s

$ kubectl get pod -l 'kibana.k8s.elastic.co/name=quickstart'

NAME READY STATUS RESTARTS AGE

quickstart-kb-6f664c4f5c-6pknc 1/1 Running 0 10m

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h16m

quickstart-es-default ClusterIP None <none> <none> 4h7m

quickstart-es-http ClusterIP 10.100.184.98 <none> 9200/TCP 4h7m

quickstart-kb-http ClusterIP 10.102.153.18 <none> 5601/TCP 5m46s

Use kubectl port-forward to access Kibana from our local workstation:

$ kubectl port-forward service/quickstart-kb-http 5601

Forwarding from 127.0.0.1:5601 -> 5601

Forwarding from [::1]:5601 -> 5601

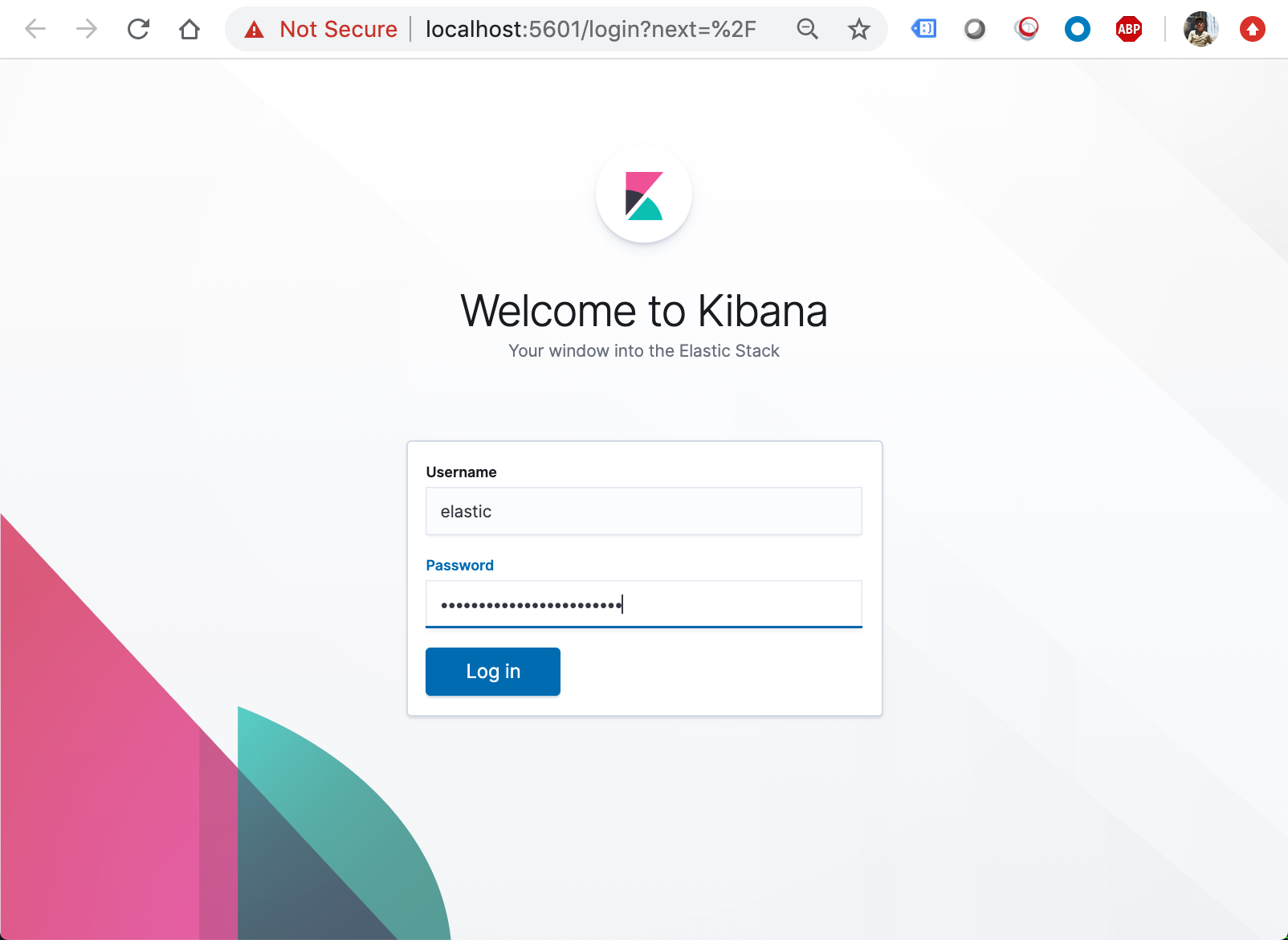

Open https://localhost:5601 in the browser. The browser will show a warning because the self-signed certificate configured by default is not verified by a third party certificate authority and not trusted by our browser. We can temporarily acknowledge the warning for the purposes of this quick start but it is highly recommended that we configure valid certificates for any production deployments.

Log in as the elastic user. The password can be obtained with the following command:

$ kubectl get secret quickstart-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode; echo

d5vzwgmkxq5g9k69m6q9xcwk
