Docker - WordPress Deploy to ECS with Docker-Compose (ECS-CLI Fargate type)

Originally, ECS tasks used bridge networking, in which containers reach the network through the host's ENI. This means all of the tasks running on the same instance share that instance's elastic network interface (ENI).

This was a limitation when duplicate copies of a service ran on the same host, because they competed for the same host ports. With the awsvpc network mode, network interfaces are assigned per task rather than per host: each task gets its own elastic network interface and IP address, so multiple applications, or multiple copies of a single application, can run on the same port number without any conflicts.

The awsvpc mode provides this networking support to our tasks natively: it gives Amazon ECS tasks the same networking properties as Amazon EC2 instances with an Elastic Network Interface (ENI), such as their own security groups. In other words, when we use the awsvpc network mode in our task definitions, every task launched from that task definition gets its own elastic network interface, a primary private IP address, and an internal DNS hostname.

Note that ECS uses the bridge network mode by default, but Fargate requires the awsvpc network mode.

We only need to specify a containerPort value, not a hostPort value (in awsvpc mode a hostPort, if given, must equal the containerPort), because there is no host port mapping to manage. The container port is the port we access on the task's elastic network interface IP address.

Also note that links are not allowed in awsvpc network mode, since they are a property of the bridge network mode (and are now a legacy Docker feature). Instead, containers in the same task share a network namespace and communicate with each other over the localhost interface.

For more information about how Fargate networking works, including "Best Practices for Fargate Networking", see Task Networking in AWS Fargate.

We can use Docker Compose files in any format (v1, v2, or v3) to deploy containers using the Amazon ECS CLI (Amazon ECS CLI Supports Docker Compose Version 3).

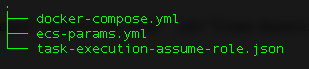

Here are the files we need in this post:

Let's install the ECS CLI (Amazon ECS Command Line Reference) on a Mac, following Installing the Amazon ECS CLI:

$ sudo curl -o /usr/local/bin/ecs-cli https://s3.amazonaws.com/amazon-ecs-cli/ecs-cli-darwin-amd64-latest

Verify the downloaded binary against the MD5 sum provided. Compare the two output strings: the checksum returned by the local md5 run should match the one from the S3 source.

```
$ curl -s https://s3.amazonaws.com/amazon-ecs-cli/ecs-cli-darwin-amd64-latest.md5 && md5 -q /usr/local/bin/ecs-cli
4ff2288545af59bb2e68750a45d5ac00
4ff2288545af59bb2e68750a45d5ac00
```
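If we prefer not to eyeball the two strings, the comparison can be scripted. The following is a minimal POSIX shell sketch; `verify_md5` is a hypothetical helper (not part of the ECS CLI), and it assumes that either `md5sum` (GNU coreutils) or `md5 -q` (macOS) is available.

```sh
#!/bin/sh
# verify_md5 EXPECTED FILE  (hypothetical helper)
# Returns 0 when FILE's MD5 digest equals EXPECTED, non-zero otherwise.
verify_md5() {
  local expected actual
  # Keep only the first whitespace-separated field of the expected value.
  expected=$(printf '%s\n' "$1" | awk '{print $1}')
  if command -v md5sum >/dev/null 2>&1; then
    actual=$(md5sum "$2" | awk '{print $1}')   # Linux / GNU coreutils
  else
    actual=$(md5 -q "$2")                      # macOS
  fi
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Usage, after downloading the binary as shown above:
#   expected=$(curl -s https://s3.amazonaws.com/amazon-ecs-cli/ecs-cli-darwin-amd64-latest.md5)
#   verify_md5 "$expected" /usr/local/bin/ecs-cli && echo "checksum OK" || echo "checksum MISMATCH"
```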

Apply execute permissions to the binary:

$ sudo chmod +x /usr/local/bin/ecs-cli

Verify that the CLI is working properly by checking the version:

```
$ ecs-cli --version
ecs-cli version 1.12.1 (e70f1b1)
```

The Amazon ECS CLI requires some basic configuration information (Configuring the Amazon ECS CLI) before we can use it, such as our AWS credentials, the AWS Region in which to create our cluster, and the name of the Amazon ECS cluster to use.

Starting from v1.0.0, the new YAML-formatted configuration is split into two separate files: credential information is stored in ~/.ecs/credentials, and cluster configuration information is stored in ~/.ecs/config.

Set up a CLI profile with the following command, substituting the desired profile name for profile_name and passing our AWS credentials through the $AWS_ACCESS_KEY_ID and $AWS_SECRET_ACCESS_KEY environment variables. Let's use "wordpress" as the profile name; it becomes the default profile, as the credentials file below shows:

$ ecs-cli configure profile --profile-name wordpress --access-key $AWS_ACCESS_KEY_ID --secret-key $AWS_SECRET_ACCESS_KEY

```yaml
$ cat ~/.ecs/credentials
version: v1
default: wordpress
ecs_profiles:
  wordpress:
    aws_access_key_id: <AWS_ACCESS_KEY_ID>
    aws_secret_access_key: <AWS_SECRET_ACCESS_KEY>
```
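Because the profile command above expands `$AWS_ACCESS_KEY_ID` and `$AWS_SECRET_ACCESS_KEY` unquoted, an unset variable silently drops an argument from the command line and the flags may then be parsed incorrectly. A small guard can catch this before `ecs-cli` runs. This is a sketch; `require_env` is a hypothetical helper name, and the usage line quotes the variables as an extra precaution.

```sh
#!/bin/sh
# require_env NAME...  (hypothetical helper)
# Prints an error for each named variable that is unset or empty and returns 1
# if any were missing, so that ecs-cli is never run with an empty credential.
require_env() {
  local name value missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"   # indirect lookup; NAME must be a valid variable name
    if [ -z "$value" ]; then
      echo "error: $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY &&
#     ecs-cli configure profile --profile-name wordpress \
#       --access-key "$AWS_ACCESS_KEY_ID" --secret-key "$AWS_SECRET_ACCESS_KEY"
```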

Create a cluster configuration, which defines the AWS region to use, resource creation prefixes, and the cluster name to use with the Amazon ECS CLI:

$ ecs-cli configure --cluster wordpress --region us-east-1 --default-launch-type FARGATE --config-name wordpress

Check our config that's been created:

```yaml
$ cat ~/.ecs/config
version: v1
default: wordpress
clusters:
  wordpress:
    cluster: wordpress
    region: us-east-1
    default_launch_type: FARGATE
```

The ecs-cli configure command stores a single named cluster configuration in the ~/.ecs/config file. The first cluster configuration that is created is set as the default.

Amazon ECS needs permissions so that our Fargate task can store logs in CloudWatch. These permissions are covered by the task execution IAM role.

To create the task execution IAM role using the AWS CLI, we need to create a file named task-execution-assume-role.json with the following contents:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "Service": "ecs-tasks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
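The same file can also be created straight from the shell with a here-document, which avoids copy-and-paste mistakes in the JSON. A minimal sketch; `write_trust_policy` is a hypothetical helper, and the output path defaults to the file name used in this post.

```sh
#!/bin/sh
# write_trust_policy [PATH]  (hypothetical helper)
# Writes the ECS task execution trust policy shown above to PATH
# (default: task-execution-assume-role.json in the current directory).
write_trust_policy() {
  local path="${1:-task-execution-assume-role.json}"
  # Quoted 'EOF' delimiter: the JSON is written verbatim, with no shell expansion.
  cat > "$path" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "Service": "ecs-tasks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Usage:
#   write_trust_policy
```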

Create the task execution role if it does not already exist:

$ aws iam --region us-east-1 create-role --role-name ecsTaskExecutionRole --assume-role-policy-document file://task-execution-assume-role.json

Attach the task execution role policy:

$ aws iam --region us-east-1 attach-role-policy --role-name ecsTaskExecutionRole --policy-arn arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy
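Because `create-role` fails if the role already exists, the two commands above can be wrapped so they are safe to re-run. This sketch assumes the AWS CLI is configured with sufficient IAM permissions; `ensure_task_execution_role` is a hypothetical helper, and its existence check simply treats any `get-role` failure as "role missing".

```sh
#!/bin/sh
# ensure_task_execution_role [ROLE_NAME] [POLICY_FILE]  (hypothetical helper)
# Creates the role only if `aws iam get-role` cannot find it, then attaches
# the AWS-managed task execution policy.
ensure_task_execution_role() {
  local role="${1:-ecsTaskExecutionRole}" policy_file="${2:-task-execution-assume-role.json}"
  if aws iam get-role --role-name "$role" >/dev/null 2>&1; then
    echo "role $role already exists"
  else
    aws iam create-role --role-name "$role" \
      --assume-role-policy-document "file://$policy_file" >/dev/null || return 1
    echo "created role $role"
  fi
  # Re-attaching an already attached managed policy is, to our knowledge, a no-op.
  aws iam attach-role-policy --role-name "$role" \
    --policy-arn arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy
}

# Usage (run from the directory containing task-execution-assume-role.json):
#   ensure_task_execution_role
```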

Create an Amazon ECS cluster with the ecs-cli up command. Because we specified Fargate as our default launch type in the cluster configuration, this command creates an empty cluster and a VPC configured with two public subnets.

```
$ ecs-cli up --cluster wordpress
INFO[0001] Created cluster cluster=wordpress region=us-east-1
INFO[0002] Waiting for your cluster resources to be created...
INFO[0002] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS
INFO[0064] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS
VPC created: vpc-09495d1e00bb5e8de
Subnet created: subnet-0525e82a6ef9682dc
Subnet created: subnet-022fe5b9391d8148d
Cluster creation succeeded.
```

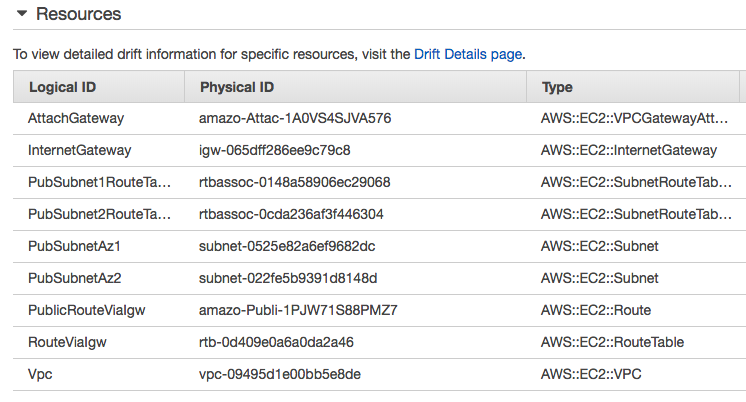

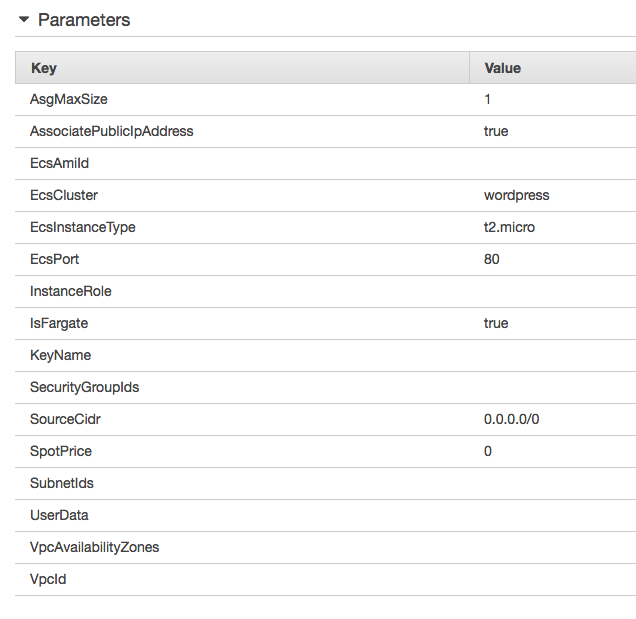

This command creates a new AWS CloudFormation stack called amazon-ecs-cli-setup-cluster_name (amazon-ecs-cli-setup-wordpress in our case). We can view the progress of the stack creation in the AWS Management Console.

Here are the resources created by the CloudFormation stack:

The resources were created using the following parameters:

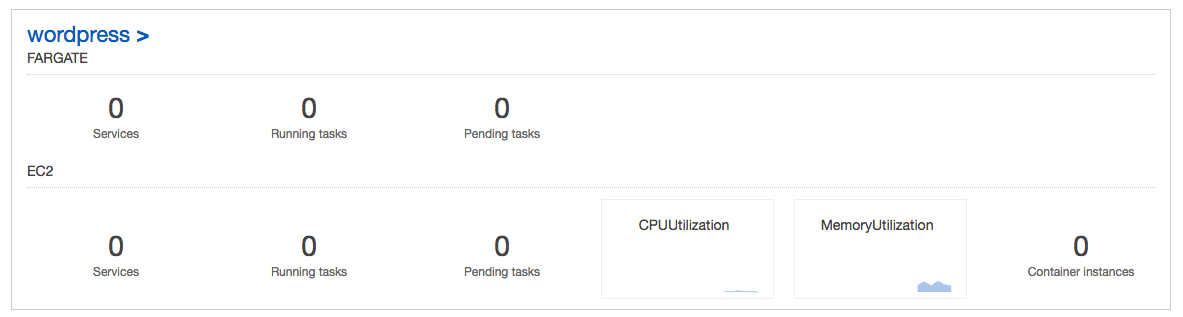

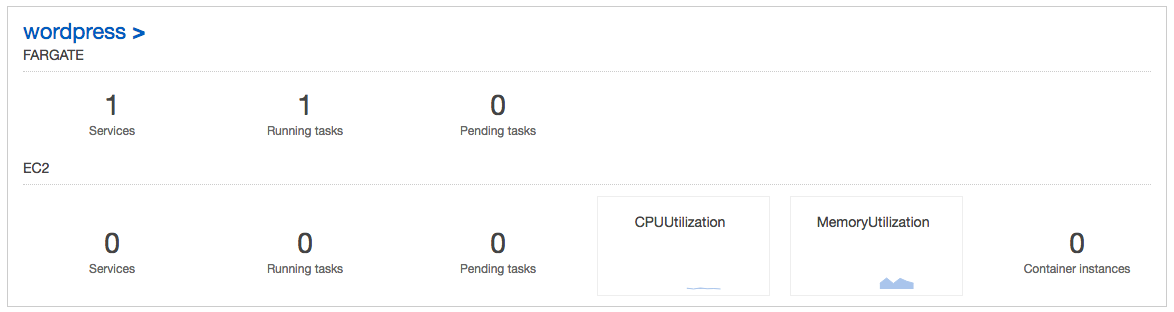
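Instead of copying the VPC and subnet IDs from the `ecs-cli up` output, they can be read back from the CloudFormation stack with `aws cloudformation describe-stack-resources` and a JMESPath `--query`. A sketch, assuming the stack name follows the pattern above (amazon-ecs-cli-setup-wordpress for our cluster); `stack_resource_ids` is a hypothetical helper.

```sh
#!/bin/sh
# stack_resource_ids RESOURCE_TYPE [STACK_NAME]  (hypothetical helper)
# Prints the physical IDs of all resources of RESOURCE_TYPE in the stack,
# e.g. AWS::EC2::VPC or AWS::EC2::Subnet. The default stack name assumes the
# amazon-ecs-cli-setup-<cluster_name> pattern with cluster_name=wordpress.
stack_resource_ids() {
  local type="$1" stack="${2:-amazon-ecs-cli-setup-wordpress}"
  aws cloudformation describe-stack-resources --stack-name "$stack" \
    --query "StackResources[?ResourceType=='$type'].PhysicalResourceId" \
    --output text
}

# Usage:
#   VPC_ID=$(stack_resource_ids AWS::EC2::VPC)
#   SUBNET_IDS=$(stack_resource_ids AWS::EC2::Subnet)   # tab-separated list
```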

We can see the empty cluster that's been created:

Using the AWS CLI, create a security group using the VPC ID from the previous output:

```
$ aws ec2 create-security-group --group-name "my-fargate-sg" --description "My Fargate security group" --vpc-id "vpc-09495d1e00bb5e8de"
{
    "GroupId": "sg-0998fddd5a8f96cf3"
}
```

Add a security group rule to allow inbound access on port 80:

$ aws ec2 authorize-security-group-ingress --group-id "sg-0998fddd5a8f96cf3" --protocol tcp --port 80 --cidr 0.0.0.0/0
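The two security-group commands can be combined so that the new group ID is captured directly instead of being copied from the JSON output. A sketch using the AWS CLI's `--query GroupId --output text`; `create_web_sg` is a hypothetical helper, and the group name and description default to the values used above.

```sh
#!/bin/sh
# create_web_sg VPC_ID [GROUP_NAME]  (hypothetical helper)
# Creates the security group, opens TCP port 80 to the world, and prints the
# new group ID so it can be captured in a variable.
create_web_sg() {
  local vpc_id="$1" name="${2:-my-fargate-sg}" sg_id
  sg_id=$(aws ec2 create-security-group --group-name "$name" \
    --description "My Fargate security group" --vpc-id "$vpc_id" \
    --query GroupId --output text) || return 1
  aws ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol tcp --port 80 --cidr 0.0.0.0/0 >/dev/null || return 1
  printf '%s\n' "$sg_id"
}

# Usage:
#   SG_ID=$(create_web_sg vpc-09495d1e00bb5e8de)
```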

For this step, we'll create a simple Docker Compose file for a WordPress application. Note that this minimal file defines only the WordPress web server container; it does not include a MySQL database service. At this time, the Amazon ECS CLI supports Docker Compose file syntax versions 1, 2, and 3.

Here is our compose file (docker-compose.yml) from Tutorial: Creating a Cluster with a Fargate Task Using the Amazon ECS CLI:

```yaml
version: '3'
services:
  wordpress:
    image: wordpress
    ports:
      - "80:80"
    logging:
      driver: awslogs
      options:
        awslogs-group: ecs-fargate-tutorial
        awslogs-region: us-east-1
        awslogs-stream-prefix: wordpress
```

The wordpress container exposes port 80 for inbound traffic to the web server. It also sends the container logs to the ecs-fargate-tutorial CloudWatch Logs group through the awslogs log driver, which is the recommended best practice for Fargate tasks.

In addition to the Docker compose information, there are some parameters specific to Amazon ECS that we must specify for the service. Using the VPC, subnet, and security group IDs from the previous step, create a file named ecs-params.yml with the following content:

```yaml
version: 1
task_definition:
  task_execution_role: ecsTaskExecutionRole
  ecs_network_mode: awsvpc
  task_size:
    mem_limit: 0.5GB
    cpu_limit: 256
run_params:
  network_configuration:
    awsvpc_configuration:
      subnets:
        - "subnet-0525e82a6ef9682dc"
        - "subnet-022fe5b9391d8148d"
      security_groups:
        - "sg-0998fddd5a8f96cf3"
      assign_public_ip: ENABLED
```
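If the subnet and security group IDs were captured in shell variables, ecs-params.yml can be generated rather than edited by hand. A sketch; `write_ecs_params` is a hypothetical helper, and the role name, task size, and public IP setting are copied from the file above.

```sh
#!/bin/sh
# write_ecs_params SUBNET_1 SUBNET_2 SG_ID [PATH]  (hypothetical helper)
# Generates ecs-params.yml with the given IDs. The EOF delimiter is unquoted,
# so the ${...} references below are expanded when the file is written.
write_ecs_params() {
  local subnet1="$1" subnet2="$2" sg="$3" path="${4:-ecs-params.yml}"
  cat > "$path" <<EOF
version: 1
task_definition:
  task_execution_role: ecsTaskExecutionRole
  ecs_network_mode: awsvpc
  task_size:
    mem_limit: 0.5GB
    cpu_limit: 256
run_params:
  network_configuration:
    awsvpc_configuration:
      subnets:
        - "${subnet1}"
        - "${subnet2}"
      security_groups:
        - "${sg}"
      assign_public_ip: ENABLED
EOF
}

# Usage:
#   write_ecs_params subnet-0525e82a6ef9682dc subnet-022fe5b9391d8148d sg-0998fddd5a8f96cf3
```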

After creating both files, we can deploy them to our cluster with ecs-cli compose service up. By default, the command looks for files called docker-compose.yml and ecs-params.yml in the current directory; we can specify a different Docker Compose file with the --file option and a different ECS params file with the --ecs-params option. By default, the resources created by this command are named after the current directory, but we can override that with the --project-name option. The --create-log-groups option creates the CloudWatch log groups for the container logs.

```
$ ecs-cli compose --project-name ecs-fargate service up --create-log-groups --cluster-config wordpress --cluster wordpress
INFO[0001] Using ECS task definition TaskDefinition="ecs-fargate:1"
INFO[0001] Created Log Group tutorial in us-east-1
INFO[0002] Created an ECS service service=ecs-fargate taskDefinition="ecs-fargate:1"
INFO[0002] Updated ECS service successfully desiredCount=1 force-deployment=false service=ecs-fargate
INFO[0065] Service status desiredCount=1 runningCount=1 serviceName=ecs-fargate
INFO[0065] ECS Service has reached a stable state desiredCount=1 runningCount=1 serviceName=ecs-fargate
```

After we deploy the compose file, we can view the containers that are running on our cluster with the ecs-cli ps command:

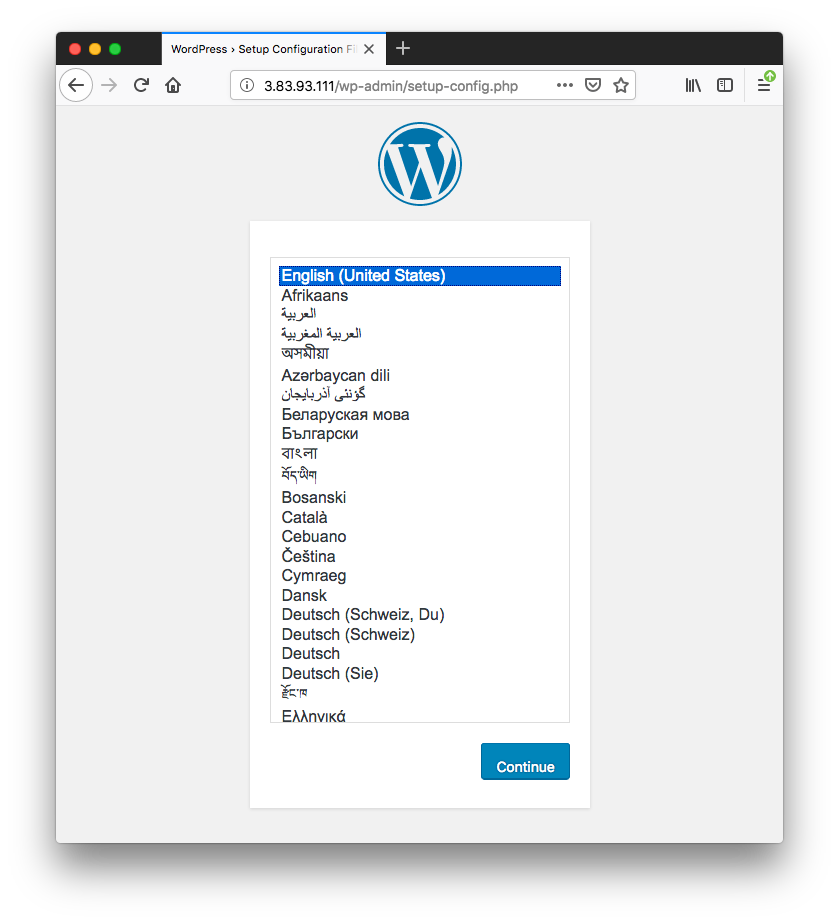

```
$ ecs-cli ps --cluster wordpress
Name State Ports TaskDefinition Health
6b26badc-9e80-47d2-9ba8-5b001bfd8903/wordpress RUNNING 3.83.93.111:80->80/tcp ecs-fargate:1 UNKNOWN
```

We can see the wordpress container from our compose file, along with the public IP address and port of the web server. If we point a web browser at that address, we should see the WordPress installation wizard.
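The address can also be pulled out of the Ports column programmatically. A minimal awk sketch, assuming the `ecs-cli ps` layout shown above (Name, State, Ports, ...) with one `IP:hostPort->containerPort/tcp` mapping per row; `task_urls` is a hypothetical helper.

```sh
#!/bin/sh
# task_urls  (hypothetical helper)
# Reads `ecs-cli ps` output on stdin and prints one URL per RUNNING task.
# Assumes the third column looks like 3.83.93.111:80->80/tcp.
task_urls() {
  awk '$2 == "RUNNING" {
    split($3, addr, ":")       # addr[1] = IP, addr[2] = "80->80/tcp"
    split(addr[2], port, "-")  # port[1] = host port
    print "http://" addr[1] ":" port[1] "/"
  }'
}

# Usage: print the HTTP status code returned by each task
#   ecs-cli ps --cluster wordpress | task_urls | while read -r url; do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```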

As discussed earlier, because each Fargate task has its own isolated networking stack, there is no need for dynamic ports to avoid port conflicts between different tasks as in other networking modes. The static ports make it easy for containers to communicate with each other.

To view the logs for the task:

```
$ ecs-cli logs --task-id 6b26badc-9e80-47d2-9ba8-5b001bfd8903 --follow --cluster-config wordpress --cluster wordpress
WordPress not found in /var/www/html - copying now...
Complete! WordPress has been successfully copied to /var/www/html
[Sun Dec 23 05:21:35.654929 2018] [mpm_prefork:notice] [pid 1] AH00163: Apache/2.4.25 (Debian) PHP/7.2.13 configured -- resuming normal operations
[Sun Dec 23 05:21:35.655441 2018] [core:notice] [pid 1] AH00094: Command line: 'apache2 -D FOREGROUND'
175.111.181.252 - - [23/Dec/2018:05:35:56 +0000] "GET / HTTP/1.0" 302 238 "-" "-"
178.141.81.115 - - [23/Dec/2018:05:45:04 +0000] "GET / HTTP/1.0" 302 238 "-" "-"
178.141.81.115 - - [23/Dec/2018:05:45:05 +0000] "GET / HTTP/1.0" 302 238 "-" "-"
178.141.81.115 - - [23/Dec/2018:05:45:07 +0000] "GET / HTTP/1.0" 302 238 "-" "-"
178.141.81.115 - - [23/Dec/2018:05:45:11 +0000] "GET / HTTP/1.0" 302 238 "-" "-"
108.239.135.40 - - [23/Dec/2018:05:51:02 +0000] "GET / HTTP/1.1" 302 288 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:63.0) Gecko/20100101 Firefox/63.0"
108.239.135.40 - - [23/Dec/2018:05:51:02 +0000] "GET /wp-admin/setup-config.php HTTP/1.1" 200 4204 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:63.0) Gecko/20100101 Firefox/63.0"
108.239.135.40 - - [23/Dec/2018:05:51:04 +0000] "GET /wp-includes/css/buttons.min.css?ver=5.0.2 HTTP/1.1" 200 1827 "http://3.83.93.111/wp-admin/setup-config.php" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:63.0) Gecko/20100101 Firefox/63.0"
...
```

The "--follow" option tells the Amazon ECS CLI to continuously poll for logs.

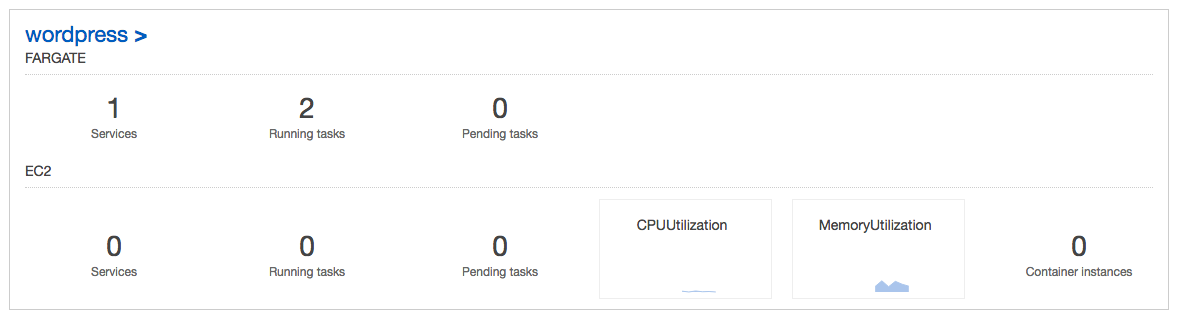

We can scale up our task count to increase the number of instances of our application with ecs-cli compose service scale. We'll increase the running count of the application to two:

```
$ ecs-cli compose --project-name ecs-fargate service scale 2 --cluster-config wordpress --cluster wordpress
INFO[0002] Updated ECS service successfully desiredCount=2 force-deployment=false service=ecs-fargate
INFO[0002] Service status desiredCount=2 runningCount=1 serviceName=ecs-fargate
INFO[0017] (service ecs-fargate) has started 1 tasks: (task d7277f34-0063-4e36-8af0-2e59cead1a37). timestamp="2018-12-23 06:31:51 +0000 UTC"
INFO[0048] Service status desiredCount=2 runningCount=2 serviceName=ecs-fargate
INFO[0048] (service ecs-fargate) has reached a steady state. timestamp="2018-12-23 06:32:30 +0000 UTC"
INFO[0048] ECS Service has reached a stable state desiredCount=2 runningCount=2 serviceName=ecs-fargate
```

Now we should see two containers (one per task) in our cluster:

```
$ ecs-cli compose --project-name ecs-fargate service ps --cluster-config wordpress --cluster wordpress
Name State Ports TaskDefinition Health
6b26badc-9e80-47d2-9ba8-5b001bfd8903/wordpress RUNNING 3.83.93.111:80->80/tcp ecs-fargate:1 UNKNOWN
d7277f34-0063-4e36-8af0-2e59cead1a37/wordpress RUNNING 3.80.8.126:80->80/tcp ecs-fargate:1 UNKNOWN
```

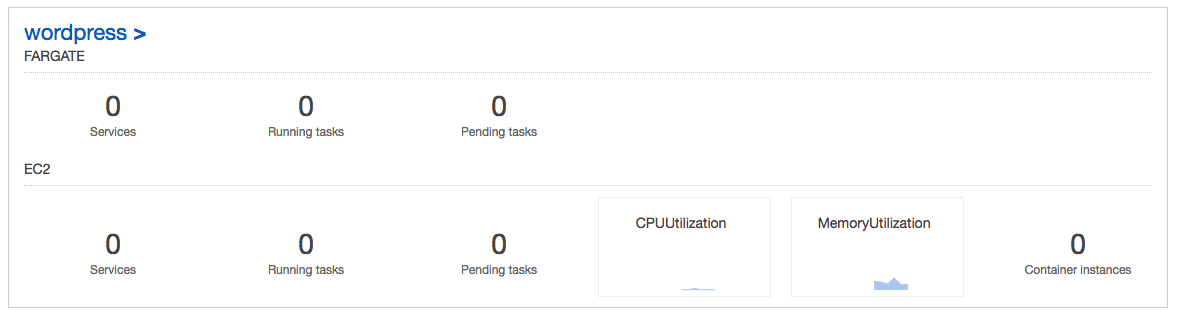
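`service scale` already waits for the service to reach a stable state, but in scripts it can be handy to poll until the expected number of tasks is RUNNING. A sketch that reuses the `service ps` command above; `service_ps`, `count_running`, and `wait_for_running` are hypothetical helper names, and the default retry count and interval are arbitrary choices.

```sh
#!/bin/sh
# service_ps  (hypothetical helper): the ps command used in this post.
service_ps() {
  ecs-cli compose --project-name ecs-fargate service ps \
    --cluster-config wordpress --cluster wordpress
}

# count_running: count rows whose State column is RUNNING (ps output on stdin).
count_running() {
  awk '$2 == "RUNNING" { n++ } END { print n + 0 }'
}

# wait_for_running DESIRED [MAX_TRIES] [SLEEP_SECONDS]
# Polls until at least DESIRED tasks are RUNNING; returns 1 on timeout.
wait_for_running() {
  local desired="$1" tries="${2:-30}" pause="${3:-10}" i=1 n
  while [ "$i" -le "$tries" ]; do
    n=$(service_ps | count_running)
    echo "attempt $i: $n/$desired tasks running"
    if [ "$n" -ge "$desired" ]; then
      return 0
    fi
    sleep "$pause"
    i=$((i + 1))
  done
  return 1
}

# Usage:
#   wait_for_running 2 || echo "service did not reach 2 running tasks"
```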

When we are finished, we should clean up our resources so they do not incur any more charges. First, delete the service so that it stops the existing containers and does not try to run any more tasks.

```
$ ecs-cli compose --project-name ecs-fargate service down --cluster-config wordpress --cluster wordpress
INFO[0006] Updated ECS service successfully desiredCount=0 force-deployment=false service=ecs-fargate
INFO[0006] Service status desiredCount=0 runningCount=2 serviceName=ecs-fargate
INFO[0021] Service status desiredCount=0 runningCount=0 serviceName=ecs-fargate
INFO[0021] ECS Service has reached a stable state desiredCount=0 runningCount=0 serviceName=ecs-fargate
INFO[0022] Deleted ECS service service=ecs-fargate
INFO[0022] ECS Service has reached a stable state desiredCount=0 runningCount=0 serviceName=ecs-fargate
```
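`ecs-cli down` only removes what its CloudFormation stack created. The security group we created with the AWS CLI and the CloudWatch log group created by `--create-log-groups` are outside that stack, and a VPC cannot be deleted while it still contains non-default security groups. Here is a hedged sketch of removing them after `service down` and before taking the cluster down; `cleanup_extras` is a hypothetical helper, and the default log group name is taken from `awslogs-group` in docker-compose.yml (adjust it if the log group was created under a different name).

```sh
#!/bin/sh
# cleanup_extras SG_ID [LOG_GROUP] [MAX_TRIES] [SLEEP_SECONDS]  (hypothetical helper)
# Deletes the manually created security group and the CloudWatch log group.
cleanup_extras() {
  local sg_id="$1" log_group="${2:-ecs-fargate-tutorial}" tries="${3:-10}" pause="${4:-15}" i=1
  # Task ENIs may take a short while to be released after the service stops,
  # so retry the security-group deletion a few times before giving up.
  until aws ec2 delete-security-group --group-id "$sg_id"; do
    if [ "$i" -ge "$tries" ]; then
      echo "error: could not delete $sg_id" >&2
      return 1
    fi
    sleep "$pause"
    i=$((i + 1))
  done
  aws logs delete-log-group --log-group-name "$log_group"
}

# Usage:
#   cleanup_extras sg-0998fddd5a8f96cf3
```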

Now, take down our cluster, which cleans up the resources that we created earlier with ecs-cli up:

```
$ ecs-cli down --force --cluster-config wordpress --cluster wordpress
INFO[0001] Waiting for your cluster resources to be deleted...
INFO[0002] Cloudformation stack status stackStatus=DELETE_IN_PROGRESS
INFO[0063] Cloudformation stack status stackStatus=DELETE_IN_PROGRESS
INFO[0124] Cloudformation stack status stackStatus=DELETE_IN_PROGRESS
INFO[0186] Cloudformation stack status stackStatus=DELETE_IN_PROGRESS
```

The command deletes the CloudFormation stack along with the resources it created.


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization