Kubernetes III - kubeadm on AWS

This tutorial is based on the "Installing Kubernetes on Linux with kubeadm" guide. It shows how to install a Kubernetes 1.6 cluster on machines running Ubuntu 16.04 using kubeadm, a tool that is part of Kubernetes.

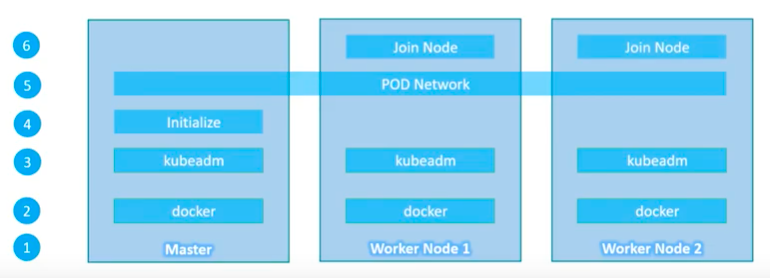

The picture below shows the steps how we setup Kubernetes clusters via the kubeadm:

Picture source : Kubernetes for the Absolute Beginners - Setup Kubernetes - kubeadm

Note the difference between kubeadm and minikube: minikube lets us set up only a single node, while kubeadm lets us set up multiple nodes, for example on VirtualBox VMs on our local machine.

In this post, we'll use two AWS EC2 instances (public IP, with the private IP in parentheses):

- master: 54.88.23.51 (172.31.60.33)

- node: 54.172.245.224 (172.31.41.29)

We'll do the following:

- Install a secure Kubernetes cluster on our machines.

- Install a pod network on the cluster so that application components (pods) can talk to each other.

- Install a sample microservices application (a socks shop) on the cluster.

Here is a quick recap of the basic Kubernetes terms:

- Nodes: hosts that run Kubernetes applications

- Containers: units of packaging

- Pods: units of deployment, each a collection of containers

- Replication Controller: ensures availability and scalability

- Labels: key-value pairs for identification

- Services: collections of pods exposed as an endpoint

We will install the following packages on all the machines:

- docker: the container runtime, which Kubernetes depends on. v1.12 is recommended, but v1.10 and v1.11 are known to work as well. v1.13 and 17.03+ have not yet been tested and verified by the Kubernetes node team.

- kubelet: the core component of Kubernetes. It runs on all of the machines in our cluster and does things like starting pods and containers.

- kubectl: the command to control the cluster once it's running. We will only need this on the master, but it can be useful to have on the other nodes as well.

- kubeadm: the command to bootstrap the cluster.

On each host, run the following commands:

    ubuntu@ip-172-31-60-33:~$ sudo su
    root@ip-172-31-60-33:/home/ubuntu# apt-get update && apt-get install -y apt-transport-https
    root@ip-172-31-60-33:/home/ubuntu# curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
    root@ip-172-31-60-33:/home/ubuntu# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
    deb http://apt.kubernetes.io/ kubernetes-xenial main
    EOF
    root@ip-172-31-60-33:/home/ubuntu# apt-get update
    root@ip-172-31-60-33:/home/ubuntu# apt-get install -y docker.io
    root@ip-172-31-60-33:/home/ubuntu# apt-get install -y kubelet kubeadm kubectl kubernetes-cni
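Before moving on, it can help to confirm on each host that the installs actually put the tools on the PATH. The following is a minimal sketch; `check_tools` is a hypothetical helper name, not part of kubeadm or apt:

```shell
# check_tools is a hypothetical helper (not part of Kubernetes): it reports
# every named command that cannot be found on PATH.
# Returns 0 if all commands are found, 1 if any are missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example (on each host, after the apt-get installs above):
#   check_tools docker kubelet kubeadm kubectl && echo "all tools installed"
```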

The kubelet is now restarting every few seconds, as it waits in a crashloop for kubeadm to tell it what to do.

The master is the machine where the control plane components run, including etcd (the cluster database) and the API server (which the kubectl CLI communicates with).

To initialize the master, pick one of the machines we previously installed kubeadm on, and run:

    root@ip-172-31-60-33:/home/ubuntu# kubeadm init
    [kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
    [init] Using Kubernetes version: v1.6.0
    [init] Using Authorization mode: RBAC
    [preflight] Running pre-flight checks
    [certificates] Generated CA certificate and key.
    [certificates] Generated API server certificate and key.
    [certificates] API Server serving cert is signed for DNS names [ip-172-31-60-33 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.31.60.33]
    [certificates] Generated API server kubelet client certificate and key.
    [certificates] Generated service account token signing key and public key.
    [certificates] Generated front-proxy CA certificate and key.
    [certificates] Generated front-proxy client certificate and key.
    [certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
    [apiclient] Created API client, waiting for the control plane to become ready
    [apiclient] All control plane components are healthy after 29.327806 seconds
    [apiclient] Waiting for at least one node to register
    [apiclient] First node has registered after 3.502761 seconds
    [token] Using token: 918ba6.e2cb11b266dced53
    [apiconfig] Created RBAC rules
    [addons] Created essential addon: kube-proxy
    [addons] Created essential addon: kube-dns

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run (as a regular user):

      sudo cp /etc/kubernetes/admin.conf $HOME/
      sudo chown $(id -u):$(id -g) $HOME/admin.conf
      export KUBECONFIG=$HOME/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      http://kubernetes.io/docs/admin/addons/

    You can now join any number of machines by running the following on each node as root:

      kubeadm join --token 918ba6.e2cb11b266dced53 172.31.60.33:6443

kubeadm init will first run a series of prechecks to ensure that the machine is ready to run Kubernetes. It will print warnings and exit on errors. It will then download and install the cluster database and "control plane" components. This may take several minutes.

Make a record of the kubeadm join command that kubeadm init outputs. We will need this in a moment. The token is used for mutual authentication between the master and the joining nodes. These tokens can be listed, created and deleted with the kubeadm token command.
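Because the join command is printed only once, one option is to capture the whole kubeadm init output in a file and pull the command out later. A minimal sketch, assuming the output was saved with `tee`; the file name kubeadm-init.log and the `extract_join_cmd` helper are hypothetical:

```shell
# extract_join_cmd is a hypothetical helper: it reads saved `kubeadm init`
# output on stdin and prints everything from "kubeadm join" to the end of
# that line, dropping any leading indentation.
extract_join_cmd() {
  sed -n 's/.*\(kubeadm join .*\)$/\1/p'
}

# Example (on the master, as root):
#   kubeadm init 2>&1 | tee kubeadm-init.log
#   extract_join_cmd < kubeadm-init.log
```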

To start using our cluster, we need to run the following as a regular user:

    root@ip-172-31-60-33:/home/ubuntu# exit
    exit
    ubuntu@ip-172-31-60-33:~$ sudo cp /etc/kubernetes/admin.conf $HOME/
    ubuntu@ip-172-31-60-33:~$ sudo chown $(id -u):$(id -g) $HOME/admin.conf
    ubuntu@ip-172-31-60-33:~$ export KUBECONFIG=$HOME/admin.conf
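The export above only lasts for the current shell session. One way to make it persistent is to append it to the user's ~/.bashrc, and the sketch below does that idempotently. `append_once` is a hypothetical helper, and using ~/.bashrc assumes bash is the login shell (the Ubuntu default):

```shell
# append_once is a hypothetical helper: it appends LINE to FILE only if FILE
# does not already contain that exact line, so re-running it is harmless.
append_once() {
  line=$1
  file=$2
  # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as the regular ubuntu user; single quotes keep $HOME unexpanded
# so it is resolved each time a new shell starts):
#   append_once 'export KUBECONFIG=$HOME/admin.conf' "$HOME/.bashrc"
```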

Let's check the version of kubectl:

    $ kubectl version
    Client Version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.1", GitCommit:"b0b7a323cc5a4a2019b2e9520c21c7830b7f708e", GitTreeState:"clean", BuildDate:"2017-04-03T20:44:38Z", GoVersion:"go1.7.5", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.0", GitCommit:"fff5156092b56e6bd60fff75aad4dc9de6b6ef37", GitTreeState:"clean", BuildDate:"2017-03-28T16:24:30Z", GoVersion:"go1.7.5", Compiler:"gc", Platform:"linux/amd64"}

We must install a pod network add-on so that our pods can communicate with each other.

The network must be deployed before any applications. Also, kube-dns, a helper service, will not start until a network is installed. kubeadm only supports CNI-based networks (it does not support kubenet).

We can install a pod network add-on with the following command on master:

    kubectl apply -f <add-on.yaml>

Weave Net can be installed onto our CNI-enabled Kubernetes cluster with a single command:

    ubuntu@ip-172-31-60-33:~$ kubectl apply -f https://git.io/weave-kube-1.6
    clusterrole "weave-net" created
    serviceaccount "weave-net" created
    clusterrolebinding "weave-net" created
    daemonset "weave-net" created

After a few seconds, a Weave Net pod should be running on each Node and any further pods we create will be automatically attached to the Weave network.

We should only install one pod network per cluster.

Once a pod network has been installed, we can confirm that it is working by checking that the kube-dns pod is Running in the output of kubectl get pods --all-namespaces:

    ubuntu@ip-172-31-60-33:~$ watch kubectl get pods --all-namespaces
    Every 2.0s: kubectl get pods --all-namespaces                 Wed Apr 12 22:11:50 2017

    NAMESPACE     NAME                                      READY     STATUS    RESTARTS   AGE
    kube-system   etcd-ip-172-31-60-33                      1/1       Running   0          22m
    kube-system   kube-apiserver-ip-172-31-60-33            1/1       Running   0          21m
    kube-system   kube-controller-manager-ip-172-31-60-33   1/1       Running   0          21m
    kube-system   kube-dns-3913472980-ng8td                 3/3       Running   0          22m
    kube-system   kube-proxy-8chjv                          1/1       Running   0          22m
    kube-system   kube-proxy-8cq1c                          1/1       Running   0          3m
    kube-system   kube-scheduler-ip-172-31-60-33            1/1       Running   0          22m
    kube-system   weave-net-cl6gp                           2/2       Running   0          18m
    kube-system   weave-net-zdh3x                           2/2       Running   0          3m

We can see that the weave-net pods are running.
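Instead of eyeballing the watch output, we can filter it for the pods we care about. A minimal sketch that reads the plain kubectl get pods --all-namespaces table on stdin; `pod_status` is a hypothetical helper, and it assumes the column order shown above (NAMESPACE NAME READY STATUS RESTARTS AGE):

```shell
# pod_status is a hypothetical helper: given a pod-name prefix, it prints
# "<name> <status>" for every matching pod in `kubectl get pods --all-namespaces`
# output read from stdin. NAME is field 2 and STATUS is field 4.
pod_status() {
  awk -v prefix="$1" 'NR > 1 && index($2, prefix) == 1 { print $2, $4 }'
}

# Example (on the master):
#   kubectl get pods --all-namespaces | pod_status kube-dns-
#   kubectl get pods --all-namespaces | pod_status weave-net-
```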

Once the kube-dns pod is up and running, we can continue by joining our nodes.

The nodes are where our workloads (containers, pods, etc.) run. To add new nodes to our cluster, run the following on each machine other than the master:

    # kubeadm join --token <token> <master-ip>:<master-port>
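If the printed join command has been lost, it can be reassembled from a token and the master's address. The sketch below also sanity-checks the token against the `[a-z0-9]{6}.[a-z0-9]{16}` shape of kubeadm bootstrap tokens (the token used in this post, 918ba6.e2cb11b266dced53, follows it). `join_cmd` is a hypothetical helper; the token itself would still come from the kubeadm token command on the master:

```shell
# join_cmd is a hypothetical helper: it validates the token's shape and
# prints the matching `kubeadm join` command for a worker node.
# Usage: join_cmd <token> <master-ip>:<master-port>
join_cmd() {
  token=$1
  endpoint=$2
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "unexpected token format: $token" >&2
    return 1
  fi
  printf 'kubeadm join --token %s %s\n' "$token" "$endpoint"
}

# Example:
#   join_cmd 918ba6.e2cb11b266dced53 172.31.60.33:6443
```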

In our case, we copy the exact command from the kubeadm init output and run it as root on the node:

    root@ip-172-31-41-29:/home/ubuntu# kubeadm join --token 918ba6.e2cb11b266dced53 172.31.60.33:6443
    [kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
    [preflight] Running pre-flight checks
    [discovery] Trying to connect to API Server "172.31.60.33:6443"
    [discovery] Created cluster-info discovery client, requesting info from "https://172.31.60.33:6443"
    [discovery] Cluster info signature and contents are valid, will use API Server "https://172.31.60.33:6443"
    [discovery] Successfully established connection with API Server "172.31.60.33:6443"
    [bootstrap] Detected server version: v1.6.0
    [bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)
    [csr] Created API client to obtain unique certificate for this node, generating keys and certificate signing request
    [csr] Received signed certificate from the API server, generating KubeConfig...
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

    Node join complete:
    * Certificate signing request sent to master and response received.
    * Kubelet informed of new secure connection details.

    Run 'kubectl get nodes' on the master to see this machine join.

To see this machine join, let's run the following on the master:

    ubuntu@ip-172-31-60-33:~$ kubectl get nodes
    NAME              STATUS    AGE       VERSION
    ip-172-31-41-29   Ready     32s       v1.6.1
    ip-172-31-60-33   Ready     19m       v1.6.1
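When more nodes are added, a one-line summary is easier to check than the full table. A minimal sketch; `ready_nodes` is a hypothetical helper that assumes STATUS is the second column, as in the output above:

```shell
# ready_nodes is a hypothetical helper: it reads `kubectl get nodes` output on
# stdin and prints "<ready>/<total>", counting rows whose STATUS is exactly "Ready".
ready_nodes() {
  awk 'NR > 1 { total++; if ($2 == "Ready") ready++ }
       END { printf "%d/%d\n", ready, total }'
}

# Example (on the master):
#   kubectl get nodes | ready_nodes
```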

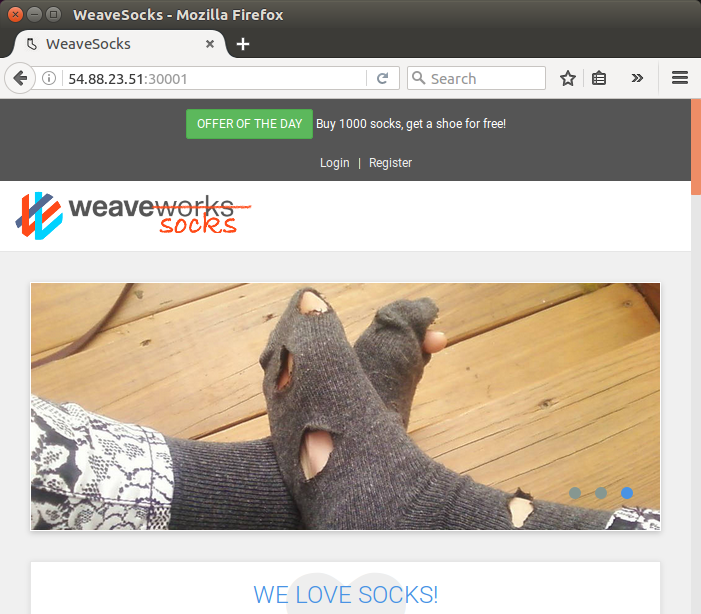

Now it is time to check whether our new cluster is working. Sock Shop is a sample microservices application that shows how to run and connect a set of services on Kubernetes. First, we create a namespace for it:

    ubuntu@ip-172-31-60-33:~$ kubectl create namespace sock-shop
    namespace "sock-shop" created

Then, we apply the manifest to install all the components of Sock Shop:

    ubuntu@ip-172-31-60-33:~$ kubectl apply -n sock-shop -f "https://github.com/microservices-demo/microservices-demo/blob/master/deploy/kubernetes/complete-demo.yaml?raw=true"
    Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
    namespace "sock-shop" configured
    namespace "zipkin" created
    deployment "carts-db" created
    service "carts-db" created
    deployment "carts" created
    service "carts" created
    deployment "catalogue-db" created
    service "catalogue-db" created
    deployment "catalogue" created
    service "catalogue" created
    deployment "front-end" created
    service "front-end" created
    deployment "orders-db" created
    service "orders-db" created
    deployment "orders" created
    service "orders" created
    deployment "payment" created
    service "payment" created
    deployment "queue-master" created
    service "queue-master" created
    deployment "rabbitmq" created
    service "rabbitmq" created
    deployment "shipping" created
    service "shipping" created
    deployment "user-db" created
    service "user-db" created
    deployment "user" created
    service "user" created
    the namespace from the provided object "zipkin" does not match the namespace "sock-shop". You must pass '--namespace=zipkin' to perform this operation.
    the namespace from the provided object "zipkin" does not match the namespace "sock-shop". You must pass '--namespace=zipkin' to perform this operation.
    the namespace from the provided object "zipkin" does not match the namespace "sock-shop". You must pass '--namespace=zipkin' to perform this operation.
    the namespace from the provided object "zipkin" does not match the namespace "sock-shop". You must pass '--namespace=zipkin' to perform this operation.
    the namespace from the provided object "zipkin" does not match the namespace "sock-shop". You must pass '--namespace=zipkin' to perform this operation.

We can then find out which port the NodePort feature of services allocated for the front-end service by running:

    ubuntu@ip-172-31-60-33:~$ kubectl -n sock-shop get svc front-end
    NAME        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    front-end   10.97.82.211   <nodes>       80:30001/TCP   8m
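To use the port in a script, we can pull it out of the PORT(S) column, where an entry like 80:30001/TCP means service port 80 is exposed on node port 30001. A minimal sketch; `node_port` is a hypothetical helper. kubectl's `-o jsonpath` output can also return the field directly, as shown in the usage comment:

```shell
# node_port is a hypothetical helper: it reads `kubectl get svc` output on
# stdin and prints the first node port found in a field shaped like
# <port>:<nodePort>/<protocol>, e.g. 30001 from 80:30001/TCP.
node_port() {
  awk 'NR > 1 {
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^[0-9]+:[0-9]+\//) {
        split($i, parts, "[:/]")
        print parts[2]
        exit
      }
    }
  }'
}

# Example (on the master):
#   kubectl -n sock-shop get svc front-end | node_port
# or, without text parsing:
#   kubectl -n sock-shop get svc front-end -o jsonpath='{.spec.ports[0].nodePort}'
```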

It takes several minutes to download and start all the containers. Watch the output of kubectl get pods -n sock-shop to see when they are all up and running:

    ubuntu@ip-172-31-60-33:~$ kubectl get pods -n sock-shop
    NAME                            READY     STATUS    RESTARTS   AGE
    carts-153328538-dt7hc           1/1       Running   0          13m
    carts-db-4256839670-cph4g       1/1       Running   0          13m
    catalogue-114596073-1rt4p       1/1       Running   0          13m
    catalogue-db-1956862931-v87jr   1/1       Running   0          13m
    front-end-3570328172-l2m00      1/1       Running   0          13m
    orders-2365168879-93fpx         1/1       Running   0          13m
    orders-db-836712666-pfnmq       1/1       Running   0          13m
    payment-1968871107-3pdtd        1/1       Running   0          13m
    queue-master-2798459664-p8vzt   1/1       Running   0          13m
    rabbitmq-3429198581-n25zq       1/1       Running   0          13m
    shipping-2899287913-749pj       1/1       Running   0          13m
    user-468431046-rhtq2            1/1       Running   0          13m
    user-db-1166754267-f6gd2        1/1       Running   0          13m
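Rather than re-running the command by hand, a small filter can list the pods that are not yet Running and succeed only when every pod is. A minimal sketch; `all_running` is a hypothetical helper that checks only the STATUS column and assumes the namespace-scoped column order shown above (NAME READY STATUS RESTARTS AGE):

```shell
# all_running is a hypothetical helper: it reads `kubectl get pods -n <ns>`
# output on stdin, prints "<name> <status>" for each pod whose STATUS is not
# "Running", and exits 0 only if every pod is Running.
all_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3; bad = 1 }
       END { exit bad }'
}

# Example (on the master): poll every 10 seconds until all pods are Running.
#   until kubectl get pods -n sock-shop | all_running; do sleep 10; done
```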

Then open the public IP address of our cluster's master node in a browser, with the given port: http://<master_ip>:<port>. In the example above the port was 30001 (so http://54.88.23.51:30001), but the port may be different.

If there is a firewall (on AWS, the instances' security group), make sure it exposes this port to the internet before trying to access it.
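Once the port is open, the front end can also be checked from any machine with curl instead of a browser. A minimal sketch; `front_end_url` and `check_front_end` are hypothetical helpers, and the 10-second timeout is an arbitrary choice:

```shell
# front_end_url is a hypothetical helper: build the Sock Shop URL from the
# master's public IP and the NodePort.
front_end_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}

# check_front_end is a hypothetical helper: print the HTTP status code that
# curl receives from the front end (000 if the connection fails or times out).
check_front_end() {
  url=$(front_end_url "$1" "$2")
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "$url"
}

# Example with the values from this post (the port may differ):
#   check_front_end 54.88.23.51 30001
```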

To uninstall Sock Shop, run kubectl delete namespace sock-shop on the master.
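Namespace deletion is asynchronous: the namespace shows as Terminating while its objects are removed. A minimal sketch for waiting until it is gone; `retry` is a hypothetical helper, and the attempt count and delay are arbitrary. The apply output above also reported namespace "zipkin" created, so that namespace may be left behind and can be deleted the same way.

```shell
# retry is a hypothetical helper: run a command up to ATTEMPTS times,
# sleeping DELAY seconds between attempts, until it succeeds.
# Returns 0 on the first success, 1 if every attempt failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$n" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    n=$((n + 1))
  done
  return 1
}

# Example (on the master): delete the namespace, then wait until
# `kubectl get namespace sock-shop` no longer finds it.
#   kubectl delete namespace sock-shop
#   retry 30 10 sh -c '! kubectl get namespace sock-shop >/dev/null 2>&1'
```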
