Docker - AWS ECS service discovery with Flask and Redis

In this post, we build a simple Python web application running on Docker Compose. The application uses Flask and maintains a hit counter in Redis.

Before we move on to ECS, in this section, we'll play with our app via docker-compose on our local machine.

First, we'll deploy the app to two containers, and then we'll mount a local volume into the container so that we can update our app on the fly.

./app.py:

import time

import redis
from flask import Flask

app = Flask(__name__)
cache = redis.Redis(host='redis', port=6379)


def get_hit_count():
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)


@app.route('/')
def hello():
    count = get_hit_count()
    return 'Hello World! I have been seen {} times.\n'.format(count)


if __name__ == "__main__":
    app.run(host="0.0.0.0", debug=True)

In the sample code, redis is the hostname of the redis container on the application’s network. We use the default port for Redis, 6379.
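The retry loop in get_hit_count() can be tried without a running Redis server. The following is a minimal sketch, not the post's code: FlakyCache is a hypothetical stand-in for redis.Redis that fails a fixed number of times, and it raises Python's built-in ConnectionError instead of redis.exceptions.ConnectionError so that the block has no third-party dependencies.

```python
import time


class FlakyCache:
    """Hypothetical stand-in for redis.Redis: fails a few times, then works."""

    def __init__(self, failures):
        self.failures = failures
        self.hits = 0

    def incr(self, key):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("redis is not ready yet")
        self.hits += 1
        return self.hits


def get_hit_count(cache, retries=5, delay=0.5):
    """Same retry shape as app.py: retry up to `retries` times, then re-raise."""
    while True:
        try:
            return cache.incr('hits')
        except ConnectionError:
            if retries == 0:
                raise
            retries -= 1
            time.sleep(delay)
```

With failures=2 the call sleeps twice and then returns 1. With more than five failures it re-raises the last error, which is what app.py does when Redis never comes up.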

./Dockerfile:

FROM python:3.7-alpine
ADD . /code
WORKDIR /code
RUN pip install -r requirements.txt
CMD ["python", "app.py"]

This tells Docker to do the following:

- build an image starting with the Python 3.7 image.

- add the current directory . into the path /code in the image.

- set the working directory to /code. Note that the WORKDIR instruction sets the working directory for any RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow it in the Dockerfile.

- install the Python dependencies.

- set the default command for the container to python app.py.

A small detail that may help later: we can replace:

CMD ["python", "app.py"]

with:

ENTRYPOINT ["python"]
CMD ["app.py"]

OK. Now that the Dockerfile for our Flask app is ready, we define two services in a Compose file, docker-compose.yaml:

version: '3'
services:
  web:
    build: .
    ports:
      - "5000:5000"
  redis:
    image: "redis:alpine"

This Compose file defines two services, web and redis.

The web service:

- Uses an image that's built from the Dockerfile in the current directory.

- Forwards the exposed port 5000 on the container to port 5000 on the host machine. We use the default port for the Flask web server, 5000.

The redis service:

- uses a public Redis image pulled from the Docker Hub registry.

From our project directory (.), start up our application by running docker-compose up.

compose
├── Dockerfile
├── app.py
├── docker-compose.yaml
└── requirements.txt

$ docker-compose up
Creating compose_redis_1 ... done
Creating compose_web_1   ... done
Attaching to compose_redis_1, compose_web_1
redis_1  | 1:C 31 Mar 2019 00:14:55.371 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
redis_1  | 1:C 31 Mar 2019 00:14:55.371 # Redis version=5.0.4, bits=64, commit=00000000, modified=0, pid=1, just started
redis_1  | 1:C 31 Mar 2019 00:14:55.371 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
redis_1  | 1:M 31 Mar 2019 00:14:55.373 * Running mode=standalone, port=6379.
redis_1  | 1:M 31 Mar 2019 00:14:55.373 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
redis_1  | 1:M 31 Mar 2019 00:14:55.373 # Server initialized
redis_1  | 1:M 31 Mar 2019 00:14:55.373 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.
redis_1  | 1:M 31 Mar 2019 00:14:55.373 * Ready to accept connections
web_1    |  * Serving Flask app "app" (lazy loading)
web_1    |  * Environment: production
web_1    |    WARNING: Do not use the development server in a production environment.
web_1    |    Use a production WSGI server instead.
web_1    |  * Debug mode: on
web_1    |  * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)
web_1    |  * Restarting with stat
web_1    |  * Debugger is active!
web_1    |  * Debugger PIN: 281-524-074

Compose pulls a Redis image, builds an image for our code, and starts the services (web and redis) we defined. In this case, the code is statically copied into the image at build time:

$ docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED         STATUS         PORTS                    NAMES
bd02dcee62f7   redis:alpine   "docker-entrypoint.s…"   2 minutes ago   Up 2 minutes   6379/tcp                 compose_redis_1
31b8f8dc116f   compose_web    "python app.py"          2 minutes ago   Up 2 minutes   0.0.0.0:5000->5000/tcp   compose_web_1

$ docker exec -it 31b8f8dc116f /bin/sh
/code # ls
Dockerfile        app.py            requirements.txt

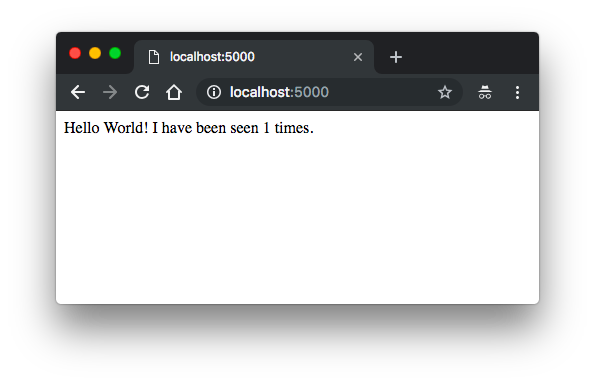

The web app should now be listening on port 5000 on our Docker daemon host. Enter http://0.0.0.0:5000/ in a browser to see the application running:

Refresh the page. The number should increment:

Hello World! I have been seen 2 times.
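To check the counter from a script rather than the browser, something like the following could be used. It is a sketch under assumptions: it expects the app to be reachable at http://localhost:5000/ (adjust as needed), and the helper names fetch_body and extract_count are made up for this example.

```python
import re
import urllib.request


def fetch_body(url="http://localhost:5000/"):
    """Return the response body of the Flask app as text."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def extract_count(body):
    """Pull the hit count out of 'I have been seen N times.'; None if absent."""
    match = re.search(r"seen (\d+) times", body)
    return int(match.group(1)) if match else None
```

Calling extract_count(fetch_body()) twice in a row should return two consecutive numbers while the Compose stack is up.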

Stop the application, either by running docker-compose down from within our project directory, or by hitting CTRL+C.

$ docker-compose down
Stopping compose_redis_1 ... done
Stopping compose_web_1   ... done
Removing compose_redis_1 ... done
Removing compose_web_1   ... done
Removing network compose_default

With the current setup, whenever we modify our code (app.py), we need to rebuild the app image. Let's mount a volume via the volumes key in docker-compose.yaml:

version: '3'
services:
  web:
    build:
      context: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
  redis:
    image: "redis:alpine"

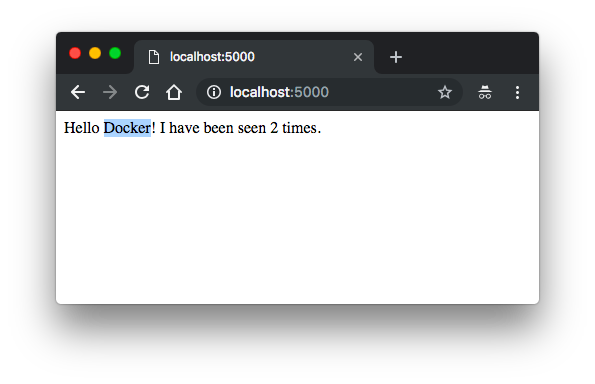

We added a bind mount for the web service. The new volumes key mounts the project directory (current directory, '.') on the host to /code inside the container, allowing us to modify the code on the fly, without having to rebuild the image.

Let's re-build and run the app with Compose:

Hello World! I have been seen 1 times.

Now that the application code is mounted into the container using a volume, we can make changes to its code and see the changes instantly, without having to rebuild the image.

Change the greeting in app.py on our local machine and save it. For example, change the "Hello World!" message to "Hello from Docker!":

We may see the following message from the terminal:

...
web_1    |  * Detected change in '/code/app.py', reloading
web_1    |  * Restarting with stat
web_1    |  * Debugger is active!
web_1    |  * Debugger PIN: 147-007-146

If we want to run our services in the background, we can pass the -d flag (for "detached" mode) to docker-compose up and use docker-compose ps to see what is currently running:

$ docker-compose down
Stopping compose_redis_1 ... done
Stopping compose_web_1   ... done
Removing compose_redis_1 ... done
Removing compose_web_1   ... done
Removing network compose_default

$ docker-compose up -d
Creating network "compose_default" with the default driver
Creating compose_redis_1 ... done
Creating compose_web_1   ... done

$ docker-compose ps
     Name                    Command               State           Ports
---------------------------------------------------------------------------------
compose_redis_1   docker-entrypoint.sh redis ...   Up      6379/tcp
compose_web_1     python app.py                    Up      0.0.0.0:5000->5000/tcp

If we started Compose with docker-compose up -d, stop our services once we've finished with them:

$ docker-compose stop
Stopping compose_web_1   ... done
Stopping compose_redis_1 ... done

We can bring everything down, removing the containers entirely, with the down command:

$ docker-compose down
Removing compose_web_1   ... done
Removing compose_redis_1 ... done
Removing network compose_default

Compose, by default, sets up a single network for our app. Each container for a service joins the default network and is both reachable by other containers on that network and discoverable by them at a hostname identical to the service name (web or redis here).

$ docker network ls
NETWORK ID          NAME                DRIVER              SCOPE
89b79dfc6a7e        bridge              bridge              local
5697c2c84486        compose_default     bridge              local

The network name, 'compose_default', is a combination of the project name (here, 'compose') and 'default'. Compose created it for us. If our project name (by default, the folder name) were 'base', Compose would create "base_default" instead.
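The naming rule is simple enough to write down as a function. This is only an illustration of the rule described above, with a made-up helper name; Compose may normalize unusual folder names, and the project name can also be set explicitly (for example with the -p flag), so treat the result as the expected name rather than a guarantee.

```python
def compose_network_name(project_name, network_key="default"):
    """Expected Docker network name for a network key in a Compose project."""
    return "{}_{}".format(project_name, network_key)
```

For the project in this post, compose_network_name("compose") gives "compose_default", matching the docker network ls output.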

Consider our docker-compose.yaml again. When we run docker-compose up, the steps listed after the file take place:

version: '3'
services:
  web:
    build:
      context: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
  redis:
    image: "redis:alpine"

- A network called compose_default is created.

- A container is created using web's configuration. It joins the network compose_default under the name web.

- A container is created using redis' configuration. It joins the network compose_default under the name redis.

In other words, each container can now look up the hostname web or redis and get back the appropriate container’s IP address.
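The name lookup can be checked from inside the web container (for example by running docker exec into it and starting python). A minimal sketch using only the standard library; the helper name lookup is made up here:

```python
import socket


def lookup(hostname):
    """Return the IPv4 address for hostname, or None if it does not resolve."""
    try:
        return socket.gethostbyname(hostname)
    except socket.gaierror:
        return None
```

Inside a container on compose_default, lookup('redis') should return the redis container's address on that network. On the host, where that name is normally not defined, it would typically return None.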

If we want to define the network explicitly, the following gives us the same network and driver (bridge):

version: '3'
services:
  web:
    build:
      context: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
  redis:
    image: "redis:alpine"
networks:
  default:
    driver: bridge

$ docker-compose up
Creating network "compose_default" with driver "bridge"
Creating compose_web_1   ... done
Creating compose_redis_1 ... done
...

We can create our own network and use it with the following Compose file:

version: '3'
services:
  web:
    build:
      context: .
    ports:
      - "5000:5000"
    volumes:
      - .:/code
    networks:
      - my-network
  redis:
    image: "redis:alpine"
    networks:
      - my-network
networks:
  my-network:
    driver: bridge

$ docker-compose up
Creating network "compose_my-network" with driver "bridge"
Creating compose_web_1   ... done
Creating compose_redis_1 ... done
...

Instead of just using the default app network, we can specify custom networks with the top-level networks key (this may require plugins installed). This lets us create more complex topologies and specify custom network drivers and options. We can also use it to connect services to externally-created networks which aren't managed by Compose.

.
├── Dockerfile
├── app.py
├── docker-compose.yml
├── ecs-params.yml
└── requirements.txt

Let's start by creating an Amazon ECS cluster with the ecs-cli up command.

Unlike the ECS EC2 launch type, where we're responsible for managing the underlying EC2 instances that host our containers, with Fargate AWS does that for us. It's a serverless container platform, ready to serve whatever we want as an endpoint. The containers are hosted somewhere, but we do not have access to those machines, and therefore we cannot ssh into them.

We specified Fargate as our default launch type in the cluster configuration, so this command creates an empty cluster and a VPC configured with two public subnets:

$ ecs-cli up --cluster my-flask-cluster
INFO[0001] Created cluster                               cluster=my-flask-cluster region=us-east-1
INFO[0002] Waiting for your cluster resources to be created...
INFO[0002] Cloudformation stack status                   stackStatus=CREATE_IN_PROGRESS
INFO[0063] Cloudformation stack status                   stackStatus=CREATE_IN_PROGRESS
VPC created: vpc-0d3c0d5b3d8753c0c
Subnet created: subnet-083f774bd55bc5d5a
Subnet created: subnet-056abc38570d37666
Cluster creation succeeded.

Though CloudFormation created a default security group for the VPC, we may want to create our own with an ingress rule attached:

$ aws ec2 create-security-group --group-name "my-fargate-service-discovery-sg" \
    --description "My Fargate Service Discovery security group" \
    --vpc-id "vpc-0d3c0d5b3d8753c0c"
{
    "GroupId": "sg-0e3a05aa1381ead6b"
}

$ aws ec2 authorize-security-group-ingress \
    --group-id "sg-0e3a05aa1381ead6b" \
    --protocol tcp --port 5000 --cidr 0.0.0.0/0

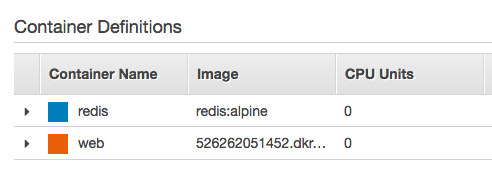

We need to modify the docker-compose.yml:

version: '3'
services:
  web:
    image: "526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-flask-app"
    ports:
      - "5000:5000"
    volumes:
      - /code  # host path for volumes not supported for Fargate
  redis:
    image: "redis:alpine"

This is important! On ECS both containers run in the same task, so we need to change the hostname from "redis" to "localhost" in app.py. Otherwise we get a name-resolution error such as "connection error : redis:6379". So, our app.py should look like this:

import time

import redis
from flask import Flask

app = Flask(__name__)
#cache = redis.Redis(host='redis', port=6379)
cache = redis.Redis(host='localhost', port=6379)


def get_hit_count():
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)


@app.route('/')
def hello():
    count = get_hit_count()
    return 'Hello Docker on ECS! I have been seen {} times.\n'.format(count)


if __name__ == "__main__":
    app.run(host="0.0.0.0", debug=True)
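Editing app.py for each environment is easy to forget. One alternative, which the original post does not use, is to read the Redis host from an environment variable so that the same image works under Compose (host redis) and in a single Fargate task (host localhost). REDIS_HOST and REDIS_PORT are hypothetical variable names chosen for this sketch.

```python
import os


def redis_settings(env=None):
    """Return (host, port) for Redis, defaulting to the Compose service name."""
    env = os.environ if env is None else env
    host = env.get("REDIS_HOST", "redis")
    port = int(env.get("REDIS_PORT", "6379"))
    return host, port
```

In app.py this would become host, port = redis_settings() followed by cache = redis.Redis(host=host, port=port), with REDIS_HOST=localhost supplied to the web container in the ECS deployment.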

Two things:

- Instead of using "build: ." for the "web" service, we had to specify the "image". So, we need to push the image to ECR (or maybe to Docker Hub):

$ docker build -t flask-app .
...
Successfully tagged flask-app:latest

$ aws ecr create-repository --repository-name ecs-flask-app
{
    "repository": {
        "repositoryArn": "arn:aws:ecr:us-east-1:526262051452:repository/ecs-flask-app",
        "registryId": "526262051452",
        "repositoryName": "ecs-flask-app",
        "repositoryUri": "526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-flask-app",
        "createdAt": 1554157559.0
    }
}

$ docker tag flask-app 526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-flask-app

$ $(aws ecr get-login --no-include-email --region us-east-1)
WARNING! Using --password via the CLI is insecure. Use --password-stdin.
Login Succeeded

$ docker push 526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-flask-app
The push refers to repository [526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-flask-app]
1fe47956ce43: Pushed
3723bea9cc87: Pushed
d69483a6face: Pushed
latest: digest: sha256:f550ca489dd97d892481b19cf064fb447c25c0114c89ecdc1a7720d3bfdfe3ad size: 949

- For the volume mount, a host path is not supported. So, we just specify the volume path inside the container:

volumes:
  - /code

Also, we need some preparation for using ecs-cli:

$ ecs-cli configure profile --profile-name ecs-fargate-svs-discovery-profile --access-key $AWS_ACCESS_KEY_ID --secret-key $AWS_SECRET_ACCESS_KEY

$ ecs-cli configure --cluster my-flask-cluster --region us-east-1 --default-launch-type FARGATE --config-name ecs-fargate-svs-discovery-config

The resulting configuration is stored under ~/.ecs.

ecs-params.yml:

version: 1
task_definition:
  task_execution_role: ecsTaskExecutionRole
  ecs_network_mode: awsvpc
  task_size:
    mem_limit: 0.5GB
    cpu_limit: 256
run_params:
  network_configuration:
    awsvpc_configuration:
      subnets:
        - "subnet-083f774bd55bc5d5a"
        - "subnet-056abc38570d37666"
      security_groups:
        - "sg-0e3a05aa1381ead6b"
      assign_public_ip: ENABLED
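Fargate only accepts certain CPU/memory pairs at the task level, so a typo in task_size is one way for a task definition to be rejected. The sketch below checks a pair against a small, deliberately incomplete table covering the three smallest CPU sizes. The table and the helper names are this example's own, and the ECS documentation remains the authority on valid sizes.

```python
# Memory values (in MiB) that Fargate accepts for the smallest CPU sizes.
# Deliberately incomplete: larger CPU sizes are left out of this sketch.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: [1024, 2048, 3072, 4096],
    1024: [2048, 3072, 4096, 5120, 6144, 7168, 8192],
}


def parse_mem_limit(value):
    """Convert strings like '0.5GB' or '512' (MiB) to an int number of MiB."""
    text = str(value).strip().upper()
    if text.endswith("GB"):
        return int(float(text[:-2]) * 1024)
    return int(text)


def is_valid_task_size(cpu_limit, mem_limit):
    """True if the pair appears in the (partial) table above."""
    return parse_mem_limit(mem_limit) in FARGATE_SIZES.get(int(cpu_limit), [])
```

The values used in this post, cpu_limit 256 with mem_limit 0.5GB, pass this check.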

The Dockerfile remains the same:

FROM python:3.7-alpine
ADD . /code
WORKDIR /code
RUN pip install -r requirements.txt
CMD ["python", "app.py"]

Now that we're ready, let's run ecs-cli compose:

$ ecs-cli compose --project-name ecs-fargate-svs-discovery \
    service up --cluster-config ecs-fargate-svs-discovery-config \
    --cluster my-flask-cluster
INFO[0001] Using ECS task definition                     TaskDefinition="ecs-fargate-svs-discovery:6"
INFO[0001] Created an ECS service                        service=ecs-fargate-svs-discovery taskDefinition="ecs-fargate-svs-discovery:6"
INFO[0002] Updated ECS service successfully              desiredCount=1 force-deployment=false service=ecs-fargate-svs-discovery
INFO[0017] (service ecs-fargate-svs-discovery) has started 1 tasks: (task 756cda6c-4397-4d8f-a9a2-5eb56047e7d4).  timestamp="2019-04-01 22:41:51 +0000 UTC"
INFO[0048] Service status                                desiredCount=1 runningCount=1 serviceName=ecs-fargate-svs-discovery
INFO[0048] ECS Service has reached a stable state        desiredCount=1 runningCount=1 serviceName=ecs-fargate-svs-discovery

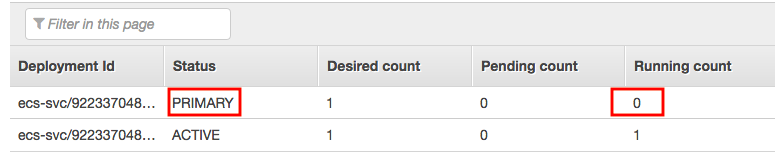

Note that if we have just pushed a new image to the repository, we can simply check "Force new deployment" when updating the service:

Then, wait for the PRIMARY deployment to become active:

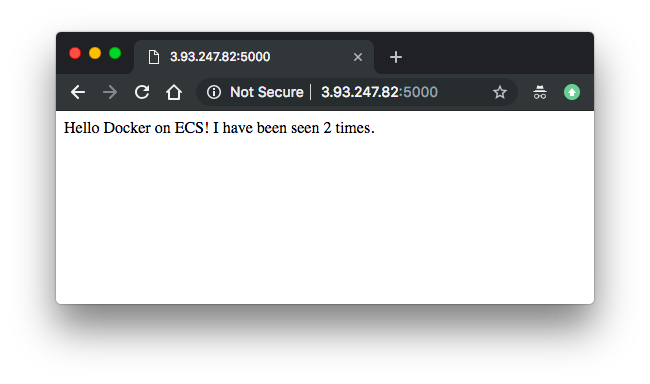

After we deploy the compose file, we can view the containers that are running on our cluster with the ecs-cli ps command:

$ ecs-cli ps --cluster my-flask-cluster
Name                                        State    Ports                        TaskDefinition               Health
f8886ffb-dfc8-4634-acab-1bf9883ddab6/web    RUNNING  3.93.247.82:5000->5000/tcp   ecs-fargate-svs-discovery:7  UNKNOWN
f8886ffb-dfc8-4634-acab-1bf9883ddab6/redis  RUNNING                               ecs-fargate-svs-discovery:7  UNKNOWN

We can see the "ecs-fargate-svs-discovery" containers from our compose file, and also the IP address and port of the web server.
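The Ports column has the form ip:hostPort->containerPort/protocol. If the address is needed in a script (for example to request the page after a deploy), it can be picked apart as below. This is a sketch that assumes exactly this format, with a made-up helper name.

```python
import re

PORT_PATTERN = re.compile(
    r"^(?P<ip>[\d.]+):(?P<host_port>\d+)->(?P<container_port>\d+)/(?P<proto>\w+)$"
)


def parse_port_binding(text):
    """Split '3.93.247.82:5000->5000/tcp' into its parts, or return None."""
    match = PORT_PATTERN.match(text.strip())
    if match is None:
        return None
    return {
        "ip": match.group("ip"),
        "host_port": int(match.group("host_port")),
        "container_port": int(match.group("container_port")),
        "protocol": match.group("proto"),
    }


# Build the public URL of the web container from the value shown by ecs-cli ps.
binding = parse_port_binding("3.93.247.82:5000->5000/tcp")
url = "http://{ip}:{host_port}/".format(**binding)
```

The redis row has an empty Ports column, for which parse_port_binding returns None.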

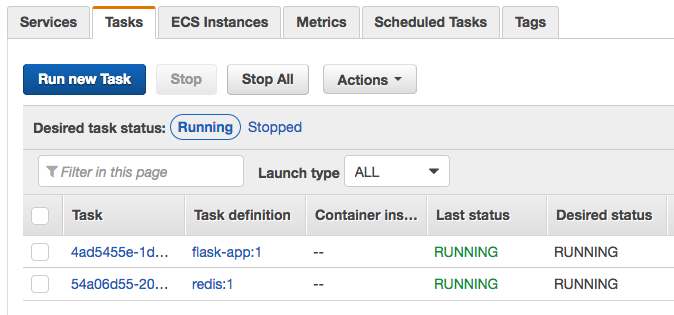

It appears everything works. However, so far we've been dealing with communication between containers within the same task in awsvpc network mode (one ENI per task), which is why we're able to use 'localhost'.

But that's not what we want. We should be able to use the Service Discovery feature provided by AWS ECS, for example via private DNS namespaces.

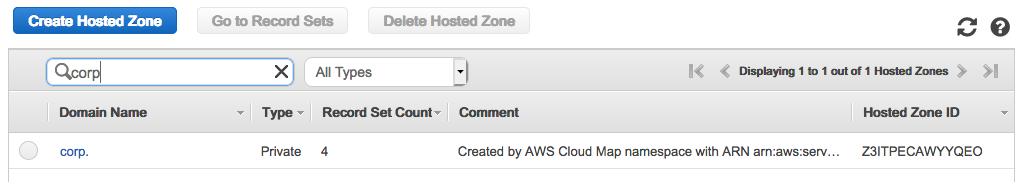

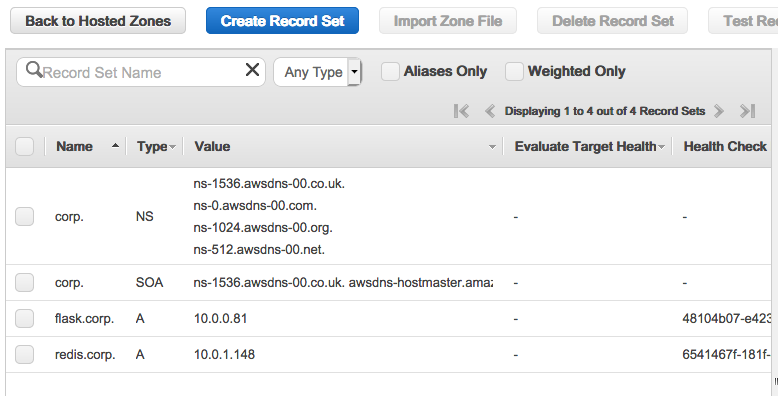

We can use the new service discovery feature of ECS to run the redis container as a service that has a linked DNS record in Route 53. Wherever the redis container gets placed the DNS record will be updated to point at the IP address of the task. Then in our Flask app code we connect to that DNS record.

We got stuck at the connection to Redis, and may need to come back to this later.

After setting up two services (redis/flask) with corresponding tasks (via the Console), the app failed to connect to Redis even after setting up service discovery:

$ aws route53 list-resource-record-sets --hosted-zone-id Z3ITPECAWYYQEO --region us-east-1
{
    ...
        {
            "Name": "redis.corp.",
            "Type": "A",
            "SetIdentifier": "54a06d55-20e5-4a26-bb41-d9ff8f2190d9",
            "MultiValueAnswer": true,
            "TTL": 60,
            "ResourceRecords": [
                {
                    "Value": "10.0.1.148"
                }
            ],
            "HealthCheckId": "6541467f-181f-4189-b5dd-03b2c02ecc37"
        }
    ]
}

$ aws servicediscovery list-instances --service-id srv-wjhwfjh4dqwhvkjh --region us-east-1
{
    "Instances": [
        {
            "Id": "54a06d55-20e5-4a26-bb41-d9ff8f2190d9",
            "Attributes": {
                "AVAILABILITY_ZONE": "us-east-1b",
                "AWS_INIT_HEALTH_STATUS": "HEALTHY",
                "AWS_INSTANCE_IPV4": "10.0.1.148",
                "ECS_CLUSTER_NAME": "my-flask-cluster",
                "ECS_SERVICE_NAME": "redis",
                "ECS_TASK_DEFINITION_FAMILY": "redis",
                "REGION": "us-east-1"
            }
        }
    ]
}
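Both outputs report the same address, 10.0.1.148, so the DNS record and the registered instance agree. To make that comparison in a script, the list-instances output can be reduced to its IP addresses. This is a sketch with a made-up helper name, and the sample string is a trimmed copy of the output shown in this post.

```python
import json


def instance_ips(list_instances_json):
    """Return the AWS_INSTANCE_IPV4 attribute of every registered instance."""
    data = json.loads(list_instances_json)
    return [
        inst["Attributes"]["AWS_INSTANCE_IPV4"]
        for inst in data.get("Instances", [])
        if "AWS_INSTANCE_IPV4" in inst.get("Attributes", {})
    ]


# Trimmed copy of the `aws servicediscovery list-instances` output.
sample = '''
{"Instances": [{"Id": "54a06d55-20e5-4a26-bb41-d9ff8f2190d9",
                "Attributes": {"AWS_INSTANCE_IPV4": "10.0.1.148",
                               "ECS_SERVICE_NAME": "redis"}}]}
'''
ips = instance_ips(sample)
```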

Neither of these works:

cache = redis.Redis(host='10.0.1.148', port=6379)
cache = redis.Redis(host='redis.corp', port=6379)

Let's clean up. First, delete the service so that it stops the existing containers and does not try to run any more tasks:
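When neither the IP nor the DNS name works, it helps to separate name resolution from TCP reachability. The post does not establish what went wrong here. One thing worth checking (an assumption, not a confirmed diagnosis) is that the security group created earlier only opens port 5000, so traffic to Redis on port 6379 between tasks may be blocked. The helper below is a standard-library sketch meant to be run from the Flask task's environment; the function name is made up.

```python
import socket


def diagnose(host, port, timeout=3.0):
    """Return 'dns-failure', 'tcp-failure' or 'ok' for host:port."""
    try:
        address = socket.gethostbyname(host)
    except socket.gaierror:
        return "dns-failure"
    try:
        # A successful connect means the port is reachable from here.
        with socket.create_connection((address, port), timeout=timeout):
            return "ok"
    except OSError:
        return "tcp-failure"
```

A result of 'tcp-failure' from diagnose('redis.corp', 6379) would point at networking (security groups, subnets) rather than at service discovery itself, while 'dns-failure' would point at the private DNS namespace.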

$ ecs-cli compose --project-name ecs-fargate-svs-discovery \
    service down --cluster-config ecs-fargate-svs-discovery-config \
    --cluster my-flask-cluster
INFO[0001] Updated ECS service successfully              desiredCount=0 force-deployment=false service=ecs-fargate-svs-discovery
INFO[0001] Service status                                desiredCount=0 runningCount=1 serviceName=ecs-fargate-svs-discovery
INFO[0016] Service status                                desiredCount=0 runningCount=0 serviceName=ecs-fargate-svs-discovery
INFO[0016] (service ecs-fargate-svs-discovery) has stopped 1 running tasks: (task 4798a1fb-ec59-4079-aac6-f9e008eec47b).  timestamp="2019-04-01 01:36:04 +0000 UTC"
INFO[0016] ECS Service has reached a stable state        desiredCount=0 runningCount=0 serviceName=ecs-fargate-svs-discovery
INFO[0017] Deleted ECS service                           service=ecs-fargate-svs-discovery
INFO[0017] ECS Service has reached a stable state        desiredCount=0 runningCount=0 serviceName=ecs-fargate-svs-discovery

Take down our cluster, which cleans up the resources that we created earlier with "ecs-cli up":

$ ecs-cli down --force --cluster-config ecs-fargate-svs-discovery-config \
    --cluster my-flask-cluster
INFO[0001] Waiting for your cluster resources to be deleted...
INFO[0001] Cloudformation stack status                   stackStatus=DELETE_IN_PROGRESS
INFO[0063] Deleted cluster                               cluster=my-flask-cluster

To delete the Route 53 zone created by ECS service discovery, first delete the services and then the namespace:

$ aws servicediscovery list-services
{
    "Services": [
        {
            "Id": "srv-wjhwfjh4dqwhvkjh",
            "Arn": "arn:aws:servicediscovery:us-east-1:526262051452:service/srv-wjhwfjh4dqwhvkjh",
            "Name": "redis"
        },
        {
            "Id": "srv-xnvcxkx6r4akgaf6",
            "Arn": "arn:aws:servicediscovery:us-east-1:526262051452:service/srv-xnvcxkx6r4akgaf6",
            "Name": "flask"
        }
    ]
}

# aws servicediscovery delete-service --id=<id-from-list-output>
$ aws servicediscovery delete-service --id=srv-wjhwfjh4dqwhvkjh
$ aws servicediscovery delete-service --id=srv-xnvcxkx6r4akgaf6

$ aws servicediscovery list-services
{
    "Services": []
}

$ aws servicediscovery list-namespaces
{
    "Namespaces": [
        {
            "Id": "ns-2kr26yqpu7aw7jux",
            "Arn": "arn:aws:servicediscovery:us-east-1:526262051452:namespace/ns-2kr26yqpu7aw7jux",
            "Name": "corp",
            "Type": "DNS_PRIVATE"
        }
    ]
}

# aws servicediscovery delete-namespace --id=<ns-id>
$ aws servicediscovery delete-namespace --id=ns-2kr26yqpu7aw7jux

$ aws servicediscovery list-namespaces
{
    "Namespaces": []
}

