ECS Fargate

The basics of ECS Fargate are available from Deploy Docker Containers and Getting Started with Amazon Elastic Container Service (Amazon ECS) using Fargate.

In this post, we will see how to run a Docker-enabled sample application on an Amazon ECS cluster behind a load balancer, test the sample application, and delete the resources.

AWS Fargate allows us to run containers without having to manage clusters of servers. With AWS Fargate, we no longer have to provision, configure, and scale clusters to run containers, so we can focus on designing and building our applications instead of managing the infrastructure that runs them. In effect, it is a serverless compute engine for containers, with ECS acting as the orchestrator.

We can think of AWS Fargate as Container-as-a-Service.

By default, ECS uses the bridge network mode. Fargate requires the awsvpc network mode. The implication of using the awsvpc network mode is that Fargate tasks get, at the task level, the same VPC networking and security controls that in bridge mode were only available at the EC2 instance level.

With Fargate, each task gets its own elastic network interface (ENI) and IP address, so multiple applications or copies of a single application can listen on the same port number without any conflicts.

When we use Docker locally, we use a command like this to run our app container:

docker run --rm -p 8080:80 myapp

where -p instructs Docker to make port 80 on the container available as port 8080 on the host.

When using Fargate, however, we don't have any control over the host, so we only specify the ports that we want to make available in the portMappings section of our container definition. With Fargate, we set the containerPort to the port that the container listens on (e.g., 80 for our app). Fargate then ensures that we can access the container on the same port on the ENI.
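To make the difference from "docker run -p" concrete, here is a minimal sketch in Python that turns the local publish value into the kind of entry that goes into portMappings. The helper name fargate_port_mapping is hypothetical; containerPort and protocol are the field names used in ECS port mappings.

```python
def fargate_port_mapping(docker_publish):
    """Translate a `docker run -p HOST:CONTAINER` value into a port mapping.

    Hypothetical helper for illustration: the host port is discarded
    because with Fargate there is no host for us to configure.
    """
    _host_port, container_port = docker_publish.split(":")
    return {"containerPort": int(container_port), "protocol": "tcp"}


# The value from the local example: host port 8080, container port 80.
mapping = fargate_port_mapping("8080:80")
print(mapping)
```

Only the container side of the mapping survives, which is why the app is reached on port 80 of the task's ENI rather than on 8080.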

Note that we can run multiple containers listening on the same port as long as each one runs in its own task. However, we cannot run multiple containers that listen on the same port within a single task!
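The rule can be sketched as a small check over a task's container definitions. This is an illustration only: the function name and the sample container names are made up, and the check is simplified to ignore the protocol.

```python
from collections import Counter


def duplicate_container_ports(container_definitions):
    """Return the container ports claimed more than once within one task."""
    ports = Counter(
        mapping["containerPort"]
        for container in container_definitions
        for mapping in container.get("portMappings", [])
    )
    return sorted(port for port, count in ports.items() if count > 1)


# Two containers in ONE task both listening on port 80: a conflict.
same_task = [
    {"name": "web", "portMappings": [{"containerPort": 80}]},
    {"name": "sidecar", "portMappings": [{"containerPort": 80}]},
]
# One container per task: each task has its own ENI, so there is no conflict.
task_a = [{"name": "web", "portMappings": [{"containerPort": 80}]}]
task_b = [{"name": "web", "portMappings": [{"containerPort": 80}]}]

print(duplicate_container_ports(same_task))
print(duplicate_container_ports(task_a), duplicate_container_ports(task_b))
```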

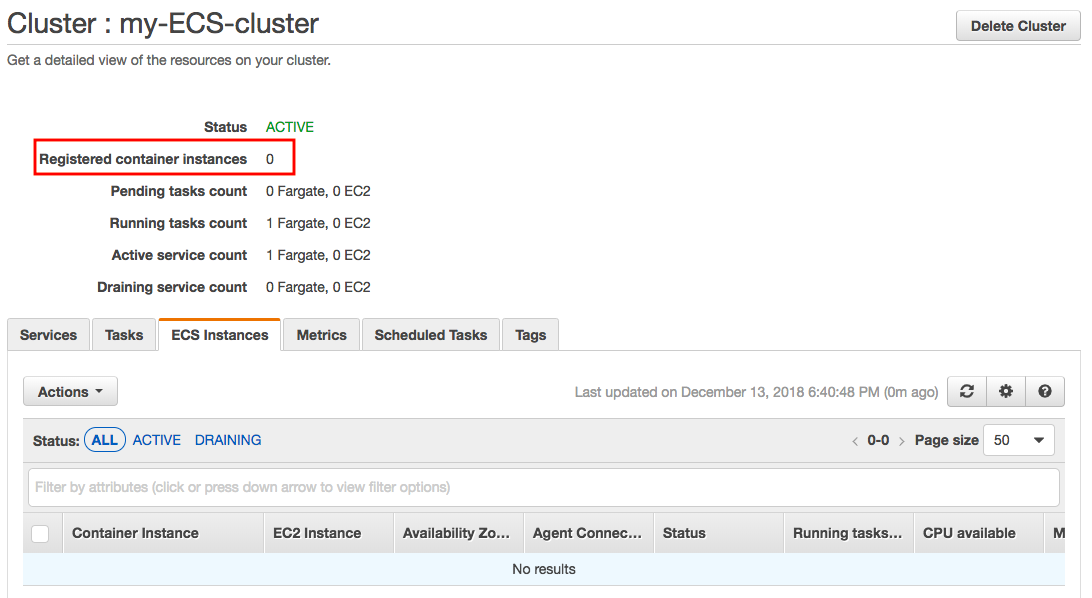

As we will see later, the ECS dashboard for Fargate shows us the running ECS services and tasks without any EC2 instances:

Compared with the EC2 launch type, the Fargate launch type asks much less of us: all we have to do is package our application in containers, specify the CPU and memory requirements, define networking and IAM policies, and launch the application. The EC2 launch type, on the other hand, gives us server-level, more granular control over the infrastructure that runs our container applications, and therefore a broader range of customization options than the Fargate launch type.

ECS allows us to scale at the task level: we can configure rules to scale the number of running tasks. With the EC2 launch type, however, those tasks run on EC2 instances, which may not have enough spare CPU or RAM. So in practice, we may have to define a second auto-scaling rule at the ECS cluster level that scales the instances out or in based on the CPU or RAM reservation of the cluster.

With Fargate, auto-scaling is much easier: we simply need to define it at the task level, and we are good to go.
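As a rough sketch of what "defining it at the task level" can look like, the Python below builds the parameters of a target tracking policy for Application Auto Scaling. It only constructs the request; nothing is sent to AWS. The policy name and the 50% CPU target are assumptions for illustration, and the cluster and service names are the ones used in the cleanup commands later in this post.

```python
def target_tracking_policy(cluster, service, target_cpu_percent):
    """Build keyword arguments for Application Auto Scaling's PutScalingPolicy."""
    return {
        "PolicyName": f"{service}-cpu-target-tracking",  # hypothetical name
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(target_cpu_percent),  # assumed target
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
        },
    }


policy = target_tracking_policy("my-ECS-cluster", "my-nginx-service", 50)
print(policy["ResourceId"])
```

With boto3, a dictionary like this could be passed as put_scaling_policy(**policy) on the "application-autoscaling" client, after the service has been registered as a scalable target.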

AWS Fargate pricing is calculated based on the vCPU and memory resources used from the time we start to download our container image (docker pull) until the Amazon ECS Task terminates.
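The calculation can be sketched as follows. The rates below are placeholders, not real AWS prices (actual rates depend on the Region and change over time), and the sketch assumes per-second billing with a one-minute minimum.

```python
def fargate_task_cost(vcpu, memory_gb, seconds, vcpu_hour_rate, gb_hour_rate):
    """Estimate the cost of one task from its vCPU and memory reservation.

    Assumes per-second billing with a one-minute minimum; the rates are
    supplied by the caller.
    """
    billed_seconds = max(seconds, 60)
    hours = billed_seconds / 3600
    return hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Placeholder rates for illustration only, NOT real AWS prices.
cost = fargate_task_cost(
    vcpu=0.25,
    memory_gb=0.5,
    seconds=2 * 3600,  # from the start of the image pull until the task stops
    vcpu_hour_rate=0.04,
    gb_hour_rate=0.004,
)
print(f"{cost:.4f}")
```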

Though the cost per container in Fargate will be higher, the maintenance costs will probably be lower than with the EC2 launch type, considering the hours spent troubleshooting and the effort needed to upgrade ECS instance agents and update EC2 instance packages.

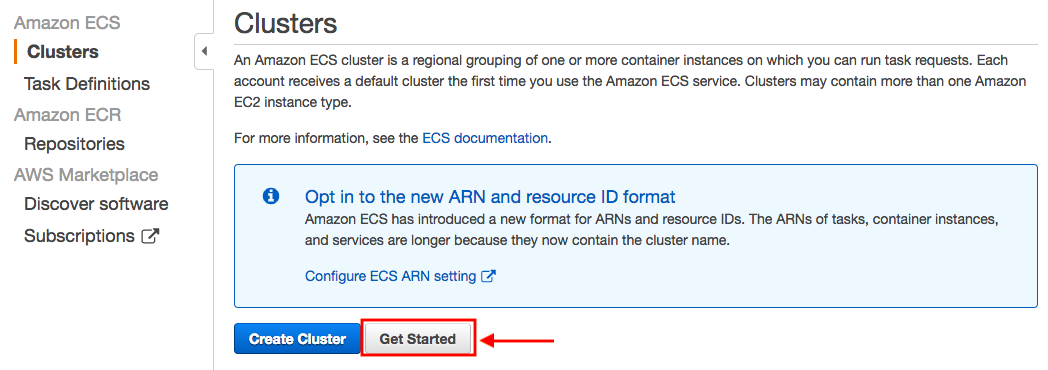

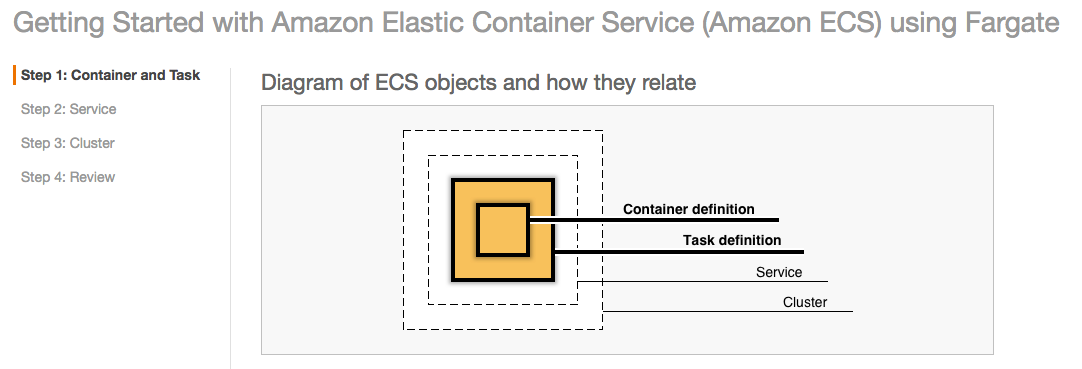

The Amazon ECS first run wizard will guide us through creating a cluster and launching a sample web application.

We can start it from the console:

We'll use Amazon Elastic Container Registry (Amazon ECR) to create an image repository and push an image to it as part of the first run wizard.

Let's create an Amazon ECR repository to store our nginx image. Note the repositoryUri in the output:

$ aws ecr create-repository --repository-name ecs-fargate-repo
{
    "repository": {
        "repositoryArn": "arn:aws:ecr:us-east-1:526262051452:repository/ecs-fargate-repo",
        "registryId": "526262051452",
        "repositoryName": "ecs-fargate-repo",
        "repositoryUri": "526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-fargate-repo",
        "createdAt": 1544741719.0
    }
}

We want to test Fargate with "nginx". So, let's pull it from DockerHub and store it locally:

$ docker pull nginx
Using default tag: latest
latest: Pulling from library/nginx
a5a6f2f73cd8: Already exists
1ba02017c4b2: Pull complete
33b176c904de: Pull complete
Digest: sha256:5d32f60db294b5deb55d078cd4feb410ad88e6fe77500c87d3970eca97f54dba
Status: Downloaded newer image for nginx:latest

Then, tag the "nginx" image with the repositoryUri value from the previous step:

$ docker tag nginx 526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-fargate-repo
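The repositoryUri follows a fixed pattern, so it can also be composed from its parts. A minimal sketch (the helper name ecr_image_uri is made up; the account ID, Region, and repository name are the ones from the output above):

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Compose the registry/repository:tag reference used by docker tag and push."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


uri = ecr_image_uri("526262051452", "us-east-1", "ecs-fargate-repo")
print(uri)
```

Because the docker tag command above omits a tag, Docker applies the default tag "latest", which matches the default used in this sketch.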

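Run the docker login command string that the "aws ecr get-login --no-include-email" command prints. It provides an authorization token that is valid for 12 hours. (The get-login subcommand exists only in AWS CLI version 1; in version 2 it was replaced by "aws ecr get-login-password", whose output is piped to "docker login --username AWS --password-stdin".)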

$ $(aws ecr get-login --no-include-email --region us-east-1)
WARNING! Using --password via the CLI is insecure. Use --password-stdin.
Login Succeeded
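For the curious, the authorization token that ECR hands out (for example through the GetAuthorizationToken API) is a base64-encoded "username:password" pair in which the username is AWS. The sketch below decodes such a token; the token here is fabricated for illustration, and the helper name is hypothetical.

```python
import base64


def decode_ecr_token(authorization_token):
    """Split a base64-encoded ECR authorization token into (username, password)."""
    decoded = base64.b64decode(authorization_token).decode("utf-8")
    username, password = decoded.split(":", 1)
    return username, password


# Fabricated token for illustration only; a real one comes from ECR.
fake_token = base64.b64encode(b"AWS:example-password").decode("ascii")
username, password = decode_ecr_token(fake_token)
print(username)
```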

Now, we want to push the local "nginx" image to Amazon ECR with the repositoryUri value from the earlier step.

$ docker push 526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-fargate-repo
The push refers to repository [526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-fargate-repo]
ece4f9fdef59: Pushed
ad5345cbb119: Pushed
ef68f6734aa4: Pushed
latest: digest: sha256:87e9b6904b4286b8d41bba4461c0b736835fcc218f7ecbe5544b53fdd467189f size: 948

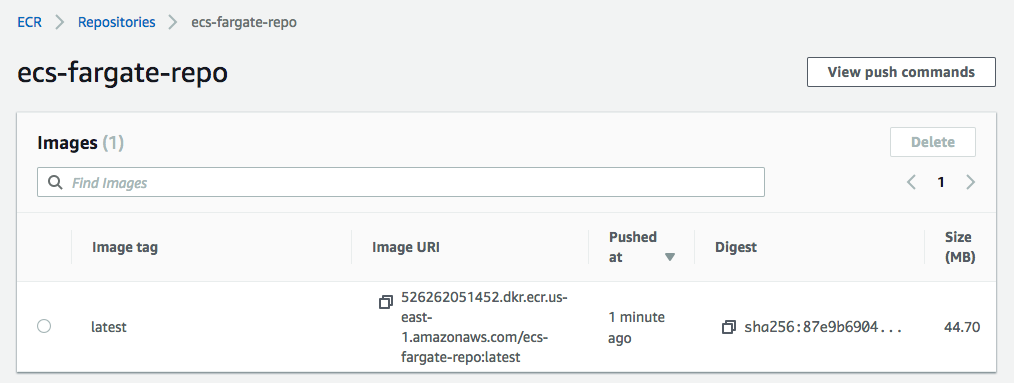

Check that the image is in the ECR repository:

$ aws ecr describe-images --repository-name ecs-fargate-repo
{
    "imageDetails": [
        {
            "registryId": "526262051452",
            "repositoryName": "ecs-fargate-repo",
            "imageDigest": "sha256:87e9b6904b4286b8d41bba4461c0b736835fcc218f7ecbe5544b53fdd467189f",
            "imageTags": [
                "latest"
            ],
            "imageSizeInBytes": 44697677,
            "imagePushedAt": 1544741846.0
        }
    ]
}
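The raw output is easier to read once the epoch timestamp and byte count are converted. A small sketch that parses the describe-images output shown above (the function name summarize_images is made up for illustration):

```python
import json
from datetime import datetime, timezone

# Output copied from the describe-images call in this post.
raw = """
{
    "imageDetails": [
        {
            "registryId": "526262051452",
            "repositoryName": "ecs-fargate-repo",
            "imageDigest": "sha256:87e9b6904b4286b8d41bba4461c0b736835fcc218f7ecbe5544b53fdd467189f",
            "imageTags": ["latest"],
            "imageSizeInBytes": 44697677,
            "imagePushedAt": 1544741846.0
        }
    ]
}
"""


def summarize_images(describe_images_json):
    """Return (tags, push time in UTC, size in MiB) for each image."""
    summary = []
    for image in json.loads(describe_images_json)["imageDetails"]:
        pushed_at = datetime.fromtimestamp(image["imagePushedAt"], tz=timezone.utc)
        size_mib = image["imageSizeInBytes"] / (1024 * 1024)
        summary.append((image["imageTags"], pushed_at, round(size_mib, 1)))
    return summary


for tags, pushed_at, size_mib in summarize_images(raw):
    print(tags, pushed_at.isoformat(), f"{size_mib} MiB")
```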

Or we can check it from the console:

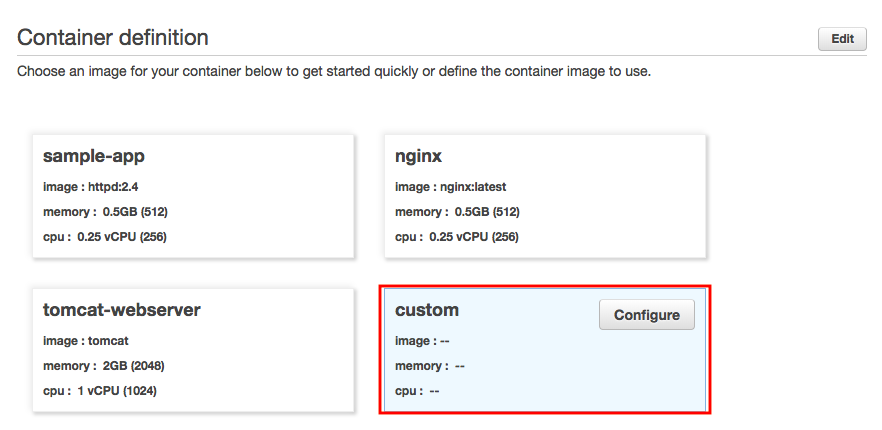

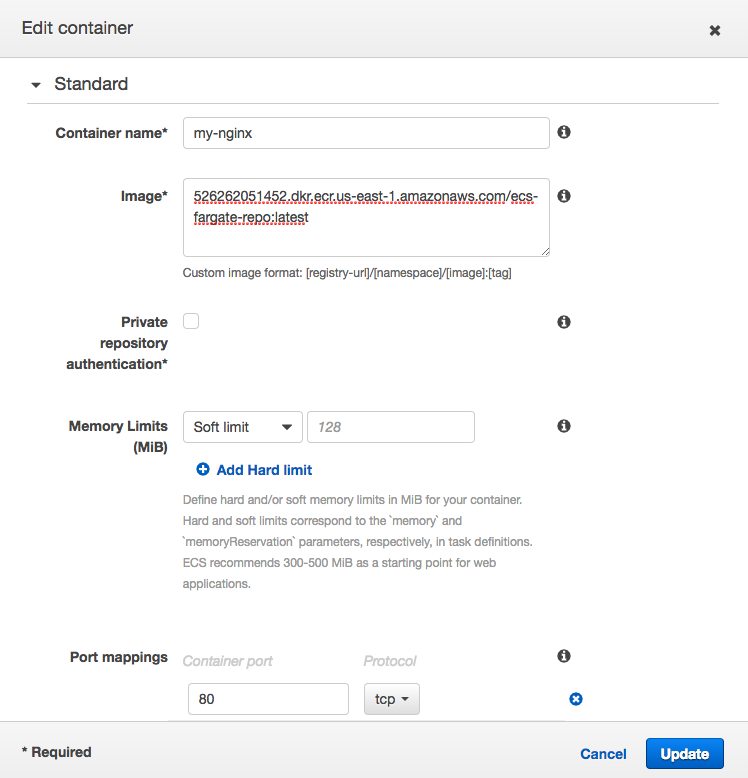

As mentioned earlier, we'll use Amazon Elastic Container Registry (Amazon ECR). So, we click "Configure" to use a custom container image from ECR:

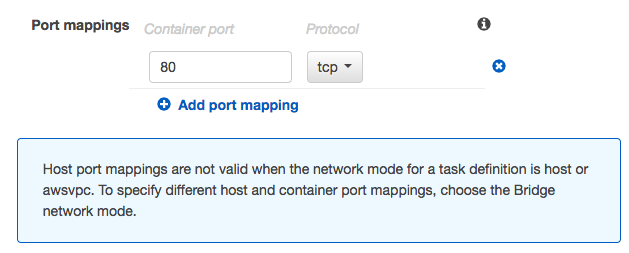

Regarding the host mapping, please see the next section ("Task definitions").

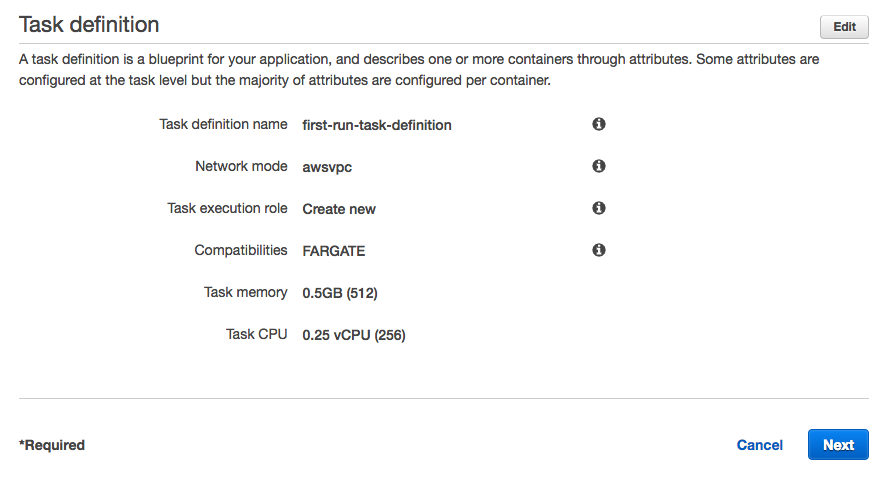

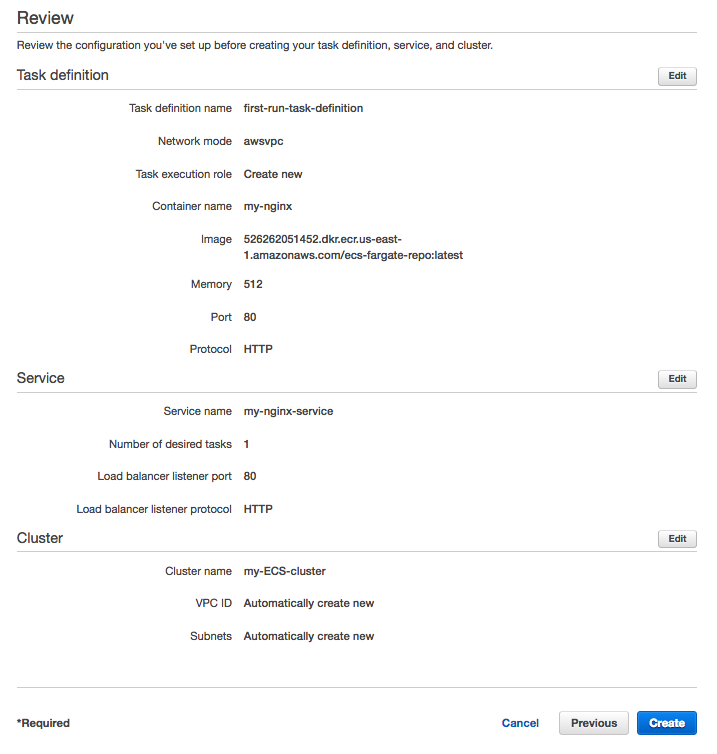

Here, we specify a task definition so Amazon ECS knows which Docker image to use for containers, how many containers to use in the task, and the resource allocation for each container.

Note that because our containers run in a task that uses the awsvpc network mode (the same holds for the host network mode), exposed ports are specified using containerPort. The hostPort is either left blank or set to the same value as the containerPort (AWS Documentation => Amazon Elastic Container Service => API Reference => Data Types => PortMapping).

This is inevitable because there is no host to manage. So, we only specify a containerPort value, not a hostPort value.
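Put together, a Fargate task definition for our nginx image might look roughly like the structure below. This is a sketch that only builds the request parameters for the RegisterTaskDefinition API. The family name and the CPU and memory sizes are assumptions, since the values chosen in the wizard are only visible in the screenshots.

```python
def fargate_task_definition(family, image, container_port, cpu="256", memory="512"):
    """Build keyword arguments for the ECS RegisterTaskDefinition API."""
    return {
        "family": family,
        "networkMode": "awsvpc",  # required for Fargate
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,  # task-level CPU units (assumed size)
        "memory": memory,  # task-level memory in MiB (assumed size)
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                # Only containerPort is given; there is no host to map to.
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


task_definition = fargate_task_definition(
    family="nginx-fargate-task",  # hypothetical family name
    image="526262051452.dkr.ecr.us-east-1.amazonaws.com/ecs-fargate-repo:latest",
    container_port=80,
)
print(task_definition["networkMode"])
```

A real registration would also need an executionRoleArn so that Fargate can pull the image from ECR; it is left out here because this post does not name one.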

Click "Next".

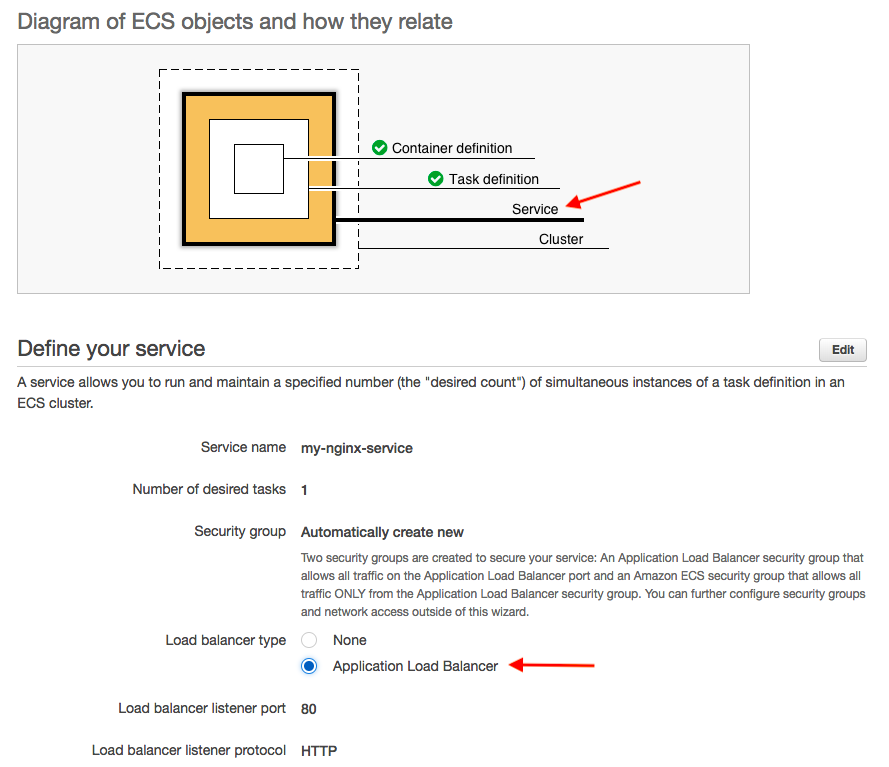

Now that we have created a task definition, we will configure the Amazon ECS service. By running an application as a service, Amazon ECS will auto-recover any stopped tasks and maintain the number of copies we specify: it is meant to run indefinitely, so by running it as a service, it will restart if the task becomes unhealthy or unexpectedly stops.
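The service settings can be sketched as the parameters of the CreateService API. Again, this only builds the request. The task definition name, desired count, subnet ID, and security group ID are placeholders, not values from this walkthrough; the cluster and service names are the ones used in the cleanup commands later in this post.

```python
def fargate_service(cluster, service_name, task_definition, desired_count,
                    subnets, security_groups):
    """Build keyword arguments for the ECS CreateService API."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "desiredCount": desired_count,  # number of task copies ECS maintains
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                "assignPublicIp": "ENABLED",
            }
        },
    }


service = fargate_service(
    cluster="my-ECS-cluster",
    service_name="my-nginx-service",
    task_definition="nginx-fargate-task",  # hypothetical family name
    desired_count=1,  # assumed count
    subnets=["subnet-EXAMPLE"],  # placeholder ID
    security_groups=["sg-EXAMPLE"],  # placeholder ID
)
print(service["launchType"], service["desiredCount"])
```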

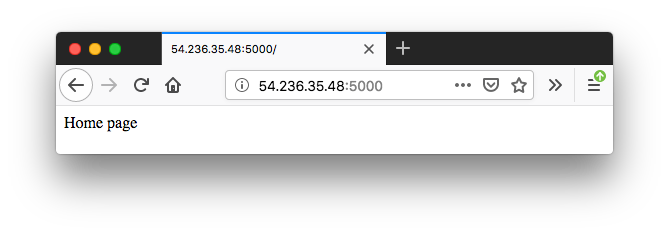

We can also reach our container app without a load balancer via the task's public IP, which is shown in the network section of the Task Details page. Our container listens on port 80, but if it were listening on port 5000, for example, we would open the app at http://<PUBLIC_IP>:5000/:

However, in this post, we're going to use a load balancer for our service.

Note that for awsvpc mode, and therefore for Fargate, we use the IP target type instead of the instance target type.
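In API terms, the target type is a property of the target group. The sketch below builds parameters for the Elastic Load Balancing CreateTargetGroup API, plus the matching loadBalancers entry of an ECS service. The target group name, VPC ID, ARN, and container name are placeholders for illustration.

```python
def ip_target_group(name, vpc_id, port=80):
    """Build keyword arguments for the ELBv2 CreateTargetGroup API."""
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": port,
        "VpcId": vpc_id,
        "TargetType": "ip",  # tasks register by ENI IP, not by instance ID
    }


def service_load_balancer(target_group_arn, container_name, container_port):
    """Build one entry for the loadBalancers list of an ECS service."""
    return {
        "targetGroupArn": target_group_arn,
        "containerName": container_name,
        "containerPort": container_port,
    }


target_group = ip_target_group("nginx-targets", "vpc-EXAMPLE")  # placeholders
attachment = service_load_balancer("arn:EXAMPLE", "nginx", 80)  # placeholders
print(target_group["TargetType"], attachment["containerPort"])
```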

Two security groups will be created to secure our service:

- An Application Load Balancer security group that allows all traffic on the Application Load Balancer port

- An ECS security group that allows all traffic ONLY from the Application Load Balancer security group.
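Expressed as EC2 ingress rules, the two groups might look like the sketch below. It builds IpPermissions structures of the kind accepted by the AuthorizeSecurityGroupIngress API. The open CIDR range for the load balancer and the security group ID are assumptions, since the wizard's exact values are only visible in the console.

```python
def alb_ingress(port):
    """Allow traffic from anywhere on the load balancer port (assumed CIDR)."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
    }]


def ecs_ingress(alb_security_group_id):
    """Allow all traffic, but only when it comes from the ALB security group."""
    return [{
        "IpProtocol": "-1",  # all protocols and ports
        "UserIdGroupPairs": [{"GroupId": alb_security_group_id}],
    }]


alb_rules = alb_ingress(80)
ecs_rules = ecs_ingress("sg-ALB-EXAMPLE")  # placeholder group ID
print(alb_rules[0]["IpRanges"], ecs_rules[0]["UserIdGroupPairs"])
```

The ECS rule references a security group instead of an IP range, which is what restricts the tasks to traffic that has passed through the load balancer.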

Note also that ECS will create a Service IAM Role named ecsServiceRole if it has not been created.

Review the settings and select "Next"

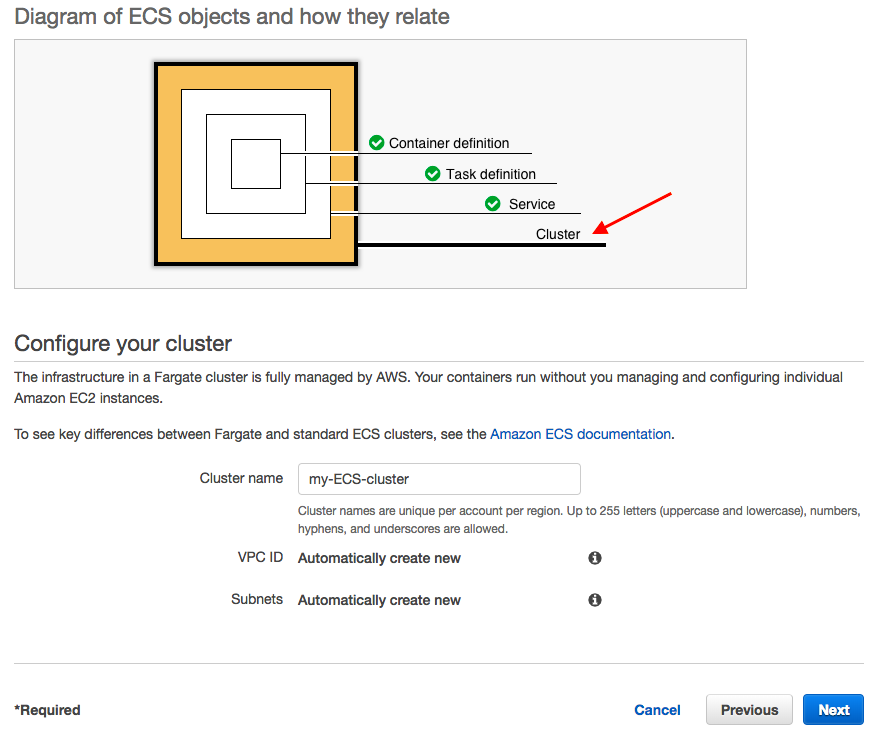

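Our Amazon ECS tasks run on a cluster, which is a logical grouping of tasks and services. With the EC2 launch type, a cluster is also the set of container instances running the Amazon ECS container agent; with Fargate, there are no container instances to manage.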

We have a final chance to review our task definition, task configuration, and cluster configurations before launching.

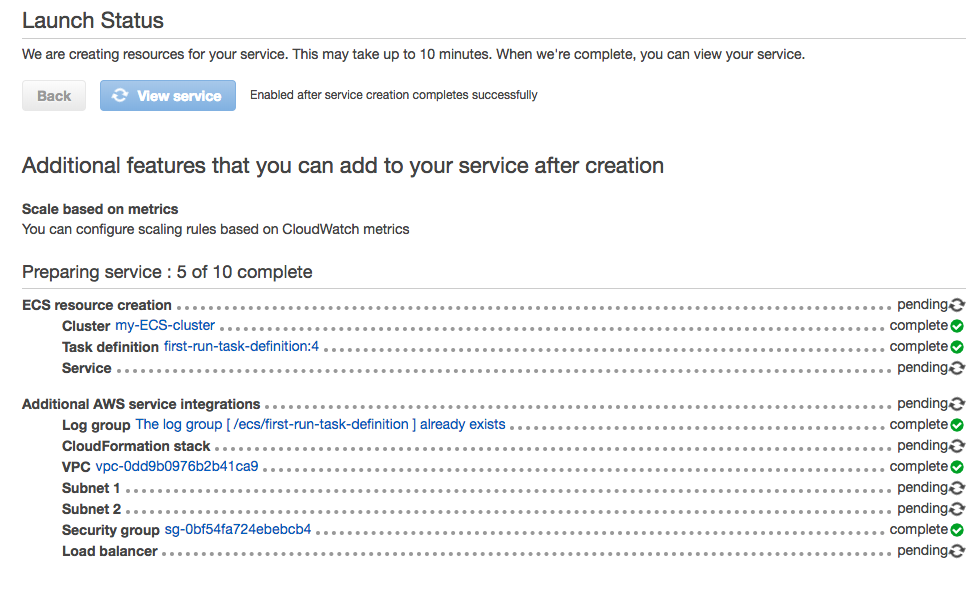

Select "Create", then we can see the launch status:

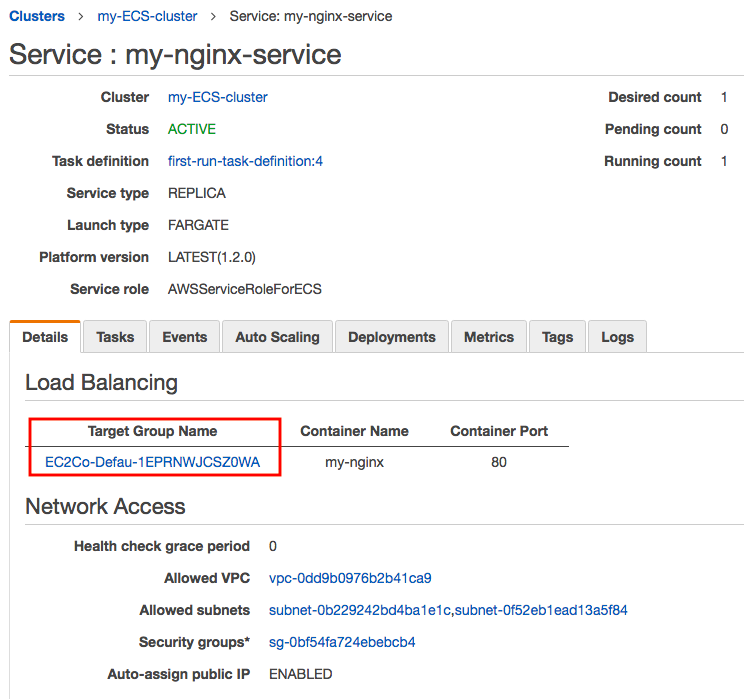

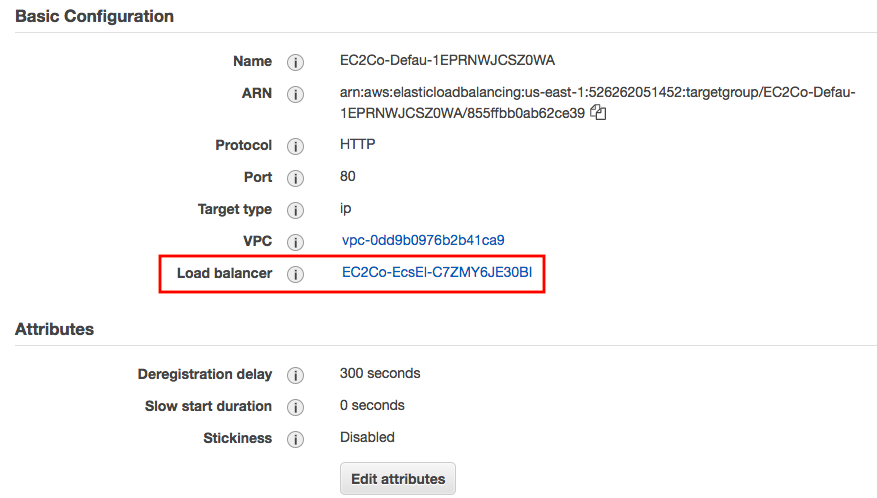

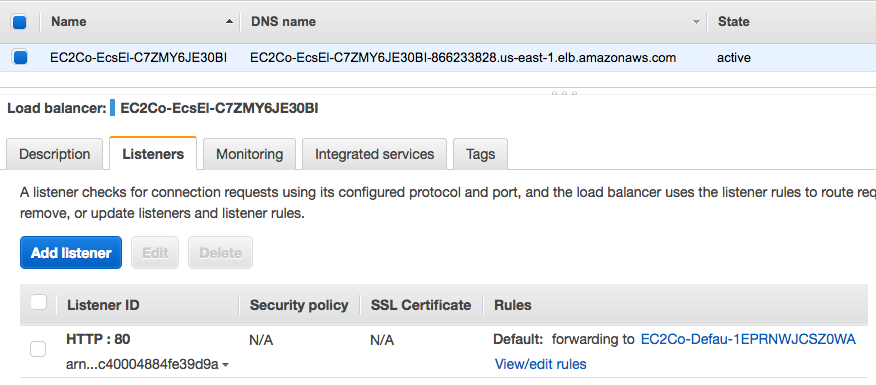

Click on Target Group Name:

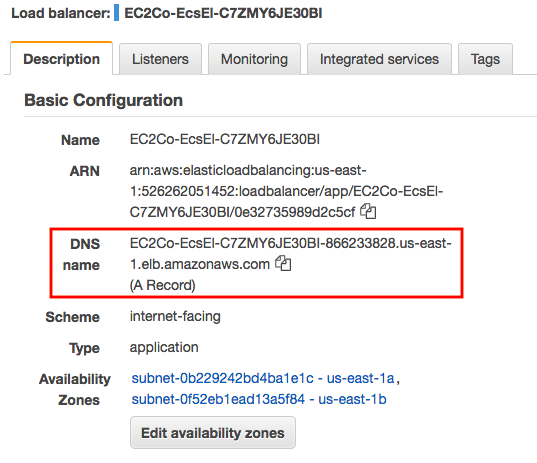

It leads us to the Load Balancer and DNS:

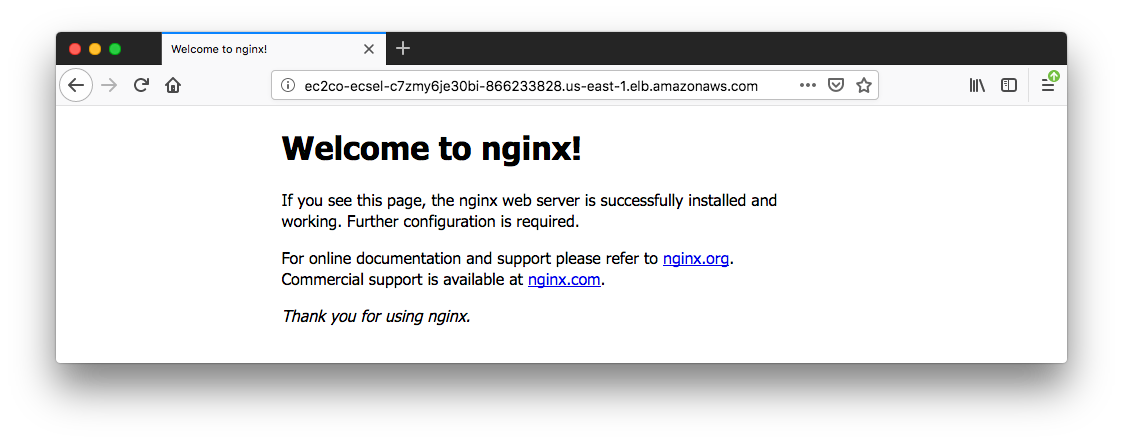

Copy the ELB DNS name and paste it into a browser:

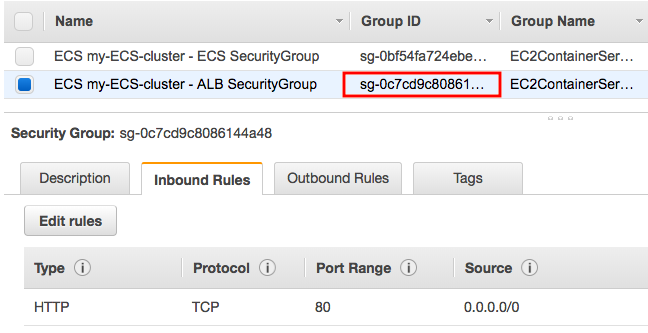

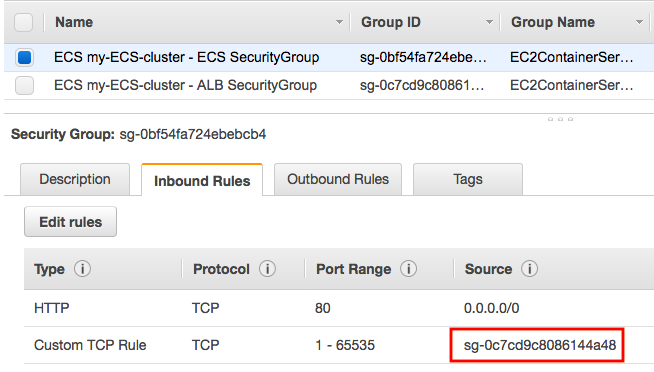

The wizard set up the security groups for us.

Load balancer security group:

ECS security group:

The two security groups were set up as follows:

- An Application Load Balancer security group that allows all traffic on the Application Load Balancer port

- An ECS security group that allows all traffic ONLY from the Application Load Balancer security group.

The ALB is set up to forward requests to the target group:

When we are finished using a particular Amazon ECS cluster, we should clean up the resources associated with it to avoid incurring charges for resources that we are not using.

If our cluster contains any services, we should first scale down the desired count of tasks in these services to 0 so that Amazon ECS does not try to start new tasks on our container instances while we are cleaning up:

$ aws ecs update-service --cluster my-ECS-cluster --service my-nginx-service --desired-count 0 --region us-east-1

Before we can delete a cluster, we must delete the services inside that cluster. After our service has scaled down to 0 tasks, we can delete it:

$ aws ecs delete-service --cluster my-ECS-cluster --service my-nginx-service --region us-east-1

Before we can delete a cluster, we must also deregister the container instances inside that cluster. With ECS on Fargate, however, we do not have any instances.

After we have removed the active resources from our Amazon ECS cluster, we can delete it:

$ aws ecs delete-cluster --cluster my-ECS-cluster --region us-east-1

$ aws ecs list-clusters
{
    "clusterArns": [
        "arn:aws:ecs:us-east-1:526262051452:cluster/default"
    ]
}

Our cluster has been deleted!
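The order of the cleanup steps matters, so it can help to generate the commands in one place. The sketch below only builds and prints the three AWS CLI commands used above; it does not run them. The helper name cleanup_commands is made up for illustration.

```python
def cleanup_commands(cluster, service, region):
    """Return the cleanup commands in the order they must run."""
    target = ["--cluster", cluster, "--service", service]
    where = ["--region", region]
    return [
        # 1. Scale the service down so no new tasks are started.
        ["aws", "ecs", "update-service", *target, "--desired-count", "0", *where],
        # 2. Delete the service once it runs 0 tasks.
        ["aws", "ecs", "delete-service", *target, *where],
        # 3. Delete the now-empty cluster.
        ["aws", "ecs", "delete-cluster", "--cluster", cluster, *where],
    ]


commands = cleanup_commands("my-ECS-cluster", "my-nginx-service", "us-east-1")
for command in commands:
    print(" ".join(command))
```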

We may want to delete the LB we created as well.

