Dockerfiles : building Docker images automatically - FROM, MAINTAINER, and build context

We create Docker containers using [base] images. An image can be basic, with nothing but the operating-system fundamentals, or it can consist of a sophisticated pre-built application stack ready for launch.

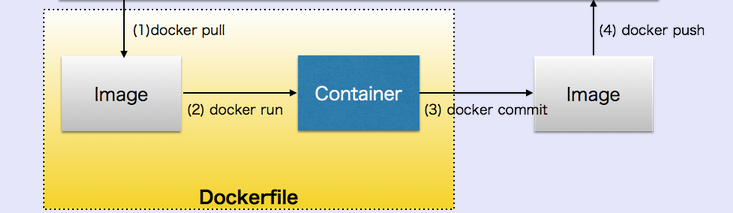

When we build images with docker, each action taken (i.e. a command executed such as apt-get install) forms a new layer on top of the previous one. These base images then can be used to create new containers.

Image source: Docker

In this chapter, we're going to learn how to automate this process via instructions in Dockerfiles. A Dockerfile is a text document that contains all the commands we would normally execute manually in order to build a Docker image. By calling docker image build from our terminal, we can have Docker build our image executing the instructions successively step-by-step, layer-by-layer, automatically from a source (base) image.

The docker project offers higher-level tools which work together, built on top of some Linux kernel features. The goal is to help developers and system administrators port applications with all of their dependencies included, and get them running across systems and machines headache free.

Docker achieves this by creating safe, LXC (Linux Containers) based environments for applications called docker containers. These containers are created using docker images, which can be built either by executing commands manually or automatically through Dockerfiles.

Each Dockerfile is a script, composed of various commands (instructions) and arguments listed successively to automatically perform actions on a base image in order to create (or form) a new one. They are used for organizing things and greatly help with deployments by simplifying the process start-to-finish.

Dockerfiles begin with defining an image FROM which the build process starts. Followed by various other methods, commands and arguments (or conditions), in return, provide a new image which is to be used for creating docker containers.

Here is our Dockerfile we're going to playing with in this chapter. We'll run instructions from this file step by step by uncommenting and commenting each line.

FROM debian:latest MAINTAINER devops@bogotobogo.com # 1 - RUN RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -yq apt-utils RUN DEBIAN_FRONTEND=noninteractive apt-get install -yq htop RUN apt-get clean # 2 - CMD CMD ["htop"] CMD ["ls", "-l"] # 3 - WORKDIR and ENV WORKDIR /root ENV DZ version1 # 4 - ADD ADD run.sh /root/run.sh CMD ["./run.sh"] # 5 - ENTRYPOINT (vs CMD) ENTRYPOINT ["./run.sh"] CMD ["arg1"]

Here is the format of the Dockerfile:

# Comment INSTRUCTION arguments

The Instruction is not case-sensitive, however convention is for them to be UPPERCASE in order to distinguish them from arguments more easily.

Docker runs the instructions in a Dockerfile in order. The first instruction must be FROM in order to specify the Base Image from which we are building.

Docker will treat lines that begin with # as a comment.

The FROM instruction sets the Base Image for subsequent instructions. As such, a valid Dockerfile must have FROM as its first instruction. The image can be any valid image. But it is especially easier to start by pulling an image from the Public Repositories.

MAINTAINER <name>

The MAINTAINER instruction allows us to set the Author field of the generated images.

To build an image from a source repository, we create a description file called Dockerfile at the root of our repository. This file will describe the steps to assemble the image.

Then we call docker image build with the path of our source repository as the argument:

Usage: docker image build [OPTIONS] PATH | URL | -

It will build a new image from the source code at PATH.

Here is an example using the current directory (".") as its path:

$ sudo docker image build .

The path to the source repository defines where to find the context of the build. The build is run by the Docker daemon, not by the CLI, so the whole context must be transferred to the daemon. The Docker CLI reports "Sending build context to Docker daemon" when the context is sent to the daemon.

Note: Avoid using root directory, /, as the root of the source repository. The docker image build command will use whatever directory contains the Dockerfile as the build context (including all of its subdirectories). The build context will be sent to the Docker daemon before building the image, which means if we use / as the source repository, the entire contents of our hard drive will get sent to the daemon (and thus to the machine running the daemon). We probably don't want that.

In most cases, it's best to put each Dockerfile in an empty directory, and then add only the files needed for building that Dockerfile to that directory. To further speed up the build, we can exclude files and directories by adding a .dockerignore file to the same directory.

The Docker daemon will run our steps one-by-one, committing the result to a new image if necessary, before finally outputting the ID of our new image. The Docker daemon will automatically clean up the context we sent.

Note that each instruction is run independently, and causes a new image to be created.

Whenever possible, Docker will re-use the intermediate images, accelerating docker build significantly.

$ docker build --help Usage: docker build [OPTIONS] PATH | URL | - Build a new image from the source code at PATH -t, --tag="" Repository name (and optionally a tag) to be applied to the resulting image in case of success

Continued in Dockerfile - Build Docker images automatically II - revisiting FROM, MAINTAINER, build context, and caching.

Docker & K8s

- Docker install on Amazon Linux AMI

- Docker install on EC2 Ubuntu 14.04

- Docker container vs Virtual Machine

- Docker install on Ubuntu 14.04

- Docker Hello World Application

- Nginx image - share/copy files, Dockerfile

- Working with Docker images : brief introduction

- Docker image and container via docker commands (search, pull, run, ps, restart, attach, and rm)

- More on docker run command (docker run -it, docker run --rm, etc.)

- Docker Networks - Bridge Driver Network

- Docker Persistent Storage

- File sharing between host and container (docker run -d -p -v)

- Linking containers and volume for datastore

- Dockerfile - Build Docker images automatically I - FROM, MAINTAINER, and build context

- Dockerfile - Build Docker images automatically II - revisiting FROM, MAINTAINER, build context, and caching

- Dockerfile - Build Docker images automatically III - RUN

- Dockerfile - Build Docker images automatically IV - CMD

- Dockerfile - Build Docker images automatically V - WORKDIR, ENV, ADD, and ENTRYPOINT

- Docker - Apache Tomcat

- Docker - NodeJS

- Docker - NodeJS with hostname

- Docker Compose - NodeJS with MongoDB

- Docker - Prometheus and Grafana with Docker-compose

- Docker - StatsD/Graphite/Grafana

- Docker - Deploying a Java EE JBoss/WildFly Application on AWS Elastic Beanstalk Using Docker Containers

- Docker : NodeJS with GCP Kubernetes Engine

- Docker : Jenkins Multibranch Pipeline with Jenkinsfile and Github

- Docker : Jenkins Master and Slave

- Docker - ELK : ElasticSearch, Logstash, and Kibana

- Docker - ELK 7.6 : Elasticsearch on Centos 7

- Docker - ELK 7.6 : Filebeat on Centos 7

- Docker - ELK 7.6 : Logstash on Centos 7

- Docker - ELK 7.6 : Kibana on Centos 7

- Docker - ELK 7.6 : Elastic Stack with Docker Compose

- Docker - Deploy Elastic Cloud on Kubernetes (ECK) via Elasticsearch operator on minikube

- Docker - Deploy Elastic Stack via Helm on minikube

- Docker Compose - A gentle introduction with WordPress

- Docker Compose - MySQL

- MEAN Stack app on Docker containers : micro services

- MEAN Stack app on Docker containers : micro services via docker-compose

- Docker Compose - Hashicorp's Vault and Consul Part A (install vault, unsealing, static secrets, and policies)

- Docker Compose - Hashicorp's Vault and Consul Part B (EaaS, dynamic secrets, leases, and revocation)

- Docker Compose - Hashicorp's Vault and Consul Part C (Consul)

- Docker Compose with two containers - Flask REST API service container and an Apache server container

- Docker compose : Nginx reverse proxy with multiple containers

- Docker & Kubernetes : Envoy - Getting started

- Docker & Kubernetes : Envoy - Front Proxy

- Docker & Kubernetes : Ambassador - Envoy API Gateway on Kubernetes

- Docker Packer

- Docker Cheat Sheet

- Docker Q & A #1

- Kubernetes Q & A - Part I

- Kubernetes Q & A - Part II

- Docker - Run a React app in a docker

- Docker - Run a React app in a docker II (snapshot app with nginx)

- Docker - NodeJS and MySQL app with React in a docker

- Docker - Step by Step NodeJS and MySQL app with React - I

- Installing LAMP via puppet on Docker

- Docker install via Puppet

- Nginx Docker install via Ansible

- Apache Hadoop CDH 5.8 Install with QuickStarts Docker

- Docker - Deploying Flask app to ECS

- Docker Compose - Deploying WordPress to AWS

- Docker - WordPress Deploy to ECS with Docker-Compose (ECS-CLI EC2 type)

- Docker - WordPress Deploy to ECS with Docker-Compose (ECS-CLI Fargate type)

- Docker - ECS Fargate

- Docker - AWS ECS service discovery with Flask and Redis

- Docker & Kubernetes : minikube

- Docker & Kubernetes 2 : minikube Django with Postgres - persistent volume

- Docker & Kubernetes 3 : minikube Django with Redis and Celery

- Docker & Kubernetes 4 : Django with RDS via AWS Kops

- Docker & Kubernetes : Kops on AWS

- Docker & Kubernetes : Ingress controller on AWS with Kops

- Docker & Kubernetes : HashiCorp's Vault and Consul on minikube

- Docker & Kubernetes : HashiCorp's Vault and Consul - Auto-unseal using Transit Secrets Engine

- Docker & Kubernetes : Persistent Volumes & Persistent Volumes Claims - hostPath and annotations

- Docker & Kubernetes : Persistent Volumes - Dynamic volume provisioning

- Docker & Kubernetes : DaemonSet

- Docker & Kubernetes : Secrets

- Docker & Kubernetes : kubectl command

- Docker & Kubernetes : Assign a Kubernetes Pod to a particular node in a Kubernetes cluster

- Docker & Kubernetes : Configure a Pod to Use a ConfigMap

- AWS : EKS (Elastic Container Service for Kubernetes)

- Docker & Kubernetes : Run a React app in a minikube

- Docker & Kubernetes : Minikube install on AWS EC2

- Docker & Kubernetes : Cassandra with a StatefulSet

- Docker & Kubernetes : Terraform and AWS EKS

- Docker & Kubernetes : Pods and Service definitions

- Docker & Kubernetes : Service IP and the Service Type

- Docker & Kubernetes : Kubernetes DNS with Pods and Services

- Docker & Kubernetes : Headless service and discovering pods

- Docker & Kubernetes : Scaling and Updating application

- Docker & Kubernetes : Horizontal pod autoscaler on minikubes

- Docker & Kubernetes : From a monolithic app to micro services on GCP Kubernetes

- Docker & Kubernetes : Rolling updates

- Docker & Kubernetes : Deployments to GKE (Rolling update, Canary and Blue-green deployments)

- Docker & Kubernetes : Slack Chat Bot with NodeJS on GCP Kubernetes

- Docker & Kubernetes : Continuous Delivery with Jenkins Multibranch Pipeline for Dev, Canary, and Production Environments on GCP Kubernetes

- Docker & Kubernetes : NodePort vs LoadBalancer vs Ingress

- Docker & Kubernetes : MongoDB / MongoExpress on Minikube

- Docker & Kubernetes : Load Testing with Locust on GCP Kubernetes

- Docker & Kubernetes : MongoDB with StatefulSets on GCP Kubernetes Engine

- Docker & Kubernetes : Nginx Ingress Controller on Minikube

- Docker & Kubernetes : Setting up Ingress with NGINX Controller on Minikube (Mac)

- Docker & Kubernetes : Nginx Ingress Controller for Dashboard service on Minikube

- Docker & Kubernetes : Nginx Ingress Controller on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Ingress with AWS ALB Ingress Controller in EKS

- Docker & Kubernetes : Setting up a private cluster on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Namespaces (default, kube-public, kube-system) and switching namespaces (kubens)

- Docker & Kubernetes : StatefulSets on minikube

- Docker & Kubernetes : RBAC

- Docker & Kubernetes Service Account, RBAC, and IAM

- Docker & Kubernetes - Kubernetes Service Account, RBAC, IAM with EKS ALB, Part 1

- Docker & Kubernetes : Helm Chart

- Docker & Kubernetes : My first Helm deploy

- Docker & Kubernetes : Readiness and Liveness Probes

- Docker & Kubernetes : Helm chart repository with Github pages

- Docker & Kubernetes : Deploying WordPress and MariaDB with Ingress to Minikube using Helm Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 2 Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 3 Chart

- Docker & Kubernetes : Helm Chart for Node/Express and MySQL with Ingress

- Docker & Kubernetes : Deploy Prometheus and Grafana using Helm and Prometheus Operator - Monitoring Kubernetes node resources out of the box

- Docker & Kubernetes : Deploy Prometheus and Grafana using kube-prometheus-stack Helm Chart

- Docker & Kubernetes : Istio (service mesh) sidecar proxy on GCP Kubernetes

- Docker & Kubernetes : Istio on EKS

- Docker & Kubernetes : Istio on Minikube with AWS EC2 for Bookinfo Application

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part I)

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part II - Prometheus, Grafana, pin a service, split traffic, and inject faults)

- Docker & Kubernetes : Helm Package Manager with MySQL on GCP Kubernetes Engine

- Docker & Kubernetes : Deploying Memcached on Kubernetes Engine

- Docker & Kubernetes : EKS Control Plane (API server) Metrics with Prometheus

- Docker & Kubernetes : Spinnaker on EKS with Halyard

- Docker & Kubernetes : Continuous Delivery Pipelines with Spinnaker and Kubernetes Engine

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-dind (docker-in-docker)

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-kind (k8s-in-docker)

- Docker & Kubernetes : nodeSelector, nodeAffinity, taints/tolerations, pod affinity and anti-affinity - Assigning Pods to Nodes

- Docker & Kubernetes : Jenkins-X on EKS

- Docker & Kubernetes : ArgoCD App of Apps with Heml on Kubernetes

- Docker & Kubernetes : ArgoCD on Kubernetes cluster

- Docker & Kubernetes : GitOps with ArgoCD for Continuous Delivery to Kubernetes clusters (minikube) - guestbook

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization