Docker: Setting up a private cluster on GCP Kubernetes

In this post, we'll learn how to create a private cluster in Google Kubernetes Engine.

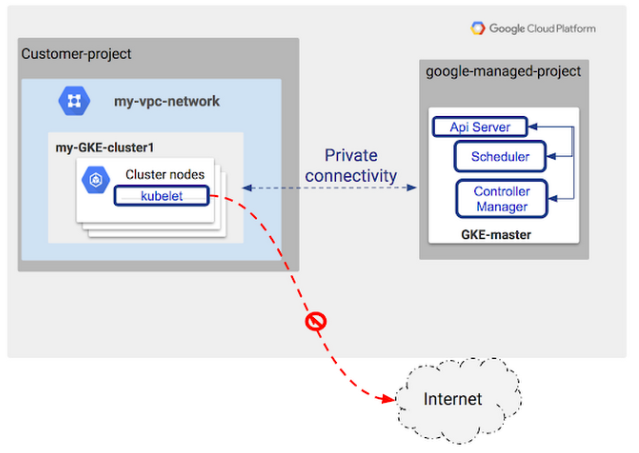

In Kubernetes Engine, a private cluster is a cluster that shields its master (every cluster has a Kubernetes API server called the master) from the public Internet; in the setup below, only explicitly authorized address ranges can reach it. In a private cluster, nodes have only internal RFC 1918 IP addresses and no public IP addresses, which isolates their workloads from the public Internet. Nodes and the master communicate with each other over VPC Network Peering.

Picture from Kubernetes Engine Private Clusters now available in beta

A private cluster can use an HTTP(S) load balancer or a network load balancer to accept incoming traffic, even though the cluster nodes do not have public IP addresses. A private cluster can also use an internal load balancer to accept traffic from within our VPC network.

Private clusters use the following GCP features:

VPC Network Peering:

Private clusters require VPC Network Peering. Your VPC network contains the cluster nodes, but a separate VPC network in a Google-owned project contains the master. The two VPC networks are connected using VPC Network Peering. Traffic between nodes and the master is routed entirely using internal IP addresses.

Private Google Access:

Private nodes do not have outbound Internet access because they don't have external IP addresses. Private Google Access provides private nodes and their workloads with limited outbound access to Google Cloud Platform APIs and services over Google's private network. For example, Private Google Access makes it possible for private nodes to pull container images from Google Container Registry, and to send logs to Stackdriver.

Google Cloud Shell runs on Google Cloud, comes loaded with development tools, and offers a persistent 5 GB home directory. It provides command-line access to our GCP resources. To activate it, click the Open Cloud Shell button on the top-right toolbar of the GCP console.

In the dialog box that opens, click "START CLOUD SHELL".

gcloud is the command-line tool for Google Cloud Platform. It comes pre-installed on Cloud Shell and supports tab-completion.

Set the zone in Cloud Shell:

$ gcloud config set compute/zone us-central1-a
Updated property [compute/zone].

We can list all available zones with gcloud compute zones list:

$ gcloud compute zones list
NAME REGION STATUS NEXT_MAINTENANCE TURNDOWN_DATE
us-east1-b us-east1 UP
us-east1-c us-east1 UP
us-east1-d us-east1 UP
us-east4-c us-east4 UP
us-east4-b us-east4 UP
us-east4-a us-east4 UP
us-central1-c us-central1 UP
us-central1-a us-central1 UP
us-central1-f us-central1 UP
us-central1-b us-central1 UP
us-west1-b us-west1 UP
us-west1-c us-west1 UP
us-west1-a us-west1 UP
...

When we create a private cluster, we must specify a /28 CIDR range for the VMs that run the Kubernetes master components, and we must enable IP aliases.

Next, we'll create a cluster named private-cluster and specify a CIDR range of 172.16.0.16/28 for the master. By enabling IP aliases and passing an empty --create-subnetwork value, we let Kubernetes Engine automatically create a subnetwork for us.
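The /28 rule is easy to get wrong when typing a range by hand. Below is a minimal Python sketch, using only the standard `ipaddress` module, that sanity-checks a proposed `--master-ipv4-cidr` value before it is passed to gcloud. The helper name `check_master_cidr` is hypothetical, and the RFC 1918 check is our own assumption matching the 172.16.0.16/28 example; gcloud and GKE do their own validation, which this does not reproduce.

```python
import ipaddress

# RFC 1918 private blocks (assumption: we want the master range drawn from these).
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

def check_master_cidr(cidr: str) -> ipaddress.IPv4Network:
    """Hypothetical helper: sanity-check a value for --master-ipv4-cidr."""
    # strict parsing rejects ranges with host bits set, e.g. 172.16.0.17/28
    net = ipaddress.ip_network(cidr)
    if net.version != 4 or net.prefixlen != 28:
        raise ValueError(f"{cidr} is not an IPv4 /28")
    if not any(net.subnet_of(block) for block in RFC1918):
        raise ValueError(f"{cidr} is not inside an RFC 1918 block")
    return net

net = check_master_cidr("172.16.0.16/28")
# A /28 holds 16 addresses: 172.16.0.16 through 172.16.0.31.
print(net.num_addresses, net[0], net[-1])
```

Values such as 172.16.0.0/24 (wrong size) or 172.16.0.17/28 (host bits set) raise `ValueError` instead of reaching the cluster-creation call.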

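We'll create the private cluster by using the --private-cluster, --master-ipv4-cidr, --enable-ip-alias, and --create-subnetwork flags. (In newer gcloud releases, --private-cluster has been superseded by --enable-private-nodes.)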

Run the following to create the cluster:

$ gcloud beta container clusters create private-cluster \
    --private-cluster \
    --master-ipv4-cidr 172.16.0.16/28 \
    --enable-ip-alias \
    --create-subnetwork ""
...
kubeconfig entry generated for private-cluster.
NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS
private-cluster us-central1-a 1.11.7-gke.4 35.225.169.164 n1-standard-1 1.11.7-gke.4 3 RUNNING

List the subnets in the default network:

$ gcloud compute networks subnets list --network default
NAME REGION NETWORK RANGE
default us-west2 default 10.168.0.0/20
default asia-northeast1 default 10.146.0.0/20
default us-west1 default 10.138.0.0/20
default southamerica-east1 default 10.158.0.0/20
default europe-west4 default 10.164.0.0/20
default asia-east1 default 10.140.0.0/20
default europe-north1 default 10.166.0.0/20
default asia-southeast1 default 10.148.0.0/20
default us-east4 default 10.150.0.0/20
default europe-west1 default 10.132.0.0/20
default europe-west2 default 10.154.0.0/20
default europe-west3 default 10.156.0.0/20
default australia-southeast1 default 10.152.0.0/20
default asia-south1 default 10.160.0.0/20
default us-east1 default 10.142.0.0/20
default us-central1 default 10.128.0.0/20
gke-private-cluster-subnet-d1a99990 us-central1 default 10.33.40.0/22
default asia-east2 default 10.170.0.0/20
default northamerica-northeast1 default 10.162.0.0/20

In the output, find the name of the subnetwork that was automatically created for our cluster; it looks like gke-private-cluster-subnet-xxxxxxxx. Save the subnet name; we'll use it in the next step.
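Picking the auto-created subnet out of that listing can also be scripted. This Python sketch works on a trimmed copy of the output above and assumes the `gke-<cluster>-subnet-<suffix>` naming seen there, which is an observation from this run rather than a documented contract; `find_cluster_subnet` is a hypothetical helper.

```python
import re

# Trimmed copy of the `gcloud compute networks subnets list --network default` output above.
LISTING = """\
NAME REGION NETWORK RANGE
default us-east1 default 10.142.0.0/20
default us-central1 default 10.128.0.0/20
gke-private-cluster-subnet-d1a99990 us-central1 default 10.33.40.0/22
default asia-east2 default 10.170.0.0/20
"""

def find_cluster_subnet(listing: str, cluster: str) -> str:
    """Return the NAME of the subnet auto-created for `cluster` (assumed naming pattern)."""
    pattern = re.compile(rf"^gke-{re.escape(cluster)}-subnet-[0-9a-z]+$")
    for line in listing.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if fields and pattern.match(fields[0]):
            return fields[0]
    raise LookupError(f"no auto-created subnet found for {cluster}")

print(find_cluster_subnet(LISTING, "private-cluster"))
```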

Now get information about the automatically created subnet by running the following command, replacing [SUBNET_NAME] with the name we just saved:

# gcloud compute networks subnets describe [SUBNET_NAME] --region us-central1
$ gcloud compute networks subnets describe gke-private-cluster-subnet-d1a99990 --region us-central1
...
gatewayAddress: 10.33.40.1
id: '8907292442116278553'
ipCidrRange: 10.33.40.0/22
kind: compute#subnetwork
name: gke-private-cluster-subnet-d1a99990
...
privateIpGoogleAccess: true
...
secondaryIpRanges:
- ipCidrRange: 10.33.0.0/20
  rangeName: gke-private-cluster-services-d1a99990
- ipCidrRange: 10.36.0.0/14
  rangeName: gke-private-cluster-pods-d1a99990
...

The output shows the subnet's primary address range (10.33.40.0/22) and its secondary ranges; the subnet and range names are derived from the name of our GKE private cluster.

One secondary range (10.36.0.0/14) is for pods, and the other (10.33.0.0/20) is for services.

Notice that privateIpGoogleAccess is set to true. This lets our cluster nodes, which have only private IP addresses, communicate with Google APIs and services.

At this point, the only IP addresses that have access to the master are the addresses in these ranges:

- The primary range of our subnetwork. This is the range used for nodes.

- The secondary range of our subnetwork that is used for pods.
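As a simplified model of this rule (GKE enforces it on the master; this is not how GKE implements it), the following Python sketch tests whether an address falls inside one of the two ranges above. The pod address 10.36.1.7 is made up for illustration; 10.128.0.2 and 35.202.39.147 are the internal and external addresses of the source-instance VM created below. Neither one is in these ranges, which is why we have to authorize an extra range.

```python
import ipaddress

# Ranges that can reach the master at this point (values from the subnet above).
AUTHORIZED = [
    ipaddress.ip_network("10.33.40.0/22"),  # primary range: nodes
    ipaddress.ip_network("10.36.0.0/14"),   # secondary range: pods
]

def may_reach_master(address: str, authorized=AUTHORIZED) -> bool:
    """Return True if `address` lies inside any authorized CIDR range."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in authorized)

for addr in ("10.33.40.3", "10.36.1.7", "10.128.0.2", "35.202.39.147"):
    print(addr, may_reach_master(addr))
```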

To provide additional access to the master, we must authorize selected address ranges.

Let's create a VM instance, which we'll use to check connectivity to the Kubernetes cluster:

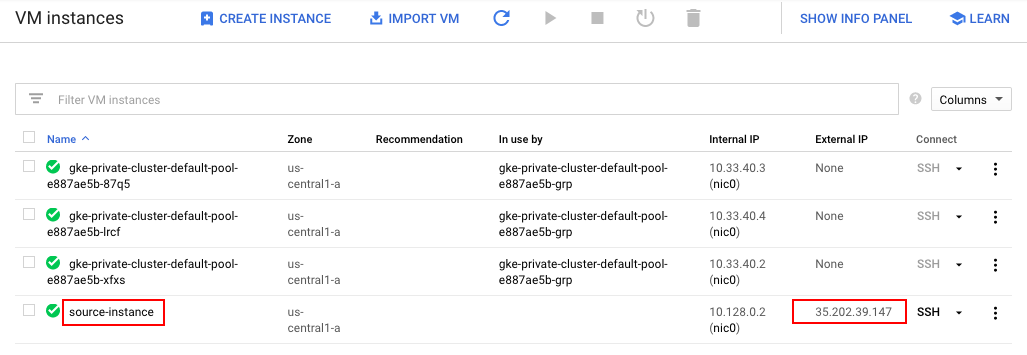

$ gcloud compute instances create source-instance --zone us-central1-a --scopes 'https://www.googleapis.com/auth/cloud-platform'
NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS
source-instance us-central1-a n1-standard-1 10.128.0.2 35.202.39.147 RUNNING

We can also get the external IP address (natIP) of source-instance with:

$ gcloud compute instances describe source-instance --zone us-central1-a | grep natIP
natIP: 35.202.39.147

We'll use this natIP address in later steps.

Run the following to authorize our external address range, replacing [MY_EXTERNAL_RANGE] with the CIDR range of the external address from the previous output. Our range is natIP/32, so we are authorizing exactly one specific IP address:

# gcloud container clusters update private-cluster \
    --enable-master-authorized-networks \
    --master-authorized-networks [MY_EXTERNAL_RANGE]

$ gcloud container clusters update private-cluster \
    --enable-master-authorized-networks \
    --master-authorized-networks 35.202.39.147/32
Updating private-cluster...done.
...
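Deriving the natIP/32 value can be sketched in Python as well. This hypothetical helper parses the `natIP:` line printed by the grep command above, rejects anything that is not an IPv4 address, and formats the single-host CIDR that goes into `--master-authorized-networks`.

```python
import ipaddress

def authorized_network_from_natip(describe_line: str) -> str:
    """Hypothetical helper: turn a 'natIP: a.b.c.d' line into 'a.b.c.d/32'."""
    key, _, value = describe_line.partition(":")
    if key.strip() != "natIP":
        raise ValueError(f"unexpected line: {describe_line!r}")
    ip = ipaddress.IPv4Address(value.strip())  # raises ValueError for non-IPv4 input
    return str(ipaddress.ip_network(f"{ip}/32"))  # a /32 covers exactly one address

cidr = authorized_network_from_natip("natIP: 35.202.39.147")
print(cidr)
print("gcloud container clusters update private-cluster "
      "--enable-master-authorized-networks "
      f"--master-authorized-networks {cidr}")
```

The flag accepts a comma-separated list, so further /32 entries could be joined with commas.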

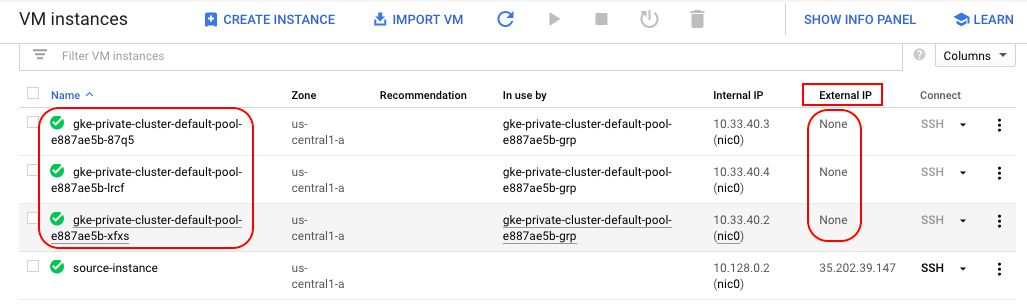

Now that the master accepts connections from our authorized external address, we'll install kubectl on source-instance so we can use it to get information about our cluster. For example, we can use kubectl to verify that our nodes do not have external IP addresses.

SSH into source-instance with:

$ gcloud compute ssh source-instance --zone us-central1-a
...
This tool needs to create the directory [/home/google2522490_student/.ssh] before being able to generate SSH keys.
Do you want to continue (Y/n)? y
Generating public/private rsa key pair.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
...

In the SSH shell, install kubectl:

$ sudo apt-get install kubectl
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following NEW packages will be installed:
  kubectl
0 upgraded, 1 newly installed, 0 to remove and 0 not upgraded.
Need to get 7,852 kB of archives.
After this operation, 39.3 MB of additional disk space will be used.
Get:1 http://packages.cloud.google.com/apt cloud-sdk-stretch/main amd64 kubectl amd64 1.13.3-00 [7,852 kB]
Fetched 7,852 kB in 0s (43.7 MB/s)
Selecting previously unselected package kubectl.
(Reading database ... 34432 files and directories currently installed.)
Preparing to unpack .../kubectl_1.13.3-00_amd64.deb ...
Unpacking kubectl (1.13.3-00) ...
Setting up kubectl (1.13.3-00) ...

Configure access to the Kubernetes cluster from the SSH shell with:

$ gcloud container clusters get-credentials private-cluster --zone us-central1-a
Fetching cluster endpoint and auth data.
kubeconfig entry generated for private-cluster.

Verify that our cluster nodes do not have external IP addresses:

$ kubectl get nodes --output yaml | grep -A4 addresses
addresses:
- address: 10.33.40.3
  type: InternalIP
- address: ""
  type: ExternalIP
--
addresses:
- address: 10.33.40.4
  type: InternalIP
- address: ""
  type: ExternalIP
--
addresses:
- address: 10.33.40.2
  type: InternalIP
- address: ""
  type: ExternalIP

The output shows that the nodes have internal IP addresses but do not have external addresses.
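The same check can be automated. This Python sketch parses a copy of the grep output above into address/type pairs, assuming each `- address:` line is immediately followed by its `type:` line, then confirms that no node has a non-empty external address and that every internal address lies in an RFC 1918 block. For anything beyond a quick check, `kubectl get nodes --output json` plus a JSON parser would be sturdier than grepping YAML.

```python
import ipaddress

# Copy of the `kubectl get nodes --output yaml | grep -A4 addresses` output above.
GREP_OUTPUT = """\
addresses:
- address: 10.33.40.3
  type: InternalIP
- address: ""
  type: ExternalIP
--
addresses:
- address: 10.33.40.4
  type: InternalIP
- address: ""
  type: ExternalIP
--
addresses:
- address: 10.33.40.2
  type: InternalIP
- address: ""
  type: ExternalIP
"""

RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

def parse_addresses(text: str) -> list:
    """Return (address, type) pairs from the grep output."""
    pairs, pending = [], None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("- address:"):
            # strip the surrounding quotes so '""' becomes an empty string
            pending = line.split(":", 1)[1].strip().strip('"')
        elif line.startswith("type:") and pending is not None:
            pairs.append((pending, line.split(":", 1)[1].strip()))
            pending = None
    return pairs

pairs = parse_addresses(GREP_OUTPUT)
internal = [a for a, t in pairs if t == "InternalIP"]
external = [a for a, t in pairs if t == "ExternalIP" and a]  # non-empty external IPs only
print("internal:", internal)
print("external:", external)  # an empty list means no node has a public IP
print("all internal in RFC 1918:",
      all(any(ipaddress.ip_address(a) in block for block in RFC1918) for a in internal))
```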

Here is another command we can use to verify that our nodes do not have external IP addresses:

$ kubectl get nodes --output wide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
gke-private-cluster-default-pool-e887ae5b-87q5 Ready <none> 41m v1.11.7-gke.4 10.33.40.3 Container-Optimized OS from Google 4.14.89+ docker://17.3.2
gke-private-cluster-default-pool-e887ae5b-lrcf Ready <none> 41m v1.11.7-gke.4 10.33.40.4 Container-Optimized OS from Google 4.14.89+ docker://17.3.2
gke-private-cluster-default-pool-e887ae5b-xfxs Ready <none> 41m v1.11.7-gke.4 10.33.40.2 Container-Optimized OS from Google 4.14.89+ docker://17.3.2

Delete the Kubernetes cluster:

$ gcloud container clusters delete private-cluster --zone us-central1-a
The following clusters will be deleted.
 - [private-cluster] in [us-central1-a]
Do you want to continue (Y/n)? Y
Deleting cluster private-cluster...done.
...


Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization