Docker : Dockerfile - NodeJS with hostname

In this post, we'll learn how to run a Node.js application in a Docker container, and then we'll run it in Kubernetes (actually, on minikube).

To package our app into an image, we first need to create a Dockerfile, which will contain a list of instructions that Docker will perform when building the image.

The Dockerfile needs to be in the same directory as the app.js file (see next section) and should contain the following instructions:

FROM node:7
ADD app.js /app.js
ENTRYPOINT ["node", "app.js"]

The FROM line defines the container image we'll use as a starting point (the base image we're building on top of). In our case, we're using the node container image, tag 7.

In the second line, we're adding our app.js file from our local directory into the root directory in the image, under the same name (app.js).

Finally, in the third line, we're defining what command should be executed when somebody runs the image.

In our case, the command is node app.js.
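To make the three instructions concrete, here is a small Node.js sketch (not something Docker itself uses) that splits the Dockerfile into instruction/argument pairs and decodes the JSON-array (exec) form of ENTRYPOINT into the argument list that gets executed. The helper names parseDockerfile and entrypointArgv are hypothetical, and the parser deliberately ignores line continuations, parser directives, and other Dockerfile syntax.

```javascript
// Hypothetical helper: a deliberately minimal reader for this post's
// three-line Dockerfile. It does NOT handle "\" line continuations,
// parser directives, or heredocs.
function parseDockerfile(text) {
  return text
    .split('\n')
    .map((line) => line.trim())
    .filter((line) => line !== '' && !line.startsWith('#'))
    .map((line) => {
      const space = line.search(/\s/);
      const instruction = (space === -1 ? line : line.slice(0, space)).toUpperCase();
      const args = space === -1 ? '' : line.slice(space + 1).trim();
      return { instruction, args };
    });
}

// The last ENTRYPOINT wins. The exec form is a JSON array run without a
// shell; the shell form is wrapped in "/bin/sh -c".
function entrypointArgv(instructions) {
  const ep = instructions.filter((i) => i.instruction === 'ENTRYPOINT').pop();
  if (!ep) return null;
  return ep.args.startsWith('[') ? JSON.parse(ep.args) : ['/bin/sh', '-c', ep.args];
}

const dockerfile = [
  'FROM node:7',
  'ADD app.js /app.js',
  'ENTRYPOINT ["node", "app.js"]',
].join('\n');

const parsed = parseDockerfile(dockerfile);
console.log(parsed.map((i) => i.instruction)); // [ 'FROM', 'ADD', 'ENTRYPOINT' ]
console.log(entrypointArgv(parsed));           // [ 'node', 'app.js' ]
```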

Before we build our Docker images, we need to create a simple web application in Node.js.

Let's create a directory ('NODEJS') for our project in our non-root user's home directory.

$ mkdir NODEJS
$ cd NODEJS

This will be the root directory of our project.

Now we'll build a simple Node.js web application and package it into a container image. The application will accept HTTP requests and respond with the hostname of the machine it's running in. This way, we'll see that an app running inside a container sees its own hostname and not that of the host machine, even though it's running on the host like any other process.

This will be useful when we deploy the app on Kubernetes and scale it out: we'll see our HTTP requests hitting different instances of the app. Here's app.js:

const http = require('http');
const os = require('os');

console.log("bogo server starting and listening on 8080...");

// Log the client's address and reply with the hostname of the machine
// (or container) this process is running in.
const handler = function(request, response) {
  console.log("Received request from " + request.socket.remoteAddress);
  response.writeHead(200);
  response.end("You've hit " + os.hostname() + "\n");
};

const www = http.createServer(handler);
www.listen(8080);

It starts up an HTTP server on port 8080. The server responds with an HTTP response status code 200 OK and the text "You've hit <hostname>" to every request. The request handler also logs the client's IP address to the standard output, which we'll need later.

The returned hostname is the server's actual hostname, not the one the client sends in the HTTP request's Host header.
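Before containerizing the app, it can help to check its behavior locally. The following sketch, an illustration rather than part of the original project, runs the same handler logic on an OS-assigned port (port 0 instead of 8080, so it won't clash with anything), sends one request with http.get, and shuts the server down. The selfCheck name is hypothetical. Run on the host, it reports the host machine's name; inside a container, the same code reports the container's hostname.

```javascript
const http = require('http');
const os = require('os');

// Same response logic as app.js.
function handler(request, response) {
  console.log('Received request from ' + request.socket.remoteAddress);
  response.writeHead(200);
  response.end("You've hit " + os.hostname() + '\n');
}

// Start the server on an ephemeral port, issue one GET, then close the server.
function selfCheck() {
  return new Promise((resolve, reject) => {
    const server = http.createServer(handler);
    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address();
      // agent: false => no keep-alive, so the connection closes after the response.
      http.get({ host: '127.0.0.1', port, path: '/', agent: false }, (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => (body += chunk));
        res.on('end', () => {
          server.close();
          resolve({ status: res.statusCode, body });
        });
      }).on('error', (err) => {
        server.close();
        reject(err);
      });
    });
  });
}

selfCheck().then(({ status, body }) => {
  console.log(status, JSON.stringify(body));
});
```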

Now that we have our Dockerfile and the app.js file, we have everything we need to build our image. To build it, run the following Docker command:

$ docker build -t bogo .

We're telling Docker to build an image called bogo based on the contents of the current directory (note the dot at the end of the build command). Docker will look for the Dockerfile in the directory and build the image based on the instructions in the file.

The build process isn't performed by the Docker client. Instead, the contents of the whole directory are uploaded to the Docker daemon and the image is built there.
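Because the whole directory is sent to the daemon, it pays to keep the project directory small. As a rough illustration (contextSize is a hypothetical helper, not part of Docker), the sketch below walks a directory and totals the bytes of the regular files in it, which approximates how much data would go into the build context if nothing were excluded. Docker also honors a .dockerignore file, which this sketch does not.

```javascript
const fs = require('fs');
const path = require('path');

// Recursively sum the sizes of regular files under dir (symlinks are skipped).
function contextSize(dir) {
  let total = 0;
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      total += contextSize(full);
    } else if (entry.isFile()) {
      total += fs.statSync(full).size;
    }
  }
  return total;
}

// Usage: node context-size.js ~/NODEJS
const target = process.argv[2];
if (target) {
  console.log(`build context: ${contextSize(target)} bytes`);
}
```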

During the build process, Docker will first pull the base image (node:7) from the public image repository (Docker Hub), unless the image has already been pulled and is stored on our machine.

An image isn't a single, big binary blob; rather, it is composed of multiple layers. Different images may share several layers, which makes storing and transferring images much more efficient. For example, if we create multiple images based on the same base image (such as node:7 in the example), all the layers comprising the base image will be stored only once. Also, when pulling an image, Docker will download each layer individually. Several layers may already be stored on our machine, so Docker will only download those that aren't.
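A simplified model of why shared layers are stored only once: if every layer is identified by a digest of its content (Docker identifies layers by SHA-256 digests), a local store keyed by that digest deduplicates them automatically. The LayerStore class and the layer contents below are made up for illustration; real layers are tar archives, and Docker's storage drivers are considerably more involved.

```javascript
const crypto = require('crypto');

// Toy content-addressed store: layers are keyed by the SHA-256 of their bytes.
class LayerStore {
  constructor() {
    this.layers = new Map(); // digest -> content
  }

  // Returns the digest and whether the layer actually had to be stored ("downloaded").
  add(content) {
    const digest = 'sha256:' + crypto.createHash('sha256').update(content).digest('hex');
    const isNew = !this.layers.has(digest);
    if (isNew) this.layers.set(digest, content);
    return { digest, isNew };
  }

  // "Pull" an image given as a list of layer contents; only missing layers are stored.
  pull(imageLayers) {
    return imageLayers.map((layer) => this.add(layer));
  }
}

// Hypothetical layer contents: two images built FROM the same base image.
const base = ['base-os-files', 'node-runtime-files'];
const store = new LayerStore();
const first = store.pull([...base, 'app.js v1']);
const second = store.pull([...base, 'other-app.js']);

console.log(first.filter((l) => l.isNew).length);  // 3
console.log(second.filter((l) => l.isNew).length); // 1 (base layers already present)
console.log(store.layers.size);                    // 4
```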

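We may think that each Dockerfile creates only a single new layer, but that's not the case. When building an image, a new layer is created for each individual instruction in the Dockerfile. Only instructions that change the filesystem (such as ADD, COPY, and RUN) add file content; instructions such as ENTRYPOINT only record metadata and show up with a size of 0B in docker history.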

During the build of our image, after pulling all the layers of the base image, Docker will create a new layer on top of them and add the app.js file into it. Then it will create yet another layer that will specify the command that should be executed when the image is run. This last layer will then be tagged as bogo:latest.

When the build process completes, we have a new image stored locally. We can see it by telling Docker to list all locally stored images, as shown in the following:

$ docker images
REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
bogo         latest   5ed8ebb22b16   9 minutes ago   660MB

Dockerfiles are the usual way of building container images with Docker, but we could also build the image manually by running a container from an existing image, executing commands in the container, exiting the container, and committing the final state as a new image. This is exactly what happens when we build from a Dockerfile, but it's performed automatically and is repeatable, which allows us to make changes to the Dockerfile and rebuild the image any time, without having to manually retype all the commands again.

We can now run our image with the following command:

$ docker run --name bogo-container -p 8080:8080 -d bogo
5291fb56f8586acf40a08d4a7969198cb6e60d029dbec079dd2b94187f3df3f

This tells Docker to run a new container called bogo-container from the bogo image.

The container will be detached from the console (-d flag), which means it will run in the background. Port 8080 on the local machine will be mapped to port 8080 inside the container (-p 8080:8080 option), so we can access the app through http://localhost:8080.

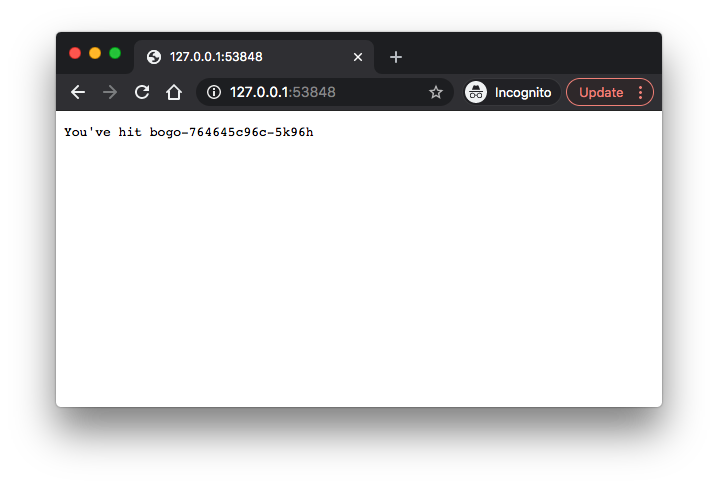

Now try to access our application at http://localhost:8080:

$ curl localhost:8080
You've hit 5291fb56f858

That’s the response from our app. Our tiny application is now running inside a container, isolated from everything else. As we can see, it’s returning 5291fb56f858 as its hostname, and not the actual hostname of our host machine. The hexadecimal number is the short form (the first 12 characters) of the container ID that docker run printed, which Docker uses as the container's default hostname.
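The curl check can also be done from Node.js. The sketch below, assuming the container is running with port 8080 published as above, fetches the page, extracts the reported hostname, and checks whether it looks like a 12-character short container ID. The script name check-hostname.js and the helper names are hypothetical.

```javascript
const http = require('http');

// Extract "<hostname>" from the app's "You've hit <hostname>\n" response body.
function parseHostname(body) {
  const match = /^You've hit (\S+)\s*$/.exec(body);
  return match ? match[1] : null;
}

// By default Docker sets a container's hostname to the first 12 hex
// characters of its container ID.
function looksLikeShortContainerId(hostname) {
  return /^[0-9a-f]{12}$/.test(hostname);
}

// GET the URL on a fresh connection and resolve with the reported hostname.
function fetchHostname(url) {
  return new Promise((resolve, reject) => {
    http.get(url, { agent: false }, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => (body += chunk));
      res.on('end', () => resolve(parseHostname(body)));
    }).on('error', reject);
  });
}

// Usage: node check-hostname.js http://localhost:8080
const url = process.argv[2];
if (url) {
  fetchHostname(url).then(
    (hostname) => console.log(hostname, looksLikeShortContainerId(hostname) ? '(container ID)' : ''),
    (err) => console.error(err.message)
  );
}
```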

Let's list all running containers:

$ docker ps
CONTAINER ID   IMAGE   COMMAND         CREATED         STATUS         PORTS                    NAMES
5291fb56f858   bogo    "node app.js"   5 minutes ago   Up 5 minutes   0.0.0.0:8080->8080/tcp   bogo-container

A single container is running. For each container, Docker prints out its ID and name, the image used to run the container, and the command that's executing inside the container.

The docker ps command only shows the most basic information about the containers.

To see additional information, we can use docker inspect:

$ docker inspect bogo-container

Docker will print out a long JSON containing low-level information about the container.
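Since docker inspect prints a JSON array with one object per inspected container, its output is easy to post-process. The sketch below, assuming the docker CLI is on the PATH and the container name is passed as an argument, runs docker inspect through child_process and keeps a few fields (Name, Config.Hostname, Config.Image, State.Status, and the keys of HostConfig.PortBindings). The summarize function is hypothetical; only those field names come from Docker's inspect output.

```javascript
const { execFileSync } = require('child_process');

// Reduce `docker inspect` output (a JSON array) to a few fields of interest.
function summarize(inspectJson) {
  return JSON.parse(inspectJson).map((c) => ({
    name: c.Name,                // e.g. "/bogo-container"
    hostname: c.Config.Hostname, // the short container ID by default
    image: c.Config.Image,
    status: c.State.Status,      // "running", "exited", ...
    ports: Object.keys((c.HostConfig && c.HostConfig.PortBindings) || {}),
  }));
}

// Usage: node inspect-summary.js bogo-container
const name = process.argv[2];
if (name) {
  const out = execFileSync('docker', ['inspect', name], { encoding: 'utf8' });
  console.log(summarize(out));
}
```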

What if we want to see what the environment is like inside the container? Because multiple processes can run inside the same container, we can always run an additional process in it to see what's inside. We can even run a shell, provided that the shell's binary executable is available in the image.

The Node.js image on which we've based our image contains the bash shell, so we can run the shell inside the container like this:

$ docker exec -it bogo-container bash
root@5291fb56f858:/#

This will run bash inside the existing bogo-container container. The bash process will have the same Linux namespaces as the main container process. This allows us to explore the container from within and see how Node.js and our app see the system when running inside the container. The -it option is shorthand for two options:

- -i, which makes sure STDIN is kept open. We need this for entering commands into the shell.

- -t, which allocates a pseudo terminal (TTY).

Note that we need both if we want to use the shell like we're used to. (If we leave out the first one, we can't type any commands, and if we leave out the second one, the command prompt won't be displayed.)

Let's use the shell to see the processes running in the container:

# ps aux
USER  PID %CPU %MEM    VSZ   RSS TTY   STAT START TIME COMMAND
root    1  0.0  1.0 614436 26676 ?     Ssl  20:07 0:00 node app.js
root   11  0.0  0.1  20248  3084 pts/0 Ss   20:20 0:00 bash
root   16  0.0  0.0  17504  2056 pts/0 R+   20:26 0:00 ps aux

We only see three processes. Note that we don't see any other processes from the host OS.
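Note that our node process has PID 1 inside the container, the PID that init would have on a full machine. As an illustration (parsePs is a hypothetical helper), the following splits the ps aux output from this post into columns and looks up PID 1.

```javascript
// Parse `ps aux` output: 10 whitespace-separated columns, then COMMAND
// (which may itself contain spaces).
function parsePs(text) {
  const [header, ...rows] = text.trim().split('\n');
  const columns = header.trim().split(/\s+/);
  return rows.map((row) => {
    const parts = row.trim().split(/\s+/);
    const entry = {};
    columns.slice(0, -1).forEach((col, i) => (entry[col] = parts[i]));
    entry.COMMAND = parts.slice(columns.length - 1).join(' ');
    return entry;
  });
}

// The container's `ps aux` output shown above.
const psOutput = `
USER  PID %CPU %MEM    VSZ   RSS TTY   STAT START TIME COMMAND
root    1  0.0  1.0 614436 26676 ?     Ssl  20:07 0:00 node app.js
root   11  0.0  0.1  20248  3084 pts/0 Ss   20:20 0:00 bash
root   16  0.0  0.0  17504  2056 pts/0 R+   20:26 0:00 ps aux`;

const procs = parsePs(psOutput);
const init = procs.find((p) => p.PID === '1');
console.log(procs.length, init.COMMAND); // 3 node app.js
```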

If we now open another terminal and list the processes on the host OS itself, we will, among all other host processes, also see the processes running in the container. On macOS, we'll need to log into the VM where the Docker daemon is running to see these processes:

$ screen ~/Library/Containers/com.docker.docker/Data/vms/0/tty
docker-desktop:~# ps aux|grep app.js
136727 root      0:00 node app.js
136789 root      0:00 grep app.js

This proves that processes running in the container are running in the host OS. Notice that the processes have different IDs inside the container vs. on the host. The container is using its own PID Linux namespace and has a completely isolated process tree, with its own sequence of numbers.

Listing the contents of the root directory inside the container will only show the files in the container and will include all the files that are in the image plus any files that are created while the container is running (log files and similar) as shown below:

root@5291fb56f858:/# ls /
app.js  boot  etc   lib    media  opt   root  sbin  sys  usr
bin     dev   home  lib64  mnt    proc  run   srv   tmp  var

It contains the app.js file and other system directories that are part of the node:7 base image we're using. To exit the container, we exit the shell by running the exit command, and we'll be returned to our host machine.

To stop our app, we tell Docker to stop the bogo-container container:

$ docker stop bogo-container
bogo-container

This will stop the main process running in the container and consequently stop the container, because no other processes are running inside the container.

The container itself still exists and we can see it with docker ps -a.

The -a option prints out all the containers, those running and those that have been stopped.

To truly remove a container, we need to remove it with the docker rm command:

$ docker ps -a
CONTAINER ID   IMAGE   COMMAND         CREATED       STATUS                       PORTS   NAMES
5291fb56f858   bogo    "node app.js"   3 hours ago   Exited (137) 2 minutes ago           bogo-container
$ docker rm bogo-container
bogo-container
$ docker ps -a
CONTAINER ID   IMAGE   COMMAND         CREATED       STATUS                       PORTS   NAMES

This deletes the container. All its contents are removed and it can't be started again.

So far, the image we built is only available on our local machine. To run it on any other machine, we need to push it to an external image registry. We'll use Docker Hub (http://hub.docker.com), which is one of the publicly available registries.

Before we do that, we need to re-tag our image according to Docker Hub’s rules. Docker Hub will allow us to push an image if the image's repository name starts with our Docker Hub ID. Here, I'll use my own ID (dockerbogo):

$ docker tag bogo dockerbogo/bogo

Note that this doesn't rename the tag; instead, it creates an additional tag for the same image.

We can confirm this by listing the images stored on our system with the docker images command as shown here:

$ docker images
REPOSITORY        TAG      IMAGE ID       CREATED       SIZE
dockerbogo/bogo   latest   5ed8ebb22b16   3 hours ago   660MB
bogo              latest   5ed8ebb22b16   3 hours ago   660MB

As we see, both bogo and dockerbogo/bogo point to the same image ID, so they're in fact one single image with two tags.
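The tagging rule can be expressed as a small check. The sketch below splits an image reference into repository and tag (defaulting the tag to latest, as Docker does) and tests whether the repository sits under a given Docker Hub ID, following the rule described above. It is a simplification: it ignores digests (@sha256:...) and an explicit docker.io/ prefix, and it only handles a registry host with a port insofar as it won't mistake that colon for a tag separator. The function names are hypothetical.

```javascript
// Split "name[:tag]" into repository and tag. A colon only separates a tag if
// it appears after the last "/", so "localhost:5000/bogo" has no explicit tag.
function parseReference(ref) {
  const lastSlash = ref.lastIndexOf('/');
  const lastColon = ref.lastIndexOf(':');
  if (lastColon > lastSlash) {
    return { repository: ref.slice(0, lastColon), tag: ref.slice(lastColon + 1) };
  }
  return { repository: ref, tag: 'latest' };
}

// Pushable to Docker Hub under hubId if the repository starts with "<hubId>/".
function pushableTo(ref, hubId) {
  return parseReference(ref).repository.startsWith(hubId + '/');
}

console.log(parseReference('bogo'));                      // { repository: 'bogo', tag: 'latest' }
console.log(pushableTo('bogo', 'dockerbogo'));            // false
console.log(pushableTo('dockerbogo/bogo', 'dockerbogo')); // true
```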

Before we push the image to Docker Hub, we need to log in under our user ID with the docker login command.

Once we're logged in, we can push the dockerbogo/bogo image to Docker Hub like this:

$ docker login -u dockerbogo
Password:
Login Succeeded
$ docker push dockerbogo/bogo

The simplest way to deploy our app is to use the kubectl create deployment command, which will create all the necessary components without us having to deal with JSON or YAML.

We'll run the image we pushed to Docker Hub earlier in Kubernetes:

$ minikube start

$ kubectl create deployment bogo --image=dockerbogo/bogo

deployment.apps/bogo created

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

bogo-764645c96c-5k96h 1/1 Running 0 31s

$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

bogo 1/1 1 1 51s

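When we ran the kubectl create deployment command, kubectl sent a new Deployment object to the Kubernetes API server. The Deployment created a ReplicaSet, which in turn created a pod, and the Scheduler assigned that pod to a node (minikube has just one). The Kubelet on that node saw that the pod was scheduled to it and instructed Docker to pull the specified image from the registry, because the image wasn't available locally. After downloading the image, Docker created and ran the container.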
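kubectl create deployment saves us from writing a manifest, but it helps to see roughly what it submits. The sketch below builds an approximately equivalent Deployment as a plain object and prints it as JSON, which kubectl apply -f accepts just as it accepts YAML. The app: bogo label and the container name bogo mirror what kubectl create deployment bogo --image=dockerbogo/bogo generates, to the best of our understanding; the real object also carries defaults (update strategy and so on) that are omitted here, and deploymentManifest is a hypothetical name.

```javascript
// Roughly what `kubectl create deployment bogo --image=dockerbogo/bogo` creates.
function deploymentManifest(name, image, replicas = 1) {
  const labels = { app: name };
  return {
    apiVersion: 'apps/v1',
    kind: 'Deployment',
    metadata: { name, labels },
    spec: {
      replicas,
      selector: { matchLabels: labels }, // must match the pod template's labels
      template: {
        metadata: { labels },
        spec: {
          containers: [{ name, image }],
        },
      },
    },
  };
}

// Save with:  node deployment.js > bogo-deployment.json
// Apply with: kubectl apply -f bogo-deployment.json
console.log(JSON.stringify(deploymentManifest('bogo', 'dockerbogo/bogo'), null, 2));
```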

With our pod running, how do we access it?

Each pod gets its own IP address; however, this address is internal to the cluster and isn't accessible from outside of it. To make the pod accessible from the outside, we'll expose it through a Service object.

We need to create a special service of type LoadBalancer, because a regular service (a ClusterIP service), like the pod, would also only be accessible from inside the cluster. By creating a LoadBalancer-type service, an external load balancer will be created and we can connect to the pod through the load balancer's public IP.

$ kubectl expose deployment bogo --type=LoadBalancer --name=bogo-service --port=8080
service/bogo-service exposed
$ kubectl get svc
NAME           TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
bogo-service   LoadBalancer   10.109.92.19   <pending>     8080:30500/TCP   2m33s
kubernetes     ClusterIP      10.96.0.1      <none>        443/TCP          130m

The list shows two services. Ignore the kubernetes service for now and take a close look at the bogo-service we created. It doesn't have an external IP address yet, because it takes time for the load balancer to be created by the cloud infrastructure Kubernetes is running on. On a cloud provider such as AWS or GCP, the service's external IP address would be displayed once the load balancer is up. Minikube, however, doesn't provision a cloud load balancer, so unless we run minikube tunnel, the EXTERNAL-IP column stays <pending>.

However, we can still reach the service through its node port by running:

minikube service <service_name>

$ minikube service bogo-service
|-----------|--------------|-------------|-------------------------|
| NAMESPACE |     NAME     | TARGET PORT |           URL           |
|-----------|--------------|-------------|-------------------------|
| default   | bogo-service |        8080 | http://172.17.0.4:30500 |
|-----------|--------------|-------------|-------------------------|
Starting tunnel for service bogo-service.
|-----------|--------------|-------------|------------------------|
| NAMESPACE |     NAME     | TARGET PORT |          URL           |
|-----------|--------------|-------------|------------------------|
| default   | bogo-service |             | http://127.0.0.1:53848 |
|-----------|--------------|-------------|------------------------|
Opening service default/bogo-service in default browser...
Because you are using a Docker driver on darwin, the terminal needs to be open to run it.

If we look closely, we'll see that the app is reporting the name of the pod as its hostname. As mentioned earlier, each pod behaves like a separate independent machine with its own IP address and hostname. Even though the application is running in the worker node's operating system, to the app it appears as though it's running on a separate machine dedicated to the app itself, with no other processes running alongside it.

The basic building block in Kubernetes is the pod. But we didn't create any pods ourselves, at least not directly.

By running the kubectl create deployment command, we created a Deployment, and this Deployment (through the ReplicaSet it manages) is what created the actual Pod object. To make that pod accessible from outside the cluster, we told Kubernetes to expose all the pods managed by the Deployment as a single Service.
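For reference, the Service that our kubectl expose command created can also be written by hand. The sketch below builds a roughly equivalent Service object as JSON (which kubectl apply -f accepts). As far as we know, kubectl expose copies the Deployment's selector (app: bogo) and, without --target-port, uses the same value for port and targetPort; labels and the allocated nodePort (30500 in the kubectl get svc output) are left for Kubernetes to fill in. The serviceManifest name is hypothetical.

```javascript
// Roughly what `kubectl expose deployment bogo --type=LoadBalancer
// --name=bogo-service --port=8080` creates.
function serviceManifest(name, appLabel, port) {
  return {
    apiVersion: 'v1',
    kind: 'Service',
    metadata: { name },
    spec: {
      type: 'LoadBalancer',
      selector: { app: appLabel }, // pods with this label receive the traffic
      // Kubernetes allocates a nodePort automatically when none is given.
      ports: [{ protocol: 'TCP', port, targetPort: port }],
    },
  };
}

// Save with:  node service.js > bogo-service.json
// Apply with: kubectl apply -f bogo-service.json
console.log(JSON.stringify(serviceManifest('bogo-service', 'bogo', 8080), null, 2));
```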

The most important component in our system is the pod. It contains only a single container, but generally a pod can contain as many containers as we want. Inside the container is our Node.js process, which is bound to port 8080 and is waiting for HTTP requests. The pod has its own unique private IP address and hostname.

Another component of our system is the "bogo-service" service. To understand why we need services, we need to learn a key detail about pods: they're ephemeral. A pod may disappear at any time because the node it's running on has failed, because someone deleted the pod, or because the pod was evicted from an otherwise healthy node. When any of those occurs, the missing pod is replaced with a new one by the ReplicaSet that our Deployment manages. This new pod gets a different IP address from the pod it's replacing. This is where services come in and solve the problem of ever-changing pod IP addresses, as well as exposing multiple pods at a single constant IP and port pair.

On the other hand, when a service is created, it gets a static IP, which never changes during the lifetime of the service. Instead of connecting to pods directly, clients should connect to the service through its constant IP address. The service makes sure one of the pods receives the connection, regardless of where the pod is currently running and regardless of what its IP address is.

Services represent a static location for a group of one or more pods that all provide the same service. Requests coming to the IP and port of the service will be forwarded to the IP and port of one of the pods belonging to the service at that moment.
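To make selector-based routing concrete, here is a toy model, not how kube-proxy is actually implemented: the service keeps a fixed address, looks up the pods whose labels match its selector at request time, and forwards to one of them at random (kube-proxy in its default iptables mode also picks a backend at random). The ToyService class and the pod names and IPs are hypothetical; the cluster IP is the one from the kubectl get svc output.

```javascript
// A toy Service: a stable address in front of a changing set of pods.
class ToyService {
  constructor(clusterIP, targetPort, selector) {
    this.clusterIP = clusterIP;   // never changes for the service's lifetime
    this.targetPort = targetPort;
    this.selector = selector;     // e.g. { app: 'bogo' }
  }

  // Endpoints are recomputed from the current pods, so replacements are picked up.
  endpoints(pods) {
    return pods.filter((pod) =>
      Object.entries(this.selector).every(([k, v]) => pod.labels[k] === v));
  }

  // Pick one matching pod at random and return the address to forward to.
  route(pods, random = Math.random) {
    const eps = this.endpoints(pods);
    if (eps.length === 0) throw new Error('no endpoints behind ' + this.clusterIP);
    const pod = eps[Math.floor(random() * eps.length)];
    return `${pod.ip}:${this.targetPort}`;
  }
}

const svc = new ToyService('10.109.92.19', 8080, { app: 'bogo' });
let pods = [
  { name: 'bogo-a', ip: '172.17.0.5', labels: { app: 'bogo' } },
  { name: 'other', ip: '172.17.0.6', labels: { app: 'other' } },
];
console.log(svc.route(pods)); // 172.17.0.5:8080

// The pod dies and is replaced by one with a new IP; clients still use svc.clusterIP.
pods = [{ name: 'bogo-b', ip: '172.17.0.9', labels: { app: 'bogo' } }, pods[1]];
console.log(svc.route(pods)); // 172.17.0.9:8080
```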

$ kubectl scale --replicas=3 deployment/bogo
deployment.apps/bogo scaled
$ kubectl get pods
NAME                    READY   STATUS              RESTARTS   AGE
bogo-764645c96c-5k96h   1/1     Running             0          62m
bogo-764645c96c-m26f2   0/1     ContainerCreating   0          8s
bogo-764645c96c-r6r9q   0/1     ContainerCreating   0          8s
$ kubectl get pods
NAME                    READY   STATUS    RESTARTS   AGE
bogo-764645c96c-5k96h   1/1     Running   0          64m
bogo-764645c96c-m26f2   1/1     Running   0          96s
bogo-764645c96c-r6r9q   1/1     Running   0          96s

We've now told Kubernetes to make sure three instances of our pod are always running. Notice that we didn't instruct Kubernetes what action to take. We didn't tell it to add two more pods. We only set the new desired number of instances and let Kubernetes determine what actions it needs to take to achieve the requested state.

This is one of the most fundamental Kubernetes principles. Instead of telling Kubernetes exactly what actions it should perform, we're only declaratively changing the desired state of the system and letting Kubernetes examine the current actual state and reconcile it with the desired state.
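The declarative idea boils down to a reconciliation step: compare the desired state with the observed state and derive the actions. The function below is a toy version of what the ReplicaSet controller does for replica counts (the real controller works from watches, labels, and owner references); reconcile and the generated pod names are hypothetical.

```javascript
// Compute the actions needed to move from the current pods to the desired count.
function reconcile(desiredReplicas, currentPods, newName) {
  const diff = desiredReplicas - currentPods.length;
  if (diff > 0) {
    return Array.from({ length: diff }, () => ({ action: 'create', pod: newName() }));
  }
  // Too many (or exactly enough) pods: delete any surplus, here simply the last ones.
  return currentPods.slice(desiredReplicas).map((pod) => ({ action: 'delete', pod }));
}

let counter = 0;
const newName = () => `bogo-pod-${++counter}`; // hypothetical naming scheme

// We only said "3"; the controller works out that two pods must be created.
console.log(JSON.stringify(reconcile(3, ['bogo-764645c96c-5k96h'], newName)));
// [{"action":"create","pod":"bogo-pod-1"},{"action":"create","pod":"bogo-pod-2"}]
```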

Now that we have multiple instances of our app running, let's see what happens if we hit the service URL again. Will we always hit the same app instance or not?

We got the following responses across repeated runs of the minikube service bogo-service command:

You've hit bogo-764645c96c-5k96h
You've hit bogo-764645c96c-r6r9q
You've hit bogo-764645c96c-5k96h
You've hit bogo-764645c96c-5k96h
You've hit bogo-764645c96c-r6r9q
You've hit bogo-764645c96c-r6r9q
You've hit bogo-764645c96c-m26f2
You've hit bogo-764645c96c-m26f2

Requests are hitting different pods randomly. This is what services in Kubernetes do when more than one pod instance backs them. They act as a load balancer standing in front of multiple pods. When there's only one pod, services provide a static address for the single pod. Whether a service is backed by a single pod or a group of pods, those pods come and go as they're moved around the cluster, which means their IP addresses change, but the service is always there at the same address. This makes it easy for clients to connect to the pods, regardless of how many exist and how often they change location.
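Instead of reloading the page, the distribution can be measured with a short script. The sketch below, assuming you pass the service URL printed by minikube service (the 127.0.0.1 tunnel port differs per run), sends sequential requests, extracts the pod name from each "You've hit" response, and tallies hits per pod. Each request uses a fresh connection (agent: false), because the service balances connections rather than individual requests; with HTTP keep-alive, consecutive requests could all land on the same pod. With random backend selection, the counts will vary between runs. The script and function names are hypothetical.

```javascript
const http = require('http');

// Pull the pod name out of a "You've hit <hostname>" body.
function podFrom(body) {
  const m = /You've hit (\S+)/.exec(body);
  return m ? m[1] : 'unknown';
}

// Count how many responses came from each pod.
function tally(bodies) {
  const counts = {};
  for (const body of bodies) {
    const pod = podFrom(body);
    counts[pod] = (counts[pod] || 0) + 1;
  }
  return counts;
}

// One GET on a fresh (non-keep-alive) connection.
function hit(url) {
  return new Promise((resolve, reject) => {
    http.get(url, { agent: false }, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => (body += chunk));
      res.on('end', () => resolve(body));
    }).on('error', reject);
  });
}

// Send n requests one after another and tally the responding pods.
async function sample(url, n) {
  const bodies = [];
  for (let i = 0; i < n; i++) bodies.push(await hit(url));
  return tally(bodies);
}

// Usage: node tally.js http://127.0.0.1:<tunnel-port> 20
const [url, n = '10'] = process.argv.slice(2);
if (url) sample(url, Number(n)).then(console.log, (e) => console.error(e.message));
```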
