Ansible - Playbook for LAMP HAProxy

Ansible 2.0

In this tutorial, we're going to use one of Ansible's most complete example playbooks as a template: lamp_haproxy, from the Ansible-Playbooks-Samples.git repository.

The playbook uses a lot of Ansible features: roles, templates, and group variables, and it also comes with an orchestration playbook that can do zero-downtime rolling upgrades of the web application stack.

The playbooks deploy Apache, PHP, MySQL, Nagios, and HAProxy to a CentOS-based set of servers.

This tutorial is based on the "Continuous Delivery and Rolling Upgrades" guide in the Ansible documentation.

The following is our site-wide deployment playbook (site.yml).

    ---
    # This playbook deploys the whole application stack in this site.

    # Apply common configuration to all hosts
    - hosts: all
      roles:
        - common

    # Configure and deploy database servers.
    - hosts: dbservers
      roles:
        - db

    # Configure and deploy the web servers. Note that we include two roles
    # here, the 'base-apache' role which simply sets up Apache, and 'web'
    # which includes our example web application.
    - hosts: webservers
      roles:
        - base-apache
        - web

    # Configure and deploy the load balancer(s).
    - hosts: lbservers
      roles:
        - haproxy

    # Configure and deploy the Nagios monitoring node(s).
    - hosts: monitoring
      roles:
        - base-apache
        - nagios

We can use the playbook to initially deploy the site, as well as push updates to all of the servers.

In this playbook we have 5 plays.

The first one targets all hosts and applies the common role to all of the hosts. This is for site-wide things like yum repository configuration, firewall configuration, and anything else that needs to apply to all of the servers.

The next four plays run against specific host groups and apply specific roles to those servers.

Along with the roles for Nagios monitoring, the database, and the web application, we've implemented a base-apache role that installs and configures a basic Apache setup. This is used by both the sample web application and the Nagios hosts.

Roles in Ansible build on the idea of include files and combine them to form clean, reusable abstractions - they allow us to focus more on the big picture and only dive down into the details when needed.

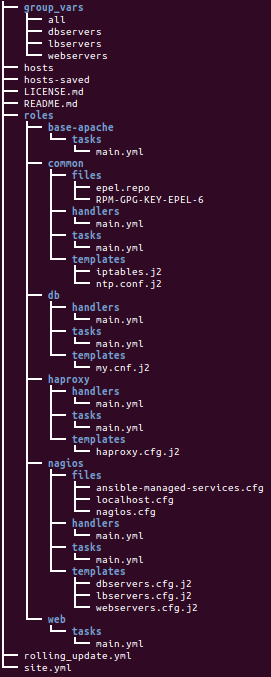

Roles are a way to organize content into reusable components by building a directory structure:

- files
- handlers
- meta
- templates
- tasks
- vars

What is the best way to organize our playbooks?

The short answer is to use roles!

Roles are ways of automatically loading certain vars_files, tasks, and handlers based on a known file structure.

Grouping content by roles also allows easy sharing of roles with other users.

Our example has six roles: common, base-apache, db, haproxy, nagios, and web.

How we organize our roles is up to us and our application, but most sites will have one or more common roles that are applied to all systems, and then a series of application-specific roles that install and configure particular parts of the site.

Roles can have variables and dependencies, and we can pass in parameters to roles to modify their behavior.
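As a minimal sketch of two of those ideas, assuming hypothetical values that are not taken from lamp_haproxy: the first fragment passes httpd_port to base-apache as a role parameter, and the second is a hypothetical roles/web/meta/main.yml that declares base-apache as a dependency of web.

```yaml
---
# site.yml fragment (sketch): pass a parameter to a role.
# The value 8080 is hypothetical; lamp_haproxy sets httpd_port: 80
# in group_vars/all, and a role parameter takes precedence over it.
- hosts: webservers
  roles:
    - { role: base-apache, httpd_port: 8080 }
---
# roles/web/meta/main.yml (hypothetical): declare a dependency so that
# base-apache is applied automatically before the web role runs.
dependencies:
  - { role: base-apache }
```

The dependency form keeps site.yml shorter, at the cost of making the ordering less visible when reading the play.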

Group variables are variables that are applied to groups of servers.

Here is lamp_haproxy's group_vars/all file. These variables are applied to all of the machines in our inventory:

    ---
    httpd_port: 80
    ntpserver: 192.168.1.2

We are just setting two variables, one for the port for the web server, and one for the NTP server that our machines should use for time synchronization.

Here's another group variables file (group_vars/dbservers) which applies to the hosts in the dbservers group:

    ---
    mysqlservice: mysqld
    mysql_port: 3306
    dbuser: root
    dbname: foodb
    upassword: usersecret

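Note that lamp_haproxy also has group variable files for the webservers and lbservers groups (group_vars/webservers and group_vars/lbservers); like the files above, they live in the group_vars directory next to site.yml, not inside the playbook itself.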

These group variables are used in a variety of places.

We can use them in playbooks, for example, in roles/db/tasks/main.yml:

    - name: Create Application Database
      mysql_db: name={{ dbname }} state=present

    - name: Create Application DB User
      mysql_user: name={{ dbuser }} password={{ upassword }}
                  priv=*.*:ALL host='%' state=present
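The same two tasks can also be written with YAML dictionaries instead of key=value strings, which makes long argument lists easier to read and avoids quoting surprises. A sketch, with the module parameters unchanged from the original:

```yaml
# Same tasks as above in dictionary form; behavior is unchanged.
- name: Create Application Database
  mysql_db:
    name: "{{ dbname }}"
    state: present

- name: Create Application DB User
  mysql_user:
    name: "{{ dbuser }}"
    password: "{{ upassword }}"
    priv: "*.*:ALL"     # all privileges on all databases
    host: "%"           # allow connections from any host
    state: present
```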

We can also use these variables in templates, like this, in roles/common/templates/ntp.conf.j2:

    driftfile /var/lib/ntp/drift

    restrict 127.0.0.1
    restrict -6 ::1

    server {{ ntpserver }}

    includefile /etc/ntp/crypto/pw

    keys /etc/ntp/keys
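The template is rendered onto each host by a template task in the common role. The run log later in this tutorial shows a task named "Configure ntp file" and a handler named "restart ntp"; a sketch of what they could look like follows, assuming the CentOS destination /etc/ntp.conf and the service name ntpd (the actual role may differ).

```yaml
---
# roles/common/tasks/main.yml (sketch)
- name: Configure ntp file
  template: src=ntp.conf.j2 dest=/etc/ntp.conf
  notify: restart ntp
---
# roles/common/handlers/main.yml (sketch)
# Runs only if a notifying task reported "changed"; Ansible runs each
# notified handler once, after the current section of tasks finishes.
- name: restart ntp
  service: name=ntpd state=restarted
```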

In templates, we can also use for loops and if statements to handle more complex situations, like this, in roles/common/templates/iptables.j2:

    {% if inventory_hostname in groups['dbservers'] %}
    -A INPUT -p tcp --dport 3306 -j ACCEPT
    {% endif %}
    {% for host in groups['monitoring'] %}
    -A INPUT -p tcp -s {{ hostvars[host].ansible_default_ipv4.address }} --dport 5666 -j ACCEPT
    {% endfor %}

The first block is a conditional rather than a loop: it checks whether the inventory name of the machine we're currently operating on (inventory_hostname) is in the inventory group dbservers. If so, that machine gets an iptables ACCEPT line for port 3306 (MySQL).

The second block loops over all of the hosts in the monitoring group and adds an ACCEPT line for each monitoring host's default IPv4 address to the current machine's iptables configuration. This lets the Nagios servers reach the NRPE agent on port 5666 so they can monitor this machine.
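The run log later in this tutorial shows a common-role task named "insert iptables template". A sketch of such a task and a matching handler, assuming the CentOS path /etc/sysconfig/iptables and a hypothetical handler name "restart iptables":

```yaml
---
# roles/common/tasks/main.yml (sketch): render iptables.j2 on every host.
# The template reads hostvars for the monitoring hosts, so facts for those
# hosts must already have been gathered earlier in the same run.
- name: insert iptables template
  template: src=iptables.j2 dest=/etc/sysconfig/iptables
  notify: restart iptables
---
# roles/common/handlers/main.yml (sketch; handler name is hypothetical)
- name: restart iptables
  service: name=iptables state=restarted
```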

Now we have a fully-deployed site with web servers, a load balancer, and monitoring.

How do we update it?

This is where Ansible's orchestration features come into play.

Ansible has the capability to do operations on multi-tier applications in a coordinated way, making it easy to orchestrate a sophisticated zero-downtime rolling upgrade of our web application.

This is implemented in a separate playbook, called rolling_upgrade.yml:

    ---
    # This playbook does a rolling update for all webservers serially (one at a time).
    # Change the value of serial: to adjust the number of servers to be updated.
    #
    # The three roles that apply to the webserver hosts will be applied: common,
    # base-apache, and web. So any changes to configuration, package updates, etc,
    # will be applied as part of the rolling update process.
    #

    # gather facts from monitoring nodes for iptables rules
    - hosts: monitoring
      tasks: []

    - hosts: webservers
      remote_user: root
      serial: 1

      # These are the tasks to run before applying updates:
      pre_tasks:
        - name: disable nagios alerts for this host webserver service
          nagios: 'action=disable_alerts host={{ inventory_hostname }} services=webserver'
          delegate_to: "{{ item }}"
          with_items: "{{ groups['monitoring'] }}"

        - name: disable the server in haproxy
          haproxy: 'state=disabled backend=myapplb host={{ inventory_hostname }} socket=/var/lib/haproxy/stats'
          delegate_to: "{{ item }}"
          with_items: "{{ groups['lbservers'] }}"

      roles:
        - common
        - base-apache
        - web

      # These tasks run after the roles:
      post_tasks:
        - name: wait for webserver to come up
          wait_for: 'host={{ inventory_hostname }} port=80 state=started timeout=80'

        - name: enable the server in haproxy
          haproxy: 'state=enabled backend=myapplb host={{ inventory_hostname }} socket=/var/lib/haproxy/stats'
          delegate_to: "{{ item }}"
          with_items: "{{ groups['lbservers'] }}"

        - name: re-enable nagios alerts
          nagios: 'action=enable_alerts host={{ inventory_hostname }} services=webserver'
          delegate_to: "{{ item }}"
          with_items: "{{ groups['monitoring'] }}"

Looking at the playbook, we can see it is made up of two plays.

The first play is very simple and looks like this:

    - hosts: monitoring
      tasks: []

What's going on here, and why are there no tasks?

We know that Ansible gathers "facts" from the servers before operating upon them. These facts are useful for all sorts of things: networking information, OS/distribution versions, etc.

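In our case, we need to know something about all of the monitoring servers in our environment before we perform the update, so this simple play forces a fact-gathering step on our monitoring servers. Those facts are what the iptables template reads through hostvars[host].ansible_default_ipv4.address when the common role is re-applied to the web servers; without them, that variable would be undefined.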
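Fact gathering is on by default, which is why an empty task list is enough. A sketch that states the intent explicitly (gather_facts: yes is already the default, so the behavior is the same):

```yaml
# Gather facts from the monitoring hosts so that
# hostvars[host].ansible_default_ipv4 is available to later plays.
- hosts: monitoring
  gather_facts: yes
  tasks: []
```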

The next part is the update play, and it looks like this:

    - hosts: webservers
      remote_user: root
      serial: 1

This is just a normal play definition, operating on the webservers group. The serial keyword tells Ansible how many servers to operate on at once. If it's not specified, Ansible will parallelize these operations up to the default "forks" limit specified in the configuration file.

But for a zero-downtime rolling upgrade, we may not want to operate on that many hosts at once. If we had just a handful of web servers, we might set serial to 1, for one host at a time. If we had 100, we could set serial to 10, for ten at a time.
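serial also accepts a percentage of the hosts in the play, and max_fail_percentage can stop the rollout early when a batch goes wrong. A sketch of the update play's header, with both values chosen only as examples:

```yaml
- hosts: webservers
  remote_user: root
  # Update 10% of the webservers per batch (example value).
  serial: "10%"
  # Abort the rest of the rolling update as soon as the failure rate in a
  # batch exceeds 0%, i.e. on any failure, so a bad release stops spreading.
  max_fail_percentage: 0
```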

Next come the update play's pre_tasks:

    pre_tasks:
      - name: disable nagios alerts for this host webserver service
        nagios: action=disable_alerts host={{ inventory_hostname }} services=webserver
        delegate_to: "{{ item }}"
        with_items: "{{ groups['monitoring'] }}"

      - name: disable the server in haproxy
        shell: echo "disable server myapplb/{{ inventory_hostname }}" | socat stdio /var/lib/haproxy/stats
        delegate_to: "{{ item }}"
        with_items: "{{ groups['lbservers'] }}"

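The pre_tasks keyword just lets us list tasks to run before the roles are called. (This excerpt talks to the HAProxy stats socket directly with socat; the full rolling_upgrade.yml above does the same thing with the haproxy module.)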

Let's look at the names of these tasks. We can see that we are disabling Nagios alerts and then removing the webserver that we are currently updating from the HAProxy load balancing pool.

The delegate_to and with_items arguments, used together, cause Ansible to loop over each monitoring server and load balancer, and perform that operation (delegate that operation) on the monitoring or load balancing server, "on behalf" of the webserver.

In programming terms, the outer loop is the list of web servers, and the inner loop is the list of monitoring servers (or load balancers, for the HAProxy task).
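To see this nesting in action, a throwaway debug task (hypothetical, not part of lamp_haproxy) could be added to the update play's pre_tasks. With serial: 1 it prints one line per monitoring server for each web server in turn:

```yaml
pre_tasks:
  # Outer loop: the play's hosts (one webserver per batch with serial: 1).
  # Inner loop: with_items over the monitoring group.
  - name: show which host acts on behalf of which webserver
    debug:
      msg: "{{ item }} acts on behalf of {{ inventory_hostname }}"
    delegate_to: "{{ item }}"
    with_items: "{{ groups['monitoring'] }}"
```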

The next step simply re-applies the proper roles to the web servers.

This will cause any configuration management declarations in the common, base-apache, and web roles to be applied to the web servers, including an update of the web application code itself:

    roles:
      - common
      - base-apache
      - web

In the post_tasks section, we reverse the changes to the Nagios configuration and put the web server back in the load balancing pool:

    post_tasks:
      - name: Enable the server in haproxy
        shell: echo "enable server myapplb/{{ inventory_hostname }}" | socat stdio /var/lib/haproxy/stats
        delegate_to: "{{ item }}"
        with_items: "{{ groups['lbservers'] }}"

      - name: re-enable nagios alerts
        nagios: action=enable_alerts host={{ inventory_hostname }} services=webserver
        delegate_to: "{{ item }}"
        with_items: "{{ groups['monitoring'] }}"
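This excerpt leaves out the wait_for step that the full playbook runs before re-enabling the server. A variant of that step which reuses the httpd_port group variable from group_vars/all instead of hard-coding port 80 might look like this (a sketch; the original uses port=80):

```yaml
post_tasks:
  # Block until Apache accepts connections on the configured port. If it
  # does not within 80 seconds, the task fails and the host is not put
  # back into the HAProxy pool by the tasks that follow.
  - name: wait for webserver to come up
    wait_for: host={{ inventory_hostname }} port={{ httpd_port }} state=started timeout=80
```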

For integration with Continuous Integration systems, we can easily trigger playbook runs using the ansible-playbook command line tool.

For testing, we'll use a single AWS instance, so every group in the hosts file points to the same address:

    [webservers]
    54.153.115.201

    [dbservers]
    54.153.115.201

    [lbservers]
    54.153.115.201

    [monitoring]
    54.153.115.201

Connection test:

    $ ansible -i hosts all -m ping -u ec2-user
    54.153.115.201 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }

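To run the playbook as ec2-user, we may want to change remote_user in the playbooks from root to ec2-user. Here we pass -u ec2-user on the command line, plus -s so that tasks run with sudo: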

    $ ansible-playbook -i hosts -s -u ec2-user site.yml

    PLAY [all] *********************************************************************

    TASK [setup] *******************************************************************
    ok: [54.153.115.201]

    TASK [common : Install python bindings for SE Linux] ***************************
    ok: [54.153.115.201] => (item=[u'libselinux-python', u'libsemanage-python'])

    TASK [common : Create the repository for EPEL] *********************************
    changed: [54.153.115.201]

    TASK [common : Create the GPG key for EPEL] ************************************
    changed: [54.153.115.201]

    TASK [common : install some useful nagios plugins] *****************************
    changed: [54.153.115.201] => (item=[u'nagios-nrpe', u'nagios-plugins-swap', u'nagios-plugins-users', u'nagios-plugins-procs', u'nagios-plugins-load', u'nagios-plugins-disk'])

    TASK [common : Install ntp] ****************************************************
    changed: [54.153.115.201]

    TASK [common : Configure ntp file] *********************************************
    changed: [54.153.115.201]

    TASK [common : Start the ntp service] ******************************************
    changed: [54.153.115.201]

    TASK [common : insert iptables template] ***************************************
    changed: [54.153.115.201]

    TASK [common : test to see if selinux is running] ******************************
    ok: [54.153.115.201]

    RUNNING HANDLER [common : restart ntp] *****************************************
    changed: [54.153.115.201]

    TASK [setup] *******************************************************************
    ok: [54.153.115.201]

    TASK [db : Install Mysql package] **********************************************
    ok: [54.153.115.201] => (item=[u'mysql-server', u'MySQL-python'])

    NO MORE HOSTS LEFT *************************************************************
        to retry, use: --limit @site.retry
    ...
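Instead of passing -u and -s on every run, the same choices can be recorded in the play itself. A sketch of the update play's header for this single-instance test, using the become keyword (available since Ansible 1.9), which supersedes sudo:

```yaml
- hosts: webservers
  # Log in as the default EC2 user rather than root...
  remote_user: ec2-user
  # ...and escalate privileges (sudo is the default become_method).
  become: yes
  serial: 1
```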
