Docker & Kubernetes : Nginx Ingress Controller on Minikube

On a Mac, we cannot use the NodePort service directly because of the way Docker networking is implemented. Instead, we must use the Minikube tunnel.

To use the Minikube tunnel, simply run the following command:

minikube service <service-name>

The NGINX Ingress controller is built around the Kubernetes Ingress resource and uses a ConfigMap to store the NGINX configuration.

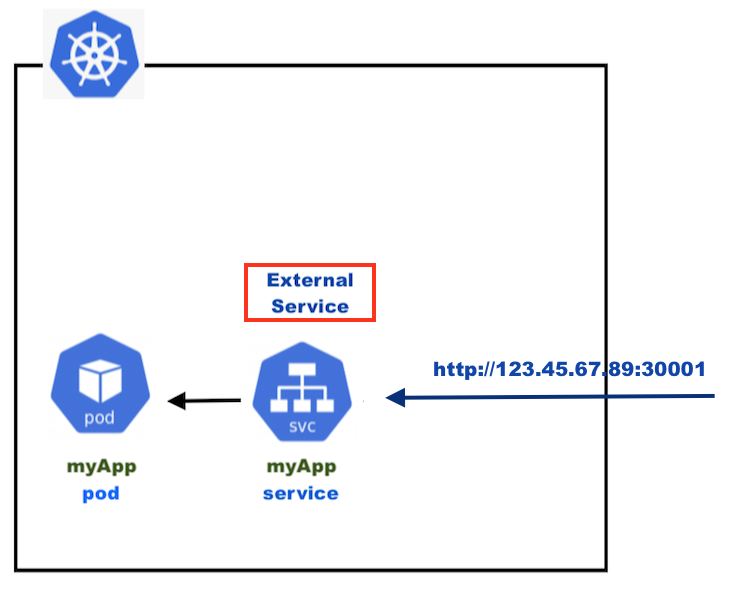

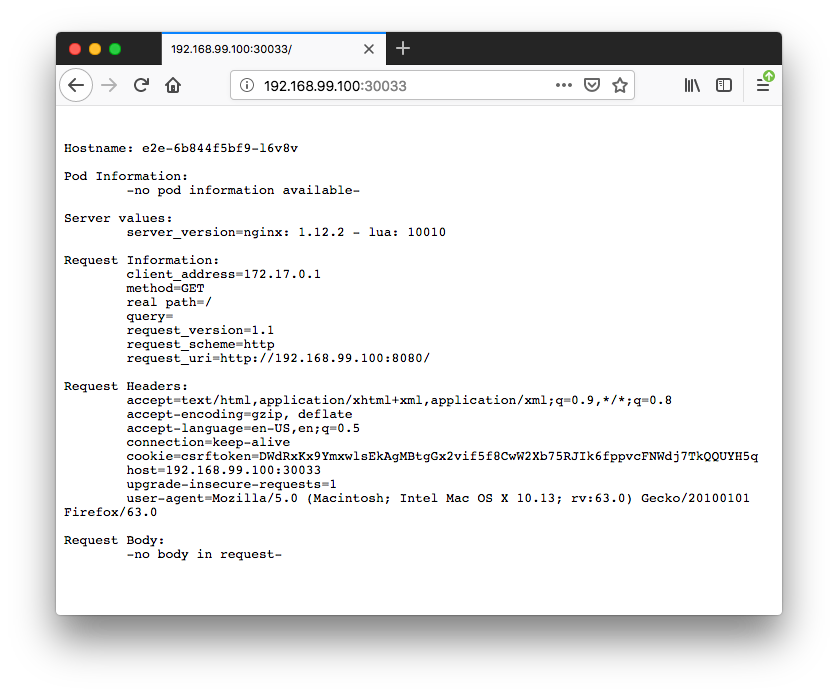

The following diagram shows how we can access our app through a Kubernetes Service:

As we can see, we use http://node-ip:port but what we want is to access our pod via https://myApp. This is where the Ingress comes into the picture:

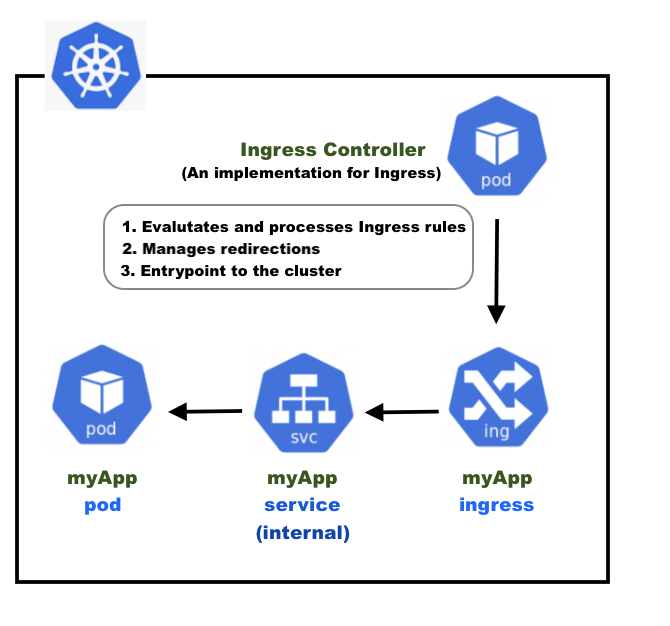

The Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource. An Ingress may be configured to give Services externally-reachable URLs, load balance traffic, terminate SSL / TLS, and offer name-based virtual hosting.
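As a rough sketch of the name-based virtual hosting and TLS termination mentioned above, the snippet below writes an Ingress manifest that would route https://myapp.example.test to a Service. The host myapp.example.test, the Service name myapp-svc, the Secret name myapp-tls, and the file name are hypothetical placeholders rather than part of this post's setup. The manifest also assumes the networking.k8s.io/v1 API available on newer clusters (Kubernetes 1.19 and later), not the extensions/v1beta1 API used later in this post.

```shell
# Write a hypothetical host-based Ingress with TLS to a file.
# myapp.example.test, myapp-svc, and myapp-tls are placeholder names.
cat > myapp-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-ingress
spec:
  tls:
  - hosts:
    - myapp.example.test
    secretName: myapp-tls        # TLS Secret holding the certificate and key
  rules:
  - host: myapp.example.test     # name-based virtual hosting
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-svc
            port:
              number: 80
EOF

# With a cluster available, it could then be applied with:
#   kubectl apply -f myapp-ingress.yaml
```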

For more details on Ingress, please check out Kubernetes Documentation / Concepts / Services, Load Balancing, and Networking / Ingress.

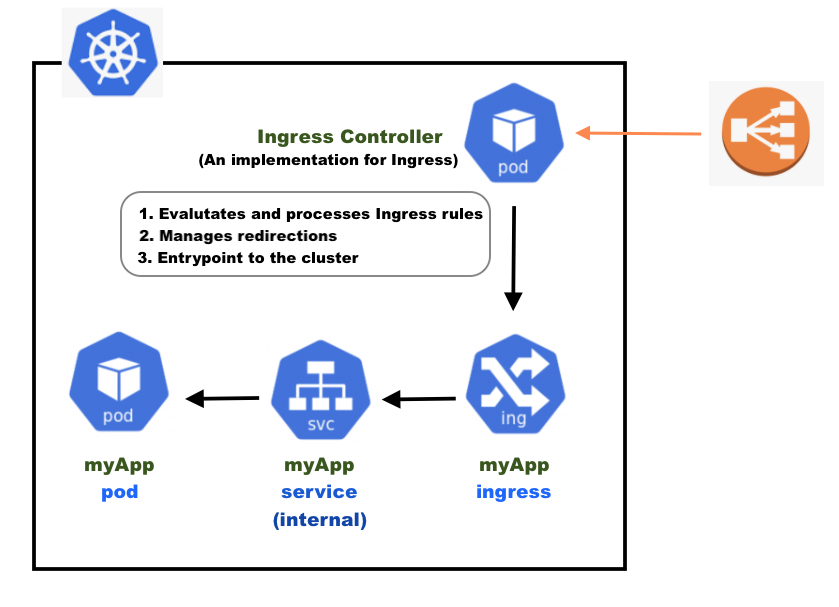

The Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure our edge router or additional frontends to help handle the traffic.

If we use a Cloud provider's load balancer, we don't have to implement it by ourselves.

We'll use Minikube which runs a single-node (or multi-node with minikube 1.10.1 or higher) Kubernetes cluster inside a VM on our laptop:

    $ minikube version
    minikube version: v0.30.0

    $ minikube start
    Starting local Kubernetes v1.10.0 cluster...
    Starting VM...
    Getting VM IP address...
    Moving files into cluster...
    Setting up certs...
    Connecting to cluster...
    Setting up kubeconfig...
    Starting cluster components...
    Kubectl is now configured to use the cluster.
    Loading cached images from config file.

    $ minikube status
    minikube: Running
    cluster: Running
    kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100

    $ kubectl config current-context
    minikube

    $ kubectl get pods -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    coredns-c4cffd6dc-xjr2v                 1/1     Running   2          25m
    etcd-minikube                           1/1     Running   0          7m
    kube-addon-manager-minikube             1/1     Running   2          25m
    kube-apiserver-minikube                 1/1     Running   0          7m
    kube-controller-manager-minikube        1/1     Running   0          7m
    kube-dns-86f4d74b45-4d72t               3/3     Running   7          26m
    kube-proxy-6vq42                        1/1     Running   0          6m
    kube-scheduler-minikube                 1/1     Running   2          26m
    kubernetes-dashboard-6f4cfc5d87-7f8vl   1/1     Running   5          25m
    storage-provisioner                     1/1     Running   6          25m

The Ingress controller is created when we run "minikube addons enable ingress", which starts an "nginx-ingress-controller" pod in the "kube-system" namespace.

    $ minikube addons enable ingress
    ingress was successfully enabled

After enabling the ingress addon, we can see that the nginx-ingress-controller is in the list of pods:

    $ kubectl get pods -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    coredns-c4cffd6dc-xjr2v                     1/1     Running   2          30m
    default-http-backend-544569b6d7-fqczz       1/1     Running   0          2m
    etcd-minikube                               1/1     Running   0          11m
    kube-addon-manager-minikube                 1/1     Running   2          29m
    kube-apiserver-minikube                     1/1     Running   0          11m
    kube-controller-manager-minikube            1/1     Running   0          11m
    kube-dns-86f4d74b45-4d72t                   3/3     Running   7          30m
    kube-proxy-6vq42                            1/1     Running   0          10m
    kube-scheduler-minikube                     1/1     Running   2          30m
    kubernetes-dashboard-6f4cfc5d87-7f8vl       1/1     Running   5          30m
    nginx-ingress-controller-8566746984-9bxbh   1/1     Running   0          2m
    storage-provisioner                         1/1     Running   6          30m
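The pod listing above comes from Minikube v0.30.0. On more recent Minikube releases the addon typically deploys the controller into its own ingress-nginx namespace instead of kube-system, so a quick check that simply tries both namespaces may be handy. The sketch below is one way to do that; the helper name find_ingress_pods is made up for illustration.

```shell
# Hypothetical helper: list ingress-related pods in the two namespaces
# where the Minikube addon may place the controller.
find_ingress_pods() {
  for ns in kube-system ingress-nginx; do
    echo "--- namespace: ${ns}"
    # "|| true" keeps the loop going when a namespace has no match
    kubectl get pods -n "${ns}" 2>/dev/null | grep -i ingress || true
  done
}

# Usage (requires a running cluster):
#   find_ingress_pods
```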

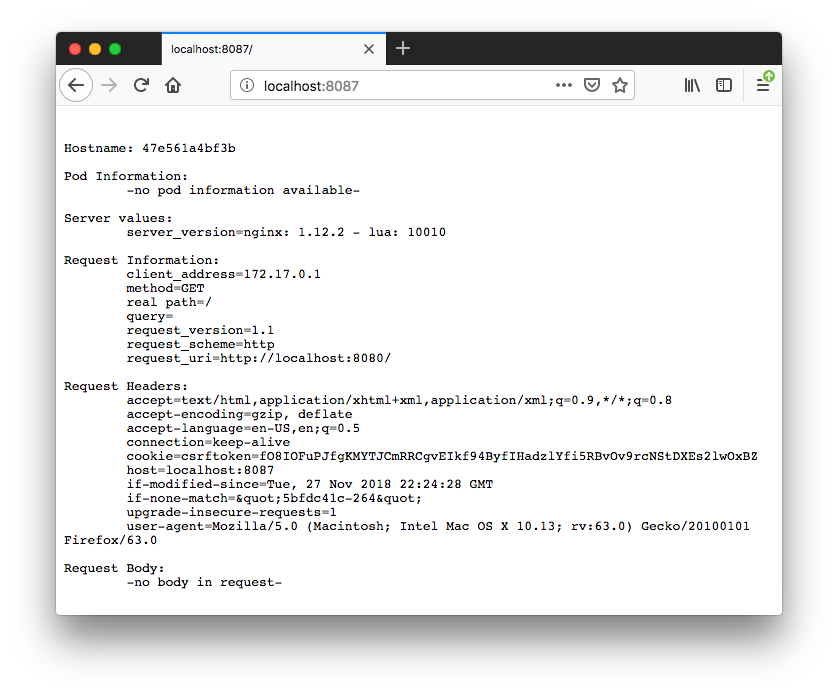

We'll deploy the gcr.io/kubernetes-e2e-test-images/echoserver image, which we can first try locally with Docker:

    $ docker run -p 8087:8080 gcr.io/kubernetes-e2e-test-images/echoserver:2.2
    Generating self-signed cert
    Generating a 2048 bit RSA private key
    ............................+++
    .....................................................................................................................+++
    writing new private key to '/certs/privateKey.key'
    -----
    Starting nginx
    172.17.0.1 - - [04/Dec/2018:19:49:30 +0000] "GET / HTTP/1.1" 200 857 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:63.0) Gecko/20100101 Firefox/63.0"
    172.17.0.1 - - [04/Dec/2018:19:49:30 +0000] "GET /favicon.ico HTTP/1.1" 200 755 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:63.0) Gecko/20100101 Firefox/63.0"

Here is the e2e-deploy.yaml for deployment:

    # extensions/v1beta1 works on the v1.10 cluster used here;
    # it was removed for Deployments in Kubernetes 1.16 (use apps/v1 there).
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: e2e
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: e2e
      template:
        metadata:
          labels:
            app: e2e
        spec:
          containers:
          - name: http-echo
            image: gcr.io/kubernetes-e2e-test-images/echoserver:2.2
            ports:
            - containerPort: 8080

    $ kubectl apply -f e2e-deploy.yaml
    deployment.extensions/e2e created

    $ kubectl get deployments
    NAME   DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    e2e    2         2         2            0           11s

    $ kubectl get pods
    NAME                   READY   STATUS    RESTARTS   AGE
    e2e-6b844f5bf9-l6v8v   1/1     Running   0          3m
    e2e-6b844f5bf9-w6fhk   1/1     Running   0          3m

At this point, although the e2e app containers are running, we cannot access the app yet, so we need to deploy a Service. As the Deployment YAML shows (containerPort: 8080), the application listens on port 8080. So, let's expose our pods using a Service (e2e-service.yaml).

In the Service spec below, we use type: NodePort, although it is not how we ultimately want to expose the app in this post.

Note that Services can be exposed in different ways by specifying a type in the ServiceSpec, and the default is ClusterIP which exposes the Service on an internal IP in the cluster. However, this type makes the Service only reachable from within the cluster.

    apiVersion: v1
    kind: Service
    metadata:
      name: e2e-svc
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 8080
        nodePort: 30033
        protocol: TCP
        name: http
      selector:
        app: e2e

Here we set three ports. Let's see how they are different:

- port: The port on which the Service is exposed inside the cluster. When another workload running in the same Kubernetes cluster wants to call this Service, it uses the value given for "port" in the Service spec.

- targetPort: The port on the Pod where the application is actually listening.

- nodePort: The port opened on each node through which external clients can reach the Service; kube-proxy forwards that traffic to the Service.

So, with our YAML, port 80 is where other workloads in the cluster reach the Service, targetPort 8080 is where the application actually listens in the pods, and nodePort 30033 is where the Service can be reached from outside the cluster via kube-proxy.
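To make the three ports concrete, here is a small sketch that prints the address a client would use at each hop for the Service above. The node IP 192.168.99.100 is the Minikube VM address from the earlier output; the variable names and the <pod-ip> placeholder are only illustrative.

```shell
NODE_IP=192.168.99.100   # Minikube VM address reported by "minikube status"
NODE_PORT=30033          # nodePort: opened on every node
SERVICE_PORT=80          # port: the Service's own port inside the cluster
TARGET_PORT=8080         # targetPort: containerPort of the echoserver pods

EXTERNAL_URL="http://${NODE_IP}:${NODE_PORT}"   # from the laptop
INTERNAL_URL="http://e2e-svc:${SERVICE_PORT}"   # from another pod in the same namespace
POD_URL="http://<pod-ip>:${TARGET_PORT}"        # where the traffic finally lands

echo "external -> ${EXTERNAL_URL}"
echo "internal -> ${INTERNAL_URL}"
echo "pod      -> ${POD_URL}"
```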

Let's get our url:

    $ kubectl get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    e2e-svc      NodePort    10.98.228.133   <none>        8088:30033/TCP   9s
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP          5h

    $ minikube service e2e-svc --url
    http://192.168.99.100:30033

Let's modify our service (e2e-service.yaml) to make it work with Ingress:

    apiVersion: v1
    kind: Service
    metadata:
      name: e2e-svc
    spec:
      ports:
      - port: 80
        targetPort: 8080
        protocol: TCP
        name: http
      selector:
        app: e2e

Note that we dropped type: NodePort, so the Service falls back to the default ClusterIP type.

Here are our Ingress rules (e2e-ingress.yaml):

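    # extensions/v1beta1 works on the v1.10 cluster used here;
    # it was removed for Ingress in Kubernetes 1.22 (use networking.k8s.io/v1 there).
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: e2e-ingress
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "false"
    spec:
      rules:
      - http:
          paths:
          - path: /e2e-test
            backend:
              serviceName: e2e-svc
              servicePort: 80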

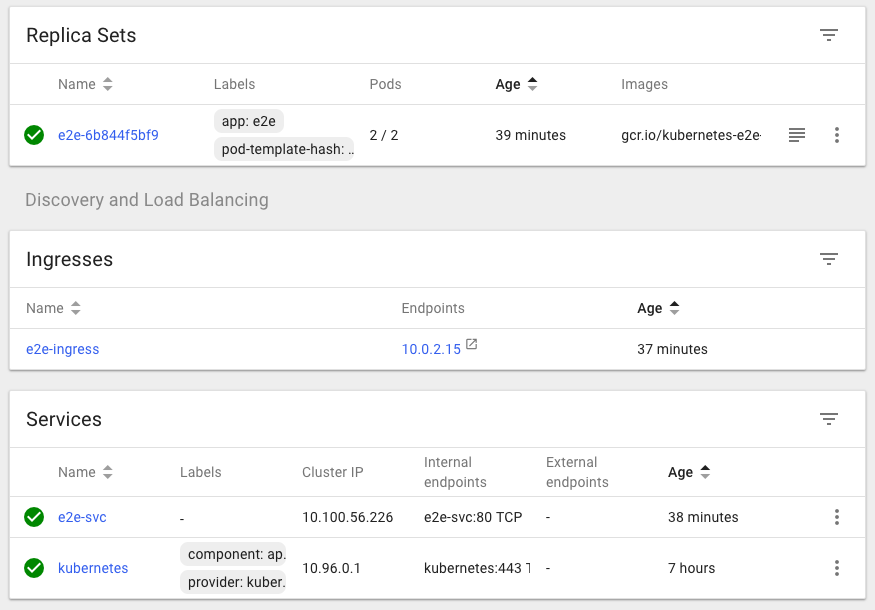

    $ kubectl apply -f e2e-ingress.yaml
    ingress.extensions/e2e-ingress created

    $ kubectl get ing
    NAME          HOSTS   ADDRESS   PORTS   AGE
    e2e-ingress   *                 80      19s
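The extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. For readers on a newer cluster, the following is a minimal sketch of what the same rule might look like under networking.k8s.io/v1. The file name e2e-ingress-v1.yaml is an assumption, the manifest has not been tested against the cluster in this post, and depending on how the controller was installed, spec.ingressClassName: nginx may also be required.

```shell
# Write a networking.k8s.io/v1 version of the e2e Ingress to a new file.
cat > e2e-ingress-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: e2e-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - http:
      paths:
      - path: /e2e-test
        pathType: Prefix          # required in networking.k8s.io/v1
        backend:
          service:                # replaces serviceName / servicePort
            name: e2e-svc
            port:
              number: 80
EOF

# With a cluster available, it could then be applied with:
#   kubectl apply -f e2e-ingress-v1.yaml
```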

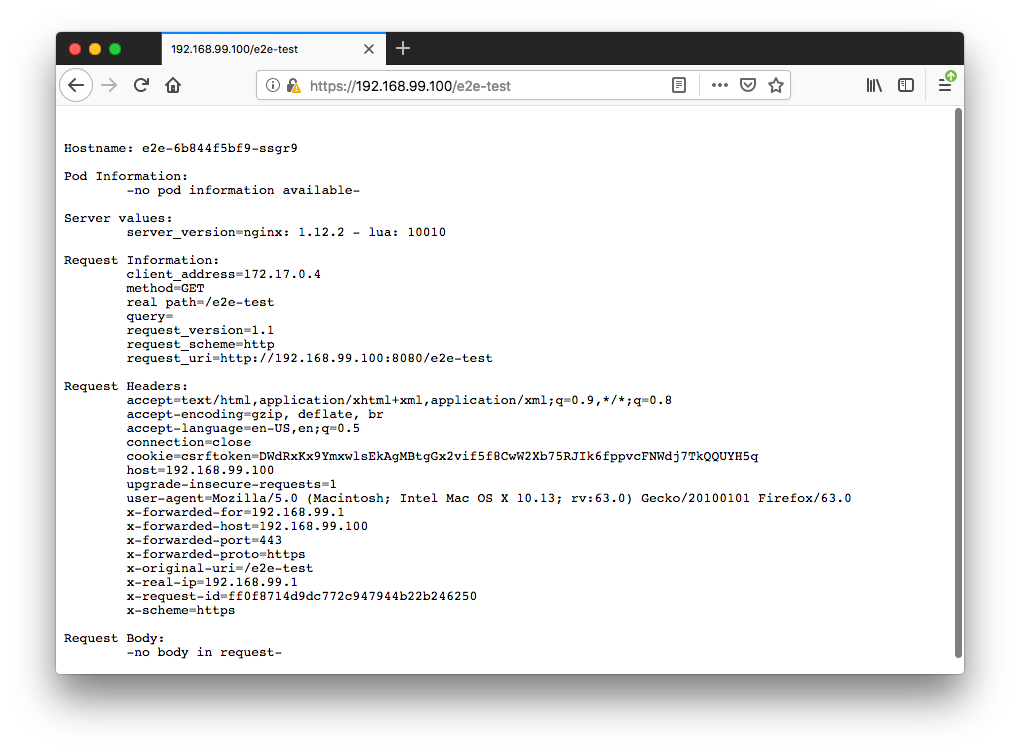

The setup allows us to access the Service e2e-svc via the /e2e-test path. Since we didn't specify a host, the rule applies to requests for any host name, so we can reach the app through the Minikube VM's IP address (192.168.99.100 here), while the Service itself stays at the default ClusterIP type.

The nginx.ingress.kubernetes.io/ssl-redirect annotation is used because we are not specifying a host. When no host is specified, the request hits the default server. The default server is configured with a self-signed certificate, so HTTP traffic is redirected to HTTPS (nginx-ingress-controller always redirects to HTTPS, regardless of Ingress annotations, if no host is specified).

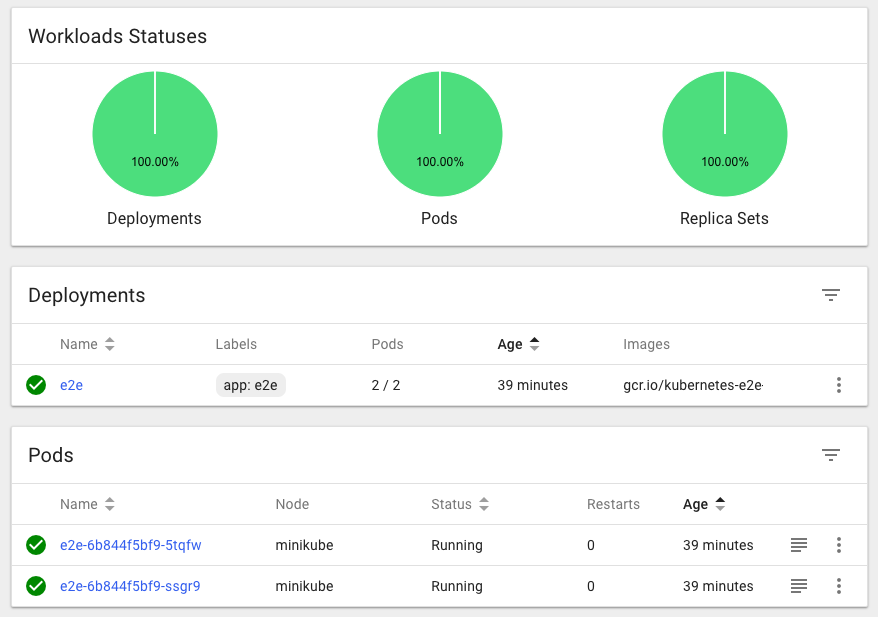

Now that the Ingress rules are configured, we can test our e2e app by sending some requests:

We may get a warning from a browser saying something like "not secure ...". If we add an exception, we get the response from the browser.

Note: on my Mac, I could not get the same response from curl:

    $ curl --insecure 192.168.99.100/e2e-test
    <html>
    <head><title>308 Permanent Redirect</title></head>
    <body bgcolor="white">
    <center><h1>308 Permanent Redirect</h1></center>
    <hr><center>nginx/1.15.3</center>
    </body>
    </html>
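One likely reason for the difference is that curl does not follow redirects unless asked to, whereas a browser does. A sketch that follows the 308 redirect to HTTPS and still accepts the self-signed certificate might look like this. The helper name fetch_e2e is made up, and the response it returns has not been verified against the setup in this post.

```shell
# Hypothetical helper: request /e2e-test on the given address,
# following redirects (--location) and skipping certificate
# verification (--insecure) for the self-signed default certificate.
fetch_e2e() {
  curl --insecure --location --silent --show-error "http://$1/e2e-test"
}

# Usage (requires the running Minikube cluster):
#   fetch_e2e 192.168.99.100
```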

We can check how nginx configures the application routing rules:

    $ kubectl get pods -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    ...
    nginx-ingress-controller-8566746984-9bxbh   1/1     Running   0          7h
    ...

    $ kubectl exec -it \
    > -n kube-system nginx-ingress-controller-8566746984-9bxbh \
    > cat /etc/nginx/nginx.conf
    # Configuration checksum: 11152342504644643882
    # setup custom paths that do not require root access
    pid /tmp/nginx.pid;
    daemon off;
    worker_processes 2;
    ...

Reference: Getting Started with Kubernetes Ingress-Nginx on Minikube

    $ minikube dashboard
    Opening http://127.0.0.1:50198/api/v1/namespaces/kube-system/services/http:kubernetes-dashboard:/proxy/ in your default browser...

Please check out Docker & Kubernetes : Nginx Ingress Controller for Dashboard service on Minikube.

