Docker / Kubernetes & Terraform on Mac

Kubernetes (K8s) is an open-source workload scheduler focused on containerized applications. We will run a Kubernetes cluster on our local machine using the following tools:

- Homebrew: a package manager for the Mac.

- Docker for Mac: Docker is used to build, manage, and run our containers. It lets us build the container images that will run in Kubernetes Pods.

- Minikube: a tool that runs a single-node Kubernetes cluster on our machine, with some features that make it well suited to local development. By default, Minikube runs the cluster inside a VirtualBox virtual machine. It can use other virtualization tools as well, but those require extra configuration.

- VirtualBox: a general-purpose tool for running virtual machines. We can use it to run Ubuntu, Windows, etc. inside our macOS host.

- kubectl: a command-line application that lets us interact with our Minikube Kubernetes cluster. Each kubectl command maps to calls against the Kubernetes API, which we use to manage Kubernetes resources (often described in YAML files). kubectl is like any other application on our Mac: it simply sends HTTP requests to the Kubernetes API server running on the cluster.
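Because kubectl is an HTTP client for the API server, we can ask it for the raw JSON behind a command. The sketch below assumes a running Minikube cluster. `core_api_path` is a hypothetical helper that builds the REST path for a namespaced resource in the core ("v1") API group. `kubectl get --raw` and the `-v` verbosity flag are standard kubectl options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the REST path that the API server exposes
# for a namespaced resource in the core ("v1") API group, e.g. pods.
core_api_path() {
  local namespace="$1" resource="$2"
  echo "/api/v1/namespaces/${namespace}/${resource}"
}

# With a running cluster, `kubectl get pods` is roughly a GET on this path;
# --raw prints the JSON returned by the API server:
#   kubectl get --raw "$(core_api_path default pods)"
# Raising the log verbosity makes kubectl print the HTTP requests it sends:
#   kubectl get pods -v=6
```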

This page is incomplete: the kubectl + Terraform + AWS part is not done yet.

Creating the AWS load balancer failed.

Open question: should the Terraform provider be AWS or Kubernetes?

The only prerequisite for this guide is having Homebrew installed. We'll also need Homebrew Cask, which we can add after Homebrew by running brew tap caskroom/cask in the Terminal:

    $ brew tap caskroom/cask
    Updating Homebrew...
    ==> Auto-updated Homebrew!
    Updated 1 tap (homebrew/core).
    ==> New Formulae
    pagmo
    ==> Updated Formulae
    bitwarden-cli checkbashisms git-ftp jsonrpc-glib mmseqs2 phpunit swiftformat v8 bzt curl iso-codes kakoune mongodb shibboleth-sp template-glib webpack caf gauge jhipster libdazzle php-cs-fixer sphinx-doc topgrade xml-tooling-c
    ==> Tapping homebrew/cask
    Cloning into '/usr/local/Homebrew/Library/Taps/homebrew/homebrew-cask'...
    remote: Counting objects: 4144, done.
    remote: Compressing objects: 100% (4129/4129), done.
    remote: Total 4144 (delta 28), reused 612 (delta 12), pack-reused 0
    Receiving objects: 100% (4144/4144), 1.30 MiB | 779.00 KiB/s, done.
    Resolving deltas: 100% (28/28), done.
    Tapped 1 command and 4046 casks (4,153 files, 4.1MB).

Install Docker for Mac.

Install VirtualBox for Mac using Homebrew by running brew cask install virtualbox in the Terminal. VirtualBox lets us run virtual machines on our Mac (like running Windows inside macOS, except that here the virtual machine hosts a Kubernetes cluster):

    $ brew cask install virtualbox
    ==> Caveats
    To install and/or use virtualbox you may need to enable their kernel extension in
    System Preferences → Security & Privacy → General
    For more information refer to vendor documentation or the Apple Technical Note:
    https://developer.apple.com/library/content/technotes/tn2459/_index.html
    ==> Satisfying dependencies
    ==> Downloading https://download.virtualbox.org/virtualbox/5.2.18/VirtualBox-5.2.18-124319-OSX.dmg
    Already downloaded: /Users/kihyuckhong/Library/Caches/Homebrew/downloads/4615b4fafa6c94c2f112089c0a964e613b0f6113b016ea526ccdb40cfc9f20de--VirtualBox-5.2.18-124319-OSX.dmg
    ==> Verifying checksum for Cask virtualbox
    ==> Installing Cask virtualbox
    ==> Running installer for virtualbox; your password may be necessary.
    ==> Package installers may write to any location; options such as --appdir are ignored.
    installer: Package name is Oracle VM VirtualBox
    installer: Installing at base path /
    installer: The install was successful.
    virtualbox was successfully installed!
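The caskroom/cask tap and the brew cask subcommand used above come from an older Homebrew. Current Homebrew releases have removed brew cask in favor of brew install --cask and no longer need the tap step. Here is a sketch that picks whichever syntax the local Homebrew supports, assuming brew is on the PATH. The helper name `install_cask` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install a Homebrew cask with whichever syntax the
# local Homebrew supports. Newer Homebrew: `brew install --cask NAME`;
# older Homebrew (as used in this guide): `brew cask install NAME`.
install_cask() {
  local cask="$1"
  # Newer releases list a --cask option in `brew install --help`.
  if brew install --help 2>/dev/null | grep -q -- '--cask'; then
    brew install --cask "$cask"
  else
    brew cask install "$cask"
  fi
}

# Usage:
#   install_cask virtualbox
```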

Install kubectl for Mac. This is the command-line interface that lets us interact with Kubernetes. Run brew install kubectl in the Terminal:

    $ brew install kubectl
    ==> Downloading https://homebrew.bintray.com/bottles/kubernetes-cli-1.11.2.high_sierra.bottle.tar.gz
    ######################################################################## 100.0%
    ==> Pouring kubernetes-cli-1.11.2.high_sierra.bottle.tar.gz
    ==> Caveats
    Bash completion has been installed to:
      /usr/local/etc/bash_completion.d
    zsh completions have been installed to:
      /usr/local/share/zsh/site-functions
    ==> Summary
    /usr/local/Cellar/kubernetes-cli/1.11.2: 196 files, 53.7MB

Install Minikube by following the Installation > OSX instructions for the latest release (v0.28.2 at the time of writing). Run the following command in the Terminal:

    curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.28.2/minikube-darwin-amd64 && \
      chmod +x minikube && \
      sudo mv minikube /usr/local/bin/

Minikube will run a Kubernetes cluster with a single node.
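Before starting the cluster, it can help to confirm that every tool installed so far is on the PATH. Here is a minimal sketch. `check_tools` is a hypothetical helper, and `VBoxManage` is assumed to be the command-line tool that the VirtualBox package installs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given commands are on the PATH.
# Returns 0 only if every command was found, 1 otherwise.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool ($(command -v "$tool"))"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the Mac set up in this guide:
#   check_tools brew docker VBoxManage kubectl minikube
```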

Now everything should be in place. Start our Minikube cluster with minikube start, which might take a few minutes:

    $ minikube start
    Starting local Kubernetes v1.10.0 cluster...
    Starting VM...
    Downloading Minikube ISO
     160.27 MB / 160.27 MB [============================================] 100.00% 0s
    Getting VM IP address...
    Moving files into cluster...
    Downloading kubeadm v1.10.0
    Downloading kubelet v1.10.0
    Finished Downloading kubelet v1.10.0
    Finished Downloading kubeadm v1.10.0
    Setting up certs...
    Connecting to cluster...
    Setting up kubeconfig...
    Starting cluster components...
    Kubectl is now configured to use the cluster.
    Loading cached images from config file.

Then run kubectl api-versions. If we see a list of versions, everything's working!

    $ kubectl api-versions
    admissionregistration.k8s.io/v1beta1
    apiextensions.k8s.io/v1beta1
    apiregistration.k8s.io/v1
    apiregistration.k8s.io/v1beta1
    apps/v1
    apps/v1beta1
    apps/v1beta2
    authentication.k8s.io/v1
    authentication.k8s.io/v1beta1
    authorization.k8s.io/v1
    authorization.k8s.io/v1beta1
    autoscaling/v1
    autoscaling/v2beta1
    batch/v1
    batch/v1beta1
    certificates.k8s.io/v1beta1
    events.k8s.io/v1beta1
    extensions/v1beta1
    networking.k8s.io/v1
    policy/v1beta1
    rbac.authorization.k8s.io/v1
    rbac.authorization.k8s.io/v1beta1
    storage.k8s.io/v1
    storage.k8s.io/v1beta1

We can also check that Kubernetes is running with the kubectl cluster-info command:

    $ kubectl cluster-info
    Kubernetes master is running at https://192.168.99.100:8443
    KubeDNS is running at https://192.168.99.100:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
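Because minikube start can take a few minutes, a script that runs right after it may contact the API server before the server is ready. Here is a sketch of a small retry loop that waits for kubectl cluster-info to succeed. The `retry` helper is hypothetical, and so are the attempt and delay values in the usage line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to N times, sleeping D seconds
# between failed attempts. Returns 0 on the first success, otherwise the
# exit status of the last attempt.
retry() {
  local attempts="$1" delay="$2" n=1 status
  shift 2
  while true; do
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      return "$status"
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage: give the cluster about a minute to start answering.
#   retry 12 5 kubectl cluster-info
```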

Next, install Terraform. If Homebrew is not installed yet, install it first:

- Press Command+Space, type Terminal, and press the Enter/Return key.

- Run the following in the Terminal app and press Enter/Return:

    ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)" < /dev/null 2> /dev/null

  If the screen prompts us for a password, enter the Mac user's password to continue. The password won't be displayed as we type, but the system still accepts it, so just type it and press Enter/Return. Then wait for the command to finish. (Newer Homebrew releases replace this Ruby-based installer with /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)".)

- Run brew install terraform:

    $ brew install terraform
    ==> Downloading https://homebrew.bintray.com/bottles/terraform-0.11.8.high_sierra.bottle.tar.gz
    ######################################################################## 100.0%
    ==> Pouring terraform-0.11.8.high_sierra.bottle.tar.gz
    /usr/local/Cellar/terraform/0.11.8: 6 files, 88.9MB

Done! We can now use terraform:

    $ which terraform
    /usr/local/bin/terraform

Terraform uses text files to describe infrastructure and to set variables. These text files are called Terraform configurations and end in .tf.

The easiest way to configure the Kubernetes provider is to rely on a kubeconfig file in the default location (~/.kube/config), which minikube start has already generated for us. This lets us leave the provider block completely empty:

provider "kubernetes" {}
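The empty provider block depends on the kubeconfig that minikube start wrote (its output reported "Setting up kubeconfig..."). Here is a sketch that confirms the file exists and that the active context is Minikube. `check_kubeconfig` is a hypothetical helper. kubectl config current-context and use-context are standard kubectl subcommands, and the provider is expected to use that file's current context.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a kubeconfig file exists
# (default: ~/.kube/config, the location `minikube start` writes to).
check_kubeconfig() {
  local cfg="${1:-$HOME/.kube/config}"
  if [ -f "$cfg" ]; then
    echo "kubeconfig found: $cfg"
  else
    echo "kubeconfig not found: $cfg (run 'minikube start' first)" >&2
    return 1
  fi
}

# Usage, then confirm the active context is the Minikube cluster:
#   check_kubeconfig && kubectl config current-context   # expected: minikube
#   kubectl config use-context minikube                  # switch if it is not
```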

The basic unit of any Kubernetes application is a Pod. A Pod consists of one or more containers that are scheduled together onto a cluster node based on available CPU and memory.

In this example, we create a Pod with a single container that runs the nginx web server and exposes port 80 (HTTP). In the next step, a Kubernetes Service exposes that port to end users through a load balancer.

    resource "kubernetes_pod" "nginx_server" {
      metadata {
        name = "nginx-example"
        labels {
          App = "nginx_server"
        }
      }
      spec {
        container {
          image = "nginx:1.7.8"
          name  = "example"
          port {
            container_port = 80
          }
        }
      }
    }

Note that we added labels. They let Kubernetes find all the pods (instances) it should forward traffic to on the exposed port. Unlike this example, a real production deployment commonly runs more than one instance of the application for high availability, and the labels are how Kubernetes finds all of those pods.
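Labels are what a Service selector (and kubectl's -l flag) matches on. Here is a sketch that assumes the Pod above has been created. `label_selector` is a hypothetical helper that joins key=value pairs with commas, which Kubernetes treats as a logical AND (every requirement must match).

```shell
#!/usr/bin/env bash
# Hypothetical helper: join key=value pairs into a label selector string.
# In Kubernetes, comma-separated requirements must all match (logical AND).
label_selector() {
  local IFS=,
  echo "$*"
}

# Usage with the Pod defined above:
#   kubectl get pods -l "$(label_selector App=nginx_server)" --show-labels
```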

A Kubernetes Service is the way to expose our application to users. With type "LoadBalancer" (on a platform that supports it), the Service provisions a load balancer. It keeps track of which pods sit behind that load balancer as new pods are launched and others die for any reason.

    resource "kubernetes_service" "nginx_server" {
      metadata {
        name = "nginx-example"
      }
      spec {
        selector {
          App = "${kubernetes_pod.nginx_server.metadata.0.labels.App}"
        }
        port {
          port        = 80
          target_port = 80
        }
        type = "LoadBalancer"
      }
    }
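On Minikube there is no cloud load balancer behind a LoadBalancer Service, so its external address may stay pending. As a hedged alternative, minikube service nginx-example --url prints a URL that reaches the Service through its node port, and newer Minikube releases also provide minikube tunnel for assigning an address. The `lb_address` helper below is hypothetical. It reads the Service status with kubectl's JSONPath output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the external hostname (or, failing that, the IP)
# recorded in a Service's load balancer status. An empty result means no
# address has been assigned yet.
lb_address() {
  local svc="$1" addr
  addr="$(kubectl get service "$svc" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"
  if [ -z "$addr" ]; then
    addr="$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
  fi
  echo "$addr"
}

# Usage after the Service has been created:
#   lb_address nginx-example
#   minikube service nginx-example --url   # NodePort URL on Minikube
```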

We may also want to add an output that exposes the load balancer's hostname to the user:

    output "lb_hostname" {
      value = "${kubernetes_service.nginx_server.load_balancer_ingress.0.hostname}"
    }

Save the contents to a file named main.tf, and make sure there are no other *.tf files in the directory, since Terraform loads all of them. Here is the complete configuration file, combining the provider, the Pod and Service resources, and the output:

    provider "kubernetes" {}

    resource "kubernetes_pod" "nginx_server" {
      metadata {
        name = "nginx-example"
        labels {
          App = "nginx_server"
        }
      }
      spec {
        container {
          image = "nginx:1.7.8"
          name  = "example"
          port {
            container_port = 80
          }
        }
      }
    }

    resource "kubernetes_service" "nginx_server" {
      metadata {
        name = "nginx-example"
      }
      spec {
        selector {
          App = "${kubernetes_pod.nginx_server.metadata.0.labels.App}"
        }
        port {
          port        = 80
          target_port = 80
        }
        type = "LoadBalancer"
      }
    }

    output "lb_hostname" {
      value = "${kubernetes_service.nginx_server.load_balancer_ingress.0.hostname}"
    }
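Because Terraform reads every *.tf file in the working directory, a stray file can add unexpected resources to the plan. Here is a small sketch that lists any .tf files besides main.tf. The `other_tf_files` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list *.tf files in a directory other than main.tf.
# Terraform loads all of them, so anything printed here would be merged
# into the configuration alongside main.tf.
other_tf_files() {
  local dir="${1:-.}" f
  for f in "$dir"/*.tf; do
    [ -e "$f" ] || continue                 # the glob matched nothing
    [ "$(basename "$f")" = "main.tf" ] && continue
    echo "$f"
  done
}

# Usage in the project directory:
#   other_tf_files .
```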

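Run terraform init once in this directory so Terraform can download the Kubernetes provider. Then terraform plan gives us an overview of the planned changes. In this case, we should see 2 resources (Pod + Service) being added: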

    $ terraform plan
    Refreshing Terraform state in-memory prior to plan...
    The refreshed state will be used to calculate this plan, but will not be
    persisted to local or remote state storage.

    ------------------------------------------------------------------------

    An execution plan has been generated and is shown below.
    Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      + kubernetes_pod.nginx_server
          id: <computed>
          metadata.#: "1"
          metadata.0.generation: <computed>
          metadata.0.labels.%: "1"
          metadata.0.labels.App: "nginx_server"
          metadata.0.name: "nginx-example"
          metadata.0.namespace: "default"
          metadata.0.resource_version: <computed>
          metadata.0.self_link: <computed>
          metadata.0.uid: <computed>
          spec.#: "1"
          spec.0.container.#: "1"
          spec.0.container.0.image: "nginx:1.7.8"
          spec.0.container.0.image_pull_policy: <computed>
          spec.0.container.0.name: "example"
          spec.0.container.0.port.#: "1"
          spec.0.container.0.port.0.container_port: "80"
          spec.0.container.0.port.0.protocol: "TCP"
          spec.0.container.0.resources.#: <computed>
          spec.0.container.0.stdin: "false"
          spec.0.container.0.stdin_once: "false"
          spec.0.container.0.termination_message_path: "/dev/termination-log"
          spec.0.container.0.tty: "false"
          spec.0.dns_policy: "ClusterFirst"
          spec.0.host_ipc: "false"
          spec.0.host_network: "false"
          spec.0.host_pid: "false"
          spec.0.hostname: <computed>
          spec.0.image_pull_secrets.#: <computed>
          spec.0.node_name: <computed>
          spec.0.restart_policy: "Always"
          spec.0.service_account_name: <computed>
          spec.0.termination_grace_period_seconds: "30"

      + kubernetes_service.nginx_server
          id: <computed>
          load_balancer_ingress.#: <computed>
          metadata.#: "1"
          metadata.0.generation: <computed>
          metadata.0.name: "nginx-example"
          metadata.0.namespace: "default"
          metadata.0.resource_version: <computed>
          metadata.0.self_link: <computed>
          metadata.0.uid: <computed>
          spec.#: "1"
          spec.0.cluster_ip: <computed>
          spec.0.port.#: "1"
          spec.0.port.0.node_port: <computed>
          spec.0.port.0.port: "80"
          spec.0.port.0.protocol: "TCP"
          spec.0.port.0.target_port: "80"
          spec.0.selector.%: "1"
          spec.0.selector.App: "nginx_server"
          spec.0.session_affinity: "None"
          spec.0.type: "LoadBalancer"

    Plan: 2 to add, 0 to change, 0 to destroy.

    ------------------------------------------------------------------------

    Note: You didn't specify an "-out" parameter to save this plan, so Terraform
    can't guarantee that exactly these actions will be performed if
    "terraform apply" is subsequently run.

This command becomes more useful as our infrastructure grows more complex, with more components depending on each other. It is especially helpful during updates.
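Following the hint in the plan output, we can save the plan with -out and then apply that saved plan, so that apply performs exactly the actions we reviewed. Here is a sketch of the full cycle, assuming it runs from the directory that holds main.tf and targets the Minikube cluster. The `tf_deploy` name and the `tfplan` file name are hypothetical. init, plan -out, apply, output, and destroy are standard Terraform subcommands. The final output step depends on the Service having received a load balancer hostname.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the Terraform workflow for this example.
# Stops at the first failing step.
tf_deploy() {
  terraform init || return 1              # download the kubernetes provider
  terraform plan -out=tfplan || return 1  # save the reviewed plan to a file
  terraform apply tfplan || return 1      # apply exactly that saved plan
  terraform output lb_hostname            # print the output defined in main.tf
}

# Usage:
#   tf_deploy
# Remove the Pod and Service afterwards:
#   terraform destroy
```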

Docker & K8s

- Docker install on Amazon Linux AMI

- Docker install on EC2 Ubuntu 14.04

- Docker container vs Virtual Machine

- Docker install on Ubuntu 14.04

- Docker Hello World Application

- Nginx image - share/copy files, Dockerfile

- Working with Docker images : brief introduction

- Docker image and container via docker commands (search, pull, run, ps, restart, attach, and rm)

- More on docker run command (docker run -it, docker run --rm, etc.)

- Docker Networks - Bridge Driver Network

- Docker Persistent Storage

- File sharing between host and container (docker run -d -p -v)

- Linking containers and volume for datastore

- Dockerfile - Build Docker images automatically I - FROM, MAINTAINER, and build context

- Dockerfile - Build Docker images automatically II - revisiting FROM, MAINTAINER, build context, and caching

- Dockerfile - Build Docker images automatically III - RUN

- Dockerfile - Build Docker images automatically IV - CMD

- Dockerfile - Build Docker images automatically V - WORKDIR, ENV, ADD, and ENTRYPOINT

- Docker - Apache Tomcat

- Docker - NodeJS

- Docker - NodeJS with hostname

- Docker Compose - NodeJS with MongoDB

- Docker - Prometheus and Grafana with Docker-compose

- Docker - StatsD/Graphite/Grafana

- Docker - Deploying a Java EE JBoss/WildFly Application on AWS Elastic Beanstalk Using Docker Containers

- Docker : NodeJS with GCP Kubernetes Engine

- Docker : Jenkins Multibranch Pipeline with Jenkinsfile and Github

- Docker : Jenkins Master and Slave

- Docker - ELK : ElasticSearch, Logstash, and Kibana

- Docker - ELK 7.6 : Elasticsearch on Centos 7

- Docker - ELK 7.6 : Filebeat on Centos 7

- Docker - ELK 7.6 : Logstash on Centos 7

- Docker - ELK 7.6 : Kibana on Centos 7

- Docker - ELK 7.6 : Elastic Stack with Docker Compose

- Docker - Deploy Elastic Cloud on Kubernetes (ECK) via Elasticsearch operator on minikube

- Docker - Deploy Elastic Stack via Helm on minikube

- Docker Compose - A gentle introduction with WordPress

- Docker Compose - MySQL

- MEAN Stack app on Docker containers : micro services

- MEAN Stack app on Docker containers : micro services via docker-compose

- Docker Compose - Hashicorp's Vault and Consul Part A (install vault, unsealing, static secrets, and policies)

- Docker Compose - Hashicorp's Vault and Consul Part B (EaaS, dynamic secrets, leases, and revocation)

- Docker Compose - Hashicorp's Vault and Consul Part C (Consul)

- Docker Compose with two containers - Flask REST API service container and an Apache server container

- Docker compose : Nginx reverse proxy with multiple containers

- Docker & Kubernetes : Envoy - Getting started

- Docker & Kubernetes : Envoy - Front Proxy

- Docker & Kubernetes : Ambassador - Envoy API Gateway on Kubernetes

- Docker Packer

- Docker Cheat Sheet

- Docker Q & A #1

- Kubernetes Q & A - Part I

- Kubernetes Q & A - Part II

- Docker - Run a React app in a docker

- Docker - Run a React app in a docker II (snapshot app with nginx)

- Docker - NodeJS and MySQL app with React in a docker

- Docker - Step by Step NodeJS and MySQL app with React - I

- Installing LAMP via puppet on Docker

- Docker install via Puppet

- Nginx Docker install via Ansible

- Apache Hadoop CDH 5.8 Install with QuickStarts Docker

- Docker - Deploying Flask app to ECS

- Docker Compose - Deploying WordPress to AWS

- Docker - WordPress Deploy to ECS with Docker-Compose (ECS-CLI EC2 type)

- Docker - WordPress Deploy to ECS with Docker-Compose (ECS-CLI Fargate type)

- Docker - ECS Fargate

- Docker - AWS ECS service discovery with Flask and Redis

- Docker & Kubernetes : minikube

- Docker & Kubernetes 2 : minikube Django with Postgres - persistent volume

- Docker & Kubernetes 3 : minikube Django with Redis and Celery

- Docker & Kubernetes 4 : Django with RDS via AWS Kops

- Docker & Kubernetes : Kops on AWS

- Docker & Kubernetes : Ingress controller on AWS with Kops

- Docker & Kubernetes : HashiCorp's Vault and Consul on minikube

- Docker & Kubernetes : HashiCorp's Vault and Consul - Auto-unseal using Transit Secrets Engine

- Docker & Kubernetes : Persistent Volumes & Persistent Volumes Claims - hostPath and annotations

- Docker & Kubernetes : Persistent Volumes - Dynamic volume provisioning

- Docker & Kubernetes : DaemonSet

- Docker & Kubernetes : Secrets

- Docker & Kubernetes : kubectl command

- Docker & Kubernetes : Assign a Kubernetes Pod to a particular node in a Kubernetes cluster

- Docker & Kubernetes : Configure a Pod to Use a ConfigMap

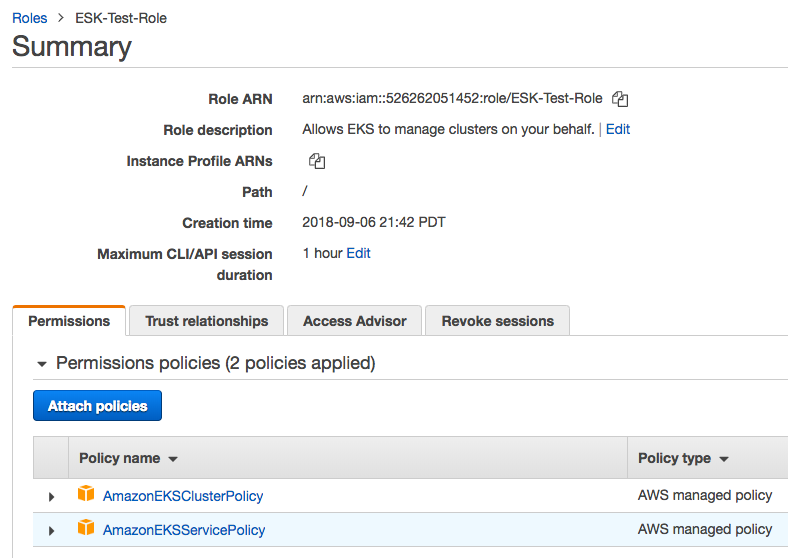

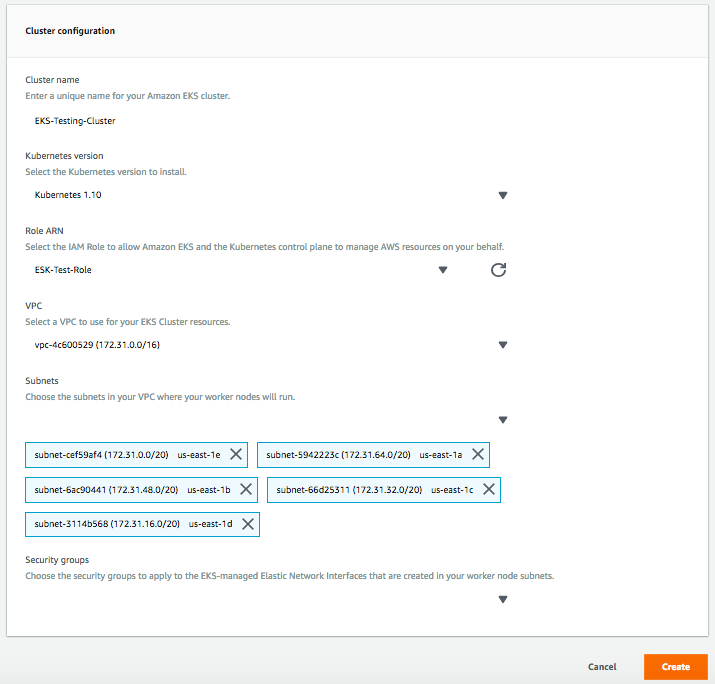

- AWS : EKS (Elastic Container Service for Kubernetes)

- Docker & Kubernetes : Run a React app in a minikube

- Docker & Kubernetes : Minikube install on AWS EC2

- Docker & Kubernetes : Cassandra with a StatefulSet

- Docker & Kubernetes : Terraform and AWS EKS

- Docker & Kubernetes : Pods and Service definitions

- Docker & Kubernetes : Service IP and the Service Type

- Docker & Kubernetes : Kubernetes DNS with Pods and Services

- Docker & Kubernetes : Headless service and discovering pods

- Docker & Kubernetes : Scaling and Updating application

- Docker & Kubernetes : Horizontal pod autoscaler on minikubes

- Docker & Kubernetes : From a monolithic app to micro services on GCP Kubernetes

- Docker & Kubernetes : Rolling updates

- Docker & Kubernetes : Deployments to GKE (Rolling update, Canary and Blue-green deployments)

- Docker & Kubernetes : Slack Chat Bot with NodeJS on GCP Kubernetes

- Docker & Kubernetes : Continuous Delivery with Jenkins Multibranch Pipeline for Dev, Canary, and Production Environments on GCP Kubernetes

- Docker & Kubernetes : NodePort vs LoadBalancer vs Ingress

- Docker & Kubernetes : MongoDB / MongoExpress on Minikube

- Docker & Kubernetes : Load Testing with Locust on GCP Kubernetes

- Docker & Kubernetes : MongoDB with StatefulSets on GCP Kubernetes Engine

- Docker & Kubernetes : Nginx Ingress Controller on Minikube

- Docker & Kubernetes : Setting up Ingress with NGINX Controller on Minikube (Mac)

- Docker & Kubernetes : Nginx Ingress Controller for Dashboard service on Minikube

- Docker & Kubernetes : Nginx Ingress Controller on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Ingress with AWS ALB Ingress Controller in EKS

- Docker & Kubernetes : Setting up a private cluster on GCP Kubernetes

- Docker & Kubernetes : Kubernetes Namespaces (default, kube-public, kube-system) and switching namespaces (kubens)

- Docker & Kubernetes : StatefulSets on minikube

- Docker & Kubernetes : RBAC

- Docker & Kubernetes Service Account, RBAC, and IAM

- Docker & Kubernetes - Kubernetes Service Account, RBAC, IAM with EKS ALB, Part 1

- Docker & Kubernetes : Helm Chart

- Docker & Kubernetes : My first Helm deploy

- Docker & Kubernetes : Readiness and Liveness Probes

- Docker & Kubernetes : Helm chart repository with Github pages

- Docker & Kubernetes : Deploying WordPress and MariaDB with Ingress to Minikube using Helm Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 2 Chart

- Docker & Kubernetes : Deploying WordPress and MariaDB to AWS using Helm 3 Chart

- Docker & Kubernetes : Helm Chart for Node/Express and MySQL with Ingress

- Docker & Kubernetes : Deploy Prometheus and Grafana using Helm and Prometheus Operator - Monitoring Kubernetes node resources out of the box

- Docker & Kubernetes : Deploy Prometheus and Grafana using kube-prometheus-stack Helm Chart

- Docker & Kubernetes : Istio (service mesh) sidecar proxy on GCP Kubernetes

- Docker & Kubernetes : Istio on EKS

- Docker & Kubernetes : Istio on Minikube with AWS EC2 for Bookinfo Application

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part I)

- Docker & Kubernetes : Deploying .NET Core app to Kubernetes Engine and configuring its traffic managed by Istio (Part II - Prometheus, Grafana, pin a service, split traffic, and inject faults)

- Docker & Kubernetes : Helm Package Manager with MySQL on GCP Kubernetes Engine

- Docker & Kubernetes : Deploying Memcached on Kubernetes Engine

- Docker & Kubernetes : EKS Control Plane (API server) Metrics with Prometheus

- Docker & Kubernetes : Spinnaker on EKS with Halyard

- Docker & Kubernetes : Continuous Delivery Pipelines with Spinnaker and Kubernetes Engine

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-dind (docker-in-docker)

- Docker & Kubernetes : Multi-node Local Kubernetes cluster : Kubeadm-kind (k8s-in-docker)

- Docker & Kubernetes : nodeSelector, nodeAffinity, taints/tolerations, pod affinity and anti-affinity - Assigning Pods to Nodes

- Docker & Kubernetes : Jenkins-X on EKS

- Docker & Kubernetes : ArgoCD App of Apps with Heml on Kubernetes

- Docker & Kubernetes : ArgoCD on Kubernetes cluster

- Docker & Kubernetes : GitOps with ArgoCD for Continuous Delivery to Kubernetes clusters (minikube) - guestbook

Ph.D. / Golden Gate Ave, San Francisco / Seoul National Univ / Carnegie Mellon / UC Berkeley / DevOps / Deep Learning / Visualization